Profile: Lightbits Labs claims to have invented NVMe/TCP. This is a big claim, but the early-stage startup has demonstrable product technology for running fast-access NVMe-over-fabrics (NVMe-oF) across standard data centre LANs using TCP/IP.

Lightbits Labs was founded in 2015 by six Israeli engineers. The company is 50-strong and runs two offices in Israel and a third in Silicon Valley. The company has revealed no funding details – it is possibly owner-financed and/or has angel investment.

It does not yet have a deliverable product but we anticipate it will come to market in 2019 with a hardware accelerator using proprietary VLSI or FPGA technology. The accelerator will work with commodity servers, Lightbits says, but we must wait for the product reveal to see how that pans out in practice. It seems reasonable to infer that a funding round is on the cards to cover the costs of the initial business infrastructure build-out.

Lightbits Labs faces considerable competition for an early-stage startup. It competes with other attempts to run NVMe-oF across standard Ethernet, such as Solarflare with its kernel bypass technology and Toshiba with its KumoScale offering.

These companies are, in turn, up against NetApp, IBM and Pure Storage, which are developing competing technology that enables NVMe over standard Fibre Channel.

Lightbits’ rivals are established and well-capitalised. For example, Solarflare, the smallest direct competitor, is 17 years old and has taken in $305m in an improbable 24 funding rounds.

Lightbits, with a startup’s nimbleness and single-minded focus, may be able to develop its tech faster. And of course we have yet to see what product or price differentiation the company may have to offer.

NVMe over TCP

Let’s explore in some more detail the problem that Lightbits is trying to solve.

NVMe-oF radically reduces access latency to block data in a shared array. But to date, expensive data centre-class Ethernet or InfiniBand has been required for network transport.

Running NVMe-oF over a standard Ethernet LAN using TCP/IP would reduce its deployment and acquisition costs.

NVMe is the protocol used to connect direct-attached storage, typically SSDs, to a host server’s PCIe bus. This is much faster, at say 120-150µs, than using a drive access protocol such as SATA or SAS. An NVMe explainer can be found here.

NVMe over Fabrics (NVMe-oF) extends the NVMe protocol to shared, external arrays using an RDMA-capable network transport such as Ethernet, with RoCE (which needs a lossless network) or iWARP, and InfiniBand.

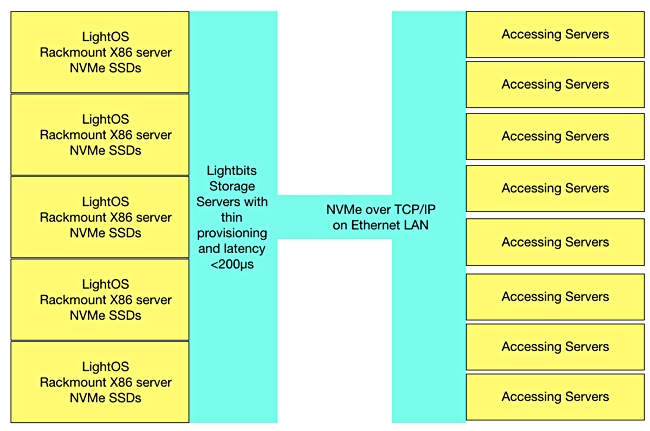

Both are expensive and fast, with remote direct memory access speeds; think 100-120µs latency. Running NVMe-oF across a TCP/IP transport adds 80-100µs of latency compared to RoCE and iWARP, but the net result is much faster than iSCSI or Fibre Channel access to an external array; think <200µs compared to >100,000µs.
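To make the latency comparison concrete, here is a small back-of-the-envelope calculation using only the figures quoted above. The variable and function names are illustrative, and the result is a rough estimate rather than a measurement: summing the quoted ranges gives roughly 180-220µs, in the neighbourhood of the 200µs figure.

```python
# Latency figures quoted in the article, in microseconds (low, high).
RDMA_LATENCY_US = (100, 120)   # NVMe-oF over an RDMA transport
TCP_OVERHEAD_US = (80, 100)    # extra latency attributed to TCP/IP
LEGACY_LATENCY_US = 100_000    # the article's ">100,000µs" iSCSI/Fibre Channel figure


def add_ranges(a, b):
    """Add two (low, high) ranges element-wise."""
    return (a[0] + b[0], a[1] + b[1])


# Estimated NVMe/TCP latency range: RDMA baseline plus TCP overhead.
nvme_tcp_us = add_ranges(RDMA_LATENCY_US, TCP_OVERHEAD_US)

# Conservative speed-up: legacy figure divided by the worst-case NVMe/TCP estimate.
speedup_floor = LEGACY_LATENCY_US / nvme_tcp_us[1]

print(f"NVMe/TCP estimate: {nvme_tcp_us[0]}-{nvme_tcp_us[1]} µs")
print(f"Roughly {speedup_floor:.0f}x faster than the legacy figure, at minimum")
```

Even on the pessimistic end of the range, the quoted numbers imply a gap of more than two orders of magnitude.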

Lightbits Labs system

Lightbits maps the many parallel NVMe I/O queues to many parallel TCP/IP connections. It envisages NVMe over TCP as the NVMe migration path for iSCSI.
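The queue-to-connection idea can be sketched in a few lines. This is a toy model, not Lightbits' code: it assumes a one-to-one mapping between queues and connections, and `TcpConnection` and `QueueMapper` are hypothetical names that stand in for real sockets and driver structures.

```python
from dataclasses import dataclass, field


@dataclass
class TcpConnection:
    """Stand-in for one TCP connection; records what was sent, in order."""
    conn_id: int
    sent: list = field(default_factory=list)

    def send(self, command: str) -> None:
        self.sent.append(command)


class QueueMapper:
    """Hypothetical one-to-one mapping of NVMe I/O queues to TCP connections."""

    def __init__(self, num_queues: int) -> None:
        self.connections = {
            qid: TcpConnection(conn_id=qid) for qid in range(num_queues)
        }

    def submit(self, queue_id: int, command: str) -> None:
        # Commands from one queue always travel on that queue's connection,
        # so per-queue ordering is kept and queues do not wait on each other.
        self.connections[queue_id].send(command)


mapper = QueueMapper(num_queues=4)
for i in range(8):
    mapper.submit(queue_id=i % 4, command=f"read-{i}")

for qid, conn in mapper.connections.items():
    print(qid, conn.sent)
```

The point of the design is that each queue keeps its own independent stream, so the parallelism NVMe offers on a local PCIe bus is preserved across the network.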

The Lightbits pitch is that you don’t need a separate Fibre Channel SAN access infrastructure. Instead, consolidate storage networking with everyday data centre Ethernet LAN infrastructure, and gain the cost and manageability benefits. Carry on using your everyday Ethernet NICs, for example.

In its scheme, storage-accessing servers direct their requests to an external array of commodity rack servers which run LightOS and are fitted with NVMe SSDs. The SSD capacity is thinly provisioned and managed as a single entity, with garbage collection, for example, run as a system-level task and not at drive level. Random write accesses are managed to minimally affect SSD endurance.
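Thin provisioning, in general terms, means physical capacity is only consumed when data is actually written. The sketch below illustrates that general technique with a hypothetical `ThinPool` class; it says nothing about how LightOS implements it, which Lightbits has not disclosed.

```python
class ThinPool:
    """Toy thin-provisioned pool: physical blocks are allocated on first write."""

    def __init__(self, physical_blocks: int) -> None:
        self.free = list(range(physical_blocks))
        self.mapping = {}  # (volume, logical_block) -> physical_block

    def write(self, volume: str, logical_block: int) -> int:
        key = (volume, logical_block)
        if key not in self.mapping:
            if not self.free:
                raise RuntimeError("pool exhausted")
            # First write to this logical block: take a physical block now.
            self.mapping[key] = self.free.pop(0)
        return self.mapping[key]

    def used(self) -> int:
        return len(self.mapping)


pool = ThinPool(physical_blocks=100)
# Volumes can address far more logical blocks than the pool physically holds;
# only blocks that are actually written consume capacity.
pool.write("vol-a", 5_000)
pool.write("vol-b", 12)
pool.write("vol-a", 5_000)  # rewrite of an existing block: no new allocation
print(pool.used(), "of 100 physical blocks in use")
```

Managing the mapping in one place, across all drives, is what lets tasks such as garbage collection run at system level rather than per drive.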

Lightbits says the NVMe/TCP Linux drivers are a natural match for the Linux kernel and use the standard Linux networking stack and NICs without modification. The technology can scale to tens of thousands of nodes and is suitable for hyperscale data centres, the company says.

It provides more information in a white paper.

Hardware acceleration

Lightbits pushes access consistency with its technology, claiming tail latency reductions of up to 50 per cent compared to direct-attached storage. It uses unspecified hardware acceleration and system-level management to double SSD utilisation.

The white paper notes: “The LightOS architecture tightly couples many algorithms together in order to optimise performance and flash utilisation. This includes tightly coupling the data protection algorithms with a hardware-accelerated solution for data services.”

And it summarises: “Lightbits incorporates high-performance data protection schemes that work together with the hardware-accelerated solution for data services and read and write algorithms.”

It adds: “a hardware-accelerated solution for data services that operates at full wire-speed with no performance impact.”

Hardware acceleration in the data path seems important here, and we note co-founder Ziv Tishel is director of VLSI/FPGA activities at Lightbits. This suggests that LightOS servers are fitted with Lightbits’ proprietary VLSI or FPGA technology.