The amount of data to be stored, processed and protected grows incessantly. That's fuelling product growth and partnerships between suppliers as they jostle to take advantage of the benign storage climate.

Cisco HyperFlex and Nexenta target VDI

Cisco is using Nexenta's Virtual Storage Appliance (VSA) to provide NFS and SMB file services on its HyperFlex hyper-converged system. This software-based NAS features inline data reduction, snapshots, data integrity, security, and domain control, with management through a VMware vCenter plugin.

The two say HyperFlex plus NexentaStor VSA is good for VDI, with support for home directories, user profiles, and home shares.

The combination obviates the need for HyperFlex customers to add a separate NAS filer to support VDI.

The two also suggest the combined system suits remote office/branch office (ROBO) and backup/disaster recovery (BDR) deployments, and can support legacy enterprise, new cloud-native, and 5G-driven telco cloud apps, partly because it eliminates the need for separate file servers.

Cray meets good weather in South Africa

A Cray XC30-AC supercomputer with a Lustre file system, used by the South African Weather Service (SAWS) since 2014, has been upgraded.

SAWS has doubled its compute capacity, tripled its storage capacity and more than doubled its bandwidth.

The upgrade involved:

- Growing from 88 to 172 Ivy Bridge compute nodes

- Upgrading processors from Ivy Bridge 2695v2 to Ivy Bridge 2697v2

- Expanding the system from 1.5 cabinets to 3 cabinets (48 blades)

- Replacing Sonexion 1600 arrays, with 0.52PB capacity and 12GB/sec bandwidth, with ClusterStor L300 storage offering 1.8PB capacity and 27GB/sec bandwidth.
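As a quick sanity check on the "doubled" and "tripled" claims, the before-and-after figures in the list can be compared directly. This is a minimal Python sketch; the figures are the ones quoted above and the two-decimal rounding is our choice.

```python
# Before/after figures for the SAWS Cray upgrade, as quoted in the list above
upgrade = {
    "compute nodes": (88, 172),
    "storage capacity (PB)": (0.52, 1.8),
    "storage bandwidth (GB/sec)": (12, 27),
}


def growth_factors(figures):
    """Return the after/before ratio for each metric, rounded to two places."""
    return {name: round(after / before, 2) for name, (before, after) in figures.items()}


for name, factor in growth_factors(upgrade).items():
    print(f"{name}: {factor}x")
```

Node count rises about 1.95x, capacity about 3.46x and bandwidth 2.25x, so "tripled" storage capacity is, if anything, a slight understatement.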

Ilene Carpenter, earth sciences segment director at Cray, said: “With the Cray system upgrades in place, SAWS has the storage and compute resources needed to handle an increasing number of hydro-meteorological observations and to run higher fidelity weather and climate models.”

IDC’s Global StorageSphere

IDC's inaugural Global StorageSphere forecast, a variation on the old Digital Universe reports, predicts the amount of stored data is going to grow. Well, there's a thing.

The installed base of storage capacity worldwide will more than double to 11.7 zettabytes (ZB) over the 2018-2023 forecast period. IDC says it measures the size of the worldwide installed base of storage capacity across six types of storage media, but doesn’t publicly identify the six media types.

We asked IDC, and spokesperson Mike Shirer told us: "The six types of storage media in the report are: HDD, Tape, Optical, SSD, and NVM-NAND / NVM-other. Share is dominated by HDD, as you might expect." Indeed.

The three main findings, according to IDC, are:

- Only 1-2 per cent of the data created or replicated each year is stored for any period of time; the rest is either immediately analysed or delivered for consumption and is never saved to be accessed again. Suppliers added more than 700EB of capacity across all media types to the worldwide installed base in 2018, generating over $88bn in revenue.

- The installed base of enterprise storage capacity is outpacing consumer storage capacity, largely because consumers increasingly rely on enterprises, especially cloud offerings, to manage their data. By 2023, IDC estimates that enterprises will be responsible for managing more than three quarters of the installed base of storage capacity.

- The installed base of storage capacity is expanding across all regions, but faster in regions where cloud datacentres exist. The installed base of storage capacity is largest in the Asia/Pacific region and will expand to 39.5 per cent of the Global StorageSphere in 2023.

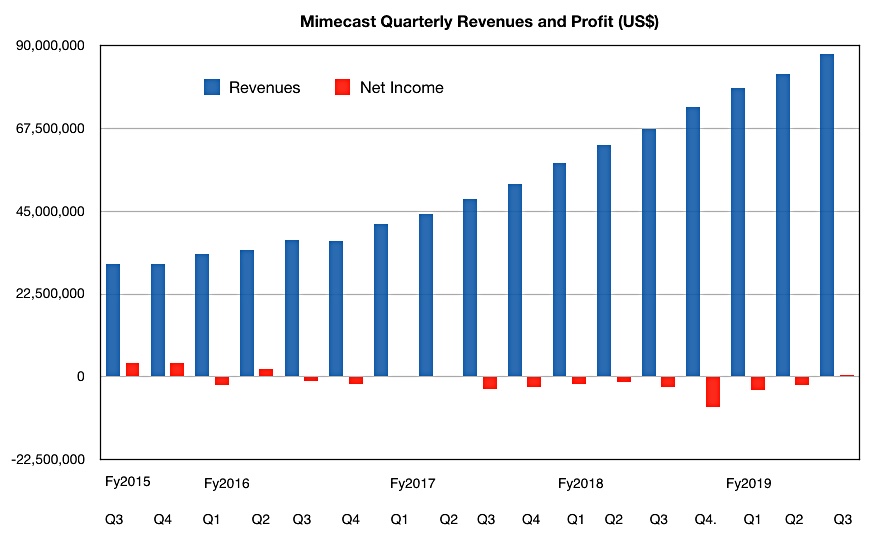

Mimecast’s textbook example of business growth

Mail archiver and protector Mimecast delivered yet another quarter of record growth.

Revenues for its third fiscal 2019 quarter were $87.6m, up 30 per cent year-on-year and giving a $350m annual run rate.
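The run-rate figure is simply the quarter annualised. A minimal Python sketch of that arithmetic follows; the year-ago figure it backs out is only approximate, because the 30 per cent growth rate is itself rounded.

```python
def annual_run_rate(quarterly_revenue_m):
    """Annualise a single quarter's revenue (in $m) by multiplying by four."""
    return quarterly_revenue_m * 4


def implied_prior_year(revenue_m, growth_pct):
    """Back out the year-ago figure implied by a year-on-year growth percentage."""
    return revenue_m / (1 + growth_pct / 100)


q3_revenue = 87.6  # $m, Mimecast's third fiscal 2019 quarter
print(f"Annual run rate: ${annual_run_rate(q3_revenue):.1f}m")
print(f"Implied year-ago quarter: ${implied_prior_year(q3_revenue, 30):.1f}m")
```

This gives a $350.4m run rate and an implied year-ago quarter of roughly $67.4m.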

There was a $500K profit, which is unusual as Mimecast generally runs at a quarterly loss to fuel its growth.

Mimecast closed a record number of six-figure transactions and a seven-figure deal, the largest in the company's history, with a financial services customer operating across the Middle East and Africa.

A thousand new customers were recruited in the quarter, more than last quarter's 900 but fewer than the year-ago quarter's 1,200. The total customer count is 33,000.

Revenue guidance for the fourth fiscal 2019 quarter is $90.6m to $91.5m. Full fiscal 2019 revenue is expected to be in the range of $338.7m to $339.7m, and full fiscal 2020 revenue in the range of $413m to $427m.

Mimecast bought Simply Migrate last month, acquiring software that migrates mail archives into Mimecast's Cloud Archive vaults. The price was not disclosed.

Seagate and the EMEA Datasphere

Another IDC report, commissioned by Seagate, looks at the data produced (not necessarily stored) in the EMEA region. It predicts the EMEA Datasphere will grow from 9.5ZB in 2018 to 48.3ZB in 2025, a growth rate slightly lower than the global average (a CAGR of 26.1 per cent vs 27.2 per cent).
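The quoted CAGR can be checked from the two endpoint figures. This is a minimal Python sketch using the standard compound-annual-growth formula, (end / start)^(1 / years) - 1, applied to the EMEA numbers above.

```python
def cagr(start, end, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end / start) ** (1 / years) - 1


emea = cagr(9.5, 48.3, 2025 - 2018)  # EMEA Datasphere, ZB, 2018 to 2025
print(f"EMEA Datasphere CAGR: {emea:.2%}")
```

This prints about 26.15 per cent. That is consistent with IDC's 26.1 per cent, given that the ZB endpoints are themselves rounded.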

The main findings:

- AI, IoT and entertainment streaming services rank among the key drivers of data growth,

- Streaming data is expected to grow by 7.1 times from 2015 to 2025; think YouTube, Netflix and Spotify,

- AI data is growing at a 68 per cent CAGR, while IoT-produced data will grow from 2 per cent to a 19 per cent share of the EMEA datasphere by 2025,

- The percentage of data created at the edge will nearly double, from 11 per cent to 21 per cent of the region’s datasphere,

- China’s Datasphere is currently smaller than the EMEA Datasphere (7.6ZB vs 9.5ZB in 2018), but by 2025 it will have overtaken Europe to emerge as the largest Datasphere in the world, with 48.6ZB.

Prissily, Seagate and IDC advise that businesses need to see themselves as responsible stewards of data, taking proactive steps to protect consumer data while reaping the benefits of a global, cloud-based approach to data capitalisation, whatever data capitalisation means. Yeah, yeah.

Veeam did well – again

Backup and recovery supplier Veeam, which has had a succession of record-breaking quarters, has had another one, capping a near-$1bn year. Total global bookings in 2018 were $963m, 16 per cent higher than 2017's $827m.

The global customer count is over 330,000. At the end of 2017 it was 282,000, and Veeam says it has been acquiring new customers at a rate of 4,000 a month. More than 9,900 new EMEA customers were acquired in the fourth 2018 quarter; mind-boggling numbers.
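Both Veeam headline numbers can be reproduced from the figures quoted. The Python sketch below treats "over 330,000" customers as a floor, so the monthly rate it derives is a minimum rather than an exact figure.

```python
def yoy_growth(previous, current):
    """Year-on-year growth as a fraction, e.g. 0.16 for 16 per cent."""
    return current / previous - 1


bookings_growth = yoy_growth(827, 963)  # $m, 2017 vs 2018 bookings

# Customer count rose from 282,000 (end 2017) to "over 330,000" (end 2018);
# using 330,000 as a floor gives a minimum average monthly addition rate.
monthly_adds = (330_000 - 282_000) / 12

print(f"Bookings growth: {bookings_growth:.1%}")
print(f"Customer adds per month: {monthly_adds:,.0f}")
```

This gives 16.4 per cent bookings growth and at least 4,000 new customers a month, matching Veeam's stated rate.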

Cloud has been the fastest-growing segment of Veeam's business for the past eight quarters. Veeam reported 46 per cent y-o-y growth in its overall cloud business for 2018. The Veeam Cloud & Service Provider (VCSP) segment grew 23 per cent y-o-y, and there are 21,700 Cloud Service Providers, 3,800 of them licensed to provide Cloud Backup & DRaaS using Veeam Cloud Connect.

Ratmir Timashev, co-founder and EVP of Sales & Marketing at Veeam, said: “We have solidified our position as the dominant leader in Intelligent Data Management and one of the largest private software companies in the world.”

Veeam recently took in a $500m investment to help drive its growth. Timashev said: “We are leading the industry by empowering businesses to do more with their data backups, providing new ways for organisations to generate value from their data, while solving other business opportunities.”

Blocks & Files thinks acquisitions are coming as Veeam strengthens its data management capabilities to compete more with Actifio, Cohesity and Rubrik. We reckon it will hit the $1bn mark by mid-year.

WANdisco gets cash and stronger AWS partnership

Replicator WANdisco has raised $17.5m through a share issue taken up by existing shareholders:

- Merrill Lynch International

- Ross Creek Capital Management, LLC

- Global Frontier Partners, LP

- Davis Partnership, LP

- Acacia Institutional Partners LP, Conservation Fund LP and Conservation Master Fund (Offshore), LP

CEO and chairman Dave Richards said the cash will be used "to leverage a number of significant opportunities to expand our existing partner relationships."

WANdisco has received Advanced Technology Partner status with Amazon Web Services, the highest tier for AWS Technology Partners. Richards said this new partner status: “significantly expands our sales channel opportunities.”

Shorts

Amazon Elastic File System Infrequent Access (EFS IA) is now generally available. It is a new storage class for Amazon EFS designed for less frequently accessed files, enabling customers to reduce storage costs by up to 85 per cent compared to the EFS Standard storage class. With EFS IA, Amazon EFS customers simply enable Lifecycle Management, and any file not accessed for 30 days is automatically moved to the EFS IA storage class.
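The lifecycle rule is simple enough to model in a few lines. The Python sketch below is a hypothetical local illustration of the 30-day rule, not the AWS API; in the real service the policy is set through EFS Lifecycle Management (for example the `PutLifecycleConfiguration` call with a `TransitionToIA` policy). The file names and dates are invented, and treating exactly 30 days as "old enough" is our assumption.

```python
from datetime import datetime, timedelta

# Hypothetical model of the EFS lifecycle rule: files not accessed for
# 30 days move to Infrequent Access (IA). Not the AWS API; local sketch only.
TRANSITION_AFTER = timedelta(days=30)  # assumption: exactly 30 days triggers the move


def storage_class(last_accessed, now):
    """Return the class a file would sit in under a 30-day lifecycle policy."""
    return "IA" if now - last_accessed >= TRANSITION_AFTER else "Standard"


now = datetime(2019, 2, 15)
files = {  # hypothetical files and last-access times
    "report.docx": datetime(2019, 2, 10),  # read five days ago
    "archive.tar": datetime(2018, 12, 1),  # untouched for 76 days
}
for name, last in files.items():
    print(name, storage_class(last, now))
```

Only the 76-day-old file lands in IA. The "up to 85 per cent" saving applies to the share of data that ends up there.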

A major US defence contractor is implementing an Axellio FabricXpress system using Napatech's FPGA-based SmartNIC software and hardware. The Axellio/Napatech 100G capture and playback appliance will be used as a test and measurement system, capturing 100 gigabits per second of data to disk to allow precise replay. Napatech provides high-speed lossless data capture and replay.

Cisco's second fiscal 2019 quarter earnings call revealed this HyperFlex HCI snippet. CFO Kelly Kramer said: "We had a great Q2, we executed well with strong top-line growth and profitability.

“Total product revenue was up 9 per cent to $9.3bn, Infrastructure platform grew 6 per cent. Switching saw double-digit growth in the campus. … Wireless also had double-digit growth … Routing declined due to weakness in service provider. We also saw decline in data centre servers partially offset by strength in hyperconverged.” So HyperFlex revenues grew though UCS servers generally did not.

Disk array supplier INFINIDAT has been recognised as a February 2019 Gartner Peer Insights Customers' Choice for General-Purpose Disk Arrays, based on reviews and ratings from end-users. So too have Dell EMC, HPE and NetApp.

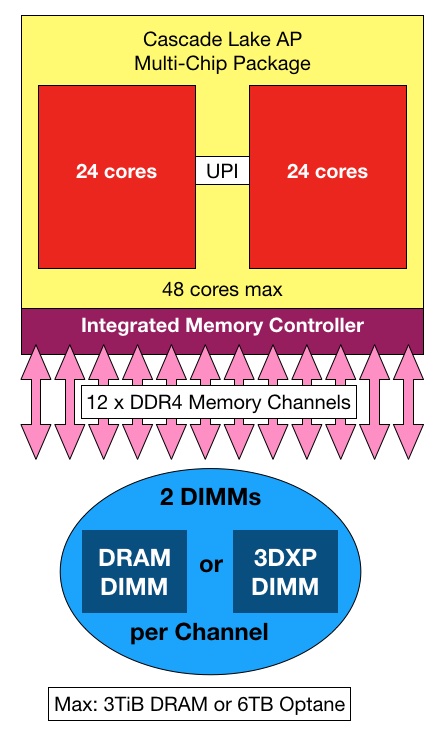

Intel will build a new semiconductor fab in Hillsboro, Oregon, but hasn't said what kind of chips it will make: CPUs, NAND or Optane. The new fab will be a third phase of the existing D1X manufacturing complex, and its exact size and timing haven't been specified.

Mail manager and archiver Mimecast’s third fiscal 2019 quarter revenues were up 30 per cent y-o-y to $87.6m. It added 1,000 new customers in the quarter to reach a total of 33,300; impressive. A total of 41 per cent of Mimecast customers used it in conjunction with Microsoft Office 365 during the quarter compared to 29 per cent a year ago.

Scale-out filer startup Qumulo said that, in FY19:

- Its partner ecosystem, including HPE, was responsible for 100 per cent of bookings and the source of about 40 per cent of its deals.

- More than 65 per cent of new business was partner-initiated

- It saw year-over-year triple-digit growth with HPE, with new customers across enterprise, commercial, State/Local government and Education (SLED), Federal government and small/medium business (SMB).

People

Rafe Brown has been appointed as Mimecast's CFO. He was previously CFO of SevOne and, before that, CFO of Pegasystems.

Leonard Iventosch has joined scale-out filer Qumulo as its Chief Channel Evangelist, coming from HPE’s Nimble unit, where he was VP for world-wide channels.