Start reading already 🙂

Qumulo updates software

Scale-out filer supplier Qumulo has streamed out a set of software updates over the past quarter:

- Alternate Data Streams – Increase data access across both Windows and Mac environments by enabling files to receive more than one stream of data.

- Audit Tracking – View who has accessed, modified, or deleted files.

- Enhanced Replication.

- Mac OS X Finder Enhancements – Display performance is now 10 times faster than SMB display performance. File management is improved: “._hidden” files are no longer created and “finder tags” are retained when files are copied.

- IO Assurance – Balance performance during a drive rebuild.

- C5n clusters now available in AWS.

- Enhanced Real-Time Analytics – Visibility into capacity usage and trends for snapshots, metadata, and data independently.

- Refreshed Management Interface.

- Upgrade Path Options – Move between bi-weekly and quarterly upgrade paths flexibly.

Seagate LaCie Rugged SSDs

Seagate has launched a set of LaCie external SSDs for entertainment and media professionals.

The handheld Rugged SSD has a Seagate FireCuda NVMe SSD inside, plus Seagate Secure self-encrypting technology with password protection. Users will get USB 3.1 Gen 2 speeds of up to 950MB/sec.

The palm-sized and faster Rugged SSD Pro is a scratch disk with a FireCuda NVMe SSD inside for Thunderbolt 3 speeds of up to 2800MB/sec. It’s suitable for up to 8K high-res and super slow motion footage and has the latest Thunderbolt 3 controller. USB 3.1 is also supported.

The Rugged BOSS SSD has 1TB of capacity with speeds of up to 430MB/sec and direct file transfers via the integrated SD card slot and USB port. A built-in status screen provides real-time updates on transfers, capacity, and battery life. LaCie BOSS apps on iOS and Android enable users to view, name and delete footage.

The Rugged SSD has MSRPs of £189.99 (500GB), £319.99 (1TB) and £529.99 (2TB) and is available from Amazon and other resellers.

The Rugged SSD Pro has MSRPs of £429.99 (1TB) and £749.99 (2TB), and is available from Amazon and the following resellers: Jigsaw; CCK; Protape; Wex.

The Rugged BOSS SSD has an MSRP of £499.99, and is available from Amazon.

StorageCraft

StorageCraft, the US-based data protection business, has published the findings of an independent global research study on experiences and attitudes of IT decision-makers around data management.

Some 88 per cent of UK respondents believe data volume will increase 10-fold or more in the next five years. And 81 per cent expressed concern about risk and business impact when asked about the potential impact of this growth.

- 61 per cent expect an increase in operational costs

- 47 per cent foresee an inability to recover quickly enough in the event of a data outage

- 46 per cent anticipate they will be more susceptible to security risks

- 32 per cent envisage that strategic projects will fail

- 29 per cent predict that revenue generation will lag

So they had better buy StorageCraft data protection.

Shorts

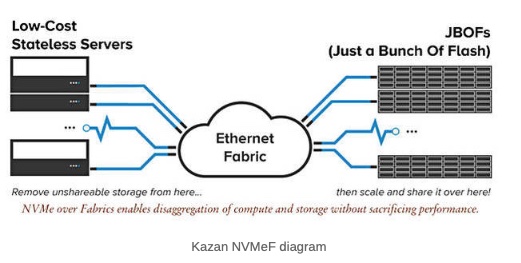

Architecting IT has published a user guide, ‘NVMe in the Data Centre’. It can be bought and downloaded here.

Cloudera’s results for the second quarter of fiscal year 2020, ended July 31, 2019, showed total revenue of $196.7m and subscription revenue of $164.1m, with annualised recurring revenue growing 16 per cent Y/Y. Loss from operations was $89.1m, compared to a loss of $29.4m a year ago.

Object storage supplier Cloudian says it had record (but unquantified) revenue in the first half of its fiscal year ended July 31, 2019, with significant year-over-year growth in both quarters. Sales in North America for the period increased more than 65 per cent.

Cloud-based data protection supplier Cobalt Iron has announced Cyber Shield, an extension of its Adaptive Data Protection SaaS product that locks down and shields its customers’ backup data from loss, corruption, or attack.

Enterprise storage software startup Datera claimed first-half business growth of over 500 per cent, which included its largest quarterly billings, largest new deal and largest expansion deal. CEO Guy Churchward said: “It’s like a switch was thrown in the last six months.”

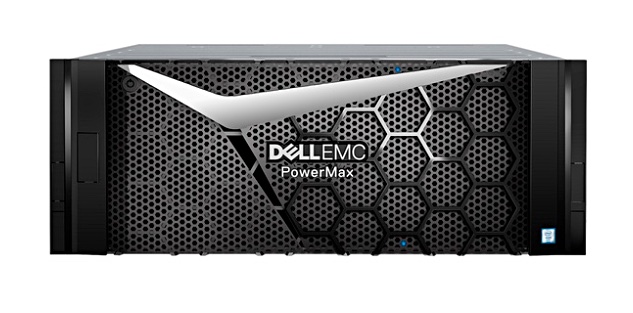

Dell EMC Isilon and NVIDIA’s joint reference architecture, featuring the all-flash Isilon F800 with the NVIDIA DGX-1 GPU server, is now commercially available in EMEA as an integrated turnkey AI system sold through joint strategic channel partners.

Research house Dell’Oro Group said the worldwide server and storage systems market declined eight per cent year-over-year in 2Q 2019. This was the first decline in eight quarters, with softness in the enterprise and cloud sectors.

Hitachi Vantara has launched Lumada Manufacturing Insights, a suite that uses AI and ML to eliminate data silos and help manufacturers optimise machine, production and quality outcomes.

HPE said it delivered robust performance in all-flash array revenue, with 22.7 per cent year-over-year growth in the second 2019 quarter, according to IDC. It brags that the other two vendors in the top three – Dell and NetApp – posted year-over-year declines.

IBM Spectrum Scale can be used as platform storage for running containerised Hadoop/Spark workloads.

Kingston Technology Europe has been ranked the top DRAM module supplier in the world, according to the latest rankings by DRAMeXchange.

South Africa’s Newzroom Afrika, a 24/7 news channel, has bought a 1PB cluster of MatrixStore object storage. It has access to content provided by Interconnect (for Avid Interplay) and the Vision application. The cluster will be installed in a facility in Johannesburg.

Mellanox is on track to ship over one million ConnectX and BlueField Ethernet network adapters in Q3 2019, a new quarterly record.

Cloud file services supplier Nasuni has a new patent, No. 10,311,153, for a “versioned file system with global lock” that covers Nasuni Global File Lock technology.

HPC storage supplier Panasas has published a case study featuring the marine survey activities of customer Magseis Fairfield, a Norway-based geophysics firm providing ocean bottom seismic acquisition services for exploration and production companies.

Cloud file collaboration service provider Panzura has signed a partnership deal with Workspot. The latter’s Global Desktop Fabric for Cloud VDI enables Panzura customers to deploy cloud desktops and workstations in any Azure region around the globe.

IDC lists UK-based Redstor in all five main categories of Market Glance: Data Protection as a Service, Q3 2019: backup as a service, archive as a service, disaster recovery as a service, workload migration and backup/recovery tools.

Seagate has sold a 140,000 square foot Cupertino office building to a realty investment firm, Rubicon Point Partners, for $107.5m.

VAST Data has joined the STAC (financial industry benchmarking) council.

IDC examines Western Digital’s ActiveScale product family. The report, ‘The Economic and Operational Benefits of Moving File Data to Object-Based Storage’, is available for download via WD.

People

Acronis has appointed Kirill Tatarinov as executive vice chairman “to help lead the company in its next phase of growth”. A board member since Dec 2018, Tatarinov previously held leadership positions at Citrix and Microsoft.

Veritas has appointed Mike Walkey as head of channel sales and the company’s alliances with Amazon Web Services, Google, Microsoft and other cloud providers. He was SVP of strategic partners and alliances at Hitachi Vantara, from which he resigned, apparently to retire.