DDN is being certified by Nvidia to provide the storage component of a reference architecture intended for cloud service providers (CSPs) building Nvidia GPU-powered AI factory infrastructure.

This CSP reference architecture (RA) is a blueprint for building datacenters that can supply generative AI and large language model (LLM) services similar to those offered by the big three public clouds and other hyperscalers. The Nvidia Cloud Partner scheme covers organizations that offer hosted software and hardware services in a cloud or managed services model to customers using Nvidia products.

DDN’s SVP and CMO, Jyothi Swaroop, said in a statement: “This reference architecture, developed in collaboration with Nvidia, gives cloud service providers the same blueprint to a scalable AI system as those already in production in the largest AI datacenters worldwide, including Nvidia’s Selene and Eos supercomputers.

“With this fully validated architecture, cloud service providers can ensure their end-to-end infrastructure is optimized for high-performance AI workloads and can be deployed quickly using sustainable and scalable building blocks, which not only offer incredible cost savings but also a much faster time to market.”

Marc Hamilton, Nvidia VP for Solutions Architecture and Engineering, writes in a blog post: “LLM training involves many GPU servers working together, communicating constantly among themselves and with storage systems. This translates to east-west and north-south traffic in datacenters, which requires high-performance networks for fast and efficient communication.”

Nvidia’s cloud partner RA includes:

- Nvidia GPU servers, from Nvidia and its manufacturing partners, based on the Hopper and Blackwell architectures.

- Storage offerings from certified partners, including those validated for DGX SuperPOD and DGX Cloud.

- Quantum-2 InfiniBand and Spectrum-X Ethernet east-west networking.

- BlueField-3 DPUs for north-south networking and storage acceleration, elastic GPU computing, and zero-trust security.

- In/out-of-band management tools and services for provisioning, monitoring, and managing AI datacenter infrastructure.

- Nvidia AI Enterprise software, including:

  - Base Command Manager Essentials, which helps cloud providers provision and manage their servers.

  - The NeMo framework, to train and fine-tune generative AI models.

  - NIM microservices, designed to accelerate deployment of generative AI across enterprises.

  - Riva, for speech services.

  - RAPIDS Accelerator for Apache Spark, to speed up Spark workloads.

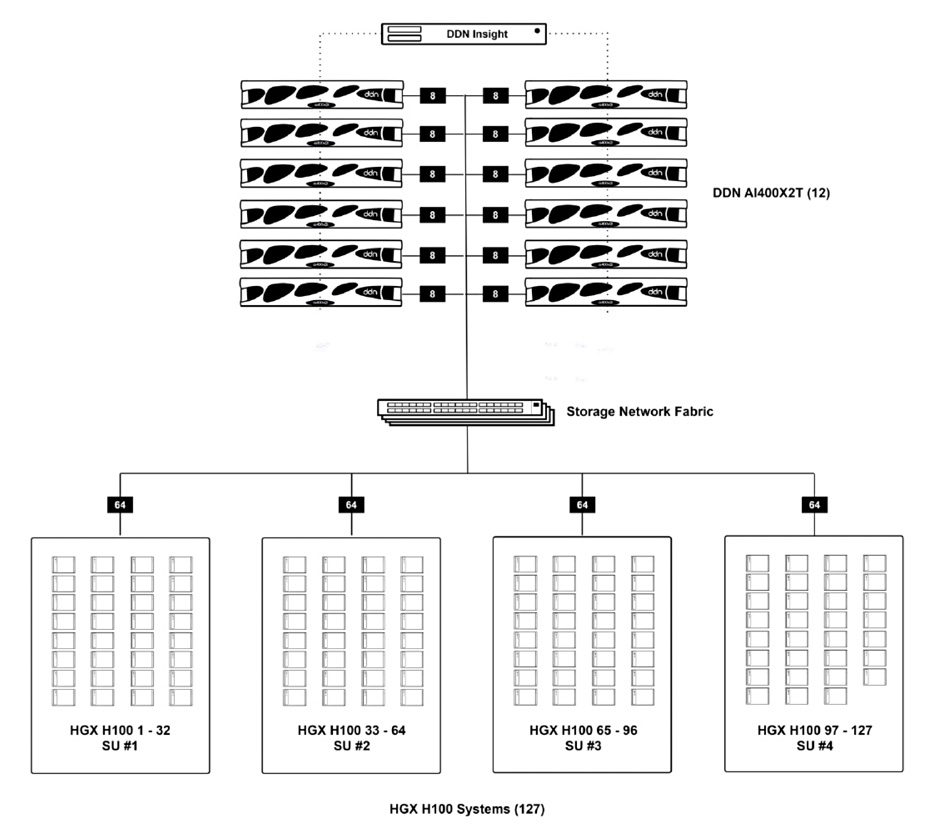

DDN’s storage component is its Lustre-based A³I (Accelerated, Any-Scale AI) system, built from AI400X2T all-flash Turbo appliances and managed with DDN’s Insight software. DDN says it is a fully validated and optimized high-performance AI storage system for CSPs offering services based on Nvidia’s HGX H100 8-GPU servers.

Each AI400X2T appliance delivers over 110 GBps and 3 million IOPS directly to HGX H100 systems.

An A³I multi-rail feature enables the grouping of multiple network interfaces on an HGX system to achieve faster aggregate data transfer capabilities without any switch configuration such as channel groups or bonding. It balances traffic dynamically across all the interfaces, and actively monitors link health for rapid failure detection and automatic recovery.
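DDN has not published how multi-rail chooses an interface, so the following is only a toy Python model of the general idea, not DDN’s code. Each transfer goes to the healthy rail with the fewest bytes in flight, a rail marked down is skipped, and it rejoins the pool once marked up again. The class and method names (`MultiRail`, `submit`, `mark_down`, `mark_up`) and the rail names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Rail:
    name: str
    healthy: bool = True
    in_flight: int = 0  # bytes submitted but not yet completed


class MultiRail:
    """Toy model: least-loaded selection across healthy network rails."""

    def __init__(self, names: list[str]):
        self.rails = {n: Rail(n) for n in names}

    def mark_down(self, name: str) -> None:
        # Would be called by a (hypothetical) link-health monitor on failure.
        self.rails[name].healthy = False

    def mark_up(self, name: str) -> None:
        # Link recovered: the rail rejoins the pool automatically.
        self.rails[name].healthy = True

    def submit(self, nbytes: int) -> str:
        healthy = [r for r in self.rails.values() if r.healthy]
        if not healthy:
            raise RuntimeError("no healthy rails available")
        # Balance dynamically: pick the healthy rail with the least outstanding data.
        rail = min(healthy, key=lambda r: r.in_flight)
        rail.in_flight += nbytes
        return rail.name

    def complete(self, name: str, nbytes: int) -> None:
        # Transfer finished: release its bytes from that rail's queue.
        self.rails[name].in_flight -= nbytes


mr = MultiRail(["rail0", "rail1", "rail2", "rail3"])
first = [mr.submit(1 << 20) for _ in range(4)]          # spreads across all rails
mr.mark_down("rail1")
after_failure = [mr.submit(1 << 20) for _ in range(3)]  # rail1 is skipped
mr.mark_up("rail1")
after_recovery = mr.submit(1 << 20)                     # least-loaded rail again
```

Note that no switch-side bonding appears anywhere in the model: the balancing decision lives entirely on the host, which is the property the multi-rail description emphasizes.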

A Hot Nodes software enhancement enables the use of NVMe drives in an HGX system as a local cache for read-only operations. It improves the performance of apps where a dataset is accessed multiple times during a workflow. This is typical with deep learning (DL) training, where the same input data set or portions of the same input data set are accessed repeatedly over multiple training iterations.
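The read-only caching idea behind Hot Nodes can be illustrated with a simple read-through LRU cache. This is a hypothetical sketch, assuming whole-file caching, least-recently-used eviction and a `fetch` callback standing in for a read from the shared Lustre file system; DDN has not said Hot Nodes works this way. Because the cache only serves reads, it needs no write-back or invalidation path.

```python
from collections import OrderedDict
from typing import Callable


class ReadOnlyLocalCache:
    """Read-through LRU cache on (hypothetical) local NVMe, keyed by file path."""

    def __init__(self, fetch: Callable[[str], bytes], capacity_bytes: int):
        self.fetch = fetch              # reads from shared storage on a miss
        self.capacity = capacity_bytes
        self.used = 0
        self.entries: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, path: str) -> bytes:
        if path in self.entries:
            self.entries.move_to_end(path)  # mark as most recently used
            self.hits += 1
            return self.entries[path]
        self.misses += 1
        data = self.fetch(path)
        if len(data) <= self.capacity:
            # Evict least recently used files until the new one fits.
            while self.used + len(data) > self.capacity:
                _, evicted = self.entries.popitem(last=False)
                self.used -= len(evicted)
            self.entries[path] = data
            self.used += len(data)
        return data


calls = []

def fetch_from_shared_fs(path: str) -> bytes:  # hypothetical stand-in for a Lustre read
    calls.append(path)
    return b"\0" * 10

cache = ReadOnlyLocalCache(fetch_from_shared_fs, capacity_bytes=100)
for epoch in range(3):  # same dataset re-read every training epoch
    for path in ["shard0", "shard1", "shard2", "shard3"]:
        cache.read(path)
print(cache.hits, cache.misses)
```

Over three epochs of the same four files, only the first epoch goes to shared storage; the next two are served from the local cache, which is the repeated-access pattern the paragraph above describes.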

A³I has a shared parallel architecture with redundancy and automatic failover, and DDN says it enables and accelerates end-to-end data pipelines for DL workflows of any scale running on HGX systems. According to DDN, significant acceleration can be achieved by running an AI application across multiple HGX systems simultaneously and training candidate neural network variants in parallel.

The DDN RA specifies that “for NCP (Nvidia Cloud Partner) deployments, DDN uses two distinct appliance configurations to deploy a shared data platform. The AI400X2T-OSS appliance provides data storage through four OSS (Object Storage Server) and eight OST (Object Storage Target) appliances and is available in 120, 250 and 500 TB useable capacity options. The AI400X2-MDS appliance provides metadata storage through four MDSs (Metadata Server) and four MDT (Metadata Target) appliances. Each appliance provides 9.2 billion inodes. Both appliance configurations must be used jointly to provide a file system and must be connected to HGX H100 systems through RDMA over Converged Ethernet (RoCE) using ConnectX-7 HCAs. Each appliance provides eight interfaces, two per OSS/MDS, to connect to the storage fabric.”
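To put the quoted figures to work, here is a back-of-the-envelope sizing helper. It is a sketch, not DDN’s sizing method. It assumes capacity and file counts scale linearly with appliance count, that the 9.2 billion inode figure applies per MDS appliance, and that at least one appliance of each type is needed because the two must be used jointly. The function name `size_filesystem` is hypothetical.

```python
import math

# Figures quoted from the DDN RA; treat them as nominal.
OSS_CAPACITY_OPTIONS_TB = (120, 250, 500)  # usable TB per AI400X2T-OSS appliance
MDS_INODES = 9_200_000_000                 # assumed to be per AI400X2-MDS appliance
INTERFACES_PER_APPLIANCE = 8               # two per OSS/MDS, four servers each


def size_filesystem(usable_tb: float, files: int, capacity_option_tb: int = 500) -> dict:
    """Rough appliance counts for a target capacity and file count (hypothetical)."""
    if capacity_option_tb not in OSS_CAPACITY_OPTIONS_TB:
        raise ValueError(f"capacity option must be one of {OSS_CAPACITY_OPTIONS_TB}")
    # Both appliance types are required, so never size either below one.
    oss = max(1, math.ceil(usable_tb / capacity_option_tb))
    mds = max(1, math.ceil(files / MDS_INODES))
    return {
        "oss_appliances": oss,
        "mds_appliances": mds,
        "fabric_ports": (oss + mds) * INTERFACES_PER_APPLIANCE,  # RoCE links to the storage fabric
    }


plan = size_filesystem(usable_tb=2000, files=20_000_000_000)
print(plan)
```

For example, 2,000 TB usable and 20 billion files with the 500 TB option works out to four OSS appliances, three MDS appliances and 56 storage fabric ports. Real deployments would also size for bandwidth and the HGX system count, which this sketch ignores.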

The RA contains configuration diagrams for hooking up DDN storage to 127, 255, 1,023, and 2,047 HGX H100 systems; this is big iron infrastructure by any standards.

DDN says a single AI400X2T delivers 47 GBps read and 43 GBps write bandwidth to a single HGX H100 GPU server. Yesterday we noted Western Digital’s OpenFlex Data24 all-flash disaggregated NVMe drive storage delivers a claimed 54.56 GBps read and 52.6 GBps write bandwidth to a GPU server using GPUDirect. However, WD is not an Nvidia Cloud Partner.

We recently covered MinIO’s DataPOD, which delivers stored objects to GPU servers “with a distributed MinIO setup delivering 46.54 GBps average read throughput (GET) and 34.4 GBps write throughput (PUT) with an eight-node cluster. A 32-node cluster delivered 349 GBps read and 177.6 GBps write throughput.” You scale up the cluster size to reach a desired bandwidth level. Like WD, MinIO is not an Nvidia Cloud Partner.
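Normalizing the quoted MinIO totals per node shows why cluster sizing is not a simple multiplication. The per-node rates below are derived from the figures above, not stated by MinIO, and the helper names are hypothetical. Extrapolating linearly from the eight-node read rate would suggest about 60 nodes for 349 GBps, whereas the quoted 32-node cluster delivered that figure.

```python
import math


def per_node_gbps(total_gbps: float, nodes: int) -> float:
    """Average throughput contributed by each node in a cluster."""
    return total_gbps / nodes


def nodes_for_target(target_gbps: float, rate_per_node: float) -> int:
    """Nodes needed if throughput scaled linearly at rate_per_node (an assumption)."""
    return math.ceil(target_gbps / rate_per_node)


# Quoted MinIO DataPOD read (GET) / write (PUT) totals in GBps, keyed by node count.
QUOTED = {8: (46.54, 34.4), 32: (349.0, 177.6)}

for nodes, (read, write) in QUOTED.items():
    print(f"{nodes} nodes: {per_node_gbps(read, nodes):.2f} GBps read/node, "
          f"{per_node_gbps(write, nodes):.2f} GBps write/node")

# Linear extrapolation from the eight-node read rate.
estimate = nodes_for_target(349.0, per_node_gbps(46.54, 8))
print(f"Linear estimate for 349 GBps read: {estimate} nodes (quoted cluster: 32)")
```

These are aggregate object-store figures, so they are not directly comparable with DDN’s per-server file system numbers.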

DDN says its cloud reference architecture addresses the needs of service providers by ensuring maximum performance from their GPUs, reducing deployment times, and providing guidelines for future expansion. DDN’s CSP RA document is available from DDN.