Data orchestrator Hammerspace has produced an AI reference architecture (RA) and is testing its GPUDirect compliance.

Hammerspace provides a global parallel filesystem that lets data in globally distributed and disparate sites be located, orchestrated and accessed as if it were local. Its RA is a data architecture for training and inference of Large Language Models (LLMs) within hyperscale environments. GPUDirect is an Nvidia technology that lets NVMe storage send data to, and receive data from, Nvidia's GPUs directly, without the transfer being routed through, and delayed by, the host server's CPU and memory. A Hammerspace front-ended file storage system can now be used to feed data to LLM training systems with hundreds of billions of parameters and tens of thousands of GPUs.

David Flynn, Hammerspace Founder and CEO, said in a statement: “The most powerful AI initiatives will incorporate data from everywhere. A high-performance data environment is critical to the success of initial AI model training. But even more important, it provides the ability to orchestrate the data from multiple sources for continuous learning. Hammerspace has set the gold standard for AI architectures at scale.”

Hammerspace’s software uses parallel NFS. It says a parallel file system architecture is critical for training AI as countless processes or nodes need to access the same data simultaneously. Its orchestration software provides an AI training data pipeline that can deliver the necessary data to very large numbers of GPUs.

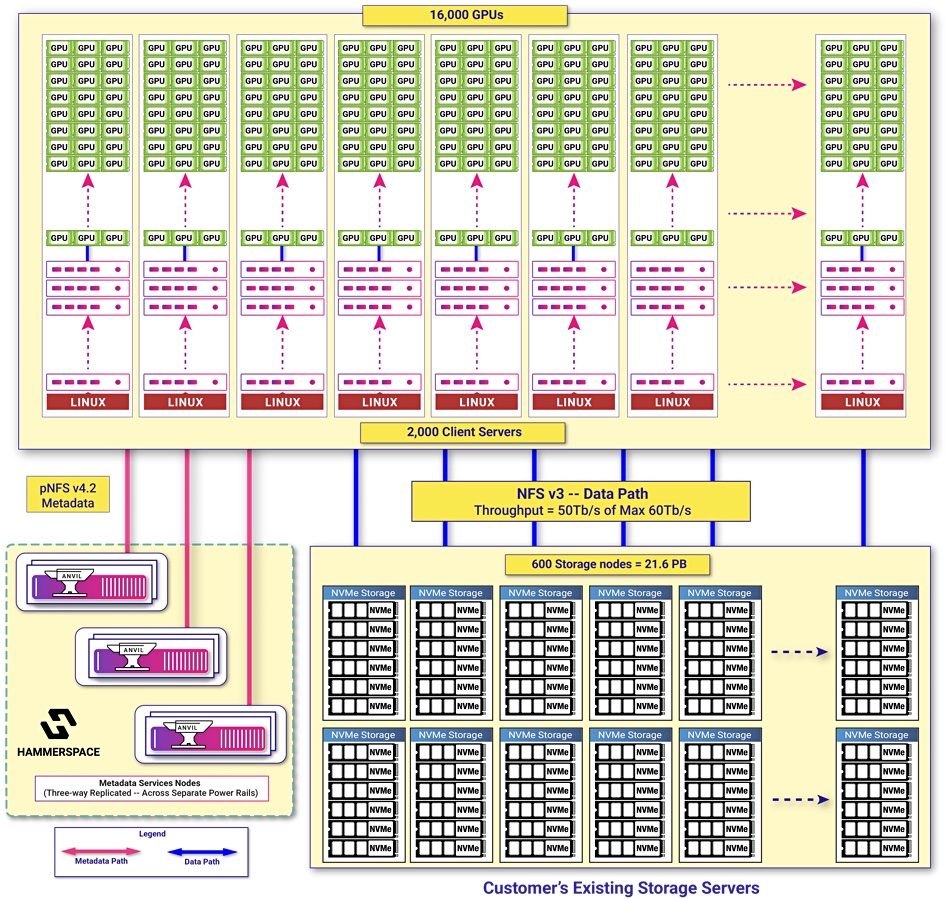

The AI RA involves several components:

- GPUs

- Client servers

- DSX (Data Storage eXtension) storage nodes with NVMe SSDs

- Anvil Metadata service nodes

- NFS v3 data path between storage nodes, client servers and GPUs

- pNFS v4.2 control path between metadata servers and client servers
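The split between the pNFS control path and the NFSv3 data path can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not Hammerspace's implementation: the classes `MetadataService` and `StorageNode`, the round-robin striping and the `write_striped`/`parallel_read` helpers are all hypothetical. It shows a client making one metadata request to learn where a file's pieces live, then reading those pieces directly from the storage nodes in parallel, so no file data passes through the metadata service.

```python
"""Toy model of the pNFS control-path / data-path split.

Hypothetical names throughout: MetadataService, StorageNode, Layout and the
round-robin striping are illustrative assumptions, not Hammerspace's API.
"""
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Layout:
    # Ordered (storage node name, stripe index) pairs describing one file.
    stripes: tuple[tuple[str, int], ...]


class StorageNode:
    """Stands in for a DSX node that serves file data (the data path)."""

    def __init__(self, name: str):
        self.name = name
        self.blocks: dict[tuple[str, int], bytes] = {}

    def read(self, path: str, stripe: int) -> bytes:
        return self.blocks[(path, stripe)]


class MetadataService:
    """Stands in for an Anvil node: hands out layouts, never serves data."""

    def __init__(self):
        self.layouts: dict[str, Layout] = {}
        self.requests = 0  # counts control-path requests only

    def get_layout(self, path: str) -> Layout:
        self.requests += 1
        return self.layouts[path]


def write_striped(mds: MetadataService, nodes: dict[str, StorageNode],
                  path: str, data: bytes, stripe_size: int) -> None:
    # Cut the file into fixed-size stripes and place them round-robin.
    names = list(nodes)
    stripes = []
    for idx, start in enumerate(range(0, len(data), stripe_size)):
        name = names[idx % len(names)]
        nodes[name].blocks[(path, idx)] = data[start:start + stripe_size]
        stripes.append((name, idx))
    mds.layouts[path] = Layout(tuple(stripes))


def parallel_read(mds: MetadataService, nodes: dict[str, StorageNode],
                  path: str) -> bytes:
    # Control path: a single request to learn where the stripes live.
    layout = mds.get_layout(path)
    # Data path: fetch every stripe straight from its storage node, in
    # parallel; pool.map returns results in stripe order.
    with ThreadPoolExecutor() as pool:
        parts = pool.map(lambda s: nodes[s[0]].read(path, s[1]), layout.stripes)
        return b"".join(parts)


if __name__ == "__main__":
    nodes = {n: StorageNode(n) for n in ("dsx1", "dsx2", "dsx3")}
    mds = MetadataService()
    write_striped(mds, nodes, "/train/shard0", b"abcdefghij", stripe_size=4)
    print(parallel_read(mds, nodes, "/train/shard0"))
    print("metadata requests:", mds.requests)
```

Adding more storage nodes in this model adds more parallel data streams while the metadata load per file read stays at one request, which is the intuition behind keeping the metadata server out of the data path.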

DSX storage nodes can run as bare-metal, virtual or containerized software, and provide parallel, linearly scalable performance from any block storage.

A diagram, based on an actual Hammerspace installation at a customer site, shows how they are related:

The Hammerspace parallel file system client is the NFSv4.2 client built into Linux, which uses Hammerspace's FlexFiles (Flexible File Layout) software included in Linux distributions. This lets standard Linux client servers get direct, high-performance access to data through Hammerspace's software.

The storage nodes can be existing commodity white box Linux servers, Open Compute Project (OCP) hardware, Supermicro servers or NAS filers. Hammerspace says that, by using training data wherever it’s stored, it streamlines AI workloads by minimizing the need to copy and move files into a consolidated new repository.
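The idea of using data where it already sits, rather than copying it into a new repository, can be pictured as a single logical namespace layered over existing stores. The sketch below is a hypothetical illustration, not how Hammerspace builds its namespace: the `Backend` and `GlobalNamespace` classes, the site and export names, and the longest-prefix mapping rule are all assumptions. Attaching a store records where its data lives; resolving a logical path returns the backend and the path on that backend, and no file is moved.

```python
"""Toy global namespace over data left in place.

Hypothetical: Backend, GlobalNamespace and the longest-prefix mapping are
illustrative assumptions, not Hammerspace's implementation.
"""
from __future__ import annotations

from dataclasses import dataclass
from pathlib import PurePosixPath


@dataclass(frozen=True)
class Backend:
    name: str    # e.g. an existing NAS filer or a pool of DSX nodes
    site: str    # where the data physically lives
    export: str  # the path of the data on that backend


class GlobalNamespace:
    def __init__(self):
        self._mounts: dict[PurePosixPath, Backend] = {}

    def attach(self, logical_prefix: str, backend: Backend) -> None:
        # Map an existing store into the namespace; nothing is copied.
        self._mounts[PurePosixPath(logical_prefix)] = backend

    def resolve(self, logical_path: str) -> tuple[Backend, str]:
        path = PurePosixPath(logical_path)
        # Try the deepest prefixes first so nested mounts override parents.
        for prefix in sorted(self._mounts, key=lambda p: len(p.parts), reverse=True):
            if path == prefix or prefix in path.parents:
                backend = self._mounts[prefix]
                rest = path.relative_to(prefix)
                return backend, str(PurePosixPath(backend.export) / rest)
        raise FileNotFoundError(logical_path)


if __name__ == "__main__":
    ns = GlobalNamespace()
    ns.attach("/datasets", Backend("nas-filer-1", "london", "/vol/ds"))
    ns.attach("/datasets/imagenet", Backend("dsx-pool", "frankfurt", "/exports/img"))
    print(ns.resolve("/datasets/imagenet/train/0001.jpg"))
    print(ns.resolve("/datasets/text/corpus.txt"))
```

Applications see one path tree, while each request is routed to whichever filer or site actually holds the data.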

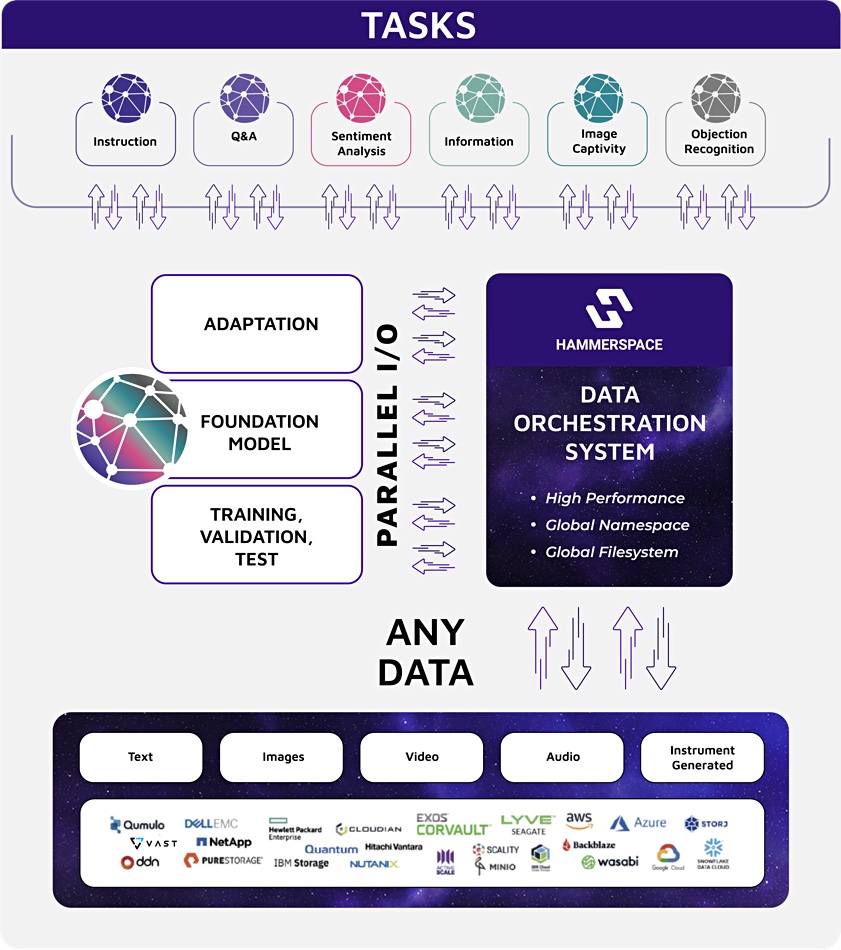

At the application level, data is accessed through a standard NFS file interface, giving applications direct access to files in the standard format they are typically designed for. There is no need for specialized parallel file systems such as Lustre or Storage Scale (formerly GPFS), which require their own client software. A second diagram shows the possible data sources for the AI pipeline:

An IEEE article, “Overcoming Performance Bottlenecks With a Network File System in Solid State Drives” by David Flynn and Thomas Coughlin, describes how the Hammerspace software provides a high-speed data path. It discusses the idea of a decentralized storage architecture with elements of file systems embedded in solid state drives.

The authors note that: “With Network File System (NFS) v4.2, the Linux community introduced a standards-based software solution to further drive speed and efficiency. NFSv4.2 allows workloads to remove the file server and directory mapping from the data path, which enables the NFSv3 data path to have uninterrupted connection to the storage.” This is partly because the metadata server is out of the data path.

The authors then write: “Yet another data path efficiency is now available leveraging GPUDirect architectures. This passes a single PCIe hop and copy through the host CPU and memory offering similar data path efficiencies to those of NVMe-oF.”

GPUDirect

Hammerspace presented at a TechLive event in London on November 16, and B&F asked whether Hammerspace had ideas about actually supporting GPUDirect itself. Molly Presley, SVP Marketing for the company, said: “Hammerspace is testing Nvidia GPUDirect now.”

Since a Hammerspace installation is a front end to existing filers, that implies it could provide a GPUDirect connection to those filers, whether they natively supported GPUDirect or not. Was this the case? Presley said: “Yes.”

Hammerspace can provide GPUDirect access to files stored in a shared environment.

If so, then as long as AMD and Intel GPUs support RDMA, Hammerspace could theoretically provide GPUDirect-level data access speeds to those GPU systems as well. As far as we know, no other supplier can yet do this.