Volumez has developed a cloud-delivered block storage provisioning service for containerized applications. Storage features such as performance, resiliency and security are specified by the customer’s declarative input. Volumez provisions the data path to local on-premises or raw public cloud storage services, such as Amazon EC2 Instance Store, Google Compute Local SSDs, and Azure Ephemeral OS Disks. Volumez says it makes high-performance data services composable, scalable, and universal across public and private clouds.

Update: Lightbits’ objections to Volumez’ competitive comparison tables added to Footnote below; 13 November 2023.

The vendor is positioning its software as a boost to cloud providers’ component capabilities, saying it allows them to provide tailored, optimized, robust data infrastructure for data-intensive applications, plus storage services.

B&F talked to Volumez’ chief product officer, John Blumenthal, about Volumez’ technology characteristics.

Blocks & Files: What issues do you see for buyers of cloud storage when comparing suppliers?

John Blumenthal: The storage industry has a long history of competitive comparisons with shifting criteria. Well-established forums, such as the Storage Performance Council, the Transaction Processing Performance Council, workload-specific benchmarks, etc, were created to generate a basis for a marketing war. Though the forums continue to define evaluation (benchmarking) criteria, the industry has not stoked much creativity since Pure led the shift in the purchasing mindset from cost per storage capacity to cost per IOPS.

Since that shift, cloud storage buyers must incorporate two unaccounted factors:

- Cloud Awareness

The cloud is not too unlike early VMware environments. When deploying virtual machines on existing storage systems, there were often challenges with performance, resilience, and storage services. Cloud infrastructure suffers from similar challenges where layers of code sit between the application and the storage resource, making it difficult, costly, and inconsistent to deploy storage in the cloud. This lack of “cloud awareness” keeps organizations from moving critical applications to cloud infrastructure.

- Weak Cloud Infrastructure Measurement

Measuring performance accurately in the cloud, especially as new media is made available, is stunted due to lack of calibrated, consistent benchmarking tools. The challenge is further exacerbated by SDS (Software-Defined Storage) layered on top of public cloud infrastructure.

Blocks & Files: Could you explain what you mean by cloud awareness please?

John Blumenthal: All feature/function storage requirements are known and available in cloud storage…except for one: Cloud Awareness. While market dynamics make it nearly impossible for meaningful public storage solution comparisons, Cloud Awareness is the new comparison dimension that changes the sad situation. Today, most vendors are reduced to providing a set of “pdf comparisons” researchers pull from one another’s public websites. As a result, qualified evaluations are conducted in private, internal bake-offs which are never made public. Licenses are crafted with language to prohibit using non-public information and performance results in a public comparison.

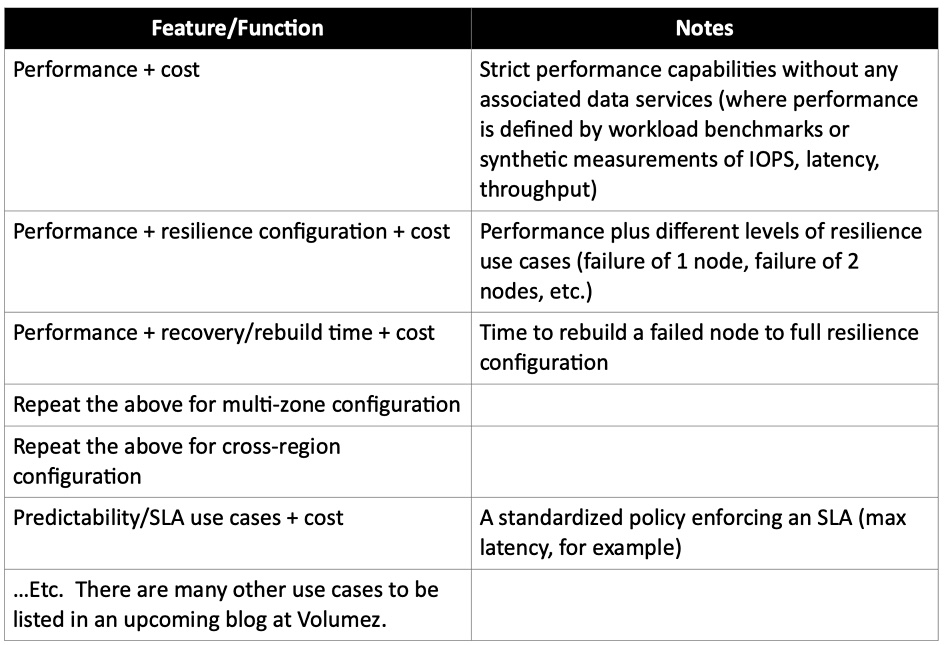

Cloud Awareness, as a new dimension, starts by first demonstrating all the customary, well-known feature/functions every storage system contains. Next, these feature/functions must be exercised by a set of normalized workloads/benchmarks to generate data on a set of standardized and well-known storage use cases:

Blocks & Files: How does cloud awareness relate to the public cloud infrastructure?

John Blumenthal: Cloud Awareness is more than just (ephemeral) storage/media. It encompasses all the cloud infrastructure components and capabilities in which storage/media are set and operate on data – “Cloud Awareness” extends the concept of storage to the full cloud infrastructure for data intensive applications. It is central to understanding and controlling data infrastructure performance and costs.

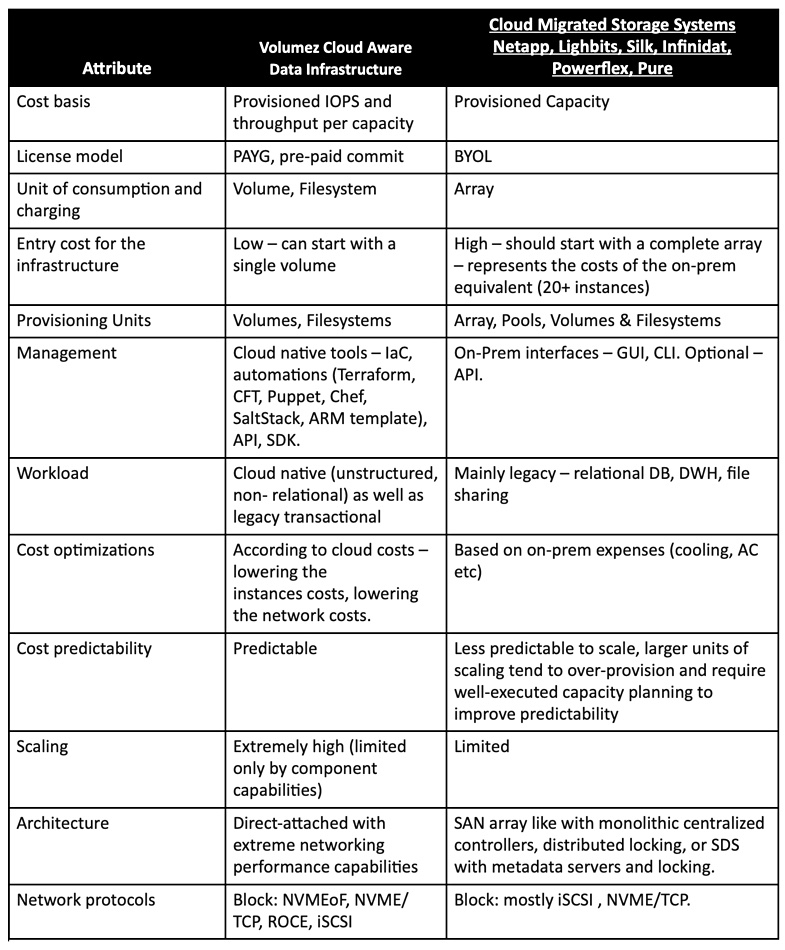

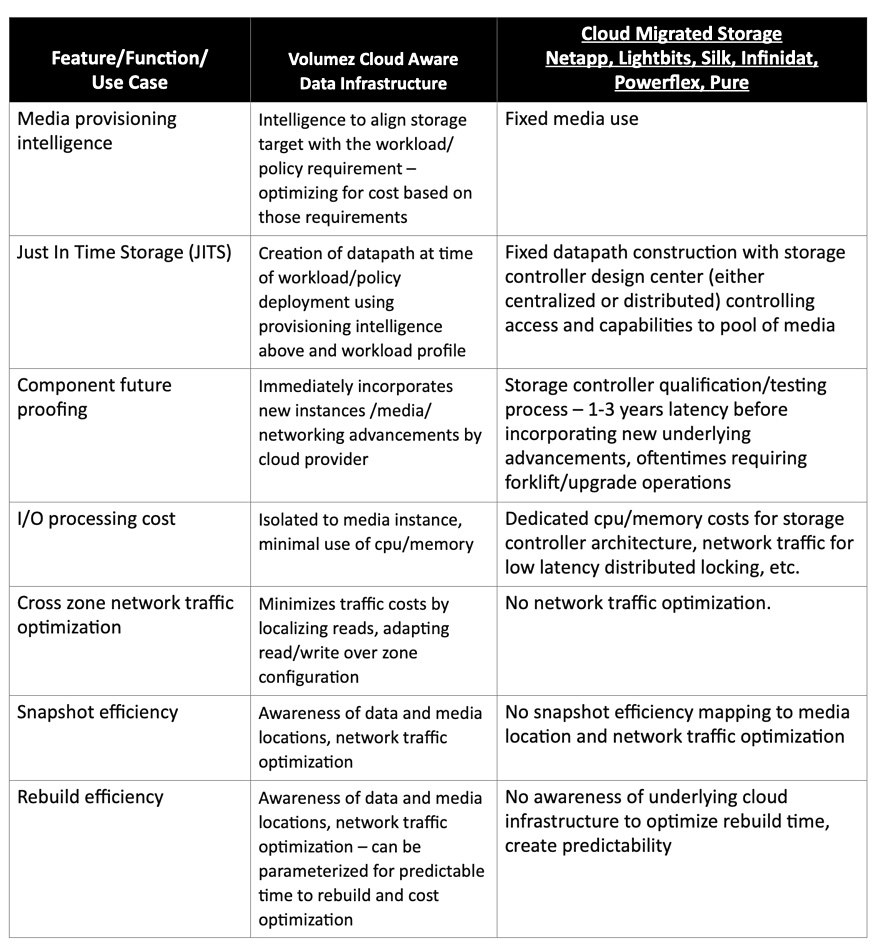

We define “cloud awareness” as a mapping of the common storage feature/function set against cloud infrastructure used by storage system operations. Here are some examples of how Volumez differentiates block services in the public cloud through being “cloud aware” vs. “cloud migrated.”

Blocks & Files: Where does cost come in?

John Blumenthal: The one constant across industry testing is cost. In the cloud age cost is Sauron. It sees everything and infects everything. With telco-level usage itemization appearing in cloud monthly bills circulated to finance teams, cloud storage users cannot evade its gaze. Back in the good ol’ capex days, costs could be buried in less detailed or non-existent operational usage data. The visibility and pain associated with cloud cost have spawned an entirely new role and persona in corporate IT: finops. A Linux Foundation project, the FinOps Foundation, has been created to build a community and convey its criticality.

Most of the storage projects in finops involve best practice configurations in the use of public cloud native storage and proper accounting. There is little concerning storage systems containing “cloud aware” architectural design centers or use cases. To paraphrase Wittgenstein, “whereof one cannot speak of cost in the public cloud, thereof one must be silent.” My point here is that cloud storage comparisons must include cost to be useful to the consumer since all feature/functions remain constant – the crux is around the cost dimension for operating those feature/functions in a public cloud infrastructure.
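As a rough illustration of why the cost dimension matters (not taken from Volumez), the sketch below ranks two storage offerings by cost per capacity and by cost per IOPS. The offering names and all figures are hypothetical and chosen only to show that the two metrics can point to different winners.

```python
from dataclasses import dataclass


@dataclass
class Offering:
    """A hypothetical storage offering; all numbers are illustrative only."""
    name: str
    monthly_cost_usd: float  # total monthly bill
    capacity_gb: float       # usable capacity
    iops: float              # sustained IOPS delivered

    def cost_per_gb(self) -> float:
        # Older purchasing metric: dollars per unit of capacity.
        return self.monthly_cost_usd / self.capacity_gb

    def cost_per_kiops(self) -> float:
        # Newer purchasing metric: dollars per thousand IOPS.
        return self.monthly_cost_usd / (self.iops / 1000)


# Made-up figures, not measurements of any real product.
offerings = [
    Offering("offering-a", 1000.0, 10_000.0, 50_000.0),
    Offering("offering-b", 1500.0, 5_000.0, 300_000.0),
]

cheapest_by_capacity = min(offerings, key=Offering.cost_per_gb)
cheapest_by_iops = min(offerings, key=Offering.cost_per_kiops)

print("Cheapest per GB:   ", cheapest_by_capacity.name)
print("Cheapest per kIOPS:", cheapest_by_iops.name)
```

With these invented numbers, the offering that looks cheapest per gigabyte is the more expensive one per IOPS, which is why a comparison that leaves out the cost of actually running the workload can mislead.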

Blocks & Files: How are storage systems in the cloud influenced by CapEx and OpEx?

John Blumenthal: The fundamental differences outlined above arise from the design center of the respective offerings. All storage offerings in the public cloud today were designed and built during the Age of Capex, with on-premises assumptions around resource types, availability, and configurations. They are mostly unmodified versions of what was built for on-premises/capex operating models. The Age of OpEx is upon us with the adoption of the public cloud infrastructure, and while CPU, memory, and networking have taken on new dimensions of capability and cost, storage remains the laggard, anchored in the Age of Capex and the dependence on the centrality of the storage controller. The result is the inability to deliver economically viable data infrastructure for demanding modern workloads in the cloud.

Blocks & Files: How is Volumez different?

John Blumenthal: Volumez is the only solution on the market with a design center built with public cloud infrastructure awareness. Its architecture fundamentally differs from other solutions by leveraging raw storage resources available in a public cloud infrastructure based on workload requirements. Intelligent composition of components and capabilities goes beyond the actual storage media to include awareness of the network, logical and physical fault domains, application location and profile, and the cost structure of the assembly. This is why Volumez is a Data Infrastructure company and not a storage vendor. Volumez transforms the cloud provider’s component capabilities into a tailored, optimized, robust data infrastructure for data-intensive applications. Morphing ephemeral media instances into storage units is only part of the requirement to deliver the incredible performance, availability, and cost benefits our customers enjoy.

Finally, Volumez’ declarative, policy-based expressions (YAML) integrate with Infrastructure as Code (IaC) frameworks driving public cloud consumption, where the Volumez contribution to IaC is called “Data Infrastructure as Code.” Cloud awareness drives the next generation of data infrastructure capability, automation, and economics. Today it can only be found in Volumez.
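To give a feel for what a declarative, policy-based approach means in general, here is a minimal sketch. It is not Volumez’ YAML schema or its composition engine: the `VolumePolicy` and `Candidate` types, their fields, and every number are hypothetical. The idea shown is only that the user states requirements and a selector picks the cheapest candidate configuration that satisfies them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VolumePolicy:
    """Hypothetical declarative requirements (not Volumez' actual schema)."""
    min_iops: int
    max_latency_us: int
    min_replicas: int
    encrypted: bool


@dataclass(frozen=True)
class Candidate:
    """A hypothetical assembled configuration with its expected properties."""
    name: str
    iops: int
    latency_us: int
    replicas: int
    encrypted: bool
    monthly_cost_usd: float


def satisfies(c: Candidate, p: VolumePolicy) -> bool:
    # A candidate qualifies only if it meets every declared requirement.
    return (c.iops >= p.min_iops
            and c.latency_us <= p.max_latency_us
            and c.replicas >= p.min_replicas
            and (c.encrypted or not p.encrypted))


def cheapest_match(candidates: list, policy: VolumePolicy) -> Optional[Candidate]:
    # Among qualifying candidates, prefer the lowest monthly cost.
    matches = [c for c in candidates if satisfies(c, policy)]
    return min(matches, key=lambda c: c.monthly_cost_usd) if matches else None


# Invented candidates for illustration only.
candidates = [
    Candidate("single-local-ssd", 400_000, 150, 1, True, 300.0),
    Candidate("mirrored-local-ssd", 350_000, 200, 2, True, 650.0),
    Candidate("network-disk", 60_000, 1000, 3, True, 500.0),
]

policy = VolumePolicy(min_iops=200_000, max_latency_us=300,
                      min_replicas=2, encrypted=True)
choice = cheapest_match(candidates, policy)
print(choice.name if choice else "no candidate satisfies the policy")
```

The user never names a device or a topology here; changing the policy changes the outcome, which is the essence of the declarative style described above.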

Blocks & Files: What storage benchmarking problems do you see in the Public Cloud?

John Blumenthal: Gaining visibility into cloud aware storage functions/operations requires understanding the characteristics of the resources used by those functions. To reveal these characteristics in a measurable, quantifiable way requires benchmarking and workload profiling that lag the capabilities of cloud infrastructure today. As a result, an accurate depiction of limits and constraints will remain elusive until today’s measurement toolchains are improved.

Synthetic workload testing (fio, vdbench), for example, is complex to configure properly because of the interplay of different settings. With the new performance levels delivered by NVMe, it is difficult to ensure that the workload generator keeps pace with the media it is loading; the distribution of I/O sent to the media is not uniform. As a result, the latencies reported are oftentimes those of the workload generator and not of the media itself. Benchmarking today’s modern media and interconnects becomes a lesson in workload generator tuning and optimization.

Fluctuations introduced by the network complicate matters. These variations can add to queuing times, further distorting the performance picture of the media under test. The public cloud requires a new storage performance toolchain that accurately measures end-to-end performance characteristics. This is a prerequisite for understanding the cost optimizations cloud awareness introduces to data infrastructure. Volumez is currently working on developing a best practices approach and software to provide visibility into cloud data infrastructure performance, much of which is available in the Volumez Block Service UI today.
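The point about measuring the workload generator rather than the media can be illustrated with Little's Law (outstanding I/Os = throughput × latency). The sketch below is a deliberately simplified closed-loop model, not a description of how fio or vdbench work internally: it assumes a single-threaded generator that spends a fixed, hypothetical `generator_overhead_s` per I/O and a device with a fixed service latency. All figures are invented.

```python
def observed_performance(queue_depth: int,
                         device_latency_s: float,
                         generator_overhead_s: float) -> tuple[float, float]:
    """Return (iops, observed_latency_s) for a simplified closed-loop test.

    Assumptions (illustrative only): the device serves each I/O in
    device_latency_s regardless of load, and the generator can issue at
    most one I/O every generator_overhead_s.
    """
    # Rate the device could sustain at this queue depth (Little's Law).
    device_bound_iops = queue_depth / device_latency_s
    # Rate the generator itself can sustain.
    generator_bound_iops = 1.0 / generator_overhead_s
    # The slower of the two sets the achieved throughput.
    iops = min(device_bound_iops, generator_bound_iops)
    # Little's Law again: latency seen per I/O with queue_depth outstanding.
    observed_latency_s = queue_depth / iops
    return iops, observed_latency_s


DEVICE_LATENCY_S = 100e-6  # hypothetical 100 microsecond media latency

# Generator with 2 microseconds of overhead per I/O: the device is the limit.
fast_iops, fast_latency = observed_performance(32, DEVICE_LATENCY_S, 2e-6)
# Generator with 10 microseconds of overhead per I/O: the generator is the limit.
slow_iops, slow_latency = observed_performance(32, DEVICE_LATENCY_S, 10e-6)

print(f"fast generator: {fast_iops:,.0f} IOPS, {fast_latency * 1e6:.0f} us observed")
print(f"slow generator: {slow_iops:,.0f} IOPS, {slow_latency * 1e6:.0f} us observed")
```

In this toy model the device is identical in both runs, yet the slower generator reports roughly 320 microseconds of latency against the device's 100, because I/Os spend most of their time waiting on the generator. Network jitter would add further queuing on top of this, which the model does not attempt to capture.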

Lightbits footnote

Lightbits told us Volumez’ competitive characterisation of its technology was wrong. It said that, because the competitors were combined into one cell, not all of the analyses are accurate for Lightbits. Specifically:

- Entry cost for the infrastructure: not accurate for Lightbits,

- Workload: not 100% true for Lightbits. We have a customer using us in an AI cloud,

- Cost Optimizations: not accurate for Lightbits,

- Cost Predictability: not accurate for Lightbits,

- Scaling: not accurate for Lightbits.