CERN, Europe’s atom-smashing institution, can store more than an exabyte of online data thanks to its in-house EOS filesystem.

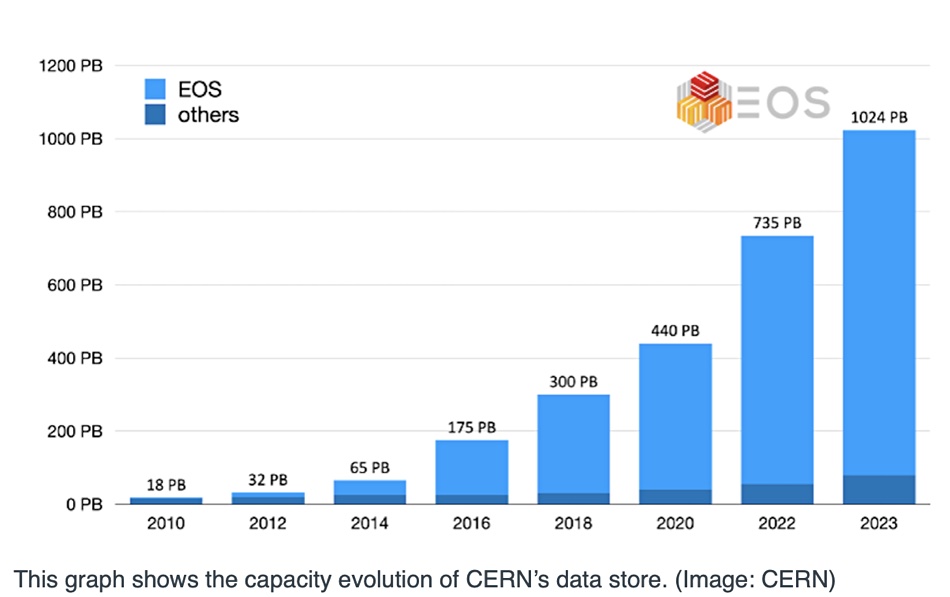

EOS was created by CERN staff in 2010 as an open source, disk-only, Large Hadron Collider data storage system. Its capacity was 18PB in its first iteration – a lot at the time. When atoms are smashed into one another they generate lots of sub-atomic particles and the experiment recording instruments generate lots of data for subsequent analysis of particles and their tracks.

The meaning of the EOS acronym has been lost in time; one CERN definition is that it stands for EOS Open Storage, which is a tad recursive. EOS is a multi-protocol system based on JBODs and not storage arrays, with separate metadata storage to help with scaling. CERN used to have a hierarchical storage management (HSM) system, but that was replaced by EOS – which is complemented with a separate tape-based cold data storage system.

There were two original EOS data centers: one at CERN in Meyrin, Switzerland, and the other in the WIGNER center in Budapest, Hungary. They were separated by 22ms network latency in 2015. CERN explains that EOS is now deployed “in dozens of other installations in the Worldwide LHC Computing GRID community (WLCG), at the Joint Research Centre of the European Commission and the Australian Academic and Research Network.”

EOS places a replica of each file in each datacenter. CERN users (clients) can be located anywhere, and when they access a file the datacenter closest to them serves the data.
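The "closest datacenter serves the data" idea can be sketched in a few lines of Python. This is an illustration only, not EOS code: the `Replica` class, the `pick_replica` function, the datacenter labels, the file path and the latency figures are all hypothetical, and it assumes the client has some latency estimate for each site.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replica:
    datacenter: str  # hypothetical site label, e.g. "meyrin" or "wigner"
    path: str        # hypothetical file path


def pick_replica(replicas, latency_ms):
    """Return the replica whose datacenter has the lowest latency to the client.

    latency_ms maps a datacenter label to an estimated round-trip time.
    Datacenters with no estimate are treated as unreachable.
    """
    reachable = [r for r in replicas if r.datacenter in latency_ms]
    if not reachable:
        raise LookupError("no reachable replica")
    return min(reachable, key=lambda r: latency_ms[r.datacenter])


# One copy of the file in each of the two original datacenters.
replicas = [
    Replica("meyrin", "/eos/demo/run1.root"),
    Replica("wigner", "/eos/demo/run1.root"),
]

# A client sitting close to Meyrin (latency values are made up).
chosen = pick_replica(replicas, {"meyrin": 1.0, "wigner": 23.0})
print(chosen.datacenter)
```

If one site drops out of the latency map, the same call falls back to the surviving copy, which is the practical benefit of keeping a replica in each datacenter.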

EOS is split into independent failure domains for groups of LHC experiments, such as the four largest particle detectors: LHCb, CMS, ATLAS, and ALICE.

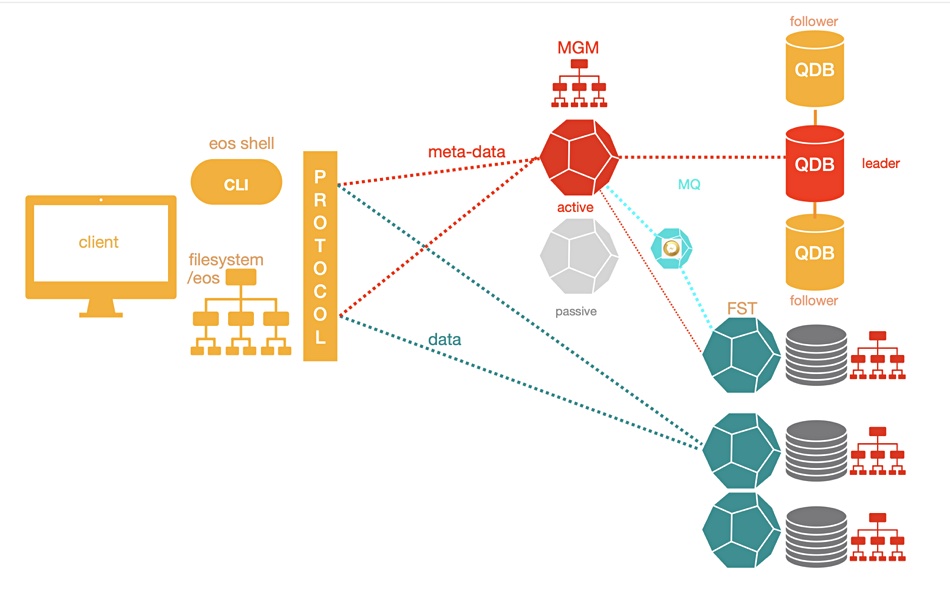

As the diagram shows, the EOS access path has one route for metadata using an MGM (metadata generation mechanism) service and another route for data using FST. The metadata is held in memory on metadata service nodes to help lower file access latency. These server nodes are active-passive pairs with real-time failover capability. The metadata is persisted to QuarkDB – a high-availability key-value store using write-ahead logs.

Files are stored on servers with locally-attached drives, the JBODs, distributed in the EOS datacenters.

Each of the several hundred data-holding server nodes has between 24 and 72 disks, as of 2020. Files are replicated across JBOD disks, and EOS supports erasure coding. Cluster state and configuration changes are exchanged between metadata servers and storage with an MQ message queue service.
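To show why erasure coding is attractive next to plain replication, here is a minimal single-parity sketch in Python. It is not the scheme EOS uses, whose layouts are not described here; it is the simplest possible illustration, assuming `k` data blocks plus one XOR parity block, and the function names are hypothetical.

```python
def xor_blocks(blocks):
    """XOR equally sized byte blocks together, byte by byte."""
    out = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            out[i] ^= byte
    return bytes(out)


def encode(data, k):
    """Split data into k zero-padded blocks and append one XOR parity block."""
    stripe = -(-len(data) // k)  # ceiling division
    padded = data.ljust(stripe * k, b"\0")
    blocks = [padded[i * stripe:(i + 1) * stripe] for i in range(k)]
    return blocks + [xor_blocks(blocks)]


def recover(blocks, missing):
    """Rebuild the block at index `missing` from all the surviving blocks."""
    survivors = [b for i, b in enumerate(blocks) if i != missing]
    return xor_blocks(survivors)


blocks = encode(b"collision event payload", k=4)
rebuilt = recover(blocks, missing=2)  # pretend the disk holding block 2 failed
print(rebuilt == blocks[2])
```

With four data blocks and one parity block the raw-capacity overhead is 1.25x while tolerating one lost disk, against 2x for two full replicas. Production systems typically use codes that survive more than one failure.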

The MGM, FST and MQ services were built using an XRootD client-server setup, which provides a remote access protocol. EOS code is predominantly written in C and C++ with a few Python modules. Files can be accessed with a POSIX-like FUSE (Filesystem in Userspace) client, SFTP, HTTPS/WebDAV or the Xroot protocol – also CIFS (Samba), S3 (MinIO), and gRPC.

The namespace (metadata service) of EOS5 (v5.x) runs on a single MGM node, as above, and is not horizontally scalable. There could be standby nodes to take over the MGM service if the active node becomes unavailable. A CERN EOS architecture document notes: “The namespace is implemented as an LRU driven in-memory cache with a write-back queue and QuarkDB as external KV store for persistency. QuarkDB is a high-available transactional KV store using the RAFT consensus algorithm implementing a subset of the REDIS protocol. A default QuarkDB setup consists of three nodes, which elect a leader to serve/store data. KV data is stored in RocksDB databases on each node.”
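The pattern in that quote, an LRU in-memory cache with a write-back queue in front of a persistent KV store, can be sketched as follows. This is a toy model and not the EOS implementation: the `NamespaceCache` class and its methods are hypothetical, and a plain Python dict stands in for QuarkDB.

```python
from collections import OrderedDict, deque


class NamespaceCache:
    """Toy LRU metadata cache with a write-back queue (illustrative only)."""

    def __init__(self, backing_store, capacity):
        self.store = backing_store   # stand-in for the external KV store
        self.capacity = capacity     # maximum number of cached entries
        self.cache = OrderedDict()   # least recently used entry comes first
        self.pending = deque()       # writes not yet persisted

    def get(self, key):
        if key in self.cache:
            self.cache.move_to_end(key)  # mark as recently used
            return self.cache[key]
        # Cache miss: persist queued writes first so the store is current.
        self.flush()
        value = self.store[key]          # raises KeyError if unknown
        self._insert(key, value)
        return value

    def put(self, key, value):
        self._insert(key, value)
        self.pending.append((key, value))  # persisted later, on flush

    def flush(self):
        """Drain the write-back queue into the backing store, in order."""
        while self.pending:
            key, value = self.pending.popleft()
            self.store[key] = value

    def _insert(self, key, value):
        self.cache[key] = value
        self.cache.move_to_end(key)
        while len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict least recently used


kv = {}  # pretend this dict is the persistent KV store
ns = NamespaceCache(kv, capacity=2)
ns.put("/eos/demo/a", {"size": 1})
ns.put("/eos/demo/b", {"size": 2})
ns.flush()
print(sorted(kv))
```

Writes return as soon as the entry is in memory and the queue, which keeps latency low, while the store catches up asynchronously. A real system also has to decide what happens to queued writes if the node fails, which is where the standby MGM nodes and the three-node QuarkDB cluster (any two of which form a majority) come in.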

Client users are authenticated, with a virtual ID concept, by KRB5 or X509, OIDC, shared secret, JWT and proprietary token authorization.

EOS provides sync and share capability through a CERNbox front-end service with each user having a terabyte or more of disk space. There are sync clients for most common systems.

The tape archive is handled by CERN Tape Archive (CTA) software, and it uses EOS as the user-facing, disk-based front end. Its capacity was set to exceed 1EB this year as well.

Exabyte-level EOS can now store a million terabytes of data on its 111,000 drives – mostly disks with a few SSDs – with an overall read bandwidth of 1TB/sec. Back in June last year it stored more than 7 billion files in 780PB of disk capacity using more than 60,000 disk drives. The user base then totalled 12,000-plus scientists from institutes in 70-plus countries. Fifteen months later the numbers are even larger, and set to go higher. The EOS acronym could reasonably stand for Exabyte Open Storage.
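For a sense of scale, the headline figures can be turned into rough averages. The sketch below assumes decimal units (1EB = 1,000PB = 1,000,000TB) and treats the "more than" figures as exact, so the results are approximations rather than CERN-published numbers.

```python
TB_PER_PB = 1_000
PB_PER_EB = 1_000

# Figures quoted in the article.
capacity_now_tb = 1 * PB_PER_EB * TB_PER_PB   # one exabyte
drives_now = 111_000
capacity_then_tb = 780 * TB_PER_PB            # June last year
drives_then = 60_000                          # "more than 60,000"
read_bandwidth_tb_per_s = 1

# Average capacity per drive, now and then.
tb_per_drive_now = capacity_now_tb / drives_now
tb_per_drive_then = capacity_then_tb / drives_then

# Time to read the whole exabyte once at the quoted aggregate bandwidth.
full_read_days = capacity_now_tb / read_bandwidth_tb_per_s / 86_400

print(f"{tb_per_drive_now:.1f} TB per drive now")
print(f"{tb_per_drive_then:.1f} TB per drive in June last year")
print(f"{full_read_days:.1f} days to read everything once")
```

That works out to roughly 9TB per drive today and, at 1TB/sec, more than 11 days to stream the full exabyte once. The earlier per-drive figure is an upper bound, since the drive count was given as "more than 60,000", and the two sets of numbers may not be counted the same way.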