Real-time data specialist Aerospike is now a member of the HPE Complete program, which lets customers buy Aerospike software directly from HPE and its authorized partners. The Aerospike Real-time Data Platform has been tested and validated with the HPE Alletra 4110 data storage server.

…

Since the Airbyte connector builder entered beta, two months before its official launch, users have built and deployed more than 100 connectors to production to support critical data movement workloads that need long-tail connectors. These are in addition to the 350 data connectors already available. Airbyte started up in January 2020 with the aim of building open-source connectors from data sources to data lakes and warehouses.

…

Data intelligence vendor Alation has announced the Open Data Quality Framework, which brings best-of-breed data quality and data observability capabilities to the Snowflake Data Cloud. It lets users capture data health information, including data quality (DQ) metrics and warnings for consumers, within their workflow and at the points of consumption across numerous data sources. Launch partners include Acceldata, Anomalo, Bigeye, Datactics, Experian, FirstEigen, Lightup, and Soda. Alation says the framework helps its customers strengthen data governance for Snowflake by making data quality information visible.

…

Spreadsheets remain the most popular data analytics tool, used every day by 78 million people worldwide, but they are also among the most error-prone: 90 percent of spreadsheets contain errors caused by incorrect or out-of-date data. Alation says its Connected Sheets for Snowflake lets users instantly find, understand, and trust the data they need without leaving their spreadsheet. They can access Snowflake source data directly from the spreadsheets they work in, without needing to understand SQL or rely on central data teams.

…

Azure NetApp Files double encryption at rest is now in public preview. It adds independent layers of encryption, so an attack that defeats any single layer does not expose the data. The feature is currently available in the West Europe, East US 2, and East Asia regions and will roll out to other regions as the preview progresses.

…

Italian startup Cubbit, a geo-distributed cloud storage enabler, has formed an advisory board whose first members are Alec Ross, Mikko Suonenlahti, and Nicolas Ott. Eighteen months ago it launched the Next Generation Cloud Pioneers programme for Italian companies. More than 130 companies have joined the network, including Aeroporto Marconi di Bologna, Amadori, Bonfiglioli, CNP Vita (Unicredit Group), Granarolo, Marcheno Municipality, and SCM Group. Cubbit is preparing to expand its offer across Europe.

Cubbit DS3 is a multi-tenant, S3-compatible object store optimised for edge and multi-cloud use cases. Files are encrypted, fragmented, redundantly stored, and distributed across geo-distributed networks called Swarms. A Swarm is built on recycled and dedicated computing and storage resources, helping companies and enterprises optimise their storage infrastructure at a fraction of the cost of traditional cloud providers while guaranteeing complete data sovereignty and avoiding lock-in. At least that is the pitch. Cubbit is present in more than 70 countries, and its technology has been deployed with more than 5,000 active nodes.
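The pitch does not say which redundancy scheme DS3 uses. As a rough illustration of how splitting a file into fragments and storing a redundant piece lets data survive the loss of a node, here is a minimal Python sketch using a single XOR parity fragment. This is an assumption for illustration only, not Cubbit's method: the function names are hypothetical, the encryption step is left out, and production systems typically use erasure codes such as Reed-Solomon that can tolerate several lost fragments.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length fragments."""
    return bytes(x ^ y for x, y in zip(a, b))


def split_with_parity(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal-size fragments plus one XOR parity fragment."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")  # zero-pad so every fragment is the same size
    frags = [padded[i * size:(i + 1) * size] for i in range(k)]
    return frags + [reduce(xor_bytes, frags)]


def recover(frags: List[Optional[bytes]], original_len: int) -> bytes:
    """Rebuild the original data when at most one fragment is missing (None)."""
    missing = [i for i, f in enumerate(frags) if f is None]
    if len(missing) > 1:
        raise ValueError("single parity can repair only one lost fragment")
    frags = list(frags)
    if missing:
        # XOR of all surviving fragments (parity included) equals the lost one.
        frags[missing[0]] = reduce(xor_bytes, [f for f in frags if f is not None])
    return b"".join(frags[:-1])[:original_len]  # drop parity and padding


msg = b"encrypted, fragmented, redundantly stored"
pieces = split_with_parity(msg, k=4)
pieces[2] = None  # simulate one node in the swarm going offline
print(recover(pieces, len(msg)) == msg)  # True
```

With k data fragments and one parity fragment, the storage overhead is 1/k rather than the 100 percent of a full replica, which is the trade-off erasure-style schemes exploit.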

…

Lakehouse shipper Databricks has added a Universal Format (UniForm) to open-source Delta Lake that allows data stored in Delta to be read as if it were Apache Iceberg or Apache Hudi. UniForm provides automatic support for Iceberg and Hudi within Delta Lake 3.0. Apache Iceberg is an open table format for large analytic datasets that supports SQL querying, and query engines such as Spark, Trino, Flink, Presto, Hive, Impala, StarRocks, and others can work on the same tables simultaneously. Iceberg tables are managed by tracking metadata and snapshotting changes.

Apache Hudi (Hadoop Upserts Deletes and Incrementals) is an open-source framework for building transactional data lakes, with processes for ingesting, managing, and querying large volumes of data. Uber developed it in 2016, and Hudi became an Apache Software Foundation top-level project in 2020. It manages the storage of large analytical datasets on distributed file systems (cloud stores, HDFS, or any Hadoop FileSystem-compatible storage) and is a transactional data lake platform that brings database and data warehouse capabilities to the data lake.
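To make "upserts" concrete: an upsert inserts a record if its key is new and updates it otherwise. The minimal Python sketch below is loosely modelled on the idea of a record key plus an ordering field that decides which version of a record wins; it is not Hudi's API, and the field names `id`, `ts`, and `deleted` are hypothetical. Deletes are kept as markers, so a late-arriving older record cannot resurrect a deleted row.

```python
def upsert(table: dict, batch: list, key: str = "id", order: str = "ts") -> dict:
    """Merge a batch of inserts, updates and delete markers into a key -> row table."""
    result = dict(table)
    for row in batch:
        current = result.get(row[key])
        # Late-arriving record older than the stored version: keep the newer one.
        if current is not None and current[order] > row[order]:
            continue
        # New keys are inserted; existing keys are overwritten (updated).
        result[row[key]] = row
    return result


def snapshot(table: dict) -> dict:
    """Rows visible to readers: everything except delete markers."""
    return {k: r for k, r in table.items() if not r.get("deleted")}


t = upsert({}, [{"id": 1, "ts": 1, "fare": 10}, {"id": 2, "ts": 1, "fare": 7}])
t = upsert(t, [
    {"id": 1, "ts": 3, "fare": 12},
    {"id": 1, "ts": 2, "fare": 11},  # arrives late, ignored
    {"id": 2, "ts": 2, "deleted": True},
])
print(snapshot(t))  # {1: {'id': 1, 'ts': 3, 'fare': 12}}
```

A real table format does this merge against files on distributed storage and records each commit so readers see a consistent snapshot; the dictionary here only stands in for that.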

…

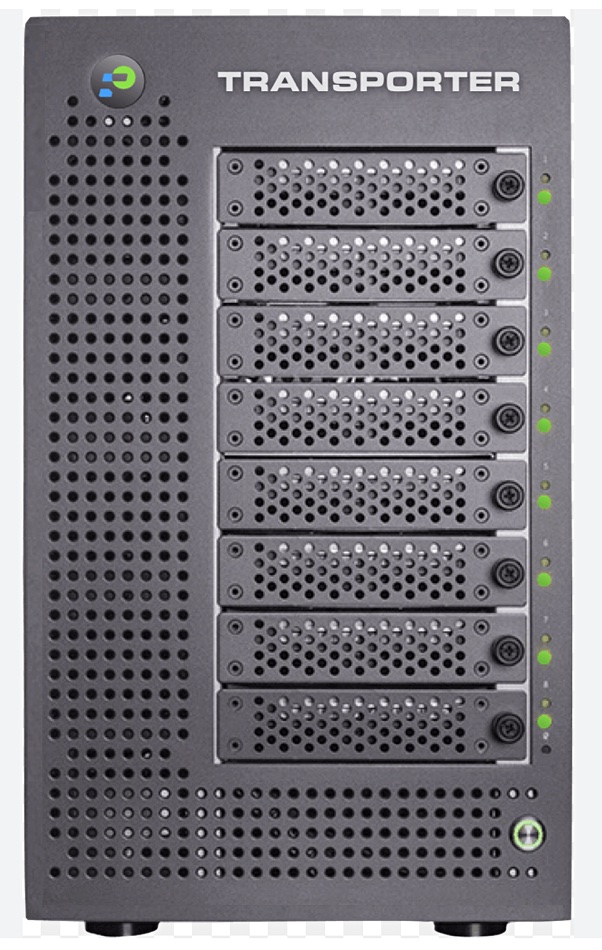

DataCore says its Perifery Transporter is now shipping. The purpose-built physical media appliance, we’re told, provides local nearline storage and intelligently initiates long-term archiving by transferring content from remote and on-set locations to on-premises and cloud facilities. The content is fully encrypted and protected, even during transport, providing end-to-end data security. Automated preprocessing capabilities on the edge appliance save significant time and effort, eliminating the need for expensive, complex servers and other hardware, DataCore says.

…

Singapore-based DapuStor, a provider of high-end enterprise and data center SSD storage products, and Taknet Systems, a distributor of IT products and services in the APAC region, have announced a strategic partnership to offer enterprise SSD storage systems in the APAC market.

…

Multi-cloud data security supplier Fortanix has released Fortanix Confidential Data Search, which it describes as a high-performance product that supports highly scalable searches of encrypted databases holding sensitive data, without compromising data security or breaching privacy regulations. Fortanix claims it is thousands of times faster than current technologies, and it supports off-the-shelf databases to speed adoption.

…

NetApp has updated BlueXP, its management control plane for on-premises and public cloud NetApp storage arrays and instances. BlueXP backup and recovery provides a single control plane that simplifies building customized backup strategies on a workload-by-workload basis. It also supports application-consistent backups of database deployments in the major clouds, using either NetApp software-defined storage or hyperscaler-native storage offerings, such as Oracle databases on Amazon FSx for NetApp ONTAP.

NetApp says its storage and data services can be deployed in highly secure, compliance-sensitive environments, including government clouds and “dark sites” fully isolated from internet connectivity, using BlueXP’s private and restricted deployment modes. Cloud Insights Federal Edition is now available for FedRAMP deployments, and Cloud Volumes ONTAP (CVO) is available in the AWS Marketplace for the U.S. Intelligence Community (IC).

…

Quantum says its backup storage portfolio is now qualified with Veeam Backup & Replication (VBR) V12. Its Veeam Ready storage products include ActiveScale object storage, which offers immutable object locking and versioning, and Quantum has integrated Veeam’s Smart Object Storage API (SOSAPI) into ActiveScale. Quantum’s DXi physical and virtual backup appliances integrate with the Veeam Data Mover Service (VDMS), and its Scalar tape libraries provide physically air-gapped storage to protect against ransomware and other cyberthreats.

Quantum’s Myriad all-flash file and object storage platform is designed for rapid recovery of mission-critical data and is expected to give Veeam customers another option for minimising their recovery time objective (RTO) and recovery point objective (RPO). Quantum intends to apply for the Veeam Ready program once Myriad becomes generally available later this year.

…

RisingWave Labs, a startup founded in 2021, has announced general availability (GA) of RisingWave Cloud, its fully managed SQL stream processing platform built on a cloud-native architecture. RisingWave Cloud consumes streaming data, performs incremental computations as new data arrives, and updates results dynamically, at a fraction of the cost of Apache Flink, the company says.

RisingWave is built in Rust and optimized for high-throughput, low-latency stream processing in the cloud. It guarantees data consistency and service reliability using checkpointing and a shared-nothing architecture. It has its own native storage for persisting data and serving user-initiated queries, and offers dozens of connectors to other open standards and computing frameworks, letting users connect freely to external systems.
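The core idea behind incremental stream processing is that each new event updates stored state in constant time instead of triggering a recomputation over all the data seen so far, while periodic checkpoints capture that state so it can be restored after a failure. The Python sketch below illustrates this with a per-key running average. It is a hypothetical toy, not RisingWave's API or internals; a real engine persists checkpoints durably and must also handle updates and deletes to its input.

```python
class RunningAverage:
    """Per-key average maintained incrementally from a stream of events."""

    def __init__(self):
        self.state = {}  # key -> (running sum, count)

    def on_event(self, key, value):
        # Constant-time update of stored state; nothing is rescanned.
        total, count = self.state.get(key, (0, 0))
        self.state[key] = (total + value, count + 1)
        return self.result(key)

    def result(self, key):
        total, count = self.state[key]
        return total / count

    def checkpoint(self):
        # Tuples are immutable, so a shallow copy is a consistent snapshot.
        return dict(self.state)

    def restore(self, snapshot):
        self.state = dict(snapshot)


avg = RunningAverage()
avg.on_event("eu-west", 10)
print(avg.on_event("eu-west", 20))  # 15.0
snap = avg.checkpoint()
print(avg.on_event("eu-west", 90))  # 40.0
avg.restore(snap)  # e.g. after a simulated failure
print(avg.result("eu-west"))  # 15.0
```

Keeping a sum and a count, rather than the average itself, is what makes the update incremental: the average can be derived at any time without revisiting earlier events.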

…

SANBlaze has announced the availability of its SBExpress-DT5, a turnkey PCIe 5.0 NVMe SSD validation test system. It works with the company’s Certified by SANBlaze Test Suite to bring enterprise-class NVMe validation to the developer’s desktop. The system is self-contained, quiet, and portable, making it suitable for working from home or taking to customer sites, the vendor says.

…

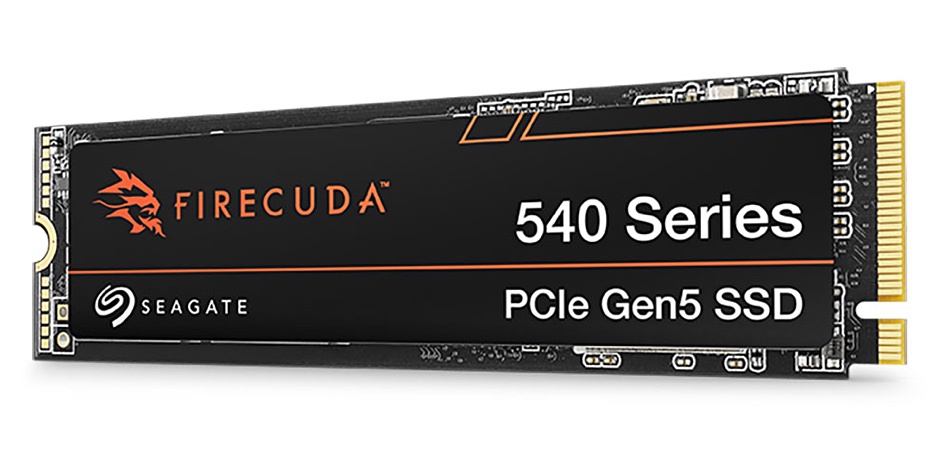

Seagate has formally announced its FireCuda 540 M.2 gaming SSD after many of its details leaked last week. Maximum capacity is 2TB, not the 4TB suggested by the leaked information. Sequential read and write speeds are both up to 10,000 MBps. The drive has a 1.8 million-hour MTBF rating and a five-year warranty, and three years of Seagate’s data recovery services are included.
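To put the headline figures in context, the quick calculation below works out the best-case time to fill the 2TB drive at the quoted sequential write speed, and the annualized failure rate implied by the MTBF under the usual constant-failure-rate assumption. It assumes decimal units, as used on drive spec sheets, and that the MTBF is quoted in hours; sustained write speeds on consumer SSDs are typically lower once the drive's cache fills.

```python
import math

CAPACITY_BYTES = 2 * 10**12       # 2TB in decimal units
SEQ_WRITE_BPS = 10_000 * 10**6    # 10,000 MBps peak sequential write
MTBF_HOURS = 1_800_000            # assumed to be hours
HOURS_PER_YEAR = 24 * 365

# Best case: the whole drive written at the peak sequential rate.
fill_seconds = CAPACITY_BYTES / SEQ_WRITE_BPS

# Constant failure rate model: AFR = 1 - exp(-hours per year / MTBF).
afr = 1 - math.exp(-HOURS_PER_YEAR / MTBF_HOURS)

print(f"Best-case time to fill the drive: {fill_seconds:.0f} s")  # 200 s
print(f"Implied annualized failure rate: {afr:.2%}")  # 0.49%
```

In other words, a 1.8 million-hour MTBF corresponds to roughly one failure per 200 drives per year across a large population, not to any single drive lasting 200 years.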

…

Research house TrendForce says High Bandwidth Memory (HBM) is emerging as the preferred way to overcome the memory transfer speed restrictions imposed by the bandwidth limitations of DDR SDRAM in high-speed computation. HBM is recognized for its transmission efficiency and plays a pivotal role in letting core computational components operate at full capacity. Top-tier AI server GPUs have set a new industry standard by primarily using HBM. TrendForce forecasts that global HBM demand will grow by almost 60 percent year-on-year in 2023 to reach 290 million GB, followed by a further 30 percent growth in 2024.
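TrendForce's percentages can be turned into rough absolute figures. The arithmetic below treats "almost 60 percent" as exactly 60 percent, so the implied 2022 figure is approximate (slightly understated), and converts gigabytes to petabytes using decimal units, where 1 PB is 1,000,000 GB. On those assumptions, demand goes from about 181 PB in 2022 to 290 PB in 2023 and about 377 PB in 2024.

```python
DEMAND_2023_GB = 290e6   # TrendForce forecast for 2023
GROWTH_2023 = 0.60       # "almost 60 percent", treated as exactly 60 here
GROWTH_2024 = 0.30
GB_PER_PB = 10**6        # decimal units: 1 PB = 1,000,000 GB

# Back out the 2022 base and project 2024 from the 2023 forecast.
implied_2022_gb = DEMAND_2023_GB / (1 + GROWTH_2023)
projected_2024_gb = DEMAND_2023_GB * (1 + GROWTH_2024)

for year, gb in [(2022, implied_2022_gb), (2023, DEMAND_2023_GB), (2024, projected_2024_gb)]:
    print(f"{year}: ~{gb / GB_PER_PB:.0f} PB")
```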