Durham University’s DiRAC supercomputer is getting composable GPU resources to model the evolution of the universe, courtesy of Liqid and its Matrix composability software.

The DiRAC (Distributed Research utilising Advanced Computing) supercomputing operation at the UK's Durham University already uses Spectra Logic tape libraries and Rockport switchless networking, so Liqid joins a list of suppliers taking advantage of the university's largesse.

Liqid has put out a case study about selling its Matrix composable system to Durham so researchers can get better utilization from their costly GPUs.

Durham University is part of the UK's DiRAC infrastructure and houses COSMA8, the eighth iteration of the COSmology MAchine (COSMA), which the Institute for Computational Cosmology (ICC) operates as a UK-wide facility. Specifically, Durham provides researchers with the large-memory configurations needed to simulate the universe from the Big Bang, around 14 billion years ago, onwards. Such simulations of dark matter, dark energy, black holes, galaxies and other structures in the universe can take months to run on COSMA8, and can then be followed by long periods of analysis of the results.

More powerful, exascale-class supercomputers are needed. The UK government's ExCALIBUR (Exascale Computing Algorithms and Infrastructures Benefitting UK Research) programme has provided £45.7 million ($55.8 million) in funding to investigate new hardware components with potential relevance for exascale systems. Enter Liqid.

Some of the compute work at Durham needs GPUs. If those GPUs are physically installed in particular servers, they can be tied up by, and dedicated to, specific jobs, and then stand idle because new jobs start on other servers that have fewer GPUs.

Utilization of these expensive GPUs can therefore be low, and the problem will get worse at exascale, where the workload consists of many large, partially overlapping jobs. More GPUs will be needed, yet their utilization will remain low, driving up power and cooling costs. At least, that is the fear.

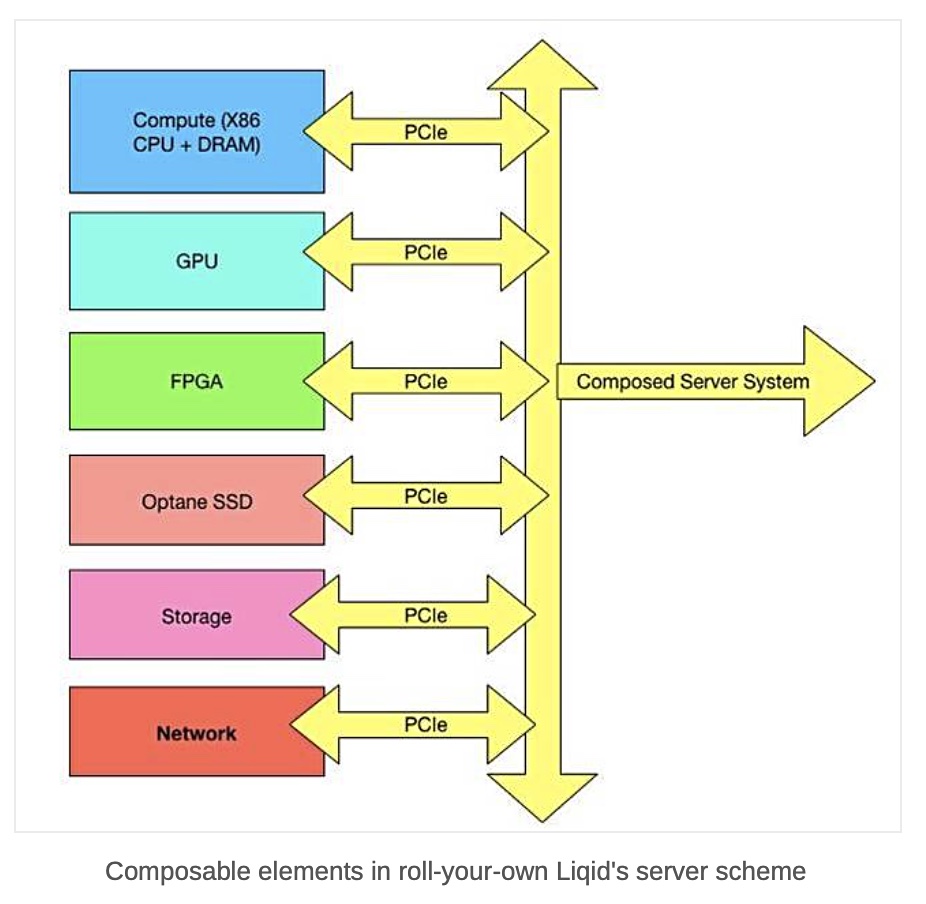

Liqid's idea is that GPUs and other server components, such as CPUs, FPGAs, accelerators, PCIe-connected storage, Optane memory, network switches and, in future, DRAM over CXL, can all be virtually pooled. Software can then dynamically build server configurations optimized for specific applications by pulling resources out of the pools, and return those resources for re-use when a job completes. The aim is to drive up the utilization of each individual resource.
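To make the utilization argument concrete, here is a toy model contrasting GPUs fixed inside servers with GPUs drawn from a shared pool. It is a minimal sketch, assuming a single pool of identical GPUs and ignoring PCIe fabric topology, attach latency and everything else a real CDI product handles. The names `GpuPool`, `compose`, `release`, `static_fits` and the server labels are hypothetical illustrations, not Liqid Matrix's API.

```python
from dataclasses import dataclass, field


@dataclass
class GpuPool:
    """Toy composable GPU pool (hypothetical model, not Liqid's API)."""
    free: int
    attached: dict[str, int] = field(default_factory=dict)

    def compose(self, server: str, count: int) -> bool:
        """Attach `count` GPUs from the pool to `server` if enough are free."""
        if count > self.free:
            return False
        self.free -= count
        self.attached[server] = self.attached.get(server, 0) + count
        return True

    def release(self, server: str) -> int:
        """Return all of `server`'s GPUs to the pool when its job finishes."""
        count = self.attached.pop(server, 0)
        self.free += count
        return count


def static_fits(installed: dict[str, int], server: str, count: int) -> bool:
    """With GPUs fixed in servers, a job can only use its own server's GPUs."""
    return installed.get(server, 0) >= count


# Hypothetical example: four GPUs in total.
# Static case: two GPUs sit in each of two fat nodes.
installed = {"fat1": 2, "fat2": 2}
print(static_fits(installed, "fat1", 4))  # False: fat2's GPUs are stranded

# Composable case: the same four GPUs live in a shared pool.
pool = GpuPool(free=4)
print(pool.compose("fat1", 4))  # True: all four GPUs go to fat1
pool.release("fat1")            # job done, GPUs go back to the pool
print(pool.compose("login", 1))  # True: one GPU moved to the login node
```

In the static case a four-GPU job is refused even though four GPUs exist, because two of them are stranded in another server; in the pooled case the same hardware serves it, then frees the GPUs for the next request.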

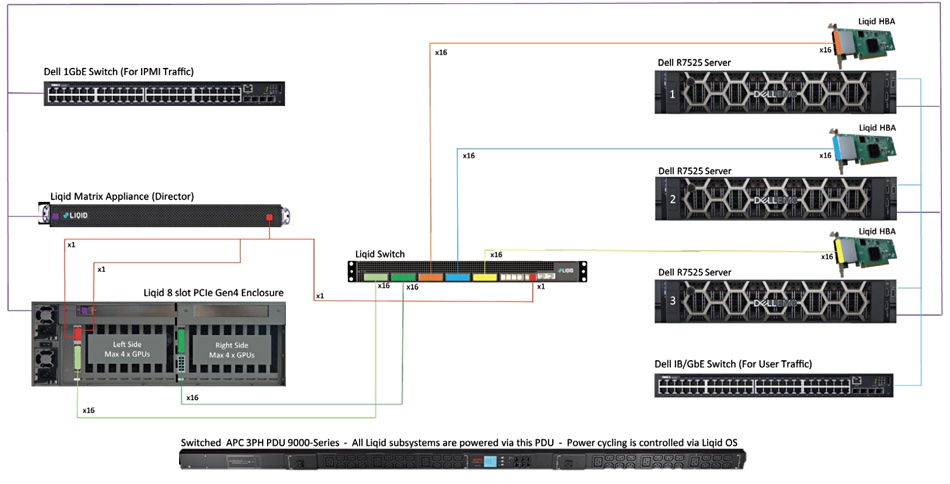

Alastair Basden, technical manager for the DiRAC Memory Intensive Service at Durham University, was introduced to the concept of composable infrastructure by Dell representatives. He uses Liqid's composable disaggregated infrastructure (CDI) software to compose NVIDIA A100 GPUs into servers, which lets researchers request, and receive, exactly the number of GPUs they need.

Basden said: “Most of our simulations don’t use GPUs. It would be wasteful for us to populate all our nodes with GPUs. Instead, we have some fat compute nodes and a login node, and we’re able to move GPUs between those systems. Composing our GPUs gives us flexibility with a smaller number of GPUs. We can individually populate the servers with one or more GPUs as required at the click of a button.”

“Composing our GPUs can improve utilisation, because now we don’t have a bunch of GPUs sitting idle,” he added.

Basden has noticed more researchers exploring artificial intelligence, expects their workloads to need more GPUs, and says Liqid's system will make it straightforward to add them: "When the demand for GPUs grows, we have the infrastructure in place to support more acceleration in a very flexible configuration."

He is also interested in DRAM pooling, with memory accessed over CXL links running on PCIe gen 5. Each of COSMA8's 360 main compute nodes at Durham is configured with 1TB of RAM. Basden said: "RAM is very expensive – about half the cost of our system. Some of our jobs don't use RAM very effectively – one simulation might need a lot of memory for a short period of time, while another simulation doesn't require much RAM. The idea of composing RAM is very attractive; our workloads could grab memory as needed."

“When we’re doing these large simulations, some areas of the universe are more or less dense depending on how matter has collapsed. The very dense areas require more RAM to process. Composability lets resources be allocated to different workloads during these times and to share memory between the nodes. As we format the simulation and come to areas that require more RAM, we wouldn’t have to physically shift things around to process that portion of the simulation.”

Liqid thinks all multi-server data centres should employ composability. If you run one where PCIe-, Ethernet- or InfiniBand-connected components such as GPUs have low utilization rates (say 20 percent or so), it says you should consider giving composability a try.