Rockport Networks’ flat, switchless network is interconnecting compute nodes and storage systems in ExCALIBUR, the UK’s DiRAC exascale supercomputer test environment.

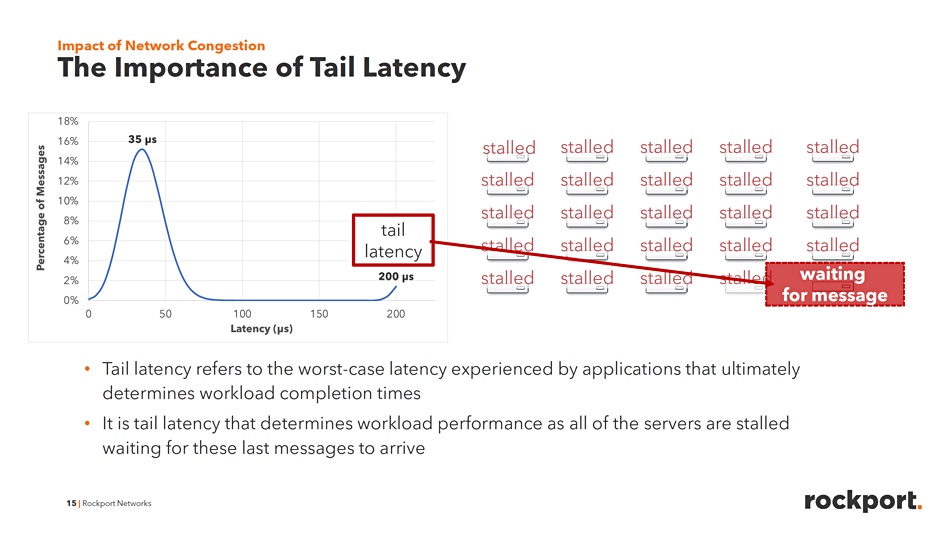

Rockport believes that hierarchical, leaf-spine switched networks are inherently limited at scale and prone to switch port congestion and tail latency, both of which lengthen overall application run times.

DiRAC is the UK’s tier 1 national supercomputer facility for theoretical modeling and HPC-based research in particle physics, astronomy, and cosmology. It is distributed across Cambridge, Durham, Edinburgh, and Leicester University sites, with the COSMA6 and COSMA7 massively parallel systems at Durham’s Institute for Computational Cosmology (ICC) focused on memory-intensive applications.

Dr Alastair Basden, technical manager of the COSMA HPC Cluster for DiRAC at Durham University, said: “Based on the results and our first experience with Rockport’s switchless architecture we were confident in our choice to improve our exascale modeling performance – all supported by the right economics.”

Because it eliminates layers of switches, Rockport says its technology ensures compute and storage resources are no longer waiting for data, and researchers have more predictability regarding workload completion time.

The COSMA systems

COSMA (DiRAC’s COSmology MAchine) has gone through several generations. COSMA6 has about 600 x86 nodes, each with 128GB of RAM and 16 cores (2 x Xeon E5-2670 @2.60GHz), or 8GB DRAM/core, linked by FDR InfiniBand in a 2:1 blocking configuration. There is 2.6PB of Lustre storage capacity.

COSMA7 has 452 compute nodes, each with 512GB of DRAM and 28 cores (2 x Xeon Gold 5120 @2.20GHz), or about 18GB DRAM/core, with EDR InfiniBand connectivity, also in a 2:1 blocking configuration. It has Dell storage (MD controllers and JBODs) configured as 3.1PB of data capacity and 440TB of fast IO checkpointing NVMe storage capacity. There is also 28PB of Spectra LTO-8 tape library capacity.
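The per-core memory figures quoted for the two systems can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: the `Cluster` class and its field names are made up for illustration, COSMA6's node count is the article's approximate "about 600", and totals assume decimal units (1TB = 1000GB).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    """Hypothetical container for the node figures quoted in the article."""
    name: str
    nodes: int
    dram_gb_per_node: int
    cores_per_node: int

    @property
    def dram_gb_per_core(self) -> float:
        # Memory available to each core if DRAM is shared evenly.
        return self.dram_gb_per_node / self.cores_per_node

    @property
    def total_dram_tb(self) -> float:
        # Aggregate DRAM in decimal terabytes (assumption: 1 TB = 1000 GB).
        return self.nodes * self.dram_gb_per_node / 1000


COSMA6 = Cluster("COSMA6", nodes=600, dram_gb_per_node=128, cores_per_node=16)
COSMA7 = Cluster("COSMA7", nodes=452, dram_gb_per_node=512, cores_per_node=28)

for c in (COSMA6, COSMA7):
    print(f"{c.name}: {c.dram_gb_per_core:.1f} GB/core, "
          f"roughly {c.total_dram_tb:.0f} TB of DRAM in total")
```

On these numbers COSMA7 has fewer nodes than COSMA6 but roughly three times the aggregate DRAM.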

The high DRAM capacity makes the COSMA systems suitable for large-scale cosmological simulations. Such simulations typically generate a large amount of inter-node traffic, as progress in one part of a simulation is used to update another. In general, the larger the number of nodes, the more inter-node traffic there will be. It is anticipated that exascale computing systems will have many times more nodes than COSMA6 and 7. Any InfiniBand port congestion and long-tail latency will be worse when the code runs on thousands of nodes in an exascale system.

Rockport CTO Matt Williams said: “It’s all about controlling worst-case performance. In highly parallel applications the party doesn’t start until the last guest arrives.”
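Williams’ point can be illustrated with a toy model. The sketch below is not Rockport's or DiRAC's analysis: it assumes each node independently has a small chance of being delayed by congestion in a given synchronised step, and the 0.1 percent figure is an arbitrary, hypothetical value. Under those assumptions a step takes as long as its slowest node, and the chance that at least one node is slow grows quickly with node count.

```python
def step_time(node_times: list[float]) -> float:
    """A synchronised step finishes only when the slowest node finishes."""
    return max(node_times)


def prob_step_delayed(nodes: int, p_slow: float) -> float:
    """Probability that at least one of `nodes` nodes is slow in a step.

    Assumes every node is slow independently with probability `p_slow`,
    which is a simplification; real congestion is usually correlated.
    """
    return 1.0 - (1.0 - p_slow) ** nodes


# One straggler sets the pace for the whole step.
print(step_time([1.0, 1.0, 1.0, 3.0]))

# Hypothetical 0.1% chance of a tail-latency event per node per step.
P_SLOW = 0.001
for n in (16, 224, 452, 5000):
    print(f"{n:>5} nodes: {prob_step_delayed(n, P_SLOW):.1%} of steps delayed")
```

The node counts 16, 224, and 452 match the DINE, planned Rockport, and full COSMA7 sizes mentioned in the article; 5000 is a stand-in for a much larger exascale-class system.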

ExCALIBUR

ExCALIBUR (Exascale Computing Algorithms and Infrastructures Benefitting UK Research) is a test initiative to prepare the UK HPC community for forthcoming exascale services.

The Rockport network is a distributed, high-performance (300Gbit/sec) interconnect in which every network endpoint can efficiently forward traffic to every other endpoint. It provides pre-wired supercomputer topologies through a standard plug-and-play Ethernet interface.

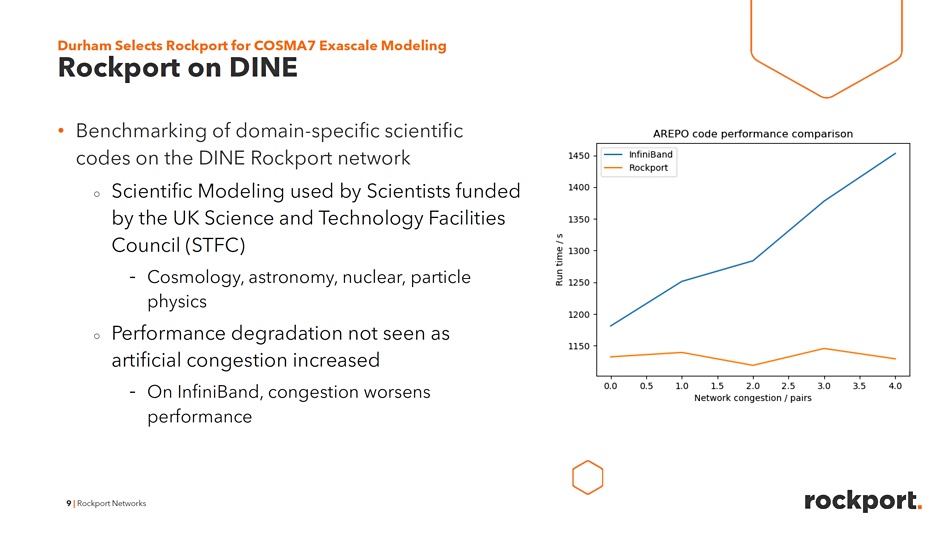

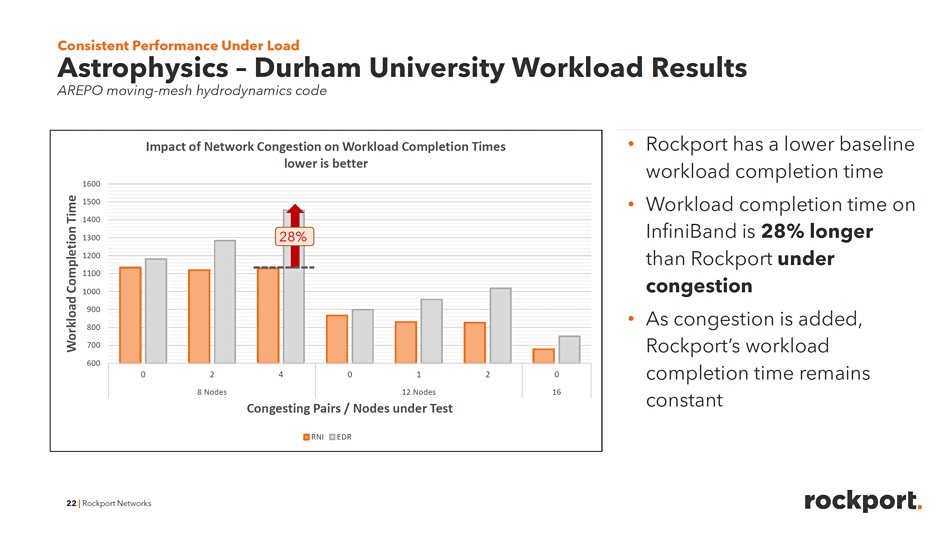

An initial proof-of-concept Rockport deployment was carried out in the 16-node Durham Intelligent NIC Environment (DINE) supercomputer. Tests were run on the InfiniBand and Rockport-connected subsystems with artificially induced network congestion to see what the effects would be. Application run times in the InfiniBand environment were worse than with Rockport.

Adding more congestion drove application run times up on the InfiniBand subsystem but not on the Rockport subsystem. Application completion time was up to 28 percent longer on the InfiniBand nodes than on the Rockport nodes.

As a result, Basden said that the COSMA7 system will be split into InfiniBand and Rockport subsystems for next-stage testing. Some 224 nodes of the COSMA7 system will have their InfiniBand connectivity replaced by the Rockport network. Lustre or Ceph file systems will be directly connected on both fabrics.

Applications (codes in DiRAC-speak) will be run on the modified COSMA7 to compare and contrast run times and network loads for InfiniBand and Rockport-connected nodes to see how they might perform on a full exascale system. Results could come through in July.

Rockport’s Autonomous Network Manager (ANM) will help because it provides a holistic view of an active network, with insight into end-to-end traffic flows. It can be used to troubleshoot network performance in near real time. The Rockport network can absorb additional traffic better than InfiniBand because there are simply more paths from any node to any node across the network. Through ANM, the network admins can see noisy neighbor-type nodes and look to spread their processing or data IO burden across other nodes so as to get rid of high network traffic hot spots.

Comment

The 16-node Rockport proof of concept has delivered promising results. If the superiority of Rockport over InfiniBand at the 224-node level in the next test is confirmed, Rockport should have a terrific chance of being selected for the UK’s exascale supercomputer. That will be a feather in its cap and confirm that Rockport’s switchless networking concept is good for multi-node, distributed enterprise compute and storage environments as well as for HPC shops.

DiRAC stands for Distributed Research Utilising Advanced Computing. DiRAC is a pun on the name of Paul Dirac, a highly regarded English physicist involved with quantum mechanics and electrodynamics.