GigaIO announced yesterday it is using silicon from Microchip in its FabreX PCIe 4.0 network fabric to dynamically compose server systems and share memory, including Optane, between servers.

FabreX is a unified fabric, intended to replace previously separate Ethernet, InfiniBand and PCIe networks. It’s all PCIe, and this lowers overall network latency as there is no need to hop between networks.

GigaIO CEO Alan Benjamin said in a statement: “The implication for HPC and AI workloads, which consume large amounts of accelerators and high-speed storage like Intel Optane SSDs to minimise time to results, is much faster computation, and the ability to run workloads which simply would not have been possible in the past.”

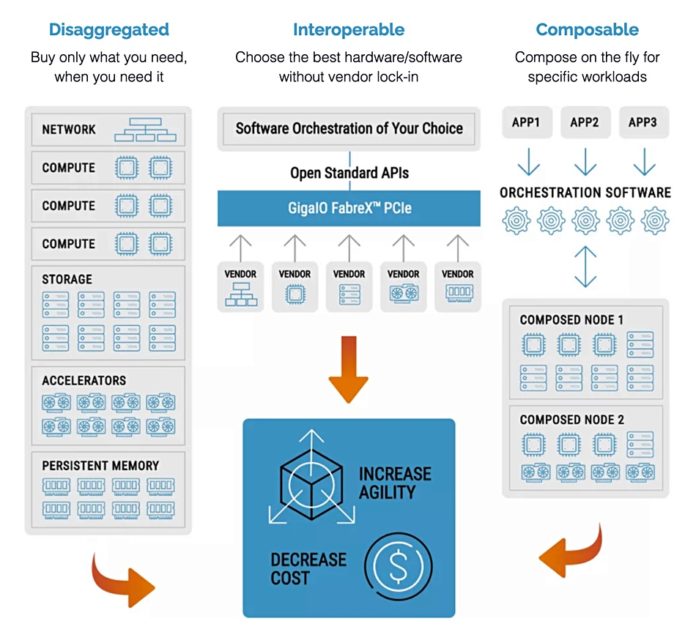

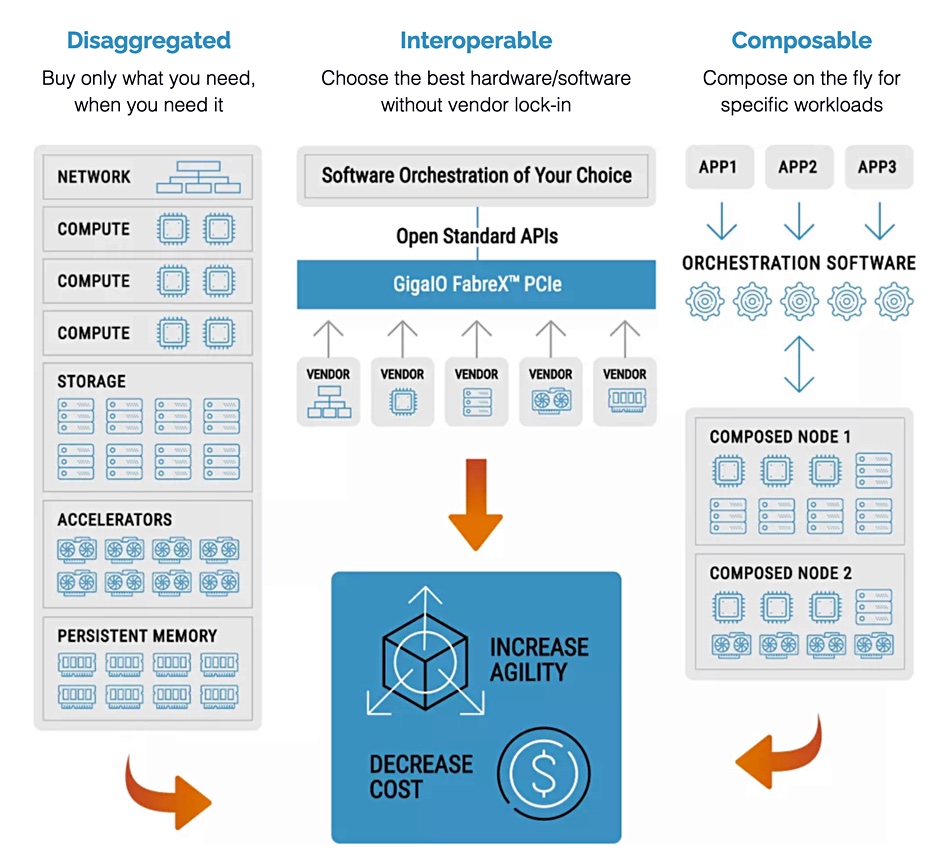

The main rationale for composability is that server-attached resources such as GPUs, FPGAs or Optane Persistent Memory are stranded on their host server and may not be in use much of the time. Making them shared resources lets other servers use them as needed, which improves their utilisation. GigaIO is a small California startup that competes with composability system suppliers such as DriveScale, Fungible and Liqid.

FabreX

FabreX dynamically reconfigures systems and enables server-attached GPUs, FPGAs, ASICs, SoCs, networking, storage and memory (3D XPoint) to be shared as pooled resources between servers in a set of racks. The fabric supports GDR (GPU Direct RDMA), MPI (Message Passing Interface), TCP/IP and NVMe-oF protocols.

GigaIO supports several composability software options to avoid software lock-in. These include open source Slurm (Simple Linux Utility for Resource Management) integration; automated resource scheduling features with Quali CloudShell; and running containers and VMs in an OpenStack-based Cloud with vScaler. The management architecture is based on open standard Redfish APIs.
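
To give a feel for what a Redfish-based management layer looks like to a script, here is a minimal sketch that lists the members of a Redfish resource collection. The `Members` array and `@odata.id` links are part of the Redfish standard; the sample payload, the node names and the `member_uris` helper are hypothetical and are not taken from GigaIO's software.

```python
import json

# Hypothetical payload shaped like a standard Redfish collection response.
# The node names are invented for illustration only.
SAMPLE_COLLECTION = """
{
  "@odata.id": "/redfish/v1/Systems",
  "Name": "Computer System Collection",
  "Members@odata.count": 2,
  "Members": [
    {"@odata.id": "/redfish/v1/Systems/node-1"},
    {"@odata.id": "/redfish/v1/Systems/node-2"}
  ]
}
"""


def member_uris(collection_json: str) -> list[str]:
    """Return the resource URIs referenced by a Redfish collection document."""
    doc = json.loads(collection_json)
    # Each member is a link object whose "@odata.id" holds the resource URI.
    return [member["@odata.id"] for member in doc.get("Members", [])]


if __name__ == "__main__":
    for uri in member_uris(SAMPLE_COLLECTION):
        print(uri)
```

In a real deployment the JSON would come from an authenticated HTTPS GET against the management endpoint rather than from an embedded string.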

Microchip

Microchip provides Switchtec silicon for GigaIO’s PCIe Gen 4 top-of-rack switch appliance. This has 24 non-blocking ports with less than 110ns latency – claimed to be the lowest in the industry. Every port has a DMA (Direct Memory Access) engine. The appliance delivers up to 512Gbits/sec transmission rates per port at full duplex, and will soon scale up to 1,024Gbits/sec with PCIe Gen 5.0.
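
The per-port figures can be checked with some quick arithmetic. The sketch below assumes each port is 16 lanes wide, which the article does not state, and uses the standard PCIe signalling rates of 16GT/s per lane for Gen 4 and 32GT/s for Gen 5. The function name and parameters are illustrative only.

```python
# Raw signalling rate per lane, in gigatransfers per second.
GT_PER_LANE = {4: 16.0, 5: 32.0}

# PCIe Gen 3 and later use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def port_gbits(gen: int, lanes: int = 16, full_duplex: bool = True,
               raw: bool = True) -> float:
    """Estimate port bandwidth in Gbit/s; lanes=16 is an assumption."""
    per_direction = GT_PER_LANE[gen] * lanes
    if not raw:
        # Subtract the line-encoding overhead from the raw bit rate.
        per_direction *= ENCODING_EFFICIENCY
    return per_direction * (2 if full_duplex else 1)


if __name__ == "__main__":
    print(port_gbits(4))                        # 512.0
    print(port_gbits(5))                        # 1024.0
    print(round(port_gbits(4, raw=False), 1))   # 504.1
```

Under that assumption the quoted 512Gbits/sec and 1,024Gbits/sec match the raw full-duplex rate of an x16 link. Usable throughput is lower once line encoding and protocol overhead are taken into account.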

The switch gives each server direct memory access to the system memory of every other server in the cluster fabric, creating what is claimed to be the industry’s first in-memory network. This enables load and store memory semantics across the interconnect.