Nvidia has crafted a direct link between its GPUs and NVMe-connected storage to speed data transfer and processing.

Until now, a GPU has been fed data by the host server's CPU, which fetches it from local or remote storage devices. But GPUs process data so quickly that they can be left waiting for it: an overloaded host simply cannot deliver data fast enough.

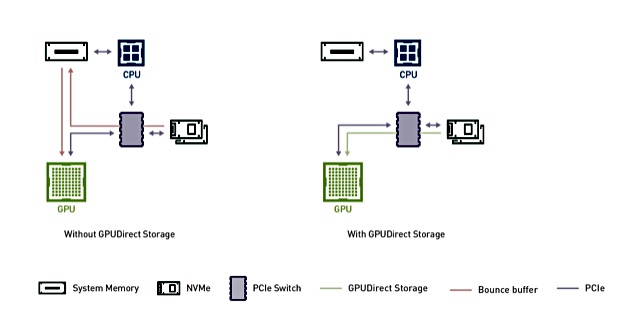

GPUDirect Storage cuts out the host server's CPU and its system memory, which Nvidia calls a bounce buffer. It sets up a direct path between GPU memory, in a DGX-2 system for example, and NVMe-connected storage, including NVMe-over-Fabrics devices.

GPUDirect Storage uses direct memory access (DMA) to move data into and out of GPU memory without staging it in host memory. The GPU has its own DMA engine.
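To make the difference between the two data paths concrete, here is a toy Python model. It is an illustration under stated assumptions, not Nvidia code: plain `bytearray`s stand in for storage, host memory and GPU memory, and the function names `read_via_bounce_buffer` and `read_direct` are hypothetical. The point is that the CPU-mediated path makes two hops and stages every byte in host memory, while the direct path makes one hop and stages nothing.

```python
# Toy model only: bytearrays stand in for storage, host DRAM and GPU memory.
# Nothing here touches a real GPU or the GPUDirect Storage API.

def read_via_bounce_buffer(storage: bytes, gpu_mem: bytearray) -> dict:
    """CPU-mediated path: storage -> host bounce buffer -> GPU memory."""
    host_buf = bytearray(len(storage))
    host_buf[:] = storage                 # hop 1: storage into host memory
    gpu_mem[:len(host_buf)] = host_buf    # hop 2: host memory into GPU memory
    return {"copies": 2, "host_bytes": len(host_buf)}


def read_direct(storage: bytes, gpu_mem: bytearray) -> dict:
    """Direct path: a DMA transfer moves storage data straight into GPU memory."""
    gpu_mem[:len(storage)] = storage      # single hop, no host staging
    return {"copies": 1, "host_bytes": 0}


if __name__ == "__main__":
    data = bytes(range(256)) * 4096       # 1 MiB stand-in for a file
    gpu_a = bytearray(len(data))
    gpu_b = bytearray(len(data))
    print(read_via_bounce_buffer(data, gpu_a))  # {'copies': 2, 'host_bytes': 1048576}
    print(read_direct(data, gpu_b))             # {'copies': 1, 'host_bytes': 0}
```

Both paths deliver identical bytes to GPU memory; the direct path simply removes the intermediate copy and the host-memory traffic it generates.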

The results are promising. For instance, in a blog post the company noted: "NVIDIA RAPIDS, a suite of open-source software libraries, on GPUDirect and NVIDIA DGX-2 delivers a direct data path between storage and GPU memory that's 8x faster than an unoptimised version that doesn't use GPUDirect Storage."

And in a developer blog post Nvidia said: "Whereas the bandwidth from CPU system memory (SysMem) to GPUs in an NVIDIA DGX-2 is limited to 50 GB/sec, the bandwidth from many drives and many NICs can be combined to achieve maximal bandwidth, e.g. over 200 GB/sec in a DGX-2."
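Nvidia's bandwidth argument comes down to simple arithmetic: a transfer staged through host memory can go no faster than its slowest hop, while independent drive and NIC paths feeding GPU memory directly add up. The sketch below assumes ideal, contention-free links. The device counts and per-device rates are made-up illustrative numbers, not DGX-2 specifications; only the 50 GB/sec figure comes from Nvidia's quote, and the helper names are hypothetical.

```python
from typing import Sequence


def aggregate_bandwidth(paths_gbs: Sequence[float]) -> float:
    """Independent paths used in parallel add up (ideal, contention-free case)."""
    return sum(paths_gbs)


def staged_bandwidth(source_gbs: float, sysmem_to_gpu_gbs: float) -> float:
    """A transfer staged through host memory runs no faster than its slowest hop."""
    return min(source_gbs, sysmem_to_gpu_gbs)


SYSMEM_TO_GPU_GBS = 50.0  # DGX-2 SysMem-to-GPU figure quoted by Nvidia

# Hypothetical device counts and per-device rates, chosen only for illustration.
nvme_drives = [6.5] * 16  # 16 drives at 6.5 GB/s each (assumed)
nics = [12.5] * 8         # 8 NICs at 100 Gb/s = 12.5 GB/s each (assumed)

if __name__ == "__main__":
    storage_total = aggregate_bandwidth(nvme_drives + nics)
    bounce = staged_bandwidth(storage_total, SYSMEM_TO_GPU_GBS)
    direct = storage_total  # assumes GPU-side links are not the bottleneck
    print(f"aggregate storage bandwidth: {storage_total:.0f} GB/s")  # 204 GB/s
    print(f"via host bounce buffer:      {bounce:.0f} GB/s")         # 50 GB/s
    print(f"direct to GPU memory:        {direct:.0f} GB/s")         # 204 GB/s
```

With these assumed numbers, the host-memory hop caps throughput at 50 GB/sec however many devices are added. That is why aggregating drives and NICs only pays off once the bounce buffer is taken out of the path.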

Read the developer blog post for many more details.