Startup MemVerge is developing software to combine DRAM and Optane DIMM persistent memory into a single clustered storage pool for existing applications to use with no code changes.

It says it is defeating the memory bottleneck by breaking the barriers between memory and storage, enabling applications to run much faster. They can sidestep storage IO by taking advantage of Optane DIMMs (3D XPoint persistent memory). MemVerge software provides a distributed storage system that accelerates latency and bandwidth.

Data-intensive workloads such as AI, machine learning (ML), big data analytics, IoT and data warehousing can use this to run at memory speed. According to MemVerge, random access is as fast as sequential access with its tech – lots of small files can be accessed as fast as accessing data from a few large files.

The name ‘MemVerge’ indicates converged memory and its patent-pending MCI (Memory Converged Infrastructure) software combines two dissimilar memory types across a scale-out cluster of server nodes. Its system takes advantage of Intel’s Optane DC Persistent memory – Optane DIMMs – and the support from Gen 2 Xeon SP processors to provide servers with increased memory footprints.

MemVerge discussed its developing technology at the Persistent Memory summit in January 2019 and is now in a position to reveal more details.

Technology

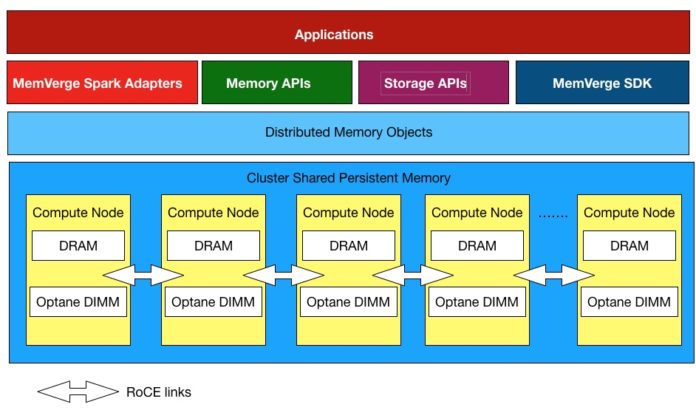

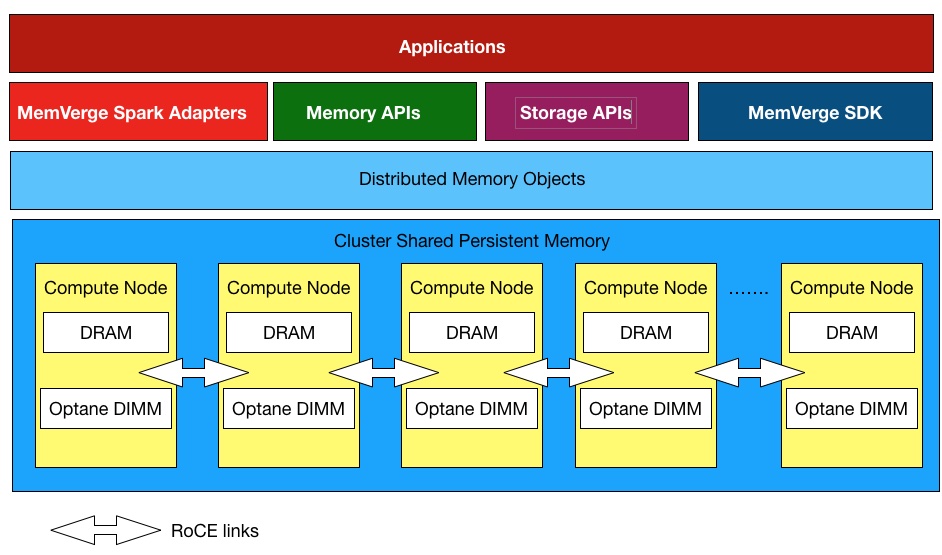

The technology clusters local DRAM and Optane DIMM persistent memory in a set of clustered servers presenting a single pool of virtualised memory in which it stores Distributed Memory Objects (DMOs). Data is replicated between the nodes using a patent-pending algorithm.

MemVerge software has what the company calls a MemVerge Distributed File System (MVFS.)

The servers will be interconnected with RDMA over Converged Ethernet (RoCE) links. It is not a distributed storage memory system with cache coherence.

DMO has a global namespace and provides memory and storage services that do not require application programming model changes. It supports memory and storage APIs with simultaneous access.

Its software can run in hyperconverged infrastructure (HCI) servers or in a group of servers representing an eternal, shared, distributed memory pool; memory constituting the DRAM+ Optane DIMM combination. However, in both cases applications up the stack “see” a single pool of memory/storage.

Charles Fan, CEO, says MemVerge is essentially taking the VSAN abstraction layer lesson and applying it to 3D XPoint DIMMs.

The Tencent partnership, described here, involved 2,000 Spark compute nodes and MemVerge running as their external store. However MemVerge anticipates that HCI will be the usual deployment option.

It’s important to note that Optane DIMMs can be accessed in three ways:

- Volatile Memory Mode with up to 3TB/socket this year and 6TB/socket in 2020. (See note 1 below.) In this mode the Optane is twinned with DRAM and data in the two is not treated as persistent but volatile. (See note 2.)

- Block storage mode in which it is accessed, but not at byte-level, via storage IOs and has a lower latency that Optane SSDs.

- App Direct Mode in which it is byte-accessible using memory semantics by applications using the correct code. This is the fastest access persistent memory mode.

MemVerge’s DMO software uses App Direct Mode but the application software up the stack does not have to change.

Product delivery

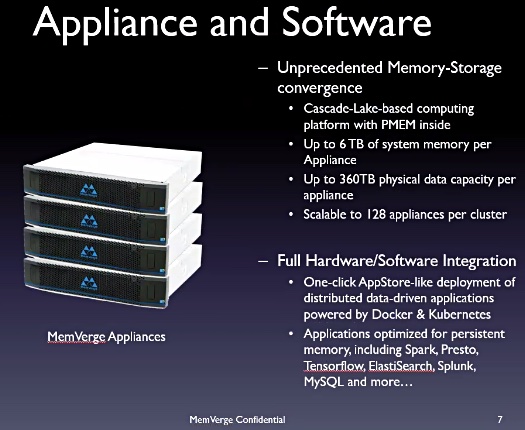

The product can be delivered as software-only or inside an off-the-shelf, hyperconverged server appliance. The latter is based on 2-socket Cascade Lake AP server nodes with up to 512 GB DRAM and 6TB of Optane memory and 360TB of physical storage capacity, provided by 24 x QLC (or TLC) SSDS. There can be 128 such appliances in a cluster, linked with two Mellanox 100GbitE cards/node. The full cluster can have up to 768TB of Optane memory and 50PB of storage.

The nodes are interlinked with RoCE but they can also be connected via UDP or DPDK (a UDP alternative). Remote memory mode is not supported for the latter options.

DRAM can be used as memory or as an Optane cache. A client library provides HDFS-compatible access. The roadmap includes adding NFS and S3 access.

The hyperconverged application software can run in the MemVerge HCI appliance cluster and supports Docker containers and Kubernetes for app deployment. Fan envisages an App Store. and likens this to an iPhone for the data centre.

The software version can run in the public cloud.

Speed features

MemVerge says its MCI technology “offers 10X more memory size and 10X data I/O speed compared with current state-of-the-art compute and storage solutions in the market.”

According to Fan, the 10X memory size idea is based on a typical server today having 512GB DRAM. The 10X data I/O speed claim comes from Optane DIMMs having 100 – 250ns latency while the fastest NVME drive (Optane SSD) has a 10 micro seconds latency.

He says 3D XPoint’s theoretical endurance is in the range of 1 million to 100 million writes (ten to the 6 to ten to the 8), with Intel’s Optane being towards the lower end of that. It is dramatically better than NAND flash with its 100 to 10,000 write cycle range (ten to the 2 and ten to the 4) – this embraces SLC, MLC and TLC flash.

MemVerge background

MemVerge was founded in 2017 by VMware’s former head of storage, CEO Charles Fan, whose team developed VSAN, VMware’s HCI product; chairman Shuki Bruck, the co-founder of XtremIO, and CTO Yue Li. There are some 25 employees.

Fan and Bruck were also involved in founding file virtualization business Rainfinity, later bought by EMC.

The company has announced a $24.5 million Series A funding round from Gaorong Capital, Jerusalem Venture Partners, LDV Partners, Lightspeed Venture Partners and Northern Light Venture Capital.

The MemVerge Beta program is available in June 2019. Visit www.memverge.com to sign up.

Note 1. The jump from 3TB/socket this year to 6TB/socket next year implies either that Optane DIMM density will double or that more DIMMs will be added to a socket.

Note 2. See Jim Handy’s great article explaining this.