The VAST Data system employs a Universal Storage filesystem. It is built on a DASE (Disaggregated Shared Everything) datastore that is byte-granular, thin-provisioned and sharded, and has an infinitely scalable global namespace.

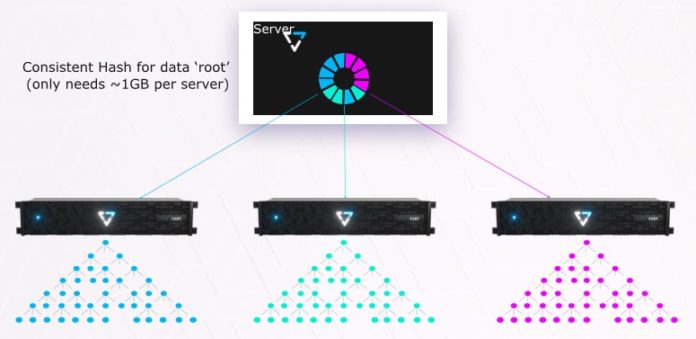

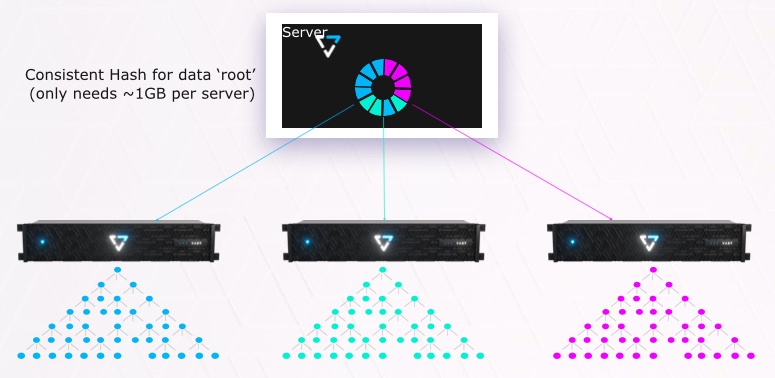

The compute nodes are responsible for so-called element stores.

These element stores use V-tree metadata structures that are seven layers deep, with each layer 512 times larger than the one above it. This V-tree structure is capable of supporting 100 trillion objects. The data structures are self-describing with regard to lock and snapshot states and directories.
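As a rough check on those figures, the sketch below works out how many entries each layer could hold. It assumes a single root entry and a uniform fan-out of 512; neither detail is confirmed by VAST, and the function name is purely illustrative.

```python
FAN_OUT = 512   # each layer is 512 times larger than the one above (from the text)
LAYERS = 7      # V-tree depth (from the text)


def layer_sizes(fan_out: int = FAN_OUT, layers: int = LAYERS) -> list[int]:
    """Return the maximum number of entries per layer, root first.

    Assumes the top layer holds a single entry (an illustrative assumption).
    """
    return [fan_out ** level for level in range(layers)]


sizes = layer_sizes()
for depth, size in enumerate(sizes, start=1):
    print(f"layer {depth}: up to {size:,} entries")

# Under these assumptions the bottom layer alone exceeds 100 trillion entries.
assert sizes[-1] > 100 * 10**12
```

Under these assumptions the seventh layer has room for 512^6 (2^54) entries, comfortably above the 100 trillion objects quoted.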

VAST Data tech evangelist Howard Marks said: “Since the V-trees are shallow finding a specific metadata item is 7 or fewer redirection steps through the V-Tree. That means no persistent state in the compute-node (small hash table built at boot) [and] adding capacity adds V-Trees for scale.”

Each compute node holds a consistent hash that tells it which V-tree to use to locate a data object.
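VAST has not published how its hash is built, so the following is only a generic consistent-hash ring, sketched to show how every compute node could derive the same object-to-V-tree mapping from nothing more than the list of trees. The class name `VTreeRing`, the tree identifiers and the use of SHA-256 with virtual nodes are all assumptions made for illustration.

```python
import bisect
import hashlib


class VTreeRing:
    """Generic consistent-hash ring mapping object keys to V-tree identifiers."""

    def __init__(self, tree_ids, vnodes: int = 64):
        # Each tree gets several points on the ring to even out the distribution.
        self._ring = sorted(
            (self._hash(f"{tree_id}#{i}"), tree_id)
            for tree_id in tree_ids
            for i in range(vnodes)
        )
        self._points = [point for point, _ in self._ring]

    @staticmethod
    def _hash(key: str) -> int:
        # First 8 bytes of SHA-256 as an integer position on the ring.
        return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")

    def tree_for(self, object_key: str) -> str:
        # Walk clockwise to the first ring point past the key's hash.
        idx = bisect.bisect_right(self._points, self._hash(object_key))
        return self._ring[idx % len(self._ring)][1]


ring = VTreeRing(["vtree-0", "vtree-1", "vtree-2"])
print(ring.tree_for("/projects/genomics/sample-001.bam"))
```

Because the ring is a pure function of the tree list, it can be rebuilt at boot on any node, and adding a tree only reassigns the keys that land on the new tree's points.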

The seven-layer depth means V-trees are broad and shallow. Marks said: “More conventional B-tree, or worse, systems need tens or hundreds of redirections to find an object. That means the B-Tree has to be in memory, not across an NVMe-oF fabric, for fast traversal, and the controllers have to maintain coherent state across the cluster. Since we only do a few I/Os we can afford the network hop to keep state in persistent memory where it belongs.”
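The link between fan-out and the number of redirections can be illustrated with a small calculation. This is a sketch of the general principle only: the fan-out values 2 and 16 are arbitrary comparison points, not measurements of any particular B-tree implementation, and `levels_needed` is a hypothetical helper.

```python
def levels_needed(objects: int, fan_out: int) -> int:
    """Smallest number of fan-out steps whose reach covers `objects` entries."""
    levels, reach = 0, 1
    while reach < objects:
        reach *= fan_out
        levels += 1
    return levels


OBJECTS = 100 * 10**12  # 100 trillion objects (from the text)

for fan_out in (2, 16, 512):
    print(f"fan-out {fan_out:>3}: {levels_needed(OBJECTS, fan_out)} steps")
```

With a fan-out of 512 the count is 6 steps, which fits within the "7 or fewer" that Marks describes, while a binary structure would need 47.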

V-trees are shared-everything and there is no need for cross-talk between the compute nodes. Jeff Denworth, product management VP, said: “Global access to the trees and transactional data structures enable a global namespace without the need of cache coherence in the servers or cross talk. Safer, cheaper, simpler and more scalable this way.”

There is no need for a lock manager either, as lock state is read from 3D XPoint.

A global flash translation system is optimised for QLC flash with four attributes:

- Indirect-on-write system writing full QLC erase blocks which avoids triggering device-level garbage collection,

- 3D XPoint buffering ensures full-stripe writes to eliminate flash wear caused by read-modify-write operations,

- Universal wear levelling amortises write endurance to work toward the average of overwrites when consolidating long-term and short-term data,

- Predictive data placement to avoid amplifying writes after an application has persisted data.
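The first two attributes can be pictured with a toy model: small writes gather in a buffer, only whole blocks ever reach flash, and an index redirects each key to its newest location instead of modifying data in place. This is a minimal sketch, not VAST's implementation; the class name, the 16-byte block size and the in-memory lists standing in for 3D XPoint and QLC are all assumptions.

```python
class IndirectWriteLog:
    """Toy indirect-on-write log that only ever writes whole blocks to 'flash'."""

    def __init__(self, block_bytes: int = 16):
        self.block_bytes = block_bytes                 # hypothetical erase-block size
        self.buffer = bytearray()                      # stands in for the 3D XPoint buffer
        self.flash_blocks: list[bytes] = []            # stands in for QLC flash
        self.index: dict[str, tuple[int, int]] = {}    # key -> (log offset, length)

    def write(self, key: str, data: bytes) -> None:
        offset = len(self.flash_blocks) * self.block_bytes + len(self.buffer)
        self.buffer += data
        # Redirect the key to the new copy; the old bytes are never rewritten.
        self.index[key] = (offset, len(data))
        # Flush only when a complete block has accumulated.
        while len(self.buffer) >= self.block_bytes:
            self.flash_blocks.append(bytes(self.buffer[: self.block_bytes]))
            del self.buffer[: self.block_bytes]

    def read(self, key: str) -> bytes:
        offset, length = self.index[key]
        log = b"".join(self.flash_blocks) + bytes(self.buffer)
        return log[offset : offset + length]


log = IndirectWriteLog()
log.write("a", b"0123456789")
log.write("b", b"abcdefghij")
log.write("a", b"NEW-VERSION!")   # overwrite lands in a new location
print(len(log.flash_blocks), log.read("a"))
```

In this model an overwrite never triggers a read-modify-write of an existing block; the superseded bytes simply become garbage for the system itself to reclaim later.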

Distinguishing long-term from short-term data allows long-term data (with low rewrite potential) to be written to erase blocks with limited endurance left. Short-term data with higher rewrite potential can go to blocks with more write cycles left.
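That placement rule can be sketched in a few lines. The `EraseBlock` type, its `cycles_left` counter and the boolean lifetime prediction are hypothetical simplifications; how VAST actually predicts data lifetime is not described here.

```python
from dataclasses import dataclass


@dataclass
class EraseBlock:
    block_id: int
    cycles_left: int  # remaining program/erase cycles (hypothetical counter)


def place(blocks: list[EraseBlock], expect_rewrite_soon: bool) -> EraseBlock:
    """Choose a block by predicted data lifetime and charge it one cycle.

    Short-term data goes to the block with the most endurance left;
    long-term data goes to the most worn block that is still usable.
    """
    usable = [b for b in blocks if b.cycles_left > 0]
    if not usable:
        raise RuntimeError("no erase blocks with endurance left")
    pick = max if expect_rewrite_soon else min
    chosen = pick(usable, key=lambda b: b.cycles_left)
    chosen.cycles_left -= 1
    return chosen


blocks = [EraseBlock(0, 5), EraseBlock(1, 100), EraseBlock(2, 40)]
print(place(blocks, expect_rewrite_soon=False).block_id)  # archive-style data
print(place(blocks, expect_rewrite_soon=True).block_id)   # scratch-style data
```

Steering rarely rewritten data onto worn blocks means those blocks are unlikely to be erased again soon, so their few remaining cycles go further.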

VAST buys the cheapest QLC SSDs it can find for its databoxes, as it does not need device-level garbage collection or wear-levelling and carries out these functions itself.

VAST guarantees its QLC drives will last for 10 years with these ways of reducing write amplification. That lowers the TCO rating.

Remote Sites

NVMe-oF is not a wide-area network protocol as it is LAN distance-limited. That means distributed VAST Data sites need some form of data replication to keep them in sync. The good news, Blocks & Files envisages, is that only metadata and reduced data need to be sent over the wire to remote sites. VAST Data confirms that replication is on the short-term roadmap.
