The A800 is a freshly released Huawei AI storage system designed to accelerate AI training on multi-petabyte datasets, featuring a scale-out architecture that supports 100 million IOPS and petabytes-per-second bandwidth.

Update: PCIe bus and DPU details added. 10 May 2024.

It follows the A310 launch from August last year. The A-series are AI-focused storage arrays and sit alongside the Dorado all-flash arrays and Pacific scale-out hybrid data lake products in Huawei’s OceanStor product line. Huawei first revealed the A800 AI storage system in China in September last year and discussed the product at its Data Infrastructure Forum event in Berlin yesterday.

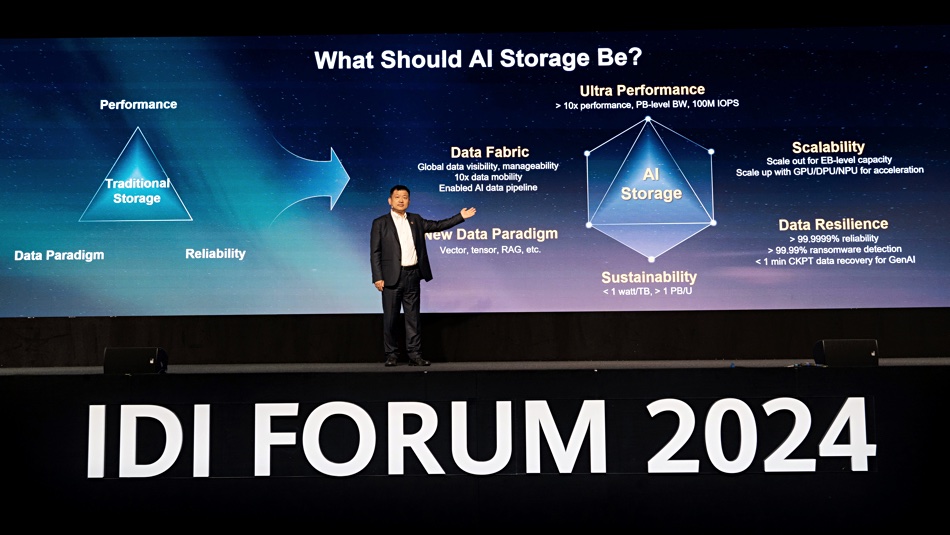

Dr Peter Zhou, Huawei VP and President of Huawei Data Storage Product Line, talked about “Redefining Data Storage in the Data Awakening Era.” He said he expects the future of data storage to be driven by multiple capabilities including ultra performance, data resilience, new data paradigms, scalability, sustainability, and a data fabric.

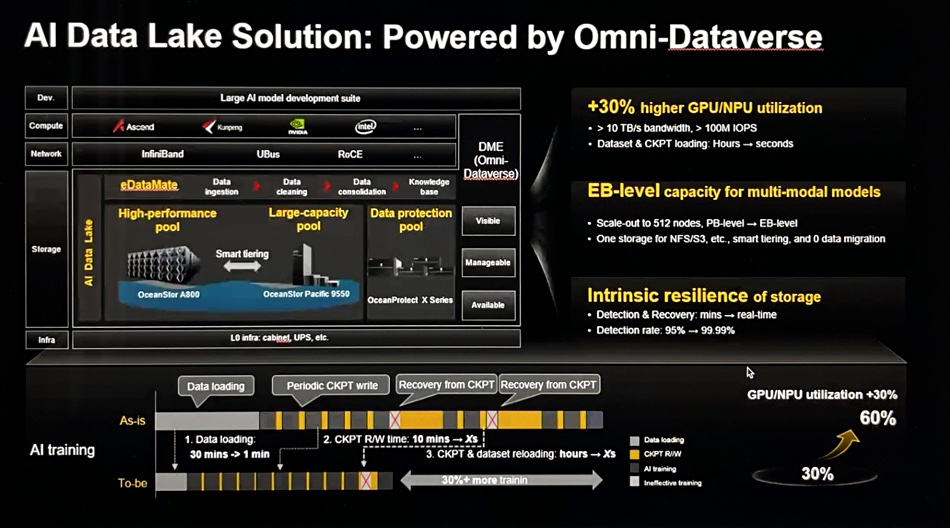

Huawei believes that the industry is entering an AI era in which data storage has to become much more performant and highly scalable, out to the exabyte level, by using new architectures and software.

The A800 is, Huawei says, a next-gen, high-performance NAS storage system. It provides an AI storage power foundation with a data and control plane separation architecture, the OceanFS high-performance parallel file system, flexible bi-directional expansion, and other capabilities, all designed to support real-time hyper-cluster scheduling. OceanFS supports the standard protocols – NFS, SMB, HDFS, S3, POSIX, MPI-IO – for applications. The system has built-in ransomware detection with a 100 percent detection rate in tests run by the Tolly Group.

It has a SmartMatrix Pro data and control plane separation architecture, enabling it to transfer data via interface modules to and from drives, bypassing the bottlenecks created by CPUs and memory. We are told that the A800 uses PCIe gen 5 in the control plane. There is a high-speed direct data path for A800 data flowing from the DPU to the SSD, which bypasses the CPU and memory; only the metadata and other control information are processed by the CPU. Meanwhile, the DPU can be expanded to various near-data computing modules based on different computing power combinations. For example, when the DPU initiates data migration, it performs offload acceleration for security, encryption, and even data preprocessing in near-data (near-storage) processing mode.

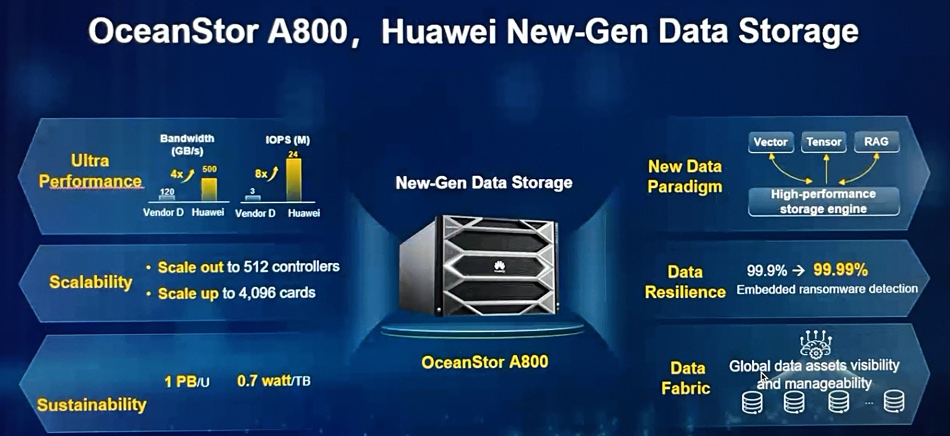

Huawei claims all this enables the A800 to deliver ten times higher performance than traditional storage, at 24 million IOPS per controller enclosure.

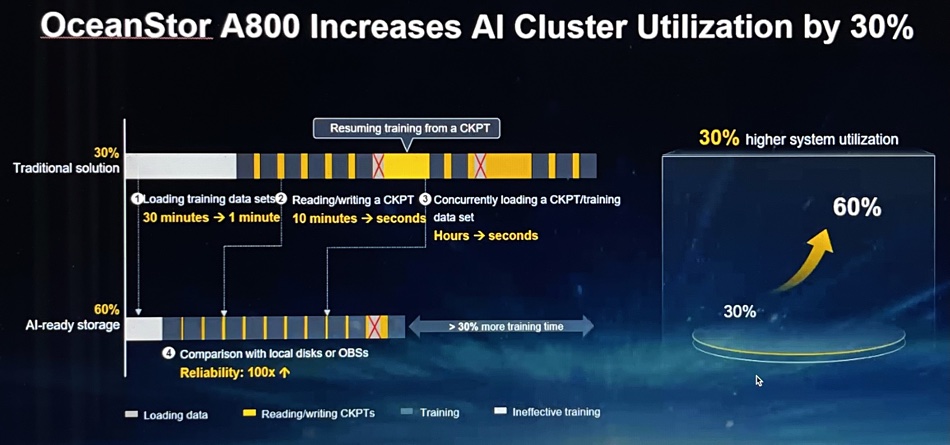

Huawei claims this level of performance enables it to checkpoint long-running AI training jobs in seconds rather than minutes and to restore checkpoints just as quickly, leading to shorter training runs and increased system utilization.
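The reasoning behind the checkpoint claim is essentially checkpoint size divided by sustained write bandwidth. A minimal sketch of that arithmetic follows; the checkpoint size and both bandwidth figures are purely hypothetical inputs chosen for illustration, not Huawei specifications or benchmark results, and the function name is our own.

```python
GB_PER_TB = 1_000  # assumption: decimal units

def checkpoint_seconds(checkpoint_tb: float, write_gb_per_s: float) -> float:
    """Time to write one checkpoint at a sustained write bandwidth."""
    return checkpoint_tb * GB_PER_TB / write_gb_per_s

# Hypothetical 10 TB checkpoint written at two hypothetical bandwidths.
slower = checkpoint_seconds(10, 50)    # 200.0 seconds, i.e. minutes
faster = checkpoint_seconds(10, 500)   # 20.0 seconds

print(f"{slower:.0f} s vs {faster:.0f} s")
```

Because accelerators typically sit idle while a synchronous checkpoint is written, any time saved per checkpoint is multiplied by the number of checkpoints taken over a training run.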

The scale-out A800 can have up to 512 controllers and 4,096 computing cards, taking it to exabyte capacity levels.

A NetApp ONTAP cluster can scale out to 24 dual controller nodes in a NAS configuration, putting the A800’s scalability in a different category altogether. The A800 uses Huawei-designed, Arm-based Kunpeng processors and not x86 CPUs.

The A800 chassis, which looks to be 8RU in size in images, houses two processing shelves, supporting both CPUs and GPUs, and two SSD storage shelves. Each processing shelf can be scaled up with more GPUs, DPUs, or NPUs for near-storage computing. We understand that, as with the A310, node software can provide data pre-processing such as filtering, video and image transcoding, and enhancement.

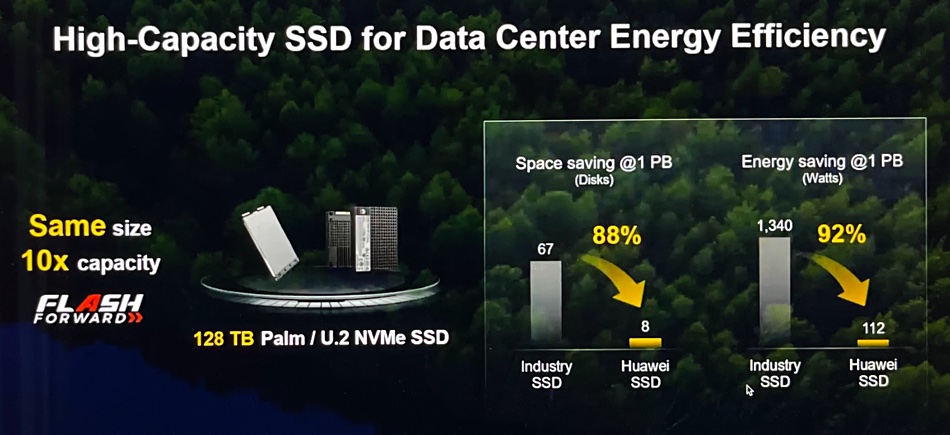

The system supports 30 TB and 50 TB SSDs today and will support 128 TB drives next year.

Michael Fan, Huawei Data Storage marketing VP, told us these will use a mix of TLC and QLC flash to optimize capacity and drive lifetime. They have a Huawei-developed controller and come in U.2 and palm-size physical formats. The palm-size format is thinner than a U.2 SSD and could be either longer or shorter than that format. It appears to be similar to the EDSFF E3.S format but Huawei does not use EDSFF nomenclature.

We don’t know if these 128 TB drives will be available outside Huawei and, in effect, these are proprietary SSDs, like IBM’s FlashCore Modules and Pure’s DirectFlash Modules.

Huawei says the A800 has a storage density of more than 1 PB per rack unit (PB/u) and a 0.7 watt/TB electricity draw.
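As a back-of-the-envelope check of what those two figures imply together, the sketch below multiplies them out. It assumes decimal units (1 PB = 1,000 TB) and that both vendor figures hold at the same time at the 1 PB/U lower bound; the function name is ours and the exabyte line is a simple extrapolation, not a Huawei number.

```python
TB_PER_PB = 1_000  # assumption: decimal units

def power_draw_watts(capacity_pb: float, watts_per_tb: float = 0.7) -> float:
    """Estimated electricity draw for a capacity at the quoted watts-per-TB figure."""
    return capacity_pb * TB_PER_PB * watts_per_tb

per_rack_unit = power_draw_watts(1.0)      # 1 PB in one rack unit
per_exabyte = power_draw_watts(1_000.0)    # 1 EB = 1,000 PB

print(f"about {per_rack_unit:.0f} W per rack unit")
print(f"about {per_exabyte / 1000:.0f} kW per exabyte")
```

On these assumptions a fully dense rack unit would draw roughly 700 W, and an exabyte of capacity roughly 700 kW.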

The A800 has a six nines data reliability measure, we’re told. For AI work, it supports vector indexes, tensor data, and retrieval-augmented generation (RAG). We understand that a vector index is a specialized data structure optimized for efficient vector similarity searches on tensor data, such as embeddings, in data lakes and warehouses like BigQuery.
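To illustrate the operation a vector index is meant to speed up, here is a minimal brute-force similarity search in plain Python. It is a sketch only: the toy embeddings, document names, and function names are hypothetical, and it says nothing about how the A800 implements indexing. A real vector index avoids comparing the query against every stored vector, which is what this version does.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, embeddings, k=2):
    """Return the names of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), name)
              for name, vec in embeddings.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]

# Hypothetical three-dimensional embeddings; real ones have hundreds of dimensions.
embeddings = {
    "doc_a": [1.0, 0.0, 0.0],
    "doc_b": [0.9, 0.1, 0.0],
    "doc_c": [0.0, 1.0, 0.0],
}

print(top_k([1.0, 0.05, 0.0], embeddings))
```

In a RAG pipeline, the documents returned by a search like this are passed to the language model as context for answering the query.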

It has an Omni-Dataverse global file system, namespace, and data fabric built in the Data Management Engine (DME). The DME helps manage mass data for on-demand data access and mobility, and provides data protection. It also uses AI for IT operations (AIOps) to identify potential system risks and forestall problems, such as locating performance issues.

The A800 will be available later this year.

Read a Huawei white paper for more information.