With the dust settling after Peter McKay’s October 2018 departure as Veeam’s co-CEO and President, how has the company changed and where is it going?

A conversation with Ratmir Timashev, co-founder of Veeam’s rocket-ship data protection business and now its EVP for sales and marketing, reveals that, in a way, Veeam has swung back to its roots while taking advantage of the business extension that McKay built.

Building the enterprise business

When McKay became co-CEO and President in May 2017, his brief included building up Veeam’s enterprise business. Veeam already had a successful core transactional business selling to small, medium and mid-level enterprises, which had broken growth records by capitalising on the VMware virtual server wave washing over the industry. Deal sizes were $25,000 to $150,000 and business was consistently booming, but there was a feeling that Veeam was leaving money on the table by not selling to larger enterprises.

That was McKay’s job, and he did it, establishing Veeam as an enterprise supplier and building up its North American operation. He moved quickly, spending money and organisational effort reshaping Veeam. A little too quickly, as it turned out.

Timashev explains that the changes diverted investment away from Veeam’s main business: marketing to core transactional customers suffered, as did inside sales.

So, eighteen months after joining Veeam, McKay left, and a tight triumvirate of three executives resumed operational control: Timashev; co-founder Andrei Baranov, previously CTO and then co-CEO alongside McKay; and Bill Largent, a former CEO who had been a senior board member since May 2017. They are running Veeam in much the same way as they did before McKay’s time.

Enterprise – SME balance

Baranov is now the CEO with Largent the EVP for operations. The three have set up a 70:30 balance scheme for spending and investment.

Seventy per cent goes to the core transactional business, Veeam’s high-velocity business, focussing on TDMs (Technical Decision Makers) in the small, medium and mid-level enterprises.

The remaining thirty per cent goes to EDMs (Executive Decision Makers) in larger enterprises.

Timashev says very few businesses successfully sell across the whole range of small, medium, mid-level and large enterprise customers. It’s hard to do with products and it’s also hard to do with sales and marketing. He cites Amazon and Microsoft as companies that have cleared that high bar.

It’s clear he wants Veeam to be in that category.

Hybrid cloud moves

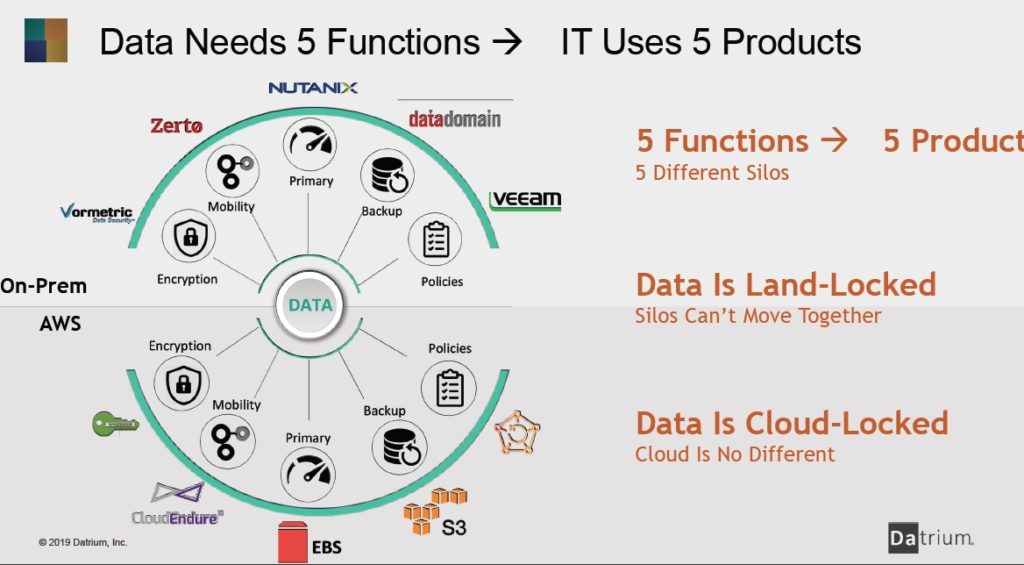

Customers are generally aware of, and pursuing, a hybrid cloud strategy, meaning cloud-style on-premises IT infrastructure combined with the public cloud, and usually more than one public cloud. Their boards and executives have charged IT departments with finding ways to exploit the cost and agility advantages of the public cloud.

For Veeam, as a predominantly on-premises data protection modernisation business, this hybrid cloud move represents an opportunity but also a threat. Get it wrong and its customers could buy data protection services elsewhere.

Timashev said Veeam is pursuing its own hybrid cloud strategy. All new products have a subscription license model. The main data protection product still has a perpetual license model but is also available on subscription.

Subscription licenses can be moved from on-premises to the public cloud at no cost with Veeam Instance Licensing (VIL). VIL licenses are portable: they can protect various workloads across multiple Veeam products, whether on premises, in the public cloud or anywhere in between. Timashev believes Veeam is the only data protection vendor to offer this capability.

Veeam has also added tiering (aka retiring) of older data to the AWS and Azure clouds, or to on-premises object storage. It also has its N2WS offering for backing up AWS EC2 instances and RDS data.

It wants to do more: helping customers migrate to the public cloud if they wish, and providing data mobility between on-premises and public cloud environments.

Veeam’s triple play

Can Veeam, with what we might call the old guard back in control, pull off this triple play: reinvigorating core transactional business growth, building up its enterprise business, and migrating its product, sales and marketing to a hybrid cloud business model that parallels its customers’ movements?

The enterprise data protection business features long-established stalwarts such as Commvault, IBM and Veritas, as well as fast-moving, fast-growing upstarts such as Cohesity and Rubrik, which are moving into data management.

We also have to add SaaS data protection companies like Druva to the mix. Veeam is going to need eyes in the back and sides of its head as well as the front to chart its growth course through this crowded forest of competing suppliers.

Veeam used to describe itself as a hyper-availability company; it will now need to be hyper-agile as it moves ahead. It has the momentum, and we’ll see if it can make the right moves in what is going to be a mighty marketing, sales and product development battle.