Today is the opening day of the Dell Technologies World event in Las Vegas, with wall-to-wall-and-back-again announcements. Before we dive in and get ‘Dell-ified’, here is a set of news items to remind you of life beyond Dell.

We look at Huawei, InfiniteIO, Seagate, Spectrum Scale, StorONE and Tintri, now owned by DDN.

Huawei results

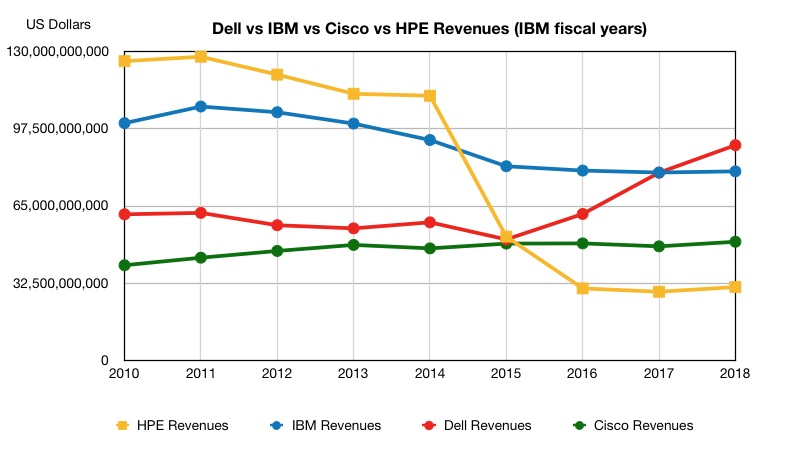

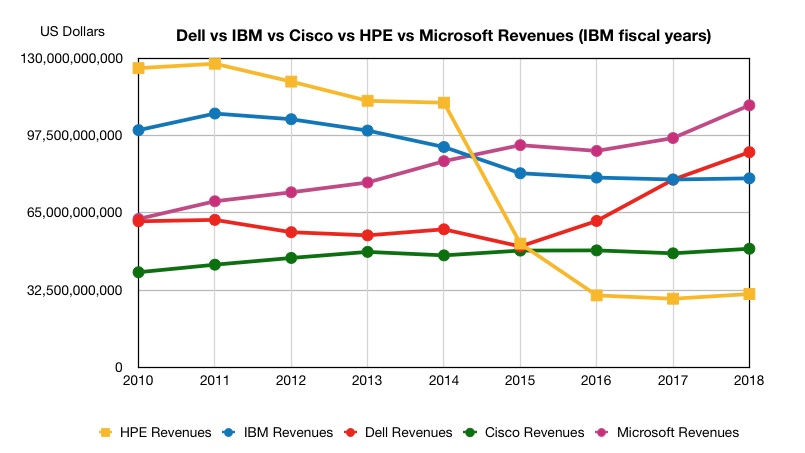

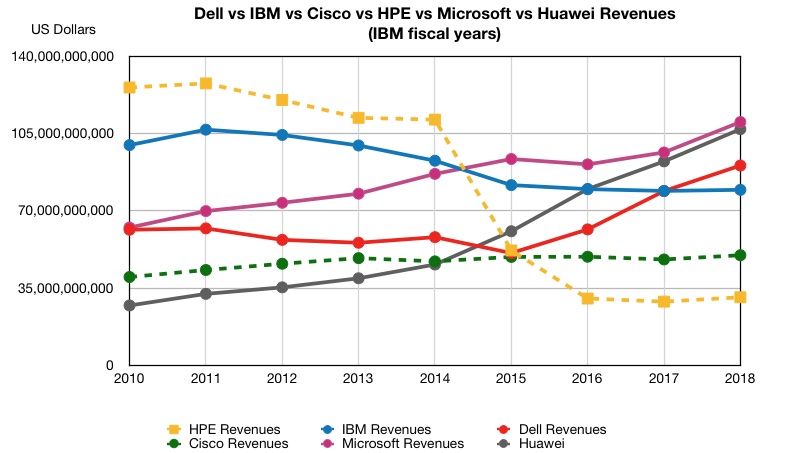

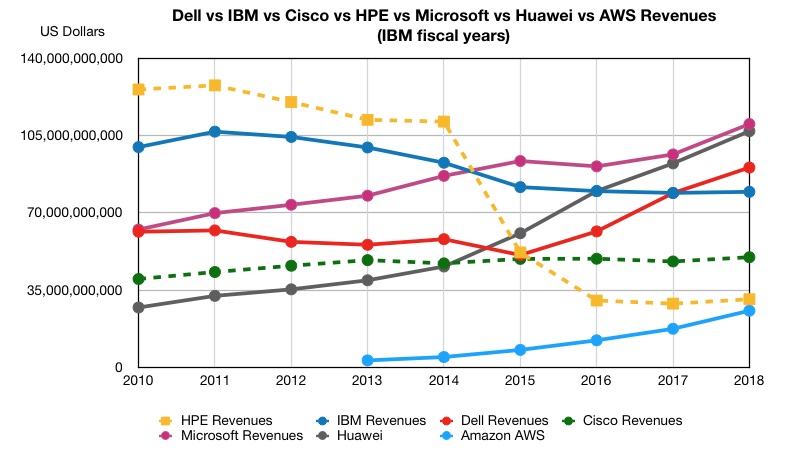

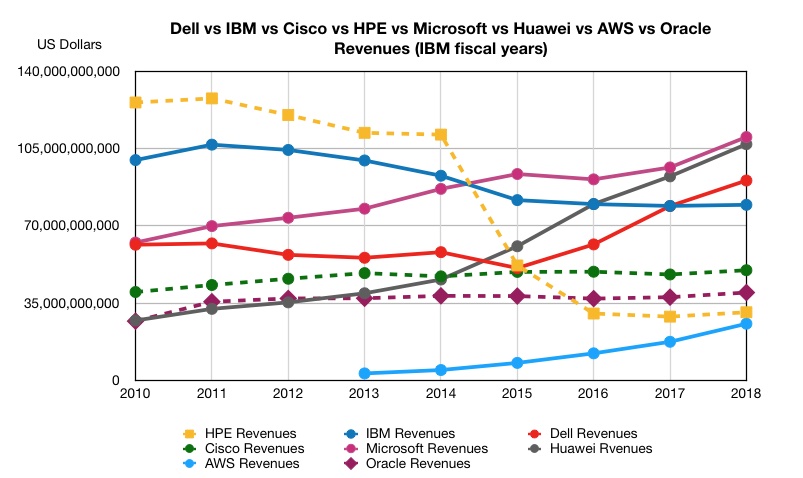

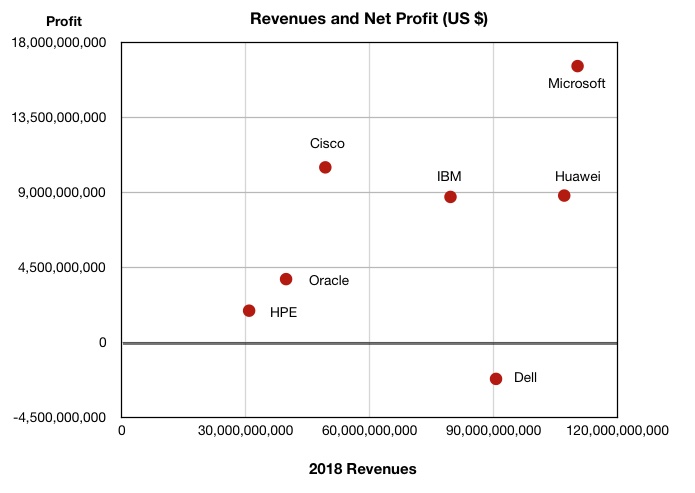

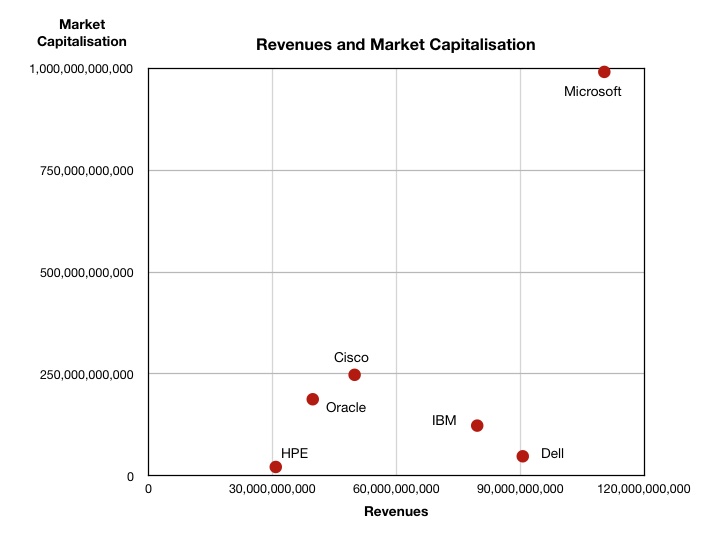

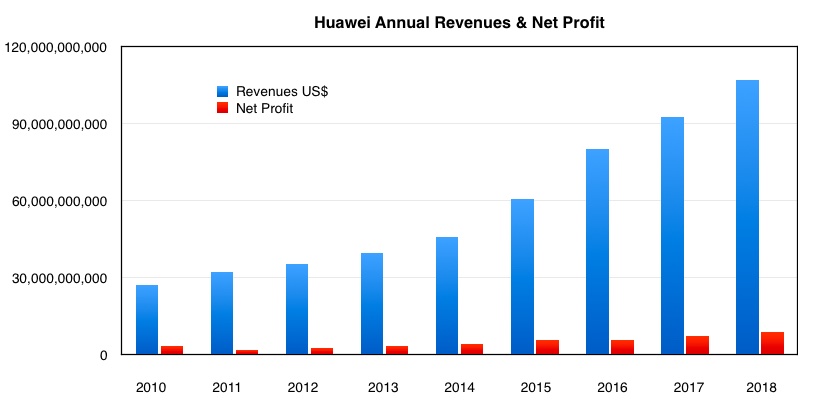

Huawei has become a $100bn-plus business, with revenues of $107.13bn and net income of $8.81bn in 2018. This makes it bigger in revenue terms than Dell and IBM.

Here is a chart showing Huawei’s revenues and net income since 2010:

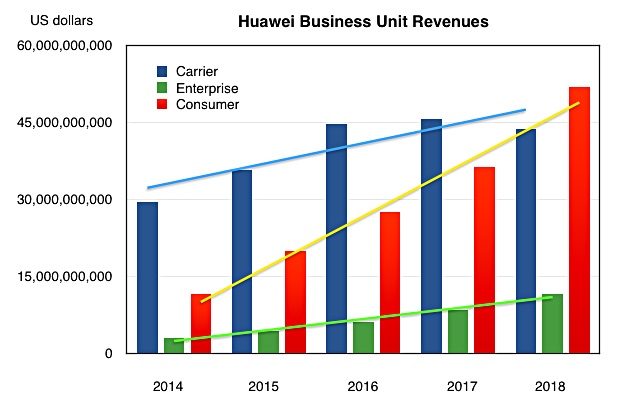

Huawei has three main business units: Carrier, Consumer and Enterprise. Their relative contributions have changed over the years, with consumer revenues now outpacing the company’s original carrier business.

Revenues from the carrier business declined in 2018 from 2017’s $45.7bn, perhaps reflecting the effects of Huawei’s issues with the USA, which claims it is a security risk.

Huawei is unique in combining a telecommunication carrier business, a smartphone operation and an enterprise server/storage product set. It is as if someone combined Apple’s iPhone, HPE’s servers and storage, and elements of both Ericsson and Cisco networking.

If the smartphone business is now mature and the carrier business ex-growth, then Huawei will have to look elsewhere for continued growth. Going by the trendlines on the chart, it is unlikely that the steady but relatively low-growth enterprise business could take up the reins if smartphone revenues have peaked.

InfiniteIO

InfiniteIO has released Infinite Insight, an app that scans files in on-premises NAS arrays and identifies those not accessed for some time.

The free-of-charge app can scan millions of files, and simulates policies based on file size and the last time that files were accessed or modified. It can move inactive files to a private or public cloud tier. The app generates a shareable report on estimated yearly cost savings. InfiniteIO said users can set up the app in accordance with financial and operational policies.

A customisable dashboard provides a view of the user’s file environments.
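To make the idea of an inactivity policy concrete, here is a minimal Python sketch of a scan that flags files by size and by time since last access or modification, then estimates a yearly saving. It is not InfiniteIO's code: the function names, the 180-day default and the per-GB cost parameters are all hypothetical, chosen only for illustration.

```python
import os
import time
from dataclasses import dataclass


@dataclass
class InactiveFile:
    path: str
    size_bytes: int
    days_idle: float


def find_inactive_files(root, min_idle_days=180, min_size_bytes=0, now=None):
    """Walk `root` and return files idle for at least `min_idle_days`.

    A file counts as idle from the later of its last access and last
    modification time. All thresholds are illustrative assumptions.
    """
    now = time.time() if now is None else now
    cutoff_seconds = min_idle_days * 86400
    results = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.stat(path)
            except OSError:
                continue  # file vanished or is unreadable; skip it
            idle_seconds = now - max(st.st_atime, st.st_mtime)
            if idle_seconds >= cutoff_seconds and st.st_size >= min_size_bytes:
                results.append(InactiveFile(path, st.st_size, idle_seconds / 86400))
    return results


def estimated_yearly_saving(files, primary_cost_per_gb_month, cloud_cost_per_gb_month):
    """Rough yearly saving from moving `files` to a cheaper tier (hypothetical prices)."""
    total_gb = sum(f.size_bytes for f in files) / 1e9
    return total_gb * (primary_cost_per_gb_month - cloud_cost_per_gb_month) * 12
```

Calling `find_inactive_files("/mnt/nas", min_idle_days=365)` and passing the result to `estimated_yearly_saving` with two per-GB monthly prices gives a figure of the kind such a report might contain. Note that many file systems are mounted with access-time updates relaxed or disabled, so a real tool would need to account for that.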

InfiniteIO offers hybrid cloud tiering and fast file access products with real-time analytics and metadata management.

You can download the app.

Seagate HAMR head nanophotonics

Seagate is investing £47.4m in its R&D facility in Northern Ireland, and Invest Northern Ireland is tipping in another £10m. The investment will create 25 new jobs among the 120 or so positions involved in the project to research and develop nanophotonics, the study of the behaviour of light at the nanometre scale.

Seagate has carried out research into disk recording heads, such as the Heat-Assisted Magnetic Recording (HAMR) heads it is developing, at its Springtown wafer manufacturing plant in Londonderry, Northern Ireland, since 1994.

Jeremy Fitch, executive director of business solutions, Invest NI, said: “Seagate first came to Northern Ireland in 1994, investing £50 million and creating 500 new jobs. Fast forward 25 years and the facility now employs 1,400 people and it is estimated that the company has invested in excess of £1 billion in capital here.”

Spectrum Scale release

IBM released Spectrum Scale version 5.0.3 last week. New features include cloud services changes with an auto container spillover feature: a new container is automatically created during reconcile when the specified threshold of files is reached. This enables improved maintenance.
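The spillover behaviour can be pictured with a toy Python model. This is only a sketch of the general idea and makes no claim about how Spectrum Scale implements it: the `SpilloverTier` class, its method names and the container naming scheme are all hypothetical.

```python
class SpilloverTier:
    """Toy model: roll over to a new container once a file-count threshold is hit."""

    def __init__(self, base_name, threshold):
        if threshold < 1:
            raise ValueError("threshold must be at least 1")
        self.base_name = base_name
        self.threshold = threshold
        self.active = f"{base_name}-0"
        self.containers = {self.active: []}

    def put(self, filename):
        # New files always land in the currently active container.
        self.containers[self.active].append(filename)

    def reconcile(self):
        """Create a new active container if the current one has reached the threshold.

        Returns True when a spillover container was created.
        """
        if len(self.containers[self.active]) >= self.threshold:
            self.active = f"{self.base_name}-{len(self.containers)}"
            self.containers[self.active] = []
            return True
        return False
```

In this model the check happens only in `reconcile()`, mirroring the statement that the new container is created during reconcile, so a container can briefly hold more files than the threshold between reconcile runs.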

The Microsoft Azure object storage service is supported.

In Spectrum Scale 5.0.3 the watch folder feature, which is based on Apache Kafka technology, has a clustered watch capability. With this, an entire file system, fileset or inode space can be watched.

You can check out a summary of the changes.

StorONE signs up Tech Data

Israeli storage startup StorONE has signed a distribution deal with world-wide distributor Tech Data.

StorONE’s software-defined storage offering is available, along with service and support, through Tech Data. StorONE says its technology can support multiple enterprise functions: reaching breakthrough performance from all-flash array (AFA), ordinary and NVMe SSD hardware; creating high-performance, multi-application secondary storage systems; supplying persistent storage to virtualised environments; and/or using lower-cost commodity components to provide high-capacity, low-footprint systems.

The StorONE product line is hardware-agnostic and Tech Data can sell it bundled with any drive (ordinary and NVMe SSDs, disk drives), any protocol (block, file and object), and in four storage configurations (AFA, high capacity, high performance and virtual machine appliance). It’s available now.

Joe Cousins, VP for global computing components at Tech Data, said: “StorONE’s Software Defined Storage (SDS) offerings…deliver high performance, high capacity and complete integrated enterprise data protection.”

Tintri progress

Tintri, the storage array startup picked up from bankruptcy by DDN in September 2018, said it is making progress in re-establishing its business.

The company, now called Tintri by DDN, is not revealing any numbers, but claimed it had a good first quarter, with a significant uptake from larger-scale enterprise customers, and sales exceeding targets.

Tintri noted a 40 per cent increase in engineering support staff in the quarter and more sales hires. It plans to recruit more staff in all parts of the company this year including go-to-market, engineering and support.

Paul Bloch, co-founder and president of DDN, seems pleased with his acquisition: “2019 is the year of renaissance for Tintri by DDN,” he said in a statement. “…Now it’s time to focus on bringing some Tintri by DDN magic to a broader customer base…We are poised for a breakout year with Tintri products that are uniquely designed to simplify customers’ virtual environments. We are very excited about the Tintri by DDN roadmap.”

Phil Trickovic, Tintri’s president of worldwide sales, also delivered a quote, in which he said Tintri will “expand our predictive analytics and machine learning capabilities. We are also making great progress on the soon-to-be released DB Aware module as well as our expansion into macro/micro data centre management capabilities.”

Tintri has historically been known for its virtual machine-aware management and provisioning of storage. Extending this to database-aware storage sounds interesting and could give Tintri a useful differentiator.

– – – –

That’s our Monday mix completed. Now prepare yourselves for the Dell Technologies news deluge – or Delluge.