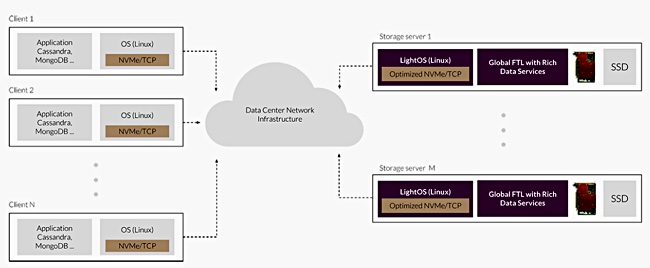

Lightbits Labs has introduced the SuperSSD, an NVMe-connected all-flash array that runs its LightOS software and uses its LightField hardware accelerator cards.

The SuperSSD connects to accessing servers over dual 100GbitE links and standard Ethernet infrastructure using NVMe-over-TCP (NVMe/TCP). There is no need for lossless, converged data centre-class Ethernet, although RDMA over Converged Ethernet (RoCE) is supported.

Physically, it consists of an x86-based controller and 24 hot-swap 2.5-inch NVMe SSDs in a 2U rack shelf unit.

LightOS contributes a global flash translation layer (GFTL) and thin provisioning, with data striped across the SSDs. A RESTful API provides a standard HTTPS-based interface, and there is CLI support for scripting and monitoring. Quality-of-service SLAs are set per volume, which avoids noisy-neighbour problems.
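Lightbits has not said how LightOS lays out volumes, but the two ideas in the paragraph above, thin provisioning and striping, can be illustrated with a toy model. The sketch below is hypothetical: the stripe size, the round-robin layout and the `ThinVolume` class are assumptions for illustration, not the GFTL's actual design. Physical space is allocated only when a stripe is first written, and consecutive stripes land on consecutive SSDs.

```python
"""Toy model of a thin-provisioned volume striped across SSDs.

Hypothetical illustration only: the stripe size, layout and names are
assumptions, not Lightbits' LightOS/GFTL design.
"""
from dataclasses import dataclass, field

STRIPE_BYTES = 128 * 1024  # assumed stripe unit (hypothetical)
NUM_SSDS = 24              # drive bays in the SuperSSD chassis


@dataclass
class ThinVolume:
    logical_size: int  # capacity promised to the host, in bytes
    # stripe index -> (ssd number, physical slot on that ssd)
    allocated: dict = field(default_factory=dict)
    # next free physical slot on each ssd
    next_slot: list = field(default_factory=lambda: [0] * NUM_SSDS)

    def locate(self, offset: int) -> tuple[int, int]:
        """Map a logical byte offset to (stripe index, SSD number)."""
        if not 0 <= offset < self.logical_size:
            raise ValueError("offset outside volume")
        stripe = offset // STRIPE_BYTES
        return stripe, stripe % NUM_SSDS  # round-robin striping

    def write(self, offset: int) -> tuple[int, int]:
        """Allocate physical space only on first write (thin provisioning)."""
        stripe, ssd = self.locate(offset)
        if stripe not in self.allocated:
            self.allocated[stripe] = (ssd, self.next_slot[ssd])
            self.next_slot[ssd] += 1
        return self.allocated[stripe]

    def physical_bytes(self) -> int:
        """Flash actually consumed, as opposed to logical_size."""
        return len(self.allocated) * STRIPE_BYTES


vol = ThinVolume(logical_size=10 * 2**40)  # a 10TiB thin volume
vol.write(0)                # first stripe -> SSD 0
vol.write(STRIPE_BYTES)     # second stripe -> SSD 1
vol.write(100)              # same stripe as offset 0: no new allocation
print(vol.physical_bytes())
```

A 10TiB volume that has seen writes to only two stripes consumes two stripes of flash, which is the point of thin provisioning.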

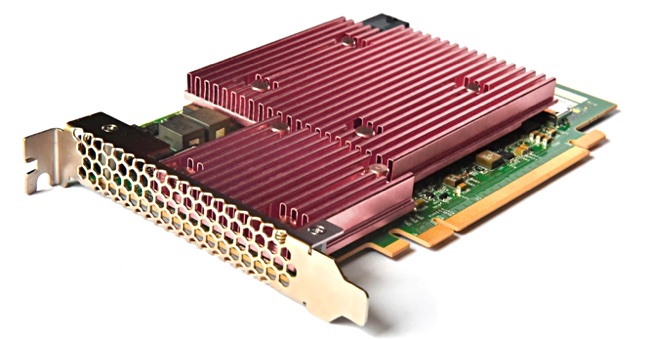

LightField FPGA cards provide hardware compression and append-only erasure coding. This means that, when an SSD fails, the LightField card collects all the redundant data needed for reconstruction without passing it to the host CPU.
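Lightbits has not published the LightField erasure-coding scheme. As a stand-in, the sketch below uses single XOR parity, the simplest erasure code (as in RAID 5), to show how a failed SSD's chunk of a stripe can be rebuilt from the surviving chunks plus parity. Codes that tolerate more than one failure, such as Reed-Solomon, are more involved; the function names here are hypothetical.

```python
"""Single-parity (XOR) erasure coding, a stand-in for the unpublished
LightField scheme: rebuild one lost chunk from survivors plus parity."""
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length chunks."""
    if len(a) != len(b):
        raise ValueError("chunks must be the same length")
    return bytes(x ^ y for x, y in zip(a, b))


def encode(chunks: list[bytes]) -> bytes:
    """Parity chunk = XOR of every data chunk in the stripe."""
    return reduce(xor_bytes, chunks)


def rebuild(surviving: list[bytes], parity: bytes) -> bytes:
    """Recover the single missing chunk: XOR the parity with every survivor."""
    return reduce(xor_bytes, surviving, parity)


stripe = [b"SSD0", b"SSD1", b"SSD2"]   # one chunk per (hypothetical) drive
parity = encode(stripe)
# Drive 1 fails; its chunk is recomputed from drives 0 and 2 plus parity.
print(rebuild([stripe[0], stripe[2]], parity))
```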

Each LightField accelerator card can simultaneously run compression at 100Gbit/s and decompression at 100Gbit/s, without affecting the SuperSSD’s write/read throughput or latency.

The system is claimed to provide zero downtime. Samer Haija, Director of Product, said: “SuperSSD is a single controller per node architecture. High-availability is achieved by configuring multiple boxes as a cluster for node level failures. When configuring multiple boxes for HA, the setup could be configured as active/active or active/passive.”

The SuperSSD is designed to support the scale, performance and high-availability needs of customers running applications needing fast and parallel block access to data, such as AI and machine learning. It is scalable from 64TB to 1PB of usable capacity; the raw capacity is not revealed. The system can both scale up in capacity, by adding SSDs, and scale out, by adding appliances.

Lightbits says each SuperSSD is a two-node target and can support up to 16,000 volumes per node, or 32,000 in total. With one volume per client, it could therefore support up to 32,000 compute nodes.

We asked what kinds of SSDs are supported, for example 3D NAND and TLC. Haija answered: “Lightbits SuperSSD currently supports two variants of NVMe SSDs from two different vendors that are 3D TLC 64-layer with drive capacities that range from 4TB to 11TB. We are QLC-ready and our SSD offering will continue to support the latest available and released NVMe SSDs (96 layer TLC and 64 Layer QLC).”

The SuperSSD delivers up to 5 million 4K input/output operations per second (IOPS), with end-to-end latency consistently below 200µs. The appliance’s own read and write latency is under 100µs.
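The quoted figures can be sanity-checked with simple arithmetic. The sketch assumes “4K” means 4,096-byte IOs and ignores NVMe/TCP and Ethernet protocol overhead. It shows that 5 million 4K IOPS is about 164Gbit/s, inside the 200Gbit/s of the dual 100GbitE links, and, by Little's law (requests in flight = throughput × latency), that sustaining that rate at up to 200µs latency needs up to roughly 1,000 IOs outstanding across the accessing servers.

```python
"""Back-of-envelope checks on the SuperSSD's quoted performance figures.

Assumptions: "4K" = 4,096 bytes; protocol overhead is ignored.
"""

IOPS = 5_000_000      # quoted peak 4K IOPS
IO_BYTES = 4096       # assumed size of a "4K" IO
LATENCY_S = 200e-6    # quoted end-to-end latency ceiling, 200 µs
LINK_GBITS = 2 * 100  # dual 100GbitE front-end links

# Payload bandwidth implied by the IOPS figure, in Gbit/s.
throughput_gbits = IOPS * IO_BYTES * 8 / 1e9

# Little's law: average requests in flight = arrival rate x time in system.
in_flight = IOPS * LATENCY_S

print(f"{throughput_gbits:.1f} Gbit/s of {LINK_GBITS} Gbit/s link capacity")
print(f"up to ~{in_flight:.0f} outstanding IOs needed to sustain that rate")
```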

The LightOS GFTL performs wear-levelling and garbage collection across all the SSDs, rather than leaving these tasks to each drive, and so achieves better endurance. According to Haija, system endurance depends on the workload, and its IP allows it to achieve at least 4 DWPD (drive writes per day) and up to 16 DWPD over five years.
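A DWPD rating converts into total terabytes written (TBW) as capacity × DWPD × 365 × years. The sketch applies that formula, ignoring leap days, to the 4TB and 11TB drive capacities Haija quoted and to the claimed 4 to 16 DWPD range; pairing those capacities with those ratings is our illustration, not a Lightbits specification, and `total_writes_tb` is a hypothetical helper.

```python
"""What a DWPD (drive writes per day) rating implies in total writes."""


def total_writes_tb(capacity_tb: float, dwpd: float, years: float = 5) -> float:
    """Terabytes that can be written over the rated period.

    TBW = capacity x drive-writes-per-day x 365 days x years (leap days ignored)
    """
    return capacity_tb * dwpd * 365 * years


for cap in (4, 11):       # drive capacities quoted by Haija, in TB
    for dwpd in (4, 16):  # claimed endurance range
        pb = total_writes_tb(cap, dwpd) / 1000
        print(f"{cap}TB drive at {dwpd} DWPD: {pb:.1f} PB over 5 years")
```

An 11TB drive at 4 DWPD, for example, would absorb about 80PB of writes over five years.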

The LightField cards provide optional compression of 4x or more. There can be up to two cards per appliance, so each card serves up to 12 of the 24 SSDs. The cards feature hardware direct memory access (DMA) to accelerate the LightOS GFTL.
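The card and compression figures can be tied together with a little arithmetic. The sketch assumes “4x” means a 4:1 ratio achieved on every byte (real ratios depend on the data) and ignores erasure-coding and other overheads; the 24 x 4TB configuration and the `effective_tb` helper are hypothetical. It shows that two cards split the 24 bays 12 apiece, and that two cards compressing at 100Gbit/s each match the dual 100GbitE front end.

```python
"""Rough capacity and throughput arithmetic for LightField compression.

Assumptions: a uniform 4:1 ratio, no erasure-coding or metadata overhead.
"""

DRIVE_BAYS = 24
MAX_CARDS = 2
CARD_COMPRESS_GBITS = 100  # per-card compression rate quoted by Lightbits
LINK_GBITS = 2 * 100       # dual 100GbitE front-end links


def effective_tb(physical_tb: float, ratio: float = 4.0) -> float:
    """Logical data that fits in physical flash at a given compression ratio."""
    return physical_tb * ratio


drives_per_card = DRIVE_BAYS // MAX_CARDS              # bays served per card
aggregate_compress = MAX_CARDS * CARD_COMPRESS_GBITS   # both cards together

print(f"{drives_per_card} SSDs per card")
print(f"{aggregate_compress} Gbit/s compression vs {LINK_GBITS} Gbit/s links")
print(f"24 x 4TB raw holds up to ~{effective_tb(24 * 4):.0f}TB of 4:1-compressible data")
```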

This gets us thinking that, when LightOS receives a read request from an accessing server, it is satisfied by the LightField card doing a DMA transfer of the striped data from its attached SSDs into LightOS memory buffers. In effect we have memory caching.

Lightbits says the SuperSSD is a storage server and can provide in-storage processing so that user-defined functions can run in it. We asked how this was done but no details were provided. The LightField card is said to be “re-programmable and extendable with user-defined in-storage processing functions.”

Blocks & Files understands that this is the in-storage processing capability.

A prepared quote from Eran Kirzner, the CEO and co-founder of Lightbits Labs, said: “AI and machine learning are exceedingly resource intensive, involving access to distributed devices and massive amounts of data. As the amount of data analysed increases, direct-access storage solutions become completely ineffective, complex to manage, costly and potentially a single point of failure.

“By ensuring zero downtime, SuperSSD is the only storage solution that can support continuous AI and machine learning processes, reliably, cost-effectively, and with high-performance and simplicity.”

Competition

Blocks & Files reckons the competition for this kind of array is the NVMe-oF startups, such as Apeiron, E8, Excelero and Pavilion Data Systems, plus Kaminario and mainstream suppliers such as Dell EMC, IBM, HPE, Hitachi Vantara, NetApp and Pure Storage.

These are all good suppliers with fast-access and reliable kit.

We asked Lightbits how the SuperSSD differs from competing NVMe all-flash arrays. Haija said the SuperSSD is:

- [A] target-only solution; no need to change network or clients

- Based on standard hardware and SSD’s

- Compatible with existing infrastructure

- Isolates SSD failures and (undesired) behaviour from impacting rest of infrastructure and applications minimising service interruptions

- Higher performance and capacity, reduces number of nodes required and allows for ease of scale.

With all due respect to Lightbits, most of these are features that any all-flash array connected via NVMe-over-Fabrics across a standard storage access network, such as TCP/IP or Fibre Channel, could also claim.

This is significant because NVMe/TCP is natively slower, meaning it has longer latency, than NVMe-over-Fabrics using RDMA across lossless Ethernet. Perhaps Lightbits’ hardware acceleration counters this disadvantage.

This means the “higher performance and capacity” claim needs to be proven, and proven significant: a latency advantage of a few microseconds is of no substance unless the per-IO savings add up to a large number and so make applications run faster.
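A simple model shows why per-IO savings only matter in aggregate. The function below is an idealised sketch; the name `time_saved_s` and the figures are hypothetical, not measurements of any product. When IOs are issued one at a time, each waiting for the last, a latency saving adds up linearly; when many IOs are in flight at once they overlap, and the wall-clock saving shrinks accordingly.

```python
"""When do per-IO latency savings matter? Mostly when IOs are serialised."""


def time_saved_s(n_ios: int, saving_us: float, queue_depth: int = 1) -> float:
    """Wall-clock seconds saved when n_ios are issued queue_depth at a time.

    With queue_depth=1 every IO waits for the previous one, so savings add
    up linearly; with deeper queues IOs overlap and the saving is divided
    across them. Idealised model: bandwidth limits and queuing are ignored.
    """
    return n_ios * saving_us / queue_depth / 1e6


# Hypothetical workload: one million dependent reads, 5 µs saved on each.
print(time_saved_s(1_000_000, 5))                 # issued one at a time
print(time_saved_s(1_000_000, 5, queue_depth=32)) # 32 IOs overlapping
```

Saving 5µs on each of a million dependent reads recovers 5 seconds; at a queue depth of 32 the same saving is worth only about 0.16 seconds.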

The company is an early-stage startup that emerged last month with $50m in funding. This is a promising start and the unnamed backers clearly think the company is on to something. But the field is crowded and the competition is strong. We asked Haija what he thought Lightbits’ strengths were.

He said: “Our team is comprised of a unique combination of successful leaders with a track record in storage, compute and networking.

“Our capabilities are reflected in our core software (LightOS and GFTL) that tightly integrates the HW to create an easy to deploy, high performance, low latency, reliable product that can improve SSD utilisation by 2x which is what the market needs to achieve the next level of scale and efficiency.

“With our leadership position in NVMe/TCP, LightOS and GFTL we have a head start that we plan to maintain by continuing to invest in a robust roadmap.”

That leadership position needs proving with customer sales.

Availability

The Lightbits Labs SuperSSD appliance comes with a 5-year warranty and is available for purchase now, along with LightOS software and the LightField storage acceleration card. We have no channel partner details.

Lightbits has two offices in Israel, in Haifa and near Tel-Aviv, and a third in San Jose, CA.