HPE Discover HPE yesterday unveiled two hyperconverged systems, a composability extension and much hybrid cloud activity at its Discover jamboree in Las Vegas. Let’s take a quick look.

HPE Nimble Storage dHCI

This system combines HPE’s ProLiant server with Nimble storage trays to add another hyperconverged product alongside HPE’s SimpliVity systems.

HPE said the Nimble Storage dHCI combines the simplicity of hyperconvergence with the flexibility of a converged system.

It features:

- Native, full-stack intelligence from storage to VMs and policy-based automation.

- Over 6-9s of data availability (99.9999 per cent) and consistent, high performance.

- Ability to independently scale compute and storage non-disruptively.
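For a sense of scale, the six-nines figure in the list above can be turned into a yearly downtime budget. The sketch below is our own arithmetic, not an HPE calculation; it assumes a 365-day year and treats the percentage as a strict ceiling on unavailability, which works out to about 31.5 seconds a year.

```python
# Back-of-the-envelope conversion of an availability percentage into
# the downtime it permits per year. Assumes a non-leap, 365-day year.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def downtime_seconds_per_year(availability_percent: float) -> float:
    """Return the maximum yearly downtime allowed by the given availability."""
    unavailable_fraction = (100.0 - availability_percent) / 100.0
    return unavailable_fraction * SECONDS_PER_YEAR


if __name__ == "__main__":
    # 99.9999 per cent is the figure quoted for Nimble Storage dHCI.
    print(f"{downtime_seconds_per_year(99.9999):.1f} seconds per year")
```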

Nimble CEO Suresh Vasudevan talked about a possible Nimble HCI offering in February 2017. At the time Nimble was an independent maker of all-flash and hybrid arrays with six “nines” availability, that were monitored and managed with cloud-based analytics – InfoSight. HPE bought Nimble in March that year.

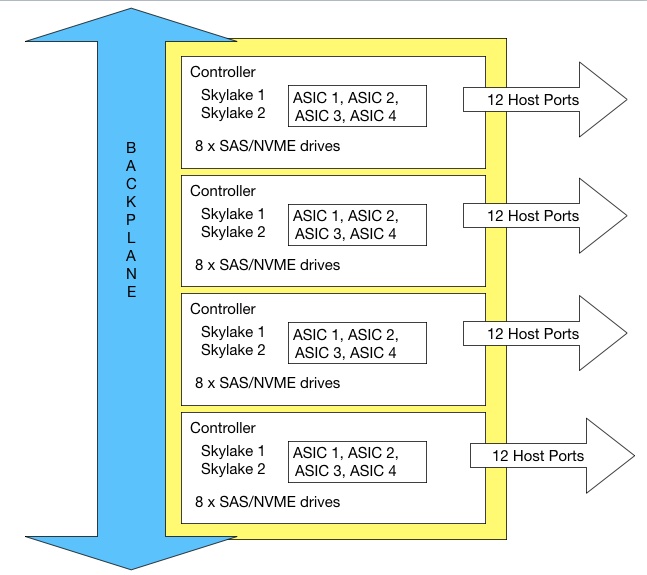

Moor Insight & Strategy senior analyst Steve McDowell told us by mail: “The Nimble HCI is being branded as the ear-catching ‘dHCI’, with ‘d’ standing for ‘disaggregated’. Frustratingly vague screenshot from the briefing deck below.”

Language logicians will enjoy the paradoxical notion of having a disaggregated (non-hyperconverged) hyperconverged (aggregated) system. Presumably it’s a single product to order and a single product to install. But like NetApp’s HCI, just because it quacks like a duck and walks like a duck it doesn’t necessarily mean it’s a duck.

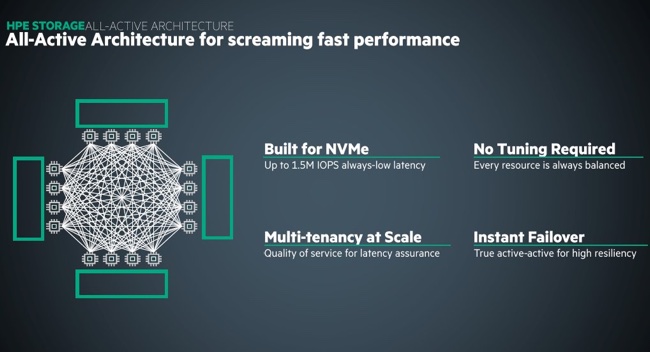

HPE said dHCI is an intelligent platform that enables ordinary and demanding apps to be deployed fast. It has sub-millisecond latency and is managed through InfoSight.

There is no dHCI configuration data available from HPE, which seems quite extraordinary. We understand detailed specs will be available in August. For now we know that three network switches are supported: StoreFabric 2100M, FlexFabric 5700 and Cisco Nexus 3K/5K/9K.

An HPE source told us dHCI supports ProLiant Gen 9/10 servers and Gen 5 Nimble arrays with Nimble OS v5.1.2 and up.

Odd duck

McDowell called dHCI an “odd-duck announcement…It flies in the face of HPE’s stance that HCI is all about SimpliVity. dHCI started out as Nimble’s ‘Nutanix killer’ product back in 2017… It’s the same stack. HPE shelved it after the acquisition and disintegrated the team, only to dust it off and productise it this week as dHCI.”

He thinks dHCI is a “nice approach to HCI with its independent scalability, running directly on the array, etc, and the integration with vCenter is really well done. That said, it’s confusing understanding how that fits into a SimpliVity-first HCI world.”

Beta test experience

Marc Bingham, a senior pre-sales architect at Cristie Data, has been involved with dHCI beta testing and likes the product. He blogs: “HCI is predominantly a management and purchasing experience moreover, from a technical standpoint, it’s a scale out architectural design rather than the familiar 3-tier design.”

He says: “dHCI is essentially high performance HPE Nimble Storage, FlexFabric SAN switches and Proliant servers converged together into a stack…deployment, operation and day-to-day management tasks have been hugely simplified …day-to-day tasks, such as adding more hosts or provisioning more storage, are simple “one click” processes.”

Storage, compute and networking can be scaled independently of each other. The whole stack plugs directly into the HPE InfoSight portal and support model. Bingham suggests one way of looking at dHCI is to see it as “Converged Infrastructure with significant improvement to the management experience.”

HPE SimpliVity

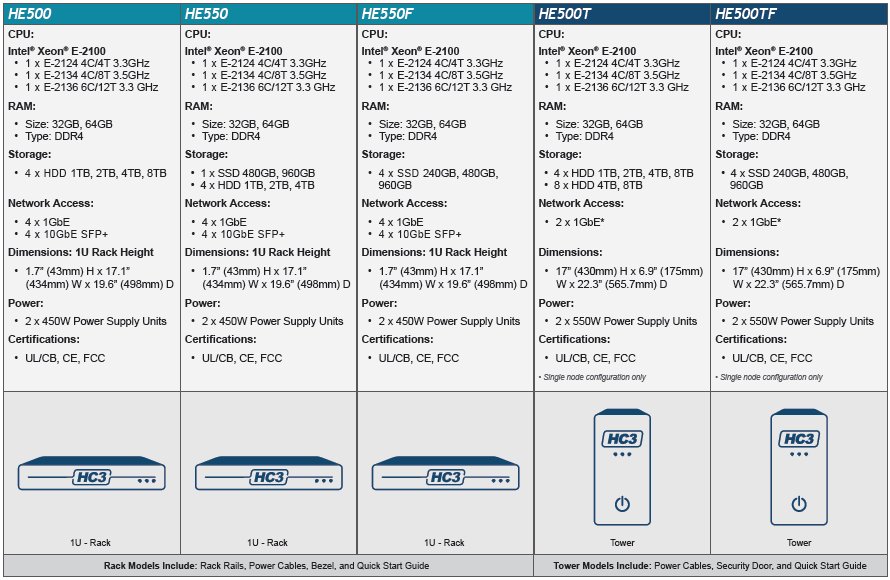

There are two new SimpliVity HCI products: the 325 and 380.

The 1U 325 is for remote and branch offices and is an AMD EPYC processor system with all-flash storage. The 2U 380 is a larger capacity, storage-centric product for long-term storage. It can centrally aggregate storage from multiple edge SimpliVity systems.

The 380 is presented as a backup and archive node for VMs and workloads but is old news: HPE announced it in March 2017, then basing it on a gen 10 ProLiant server. What is new? Disk drives.

This 380 uses a mix of 4TB disk drives and SSDs. As a 2U system it’s clearly optimised more for small physical space than high disk capacity, as 16TB drives are available but come in a 3.5-inch format. The smaller 2.5-inch format drives, such as Seagate’s Exos 10E2400, top out at 4TB, a quarter of that capacity.

The 380 houses up to 12 x 2.5-inch drives and that gives it a 48TB maximum raw capacity; not that dense in terms of TB per rack unit – 24TB/U.

You have to scale the thing out to get more capacity, and can have up to 16 in a cluster.
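The capacity sums above are simple enough to check in a few lines. This sketch uses only the figures quoted in this article (12 bays, 4TB drives, 2U, 16 nodes) and, like the 48TB figure, assumes every bay holds a 4TB drive. The cluster total it prints is our own multiplication, not an HPE specification, and ignores any capacity given over to SSDs, data protection or deduplication.

```python
# Raw-capacity arithmetic for the SimpliVity 380, using the article's figures.
DRIVES_PER_NODE = 12        # 2.5-inch bays
DRIVE_CAPACITY_TB = 4       # largest 2.5-inch drive cited in the article
RACK_UNITS_PER_NODE = 2     # the 380 is a 2U system
MAX_NODES_PER_CLUSTER = 16  # scale-out limit quoted in the article


def node_raw_tb() -> int:
    """Raw capacity of one node with every bay populated."""
    return DRIVES_PER_NODE * DRIVE_CAPACITY_TB


def density_tb_per_u() -> float:
    """Raw capacity per rack unit for a single node."""
    return node_raw_tb() / RACK_UNITS_PER_NODE


def cluster_raw_tb(nodes: int = MAX_NODES_PER_CLUSTER) -> int:
    """Raw capacity of a cluster; a derived figure, not an HPE spec."""
    if not 1 <= nodes <= MAX_NODES_PER_CLUSTER:
        raise ValueError("node count outside the quoted cluster limit")
    return nodes * node_raw_tb()


if __name__ == "__main__":
    print(f"Per node: {node_raw_tb()}TB raw, {density_tb_per_u():.0f}TB/U")
    print(f"Full cluster: {cluster_raw_tb()}TB raw")
```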

HPE said the system backs up and restores a 1TB VM in 60 seconds. Also there is no need for WAN optimisation devices or expensive network bandwidth capabilities to link the edge SimpliVity products.
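To put the 60-second claim in context, here is the effective data rate it implies. This is a rough sketch that assumes decimal units (1TB = 10^12 bytes) and treats the claim as a logical rate; it says nothing about how much data physically moves during a backup or restore.

```python
# Effective rate implied by "a 1TB VM in 60 seconds".
# Assumes decimal units: 1TB = 1e12 bytes, 1GB = 1e9 bytes.
BYTES_PER_TB = 1e12
BYTES_PER_GB = 1e9


def effective_gb_per_second(size_tb: float, seconds: float) -> float:
    """Logical gigabytes handled per second for a job of the given size."""
    if seconds <= 0:
        raise ValueError("duration must be positive")
    return size_tb * BYTES_PER_TB / BYTES_PER_GB / seconds


def effective_gbit_per_second(size_tb: float, seconds: float) -> float:
    """The same rate expressed in gigabits per second."""
    return effective_gb_per_second(size_tb, seconds) * 8


if __name__ == "__main__":
    print(f"{effective_gb_per_second(1, 60):.1f} GB/s")
    print(f"{effective_gbit_per_second(1, 60):.0f} Gbit/s")
```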

HPE has extended InfoSight, its cloud-based storage, server and networking management service, to include SimpliVity systems.

HPE also announced automated configuration of Aruba switches during deployment of new SimpliVity HCI nodes.

HPE composable rack

HPE is adding ProLiant gen 10 360, 380 and 560 servers to its Synergy-based composable rack infrastructure. This can use either VSAN, HPE Storage arrays or, now, the SimpliVity HCI as the storage component.

Customers can deploy a pool of SimpliVity HCI nodes alone or alongside the other storage.

Hybrid cloud with Google

HPE and Google are providing a hybrid cloud for containers. It involves Google Cloud’s Anthos, HPE’s ProLiant servers and Nimble storage on-premises, HPE Cloud Data Services, and HPE GreenLake.

HPE’s Cloud Volumes will provide a storage service for Google Cloud Platform and other public clouds in the third quarter of this year.

This hybrid cloud offering will feature bi-directional data and application workload mobility, multi-cloud flexibility, unified hybrid management, and the choice to consume the hybrid cloud as-a-Service, since a planned GreenLake for Google Cloud’s Anthos offering aims to provide the entire hybrid cloud as-a-Service.

Developers can write software in the cloud and deploy the application on-premises, or vice-versa, protect to the cloud and recover in the cloud, and gain the flexibility to run applications in multiple clouds.

HPE Cloud Volumes with Equinix

Customers using Equinix data centre facilities will be able to use the Equinix Marketplace to get Data as a Service based on HPE Cloud Volumes. HPE Cloud Volumes will be available over high-speed connectivity to compute in Equinix data centres.

Availability

SimpliVity with InfoSight will be available in August 2019. The Nimble dHCI will be available after that in the fourth quarter.

HPE Composable Rack is available in August 2019 in the United States, United Kingdom, Ireland, France, Germany, and Australia. HPE Nimble Storage hybrid cloud capabilities with Google Cloud’s Anthos are available in summer 2019.

HPE Cloud Volumes support for Google Cloud Platform will appear this summer and Cloud Volumes in the Equinix Marketplace is a third quarter 2019 offering.