Kaminario is extending the consumption-based software subscription model to hardware, giving customers the option to acquire storage arrays on a pay-as-you-go basis as well.

The move is an extension of Kaminario’s partnership with Tech Data, announced last year. This saw the storage firm quit the hardware business and focus on its software platform, with Tech Data providing the hardware.

Available now as Kaminario Storage Utility, the new offering allows customers to operate all-flash storage arrays through a single subscription payment covering software and hardware, thus shifting costs from capex to opex.

The cost of the hardware gets converted to a consumption-based monthly payment determined by the amount of storage used. Hardware usage metering is tied directly to software usage, with billing integrated into Kaminario’s Clarity analytics platform.

When purchased through Tech Data’s Technology as a Service (TaaS) offering, Kaminario Storage Utility will have an all-inclusive baseline price of $100 per usable TB (terabyte) per month.
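To make the pricing concrete, here is a minimal sketch of how a monthly bill might scale with capacity at the quoted baseline. It assumes a purely linear charge with no minimum commitment, volume tiers or add-ons, none of which the announcement spells out. The function name `monthly_charge` and the usage figures are hypothetical, chosen only for illustration.

```python
# Baseline price quoted for purchases through Tech Data's TaaS offering.
BASELINE_USD_PER_TB_MONTH = 100.0


def monthly_charge(usable_tb: float, rate: float = BASELINE_USD_PER_TB_MONTH) -> float:
    """Return a hypothetical monthly charge in USD.

    Assumption: billing is linear in usable terabytes, with no minimum
    commitment or tiering. The real contract terms may differ.
    """
    if usable_tb < 0:
        raise ValueError("usable_tb must be non-negative")
    return usable_tb * rate


# Illustrative (made-up) capacity figures for three months, in usable TB.
usage_tb = {"Jan": 50, "Feb": 65, "Mar": 80}

for month, tb in usage_tb.items():
    print(f"{month}: {tb} TB -> ${monthly_charge(tb):,.0f}")
```

Under these assumptions the charge tracks capacity month by month, which is the capex-to-opex shift the offering is built around.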

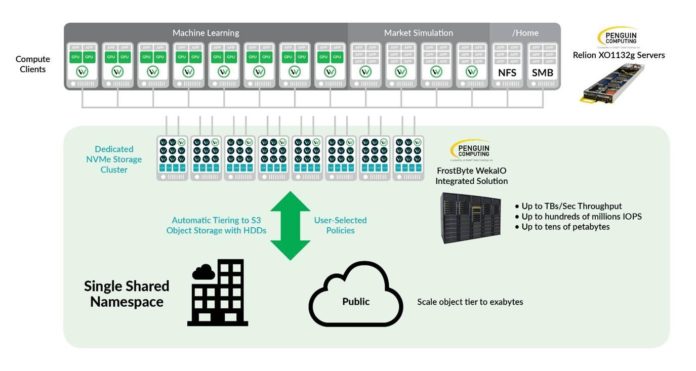

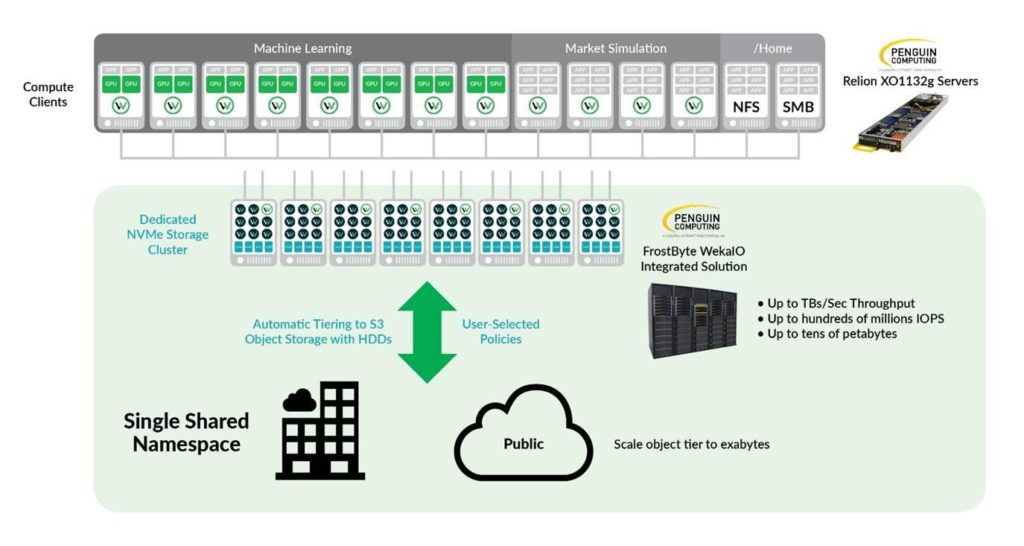

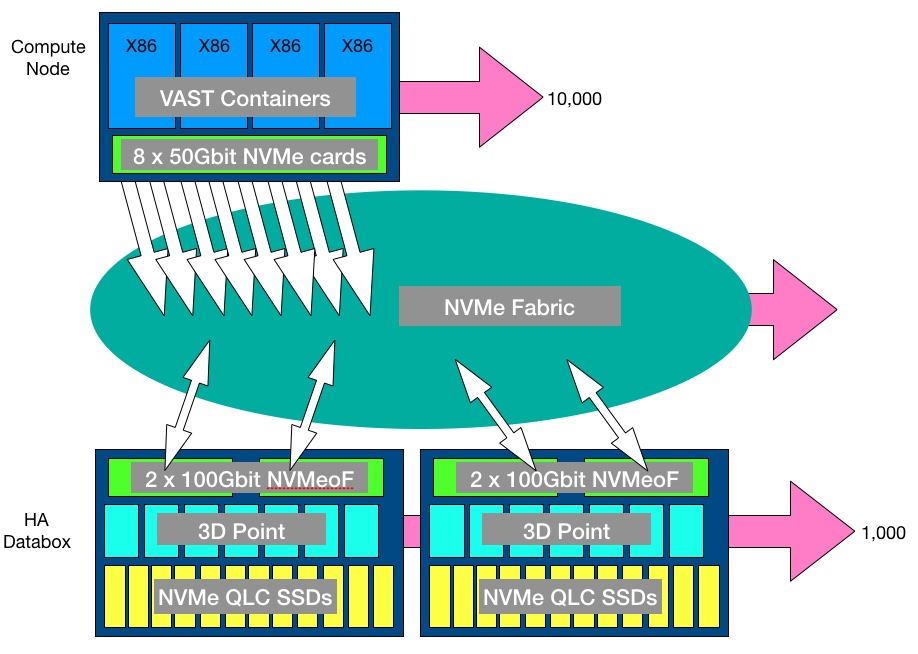

Kaminario’s software runs on standard x86 server hardware, but customer choice is limited to a range of pre-integrated configurations optimised for performance.

DRaaS ticks

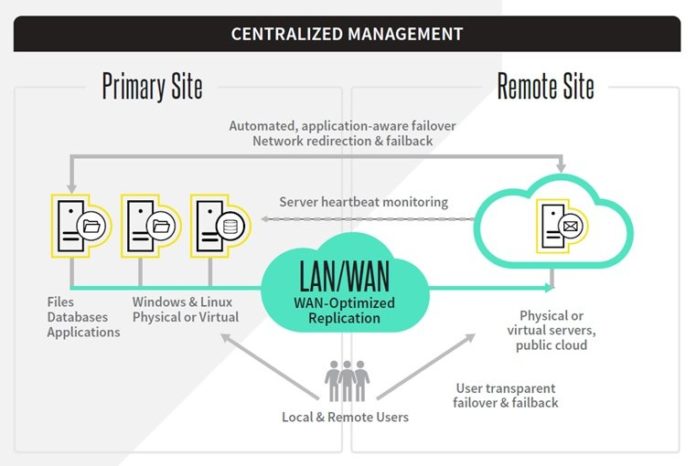

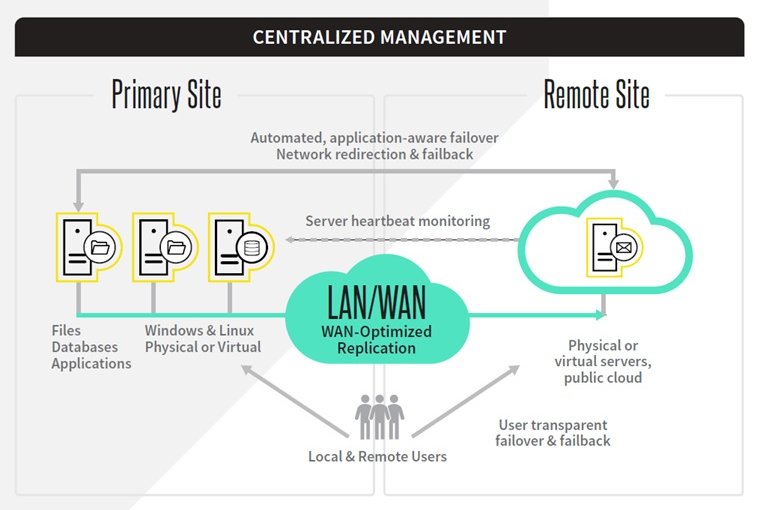

Also new from Kaminario are a Disaster Recovery as a Service (DRaaS) offering and Kaminario for Public Cloud.

The Kaminario DRaaS option provides customers with a fully managed cloud-based disaster recovery service as a monthly expense based on protected capacity.

Subscribers can choose from a list of Tier 4 data centre locations or make use of an existing remote site. Kaminario said that a range of service levels and recovery time objectives is available.

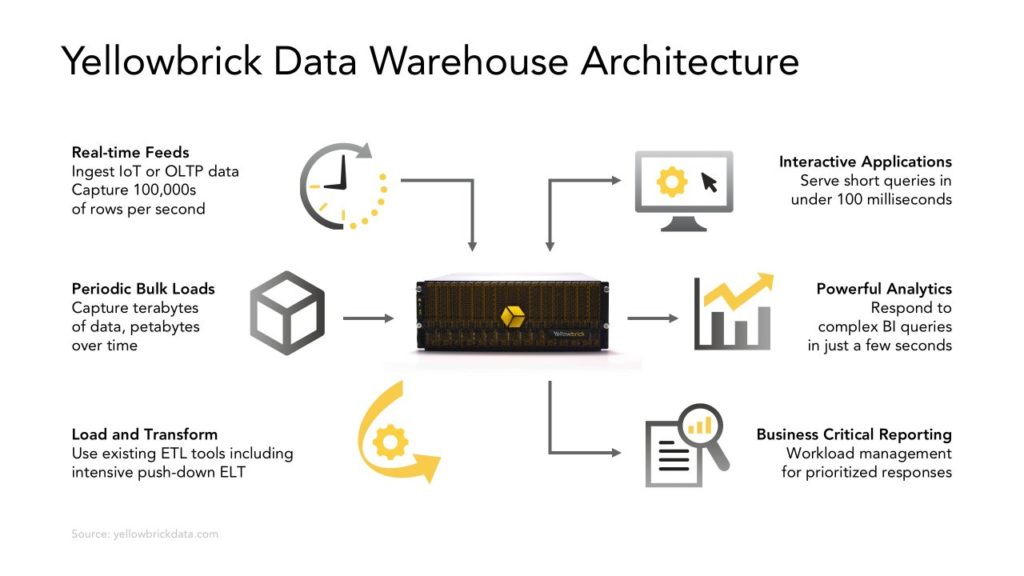

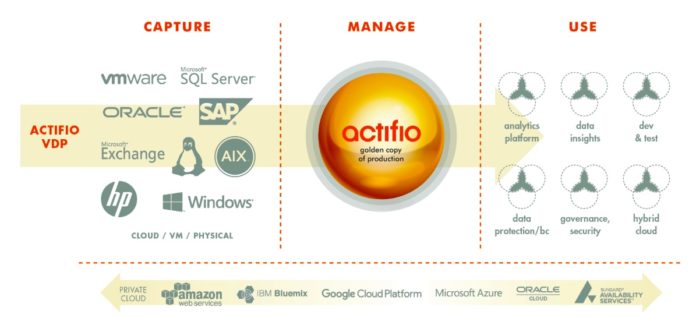

Due to be available in the first half of 2020, Kaminario for Public Cloud will enable customers to deploy storage array instances running VisionOS onto major public cloud platforms. This includes the big three: AWS, Google Cloud Platform and Microsoft Azure.

Kaminario said its platform’s native snapshot and replication support will allow users to move data from on-premises to the cloud to support development, disaster recovery or cloud bursting use cases.

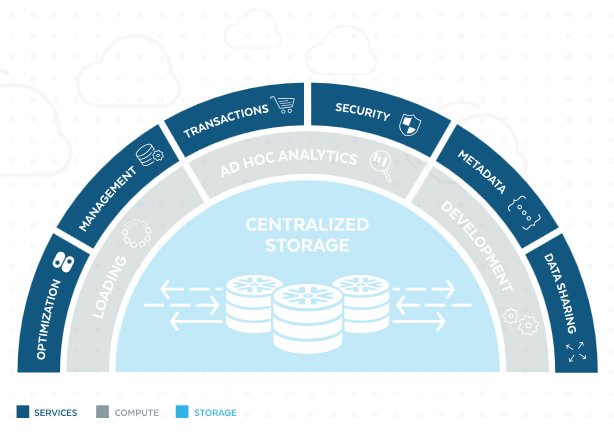

Kaminario’s Clarity analytics and Flex orchestration and automation tools will let customers manage storage instances across all environments.