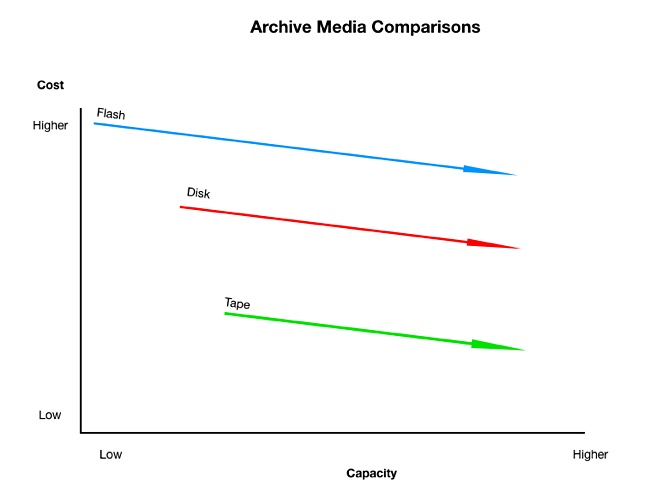

Toshiba and Samsung are pushing the idea of SSDs directly accessed over Ethernet as a way of simplifying the storage access stack.

This idea of directly accessing storage drives across Ethernet first surfaced with Seagate and its Kinetic disk drives. Kinetic drives implement a key:value store instead of the traditional file, block or object storage mediated through a controller manipulating the drive’s data blocks.

Samsung supports the key:value drive store idea but Toshiba does not.

Kinetic disk drives

Seagate was a prominent champion of Kinetic drives but its technology appears to have fallen by the wayside in 2015 or 2016.

Kinetic disk drives had an on-board NIC plus a small processor implementing a key:value store. The drives were directly accessed over Ethernet, with the host server operating the drive as a key:value store – as opposed to a block or file storage device.
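The difference between the two access models can be illustrated with a toy in-memory sketch. This is not the Kinetic API: the class names `BlockDevice` and `KeyValueDrive`, the helper functions and the 4,096-byte block size are all assumptions made up for illustration. It only shows where the bookkeeping sits in each model.

```python
class BlockDevice:
    """Hypothetical block device: fixed-size blocks addressed by block number."""

    def __init__(self, num_blocks, block_size=4096):
        self.block_size = block_size
        self.blocks = [bytes(block_size) for _ in range(num_blocks)]

    def write_block(self, lba, data):
        if len(data) != self.block_size:
            raise ValueError("data must be exactly one block long")
        self.blocks[lba] = bytes(data)

    def read_block(self, lba):
        return self.blocks[lba]


class KeyValueDrive:
    """Hypothetical key:value drive: the drive maps keys to variable-length values."""

    def __init__(self):
        self._store = {}

    def put(self, key, value):
        self._store[key] = bytes(value)

    def get(self, key):
        return self._store[key]

    def delete(self, key):
        del self._store[key]


def store_on_block_device(dev, index, lba, name, payload):
    """With blocks, the host pads the data and remembers where it put it."""
    if len(payload) > dev.block_size:
        raise ValueError("payload larger than one block in this toy example")
    dev.write_block(lba, payload.ljust(dev.block_size, b"\0"))
    index[name] = (lba, len(payload))  # host-side bookkeeping


def load_from_block_device(dev, index, name):
    """The host looks up the block number, reads the block and trims padding."""
    lba, length = index[name]
    return dev.read_block(lba)[:length]


if __name__ == "__main__":
    dev, index = BlockDevice(num_blocks=8), {}
    store_on_block_device(dev, index, 3, "user:42", b"hello")
    print(load_from_block_device(dev, index, "user:42"))

    kv = KeyValueDrive()
    kv.put(b"user:42", b"hello")  # no block numbers or padding on the host
    print(kv.get(b"user:42"))
```

In the block model the host owns the name-to-block index; in the key:value model that mapping moves onto the drive, which is why host software has to be rewritten to use it.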

Writing host software to handle Kinetic drives was complex, and the drives offered no significant benefit over the existing ways of accessing disk drives. The upshot is that customers had little appetite for them.

Direct Ethernet access SSDs

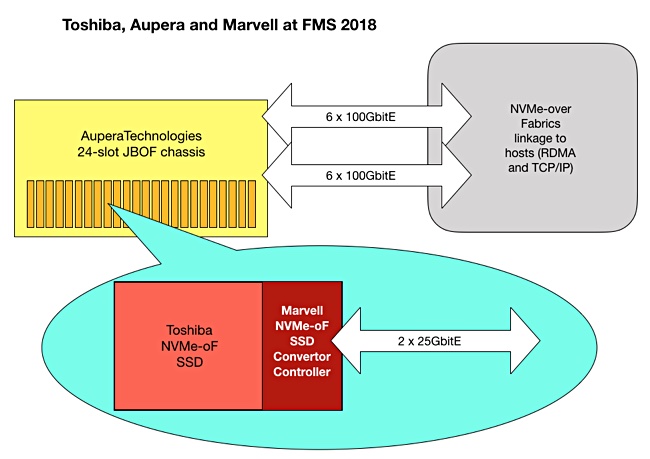

Toshiba proposed an Ethernet-addressed SSD at the Flash Memory Summit (FMS) 2018, with a drive supporting the NVMe over Fabrics (NVMe-oF) protocol.

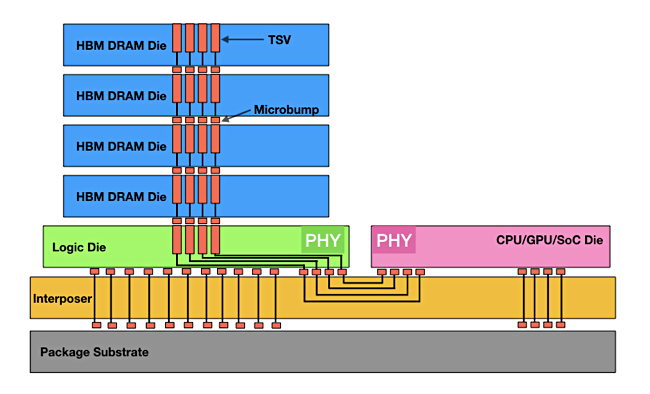

NVMe-oF uses the NVMe protocol across an Ethernet or Fibre Channel network to move data to and from a storage target, addressed at drive level. Data is pumped back and forth at remote direct memory access (RDMA) speeds, meaning a latency of a few microseconds.

Typically, a smart NIC intercepts the NVMe-oF data packets, analyses them and passes them on to a drive using the NVMe protocol.

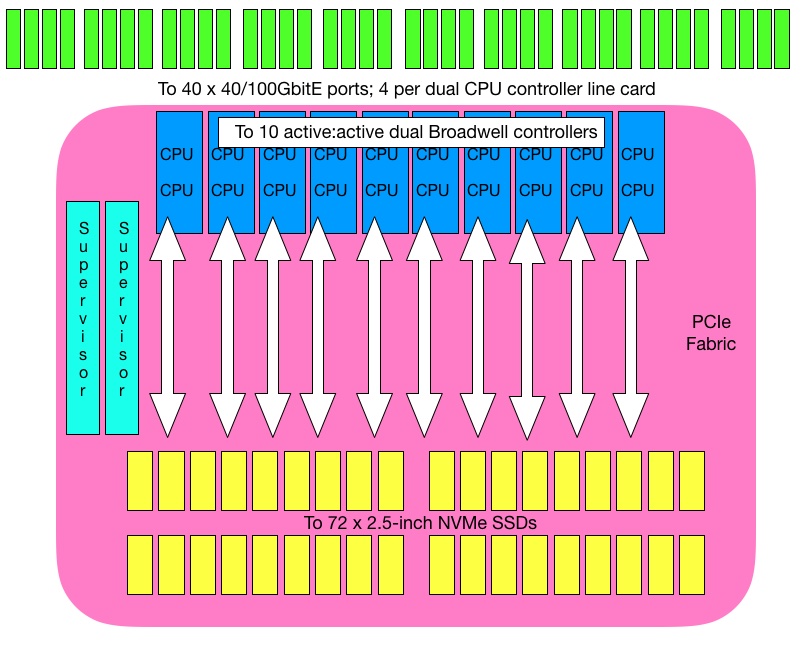

At FMS 2018, Toshiba put 24 of its SSDs inside an Aupera JBOF (Just a Bunch of Flash drives) chassis. They were interfaced to a server host via a Marvell 88SN2400 NVMe-oF SSD converter controller, with dual-port 25Gbit/s Ethernet connectivity.

The chassis achieved 16 million 4K random read IOPS from its 24 drives – claimed at the time to be the fastest random read IOPS rate recorded by an all-flash array. Each drive was rated at 666,666 IOPS.
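The per-drive figure follows from dividing the chassis total by the drive count. The short calculation below reproduces it and also converts the IOPS numbers into data rates, assuming "4K" means 4,096 bytes per read; that block size and the variable names are assumptions, not figures from Toshiba.

```python
TOTAL_IOPS = 16_000_000   # chassis-level 4K random read IOPS quoted above
DRIVES = 24               # SSDs in the JBOF
BLOCK_BYTES = 4 * 1024    # assumption: "4K" means 4,096 bytes per I/O

# Per-drive IOPS, rounded down to a whole number of operations.
per_drive_iops = TOTAL_IOPS // DRIVES

# Data rate implied by those IOPS at the assumed block size.
per_drive_bytes_per_sec = per_drive_iops * BLOCK_BYTES
chassis_bytes_per_sec = TOTAL_IOPS * BLOCK_BYTES

if __name__ == "__main__":
    print(f"per-drive IOPS: {per_drive_iops:,}")
    print(f"per-drive data rate: {per_drive_bytes_per_sec / 1e9:.2f} GB/s")
    print(f"chassis data rate: {chassis_bytes_per_sec / 1e9:.2f} GB/s")
```

Under that assumption each drive moves roughly 2.7GB/sec of random read data, and the chassis as a whole about 65GB/sec.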

Samsung KV-SSD

In November 2018 Samsung revealed it was working on a similar Ethernet-addressed drive, the Z-SSD. The underlying device was a PM983 SSD with an NVMe connection. This, unlike Toshiba’s NVMe-oF SSD, had an on-board key:value store, making it a KV-SSD.

Samsung said it would eliminate block storage inefficiency and reduce latency.

Toshiba’s NVMe-oF all-flash JBOF

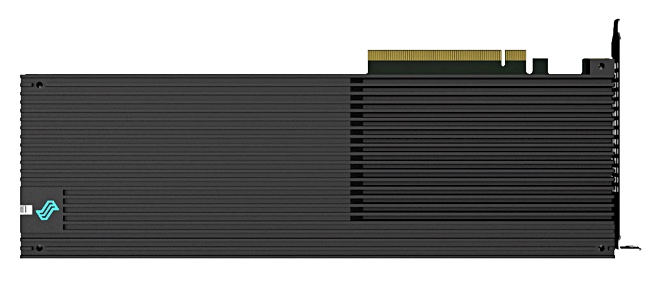

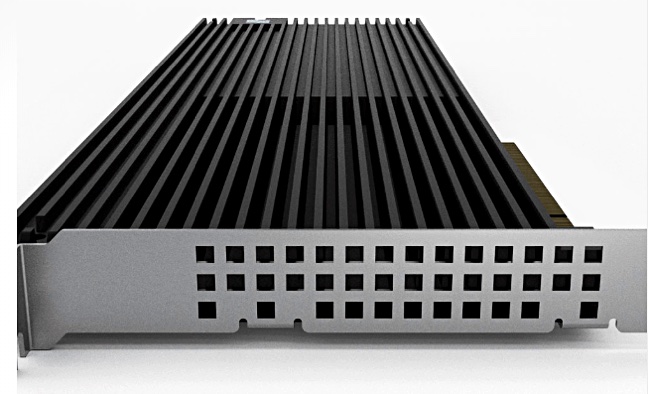

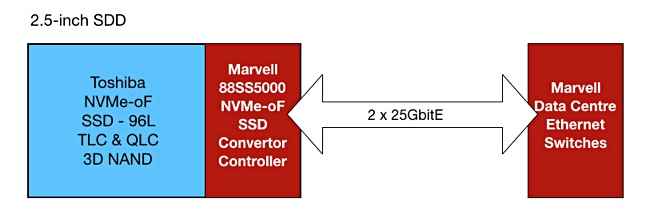

At FMS 2019 Toshiba has gone one step further, giving its SSD a direct NVMe-oF connection. The demonstration SSD uses Toshiba’s 96-layer 3D NAND and has an on-board Marvell 88SS5000 converter controller. This has 8 NAND channels and up to 8GB DRAM, and can talk to Marvell data centre Ethernet switches and so link to servers.

Toshiba said the 88SS5000-SSD combination delivers up to 3GB/sec of throughput and up to 650k random IOPS. This is a tad slower than the FMS 2018 system’s SSDs.
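How much slower is "a tad"? A quick comparison of the two per-drive IOPS figures quoted in this article gives the gap as a percentage. The variable names are illustrative, and the comparison assumes both numbers were measured on a like-for-like workload, which the source does not confirm.

```python
FMS_2018_PER_DRIVE_IOPS = 666_666  # per-drive rating from the FMS 2018 JBOF
FMS_2019_PER_DRIVE_IOPS = 650_000  # "up to 650k" for the 88SS5000-based SSD

# Absolute and relative shortfall of the 2019 drive against the 2018 figure.
shortfall_iops = FMS_2018_PER_DRIVE_IOPS - FMS_2019_PER_DRIVE_IOPS
shortfall_percent = 100 * shortfall_iops / FMS_2018_PER_DRIVE_IOPS

if __name__ == "__main__":
    print(f"shortfall: {shortfall_iops:,} IOPS ({shortfall_percent:.1f}%)")
```

On these numbers the FMS 2019 drive is about 2.5 per cent behind.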

Marvell partners

In an FMS 2019 press release Marvell said the idea of a direct-to-Ethernet SSD is “being advanced by multiple storage end users, server and storage system OEMs and SSD makers.” That makes Toshiba the first one to go public with what Marvell says is a market-ready product.

Marvell cited Alvaro Toledo, VP for SSD marketing at Toshiba Memory America, who talked of an SSD demonstration – with no commitment to launch product yet. Another Toshiba exec, Hiroo Ohta, technology executive at Toshiba Memory Corporation, was quoted: “The combination of our products will help illustrate the significant value proposition of NVMe-oF Ethernet SSDs for data centres.”

Blocks & Files thinks Toshiba and Marvell could usefully demonstrate some big name software product running faster and cheaper on their direct-to-Ethernet SSDs.

It remains to be seen if Samsung will pick up the Marvell 88SS5000 converter controller for its KV-SSD. Samsung will have a tougher job marketing the KV-SSD than Toshiba will with its NVMe-oF SSD, because the key:value store idea adds another dimension to the sale for customers to take on board.

The composable systems connection

Western Digital, Toshiba Memory Corp’s flash foundry joint-venture partner, has a flash JBOF product: the Ultrastar Serv24-HA.

It also has an OpenFlex composable systems product, which uses NVMe-oF to interconnect the devices.

The obvious next step for Western Digital is to bring out its own direct-to-Ethernet SSD for the Serv24-HA chassis, and to also use it in the OpenFlex system.

Toshiba supports the DriveScale Composable Infrastructure, and another obvious possibility is for DriveScale to support Toshiba’s direct-to-Ethernet SSD.