This week in storage, we have an acquisition, three momentum releases, Cloudian going Splunk, Emmy awards for Isilon and Quantum, plus some funding news, people moves, two customer wins and books from NetApp and Intel.

Hitachi Vantara buys Waterline

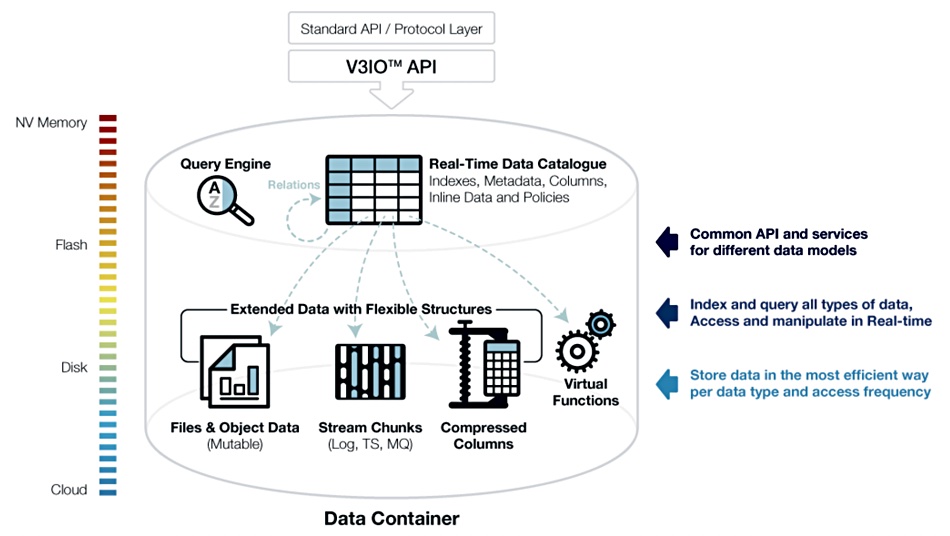

Hitachi Vantara told the market it intends to buy Waterline Data and so gain a data cataloging capability for what it calls its “data ops” product.

Waterline Data’s catalog technology uses machine learning (ML) to automate metadata discovery, an approach the firm calls “fingerprinting”. It combines AI and rule-based systems to automate the discovery, classification and analysis of distributed, diverse data assets, so that large volumes of data can be tagged accurately and efficiently according to their common characteristics.

Shown an example of a data field containing claim numbers in an insurance data set, the technology can then scan the data set for other examples and tag them. Hitachi V says this enables it to recognise and label all similar fields as “insurance claim numbers” across the entire data lake and beyond with extremely high precision – regardless of file formats, field names or data sources.
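Waterline has not published how its fingerprinting works. To make the “show one example, find the rest” idea concrete, here is a minimal toy sketch that assumes a deliberately simple fingerprint: the distribution of character-class “shapes” of a column’s values. Columns are compared by weighted Jaccard similarity, and field names are ignored entirely. The function names, the shape rules and the 0.8 threshold are all hypothetical and are not Waterline’s or Hitachi Vantara’s API.

```python
import re
from collections import Counter


def value_shape(value: str) -> str:
    """Hypothetical shape rule: letters -> 'A', digits -> '9', other chars kept."""
    shape = re.sub(r"[A-Za-z]", "A", value)
    return re.sub(r"[0-9]", "9", shape)


def fingerprint(values: list[str]) -> Counter:
    """Toy column fingerprint: how often each value shape occurs."""
    return Counter(value_shape(v) for v in values)


def similarity(fp_a: Counter, fp_b: Counter) -> float:
    """Weighted Jaccard similarity of two normalised shape distributions (0..1)."""
    total_a, total_b = sum(fp_a.values()), sum(fp_b.values())
    if not total_a or not total_b:
        return 0.0
    shapes = set(fp_a) | set(fp_b)  # Counter returns 0 for missing shapes
    num = sum(min(fp_a[s] / total_a, fp_b[s] / total_b) for s in shapes)
    den = sum(max(fp_a[s] / total_a, fp_b[s] / total_b) for s in shapes)
    return num / den


def tag_similar_columns(example_values: list[str], label: str,
                        columns: dict[str, list[str]],
                        threshold: float = 0.8) -> dict[str, str]:
    """Tag every column whose fingerprint resembles the labelled example.

    Column names are never inspected, so matching does not depend on field names.
    """
    reference = fingerprint(example_values)
    return {name: label for name, values in columns.items()
            if similarity(reference, fingerprint(values)) >= threshold}


# Usage: one labelled example field, then a scan of other (arbitrarily named) columns.
examples = ["CLM-000123", "CLM-004567", "CLM-998877"]
columns = {
    "claim_ref": ["CLM-111111", "CLM-222222"],
    "policy_holder": ["Alice Smith", "Bob Jones"],
    "c7": ["CLM-333333", "CLM-444444", "CLM-555555"],
}
print(tag_similar_columns(examples, "insurance claim number", columns))
# {'claim_ref': 'insurance claim number', 'c7': 'insurance claim number'}
```

A production system would combine many more signals, such as value dictionaries, statistics and learned models. The sketch only shows why format-level fingerprints can match fields regardless of their names.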

Hitachi V is getting technology that has been adopted by customers in the financial services, healthcare and pharmaceuticals industries to support analytics and data science projects, pinpoint compliance-sensitive data and improve data governance.

It can be applied on-premises or in the cloud to large volumes of data in Hadoop, SQL, Amazon Web Services (AWS), Microsoft Azure and Google Cloud environments.

Cloudian goes Splunk

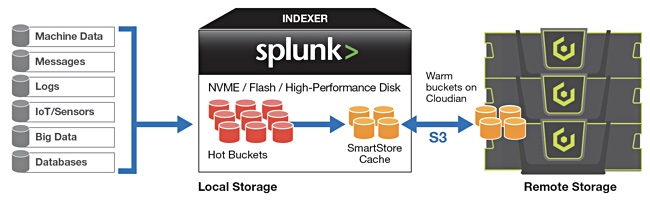

Cloudian has said its HyperStore object storage combined with Splunk’s SmartStore feature can provide an exabyte-scalable, on-premises storage pool separate from Splunk indexers.

The firm claims the growth of machine and unstructured data is breaking the distributed scale-out model, which combines compute and storage in the same devices. Pairing Splunk SmartStore with Cloudian HyperStore decouples the compute and storage layers, so each can be scaled independently.

A SmartStore-enabled index minimizes its use of local storage, with the bulk of its data residing remotely on lower-cost Cloudian HyperStore. When a bucket rolls from hot to warm, the Splunk indexer uploads a copy of it to HyperStore and keeps the bucket locally until it is evicted to make space for active datasets.

HyperStore becomes the location for master copies of warm buckets, while the indexer’s local storage is used for hot buckets and cache copies of warm buckets which contain recently rolled over data, data currently participating in a search or highly likely to participate in a future search. Searching for older datasets that are now remote results in a fetch of the master copy from the HyperStore.
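The bucket lifecycle described above can be modelled roughly as follows. This is a toy sketch, not Splunk’s implementation: the class and method names are hypothetical, cache capacity is counted in buckets rather than bytes, and least-recently-used eviction is an assumption standing in for SmartStore’s real cache manager. Hot buckets live only locally. Rolling to warm uploads a master copy to the remote store (standing in for HyperStore) and keeps a local cached copy. A search for an evicted bucket triggers a remote fetch.

```python
from collections import OrderedDict


class SmartStoreIndexerModel:
    """Hypothetical toy model of a SmartStore-style indexer plus remote object store."""

    def __init__(self, cache_capacity: int):
        self.cache_capacity = cache_capacity  # max warm-bucket copies kept locally
        self.hot = {}                         # hot buckets: local only, never evicted
        self.warm_cache = OrderedDict()       # cached warm copies, least recent first
        self.remote = {}                      # stands in for HyperStore master copies
        self.remote_fetches = 0               # count of cache misses served remotely

    def write_hot(self, bucket_id: str, data: bytes) -> None:
        self.hot[bucket_id] = data

    def roll_to_warm(self, bucket_id: str) -> None:
        data = self.hot.pop(bucket_id)
        self.remote[bucket_id] = data         # upload the master copy
        self._cache(bucket_id, data)          # keep a local cached copy for now

    def _cache(self, bucket_id: str, data: bytes) -> None:
        self.warm_cache[bucket_id] = data
        self.warm_cache.move_to_end(bucket_id)
        while len(self.warm_cache) > self.cache_capacity:
            # Evict the least recently used copy; its master copy stays remote.
            self.warm_cache.popitem(last=False)

    def search(self, bucket_id: str) -> bytes:
        if bucket_id in self.hot:
            return self.hot[bucket_id]
        if bucket_id in self.warm_cache:      # cache hit: mark as recently used
            self.warm_cache.move_to_end(bucket_id)
            return self.warm_cache[bucket_id]
        data = self.remote[bucket_id]         # cache miss: fetch the master copy
        self.remote_fetches += 1
        self._cache(bucket_id, data)
        return data


# Usage: three buckets roll to warm, but only two fit in the local cache.
idx = SmartStoreIndexerModel(cache_capacity=2)
for b in ["b1", "b2", "b3"]:
    idx.write_hot(b, b.encode())
    idx.roll_to_warm(b)
idx.search("b1")            # b1 was evicted locally, so it is fetched remotely
print(idx.remote_fetches)   # 1
```

The design point the model illustrates is that local disk only needs to hold hot data plus a working set of warm data. The remote store holds the authoritative copies, so compute and storage capacity can grow separately.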

You can download a Cloudian-Splunk briefing doc to find out more.

Scale Computing momentum

Hyperconverged infrastructure appliance vendor Scale Computing claims to have achieved record sales in Q4, driven by its OEM partnerships and edge-based deal activity. It exited 2019 with total software revenue growing at over 90 per cent, and says 2019 was its best year yet.

Scale says it added hundreds of new customers and announced an OEM partnership with Acronis, offering Acronis Cyber Backup to customers through Scale Computing’s channels. Via its Lenovo partnership, it says customer wins included Delhaize, Coca-Cola Beverages Africa, the Zillertaler Gletscherbahn, National Bank of Kenya, beIN Sports, and De Forensische Zorgspecialisten.

In 2019 NEC Corporation of America and NEC Enterprise Solutions (EMEA) announced a new hyperconverged infrastructure (HCI) solution powered by Scale Computing’s HC3 software.

Jeff Ready, Scale’s CEO and co-founder, said: “In 2020, we anticipate even higher growth for Scale Computing as a leading player in the edge computing and hyper-converged space.”

Scality RINGs in changes

RING object storage software supplier Scality said it experienced record growth in 2019 from both customer expansions and “new logo” customers. Scality was founded 10 years ago and said it has recruited 50 new enterprise customers from around the world, across a broad range of industries and use-cases.

Scality’s largest customer stores more than 100PB of object data. Scality CEO and co-founder Jerome Lecat said: “Now in our 11th year, we’re really proud to be able to say that eight of our 10 first customer deployments are still in production and continue to invest in the Scality platform.”

He reckons: “It’s clear that Scality RING is a storage solution for the long-term; on a trajectory to average at least a 20-year lifespan after deployment. That’s a solid investment.”

Will Scality have IPO’d or been acquired by 2030 though? That’s an interesting question.

WekaIO we go

Scale-out, high-performance filesystem software startup WekaIO said it grew revenue by 600 per cent in its fiscal 2019 compared to 2018. The firm said its product was adopted by customers in AI, life sciences, and financial analysis.

Liran Zvibel, co-founder and CEO at WekaIO, claimed: “We are the only tier-1, enterprise-grade storage solution capable of delivering epic performance at any scale on premises and on the public cloud, and we will continue to fuel our momentum by hiring for key positions and identifying strategic partnerships.”

WekaIO said its WekaIO Innovation Network of channel partners grew to 67 partners in 2019, a 120 per cent growth rate. It is 100 per cent channel-focused and announced technology partnerships with Supermicro, Dell EMC, HPE, and NVIDIA in 2019. It said there was 300 per cent growth in AWS storage, topping 100PB in 2019.

Shorts

Datera has announced that its Datera Data Services Platform is now Veeam Ready in the Repository category. This enables Veeam customers to back up VMware primary data running on Datera primary storage to another Datera cluster, so providing continuous availability.

Dell EMC Isilon has been awarded a Technology & Engineering Emmy by the National Academy of Television Arts and Sciences for early development of hierarchical storage management systems. It will be presented at the forthcoming NAB show, April 18-22, in Las Vegas.

HiveIO announced Hive Fabric 8.0, which protects virtual machines (VMs) and user data with a Disaster Recovery (DR) capability that integrates with cloud storage such as Amazon S3 and Azure. The release also incorporates business intelligence (BI) tools into Hive Sense, which proactively notifies HiveIO of issues within a customer environment.

HPE has added replication over Fibre Channel to its Primera arrays as an alternative to existing Remote Copy support over Internet Protocol (IP). It claims Primera is ready to protect any mission-critical application, regardless of the existing network infrastructure. Remote Copy is, HPE says, a continuous-availability product and “set it and forget it” technology.

NVMe-over-Fabrics array startup Pavilion is partnering with Ovation Data Services Inc. (OvationData) to bring its Hyperparallel Flash Array (HFA) technology to OvationData’s Seismic Nexus and Technology Centers. OvationData is a data services provider for multiple industries in Europe and the United States, with a concentration on seismic data for oil and gas exploration.

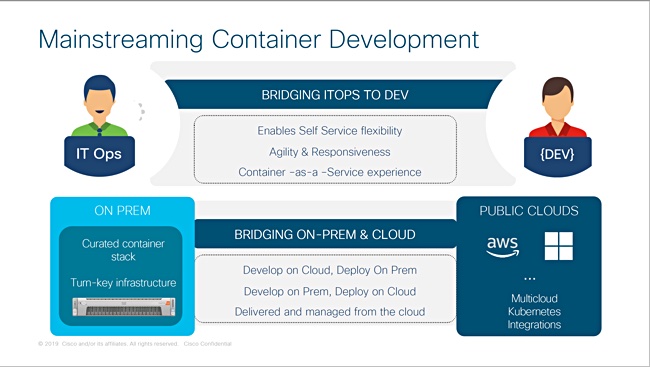

Red Hat announced the general availability of Red Hat OpenShift Container Storage 4, which delivers integrated, multi-cloud storage to Red Hat OpenShift Container Platform users. It uses the Multi-Cloud Object Gateway from Red Hat’s 2018 acquisition of NooBaa.

Quantum has also won a Technology & Engineering Emmy for its contributions to the development of hierarchical storage management (HSM) systems, in its case StorNext, for the media and entertainment industries. This Emmy will also be presented at the forthcoming NAB show, April 18-22, in Las Vegas.

SQream announced a new release of its flagship data analytics engine, SQream DB v2020.1. It includes native HDFS support, which SQream says dramatically improves data offloading and ingest when deployed alongside Hadoop data lakes. SQream DB can now write data and intermediate results back to HDFS, not just read from it, so other data consumers in a Hadoop data pipeline can use them. SQream has also added support for the ORC columnar format and S3.

Container security startup Sysdig has announced a $70m Series E funding round, taking total funding to $206m. The round was led by Insight Partners with participation from previous investors Bain Capital Ventures and Accel. Glynn Capital also joined this round, along with Goldman Sachs, which had been a Sysdig customer for two years. Sysdig has also set up a Japanese subsidiary, Sysdig Japan GK.

People

Dell Technologies has promoted Adrian McDonald to its EMEA President position. He will continue his role as global lead for the Mosaic Employee Resource Group at Dell Technologies, which represents and promotes cultural inclusion and the benefits of cultural intelligence. In December last year Dell appointed Bill Scannell as its global sales organisation leader, with Aongus Hegarty taking the role of President of International Sales responsible for all markets outside of North America.

Cloud file services supplier Nasuni has appointed Joel Reich, former executive vice president at NetApp, and Peter McKay, CEO of Snyk and former Veeam co-CEO, to its board of directors.

Software-defined storage supplier SoftIron announced the appointment of Paul Harris as Regional Director of Sales for the APAC region.

WekaIO has appointed Intekhab Nazeer as Chief Financial Officer (CFO). He comes to WekaIO from Unifi Software (acquired by Dell Boomi), a provider of self-service data discovery and preparation platforms, where he was CFO.

Customer wins

In the UK the Liverpool School of Tropical Medicine (LSTM) has chosen Cloudian’s HyperStore object storage to manage and protect its growing data. Data will be offloaded onto the Cloudian system from what the firm says is an expensive NetApp NAS filer.

HPE has been selected by Zenuity, a developer of software for self-driving and assisted driving cars, to provide artificial intelligence (AI) and high-performance computing (HPC) infrastructure to be used for developing autonomous driving (AD) systems.

Books

Intel has published a 400+ page book on (Optane) Persistent Memory programming. The book introduces persistent memory technology and answers questions that software developers and system and cloud architects may have:

- What is persistent memory?

- How do I use it?

- What APIs and libraries are available?

- What benefits can it provide for my application?

- What new programming methods do I need to learn?

- How do I design applications to use persistent memory?

- Where can I find information, documentation, and help?

It’s available in print and digital (PDF) format.

NetApp has also published an eBook, Migrating Enterprise Workloads to the Cloud. It provides hands-on guidelines, including how Cloud Volumes ONTAP supports enterprise workloads during and after migration. Unlike the Intel Persistent Memory brain dump, this is more of a marketing spiel – and is 16 pages in landscape format.