NetApp’s fiscal fourth quarter, ended April 24, deteriorated as the pandemic struck, exacerbating existing weaknesses in product and cloud sales.

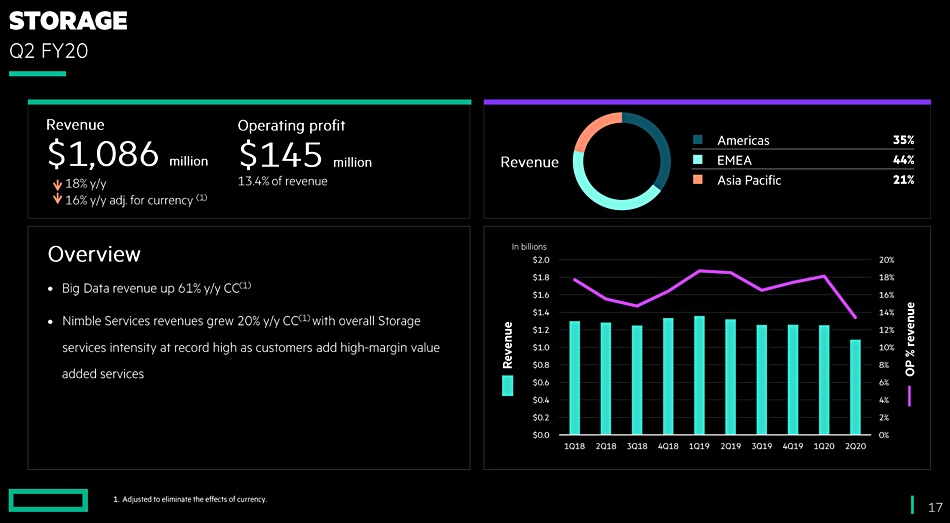

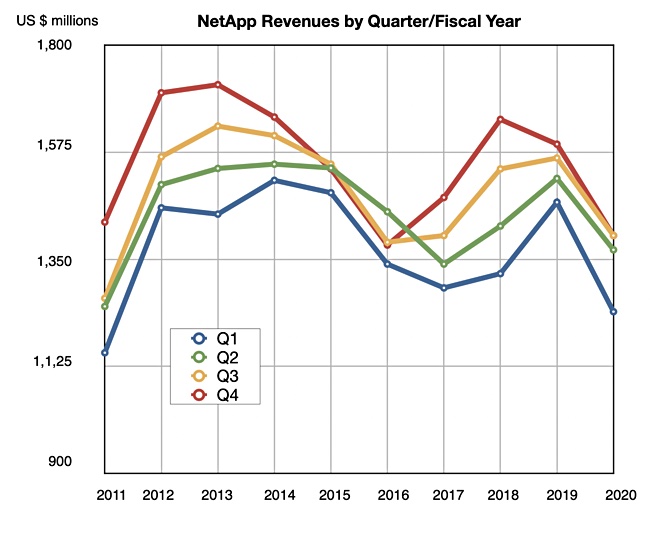

NetApp’s revenue for the final quarter of fiscal 2020 was $1.4bn, down 11.9 per cent from last year’s $1.59bn. Net income was $196m, down 50.5 per cent from last year’s $396m.
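The headline percentages follow from the usual year-over-year change formula. A minimal Python sketch reproduces them from the article’s figures in $m; the $1.4bn revenue figure is rounded, so that result is approximate, and the helper name `pct_change` is hypothetical:

```python
def pct_change(current: float, previous: float) -> float:
    """Percentage change from the previous value to the current one."""
    return (current - previous) / previous * 100


# Quarterly figures in $m, as reported in the article (revenue is rounded).
revenue_change = pct_change(1400, 1590)
net_income_change = pct_change(196, 396)

print(f"Revenue change: {revenue_change:.1f}%")
print(f"Net income change: {net_income_change:.1f}%")
```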

Full fy2020 revenues of $5.41bn were NetApp’s lowest in nine years, but annual net income of $819m was the second highest in at least ten years, exceeded only by last year’s $1.17bn.

CEO George Kurian said: “The quarter started off well, and we were tracking to our targets until countries around the globe began going into lockdown. In April, the pace of business slowed significantly and our visibility was reduced.”

William Blair analyst Jason Ader summed things up: “While certain customers accelerated transactions to get ahead of the shutdown and address the demands of remote work, this dynamic was more than offset by the pace of business slowing in April, as many customers delayed large storage purchases.”

Quarterly financial summary:

- $396m in free cash flow

- Gross margin 66.9 per cent

- $0.88 earnings per share compared to $1.59 a year ago

- Cash, cash equivalents & investments – $2.88bn

Product revenues

Product revenues were $793m. Software maintenance brought in a further $267m ($242m a year ago), and hardware maintenance and other services $341m ($350m). These maintenance and services lines accounted for about 40 per cent of revenues for the full year, and are an indication of NetApp’s massive installed customer base.

CFO Mike Berry said: “The combination of software and hardware maintenance and other services continues to be an incredibly profitable business for us, with gross margin of 83.2 per cent, which was up one point year-over-year.”

Products are divided into strategic (all-flash FAS products HW + SW, private cloud systems, enterprise software license agreements, and other optional add-on software products) and mature (hybrid FAS products, OEM products, and branded E-Series). Both segments declined year-over-year: strategic product revenues were $544m compared to $623m a year ago, and mature product revenues fell to $249m ($377m).
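The segment figures reconcile with the headline revenue numbers. A short sketch checks this, assuming that strategic products, mature products, software maintenance, and hardware maintenance and other services together make up total revenue (the sums suggest they do, but the article does not say so explicitly). The variable names are illustrative:

```python
# Quarterly revenue components in $m as (this year, year ago), from the article.
strategic = (544, 623)
mature = (249, 377)
software_maintenance = (267, 242)
hardware_maintenance_and_services = (341, 350)

# Product revenue is strategic plus mature.
product_now = strategic[0] + mature[0]
product_prev = strategic[1] + mature[1]

# Assumption: product plus the two maintenance lines equals total revenue.
total_now = product_now + software_maintenance[0] + hardware_maintenance_and_services[0]
total_prev = product_prev + software_maintenance[1] + hardware_maintenance_and_services[1]

# Share of this quarter's revenue coming from maintenance and services.
maintenance_share = (
    (software_maintenance[0] + hardware_maintenance_and_services[0]) / total_now * 100
)

print(f"Product: ${product_now}m now vs ${product_prev}m a year ago")
print(f"Total: ${total_now}m now vs ${total_prev}m a year ago")
print(f"Maintenance and services share this quarter: {maintenance_share:.1f}%")
```

The totals land within rounding of the reported $1.4bn and $1.59bn. The quarterly maintenance share comes out a little above the roughly 40 per cent the article cites for the full year.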

The all-flash array run rate was $2.6bn, with quarterly revenue down three per cent to $656m. Susquehanna International Group analyst Mehdi Hussein talked of “four consecutive quarters of year-over-year decline in all-flash array [revenues].” It’s not good, but there is plenty of scope for sales to existing customers, as only 24 per cent of installed NetApp arrays are all-flash.

Aaron Rakers, a Wells Fargo senior analyst, pointed out that the “private cloud business (SolidFire, NetApp HCI, StorageGRID, and services) was at a $408m annual run rate in the quarter (+19 per cent q/q, -32 per cent y/y).”
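Annual run rates like these are conventionally a quarter’s revenue multiplied by four. The article does not define the term, so that convention is an assumption here. Under it, the $656m all-flash quarter gives the $2.6bn run rate, and the $408m private cloud run rate implies roughly $102m of revenue in the quarter. The helper name `annual_run_rate` is hypothetical:

```python
def annual_run_rate(quarterly_revenue: float) -> float:
    """Annualise one quarter's revenue (assumed convention: quarter x 4)."""
    return quarterly_revenue * 4


# All-flash quarterly revenue in $m, from the article.
all_flash_run_rate = annual_run_rate(656)

# Back-calculate the private cloud quarter from its $408m run rate.
private_cloud_quarterly = 408 / 4

print(f"All-flash run rate: ${all_flash_run_rate / 1000:.1f}bn")
print(f"Implied private cloud quarter: ${private_cloud_quarterly:.0f}m")
```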

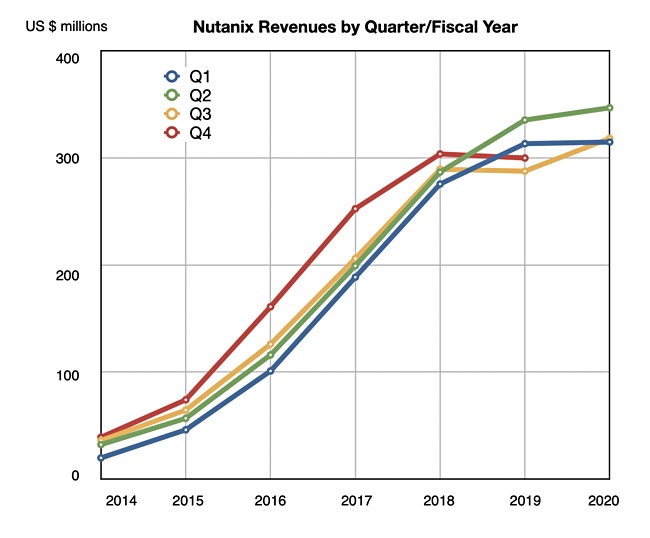

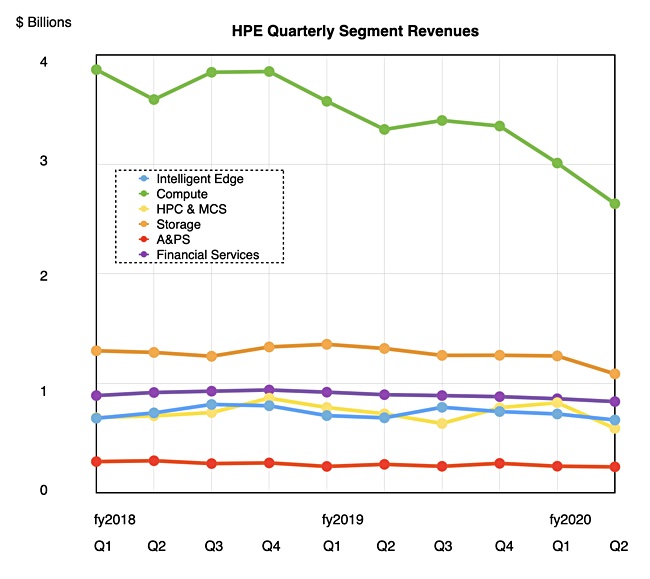

Quarterly revenue trend

What went wrong this quarter was that sales close rates fell in April. But a chart of quarterly revenues by fiscal year shows that quarterly revenues have been falling year-over-year since Q4 fy2019; that’s five straight quarters, with the pandemic steepening the curve in this latest quarter. The problem is more systemic than the pandemic.

Comment

Several market forces are hitting NetApp, given its traditional reliance on on-premises arrays:

- Hyperconverged infrastructure (HCI) appliances and server SANs

- The rise of public cloud storage

- A move to subscription-based revenues

NetApp is adapting to each, with mixed success. Its disaggregated HCI product, NetApp HCI, is not making a perceptible impact on an HCI market dominated by Dell Technologies and Nutanix.

Public cloud-based (also subscription-based) revenues are growing fast: cloud data services annualised recurring revenue was approximately $111m, up 113 per cent year-over-year. But that annualised figure is equivalent to just 7.9 per cent of a single quarter’s revenue, and about 2 per cent of NetApp’s full fy2020 revenues. William Blair analyst Jason Ader commented that this “ramp has been well below management targets”.
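The 7.9 per cent figure sets an annualised number against one quarter’s revenue. A quick sketch puts the $111m ARR against both the quarterly and the full-year base, using the article’s rounded figures in $m; the variable names are illustrative:

```python
cloud_arr = 111            # $m, cloud data services annualised recurring revenue
quarterly_revenue = 1400   # $m, Q4 fy2020 revenue (rounded)
fy2020_revenue = 5410      # $m, full fy2020 revenue

# Annualised ARR against a single quarter: overstates the share.
share_of_quarter = cloud_arr / quarterly_revenue * 100

# Annualised ARR against a full year: a like-for-like comparison.
share_of_year = cloud_arr / fy2020_revenue * 100

print(f"ARR vs one quarter's revenue: {share_of_quarter:.1f}%")
print(f"ARR vs full-year revenue: {share_of_year:.1f}%")
```

Comparing annual with annual gives the smaller share, which makes the gap to management targets look wider, not narrower.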

NetApp is circumspect about subscriptions, with Kurian saying: “I don’t think we are trying to say that at this point in time that we are going to transition our entire business over to a subscription model. I think we need to have that in our portfolio and we will have it, but we are selective about qualifying which customers we provide subscription offerings to.”

This contrasts with Nutanix, Cohesity and others, which are moving full tilt into subscriptions.

Broadly speaking, NetApp is an on-premises, private cloud supplier in a hybrid cloud world that’s selling too little product and moving too slowly in adding public cloud and subscription-based revenues.

The company’s plan is to get in front of more buyers and more accounts, so it has hired 200 primary sales heads focused on new customer acquisition, one quarter ahead of its previous schedule. According to the company, they take three to four quarters to become productive.

This background adds context to this month’s hire of Cesar Cernuda, previously head of Microsoft Latin America, as President in charge of sales, marketing, services, and support.

Earnings call

In the earnings call, Kurian outlined “two clear priorities, returning to growth with share gains in our storage business … and scaling our … Cloud Data Services business. We will exploit competitive transitions and the accelerating shift to cloud to expand the usage of our products and services.”

NetApp has “created a separate globally integrated structure for our Cloud Data Services sales team with the sole focus of scaling our Cloud Services business by maximizing our partnerships with the world’s biggest public clouds.”

CFO Mike Berry said: “Our acquisition strategy will remain focused on bolstering our strategic roadmap, particularly within our Cloud Data Services business.”

Part of that business is providing virtual desktop infrastructure (VDI). Kurian said: “we are building out a modern workplace solution that combines a global file consolidation solution that we acquired in Q4 called Talon and a virtual desktop infrastructure and Desktop-as-a-Service solution provider called CloudJumper that we acquired in Q1 of fiscal year ’21.

“The combination of Cloud Volumes, Talon and CloudJumper gives us an extremely strong offering in the market, and it’s particularly suited to the broad transition of virtual desktop services to what we see as Windows Virtual Desktop.”

NetApp product leadership

Kurian is emphatic that NetApp occupies a leadership position in storage technology. “We have the leadership position, not second, not third, the leadership position in file, block, object technologies, which is the full range, and we have uniquely differentiated Cloud Data Services.”

Yet the company is still not selling enough product. Other suppliers sell more all-flash arrays, more filers, more object storage, and more HCI systems than NetApp.

NetApp’s main competitor, Dell Technologies, this month announced the PowerStore unified, mid-range array and aims to grow its business with that product. Kurian commented: “To this particular platform, it is a long delay and still very much incomplete. And so, we see it as [a] complete opportunity for us. Now that they have announced it, we’re going after them. Stay tuned.”

Revenue guidance for next quarter is $1.17bn at the mid-point, down 5.6 per cent year-over-year. NetApp gave no guidance for the full fy2021 year, as the outlook is too fuzzy.
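The guidance implies a year-ago comparison figure. Working backwards from the $1.17bn mid-point and the 5.6 per cent decline gives an implied year-ago first-quarter revenue of roughly $1.24bn. Both inputs are rounded, so the result is approximate, and the variable names are illustrative:

```python
guidance_midpoint = 1170  # $m, next-quarter revenue guidance mid-point
decline = 0.056           # 5.6 per cent year-over-year decline

# If guidance = previous * (1 - decline), then previous = guidance / (1 - decline).
implied_prev = guidance_midpoint / (1 - decline)

print(f"Implied year-ago quarter: ${implied_prev:,.0f}m")
```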