The X86 CPU is a general purpose processing marvel but it is poor at handling the repetitive processing of billions of instructions. Dedicated parallelised hardware such as Nvidia’s GPUs accelerates these operations.

Other suppliers are pursuing this specialised processing hardware idea with dedicated storage and networking processors called Data Processing Units (DPU).

As the volume of data stored and sent over networks by enterprises continues to rise, DPU developers are betting that their products will be needed to accelerate storage and networking functions carried out by industry standard servers. These DPUs can be based on different kinds of processing hardware – for example, ASICs, FPGAs and SoCs with customised Arm CPUs.

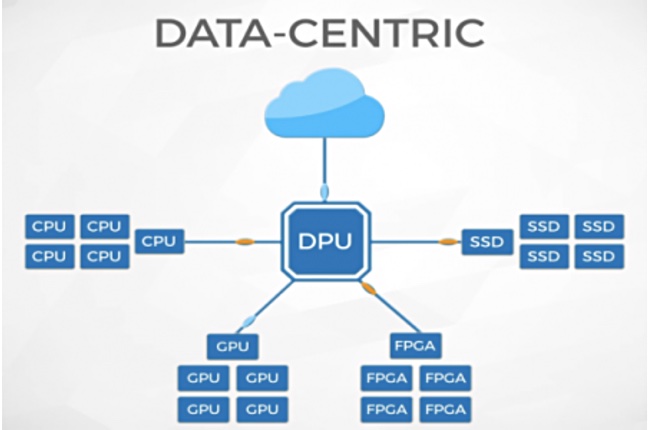

In a recent blog, Kevin Deierling, an SVP at Mellanox, an Nvidia subsidiary, argues that the DPU has “become the third member of the data centric accelerated computing model [where] the CPU is for general purpose computing, the GPU is for accelerated computing and the DPU, which moves data around the data centre, does data processing”.

A DPU is a system on a chip (SoC), containing three elements, according to Deierling.

- Multi-core CPU, e.g. Arm

- Network interface capable of parsing, processing and transferring data at line rate – or at the speed of the rest of the network – to GPUs and CPUs,

- Set of acceleration engines that offload and improve applications performance for AI and machine learning, security, telecommunications, and storage, among others.

Deierling reveals his Mellanox bias with this statement: “The DPU can be used as a stand-alone embedded processor, but it’s more often incorporated into a SmartNic, a network interface controller,” such as Mellanox products. A SmartNIC carries out network traffic data processing, unlike a standard NIC where the CPU handles much of the workload. So, Deierling is making the point here that DPUs offload work from a host X86 CPU.

Arm DPU ideas

Several storage-focused DPU products using Arm chips have recently arrived on the scene. Wells Fargo senior analyst Aaron Rakers explained Arm’s DPU ideas to subscribers. He says Chris Bergey, Arm SVP for infrastructure, “shares Nvidia’s vision of the datacenter as a single compute engine (CPU + GPU + DPU). He noted the company is focused on offloading data from the processor via SmartNICs and on the use of data lakes. We continue to see an Nvidia Arm CPU in the datacenter as an intriguing possibility.”

He adds: “As Nvidia looks to integrate / leverage Mellanox, a long-time user of Arm, we question whether Nvidia could be on a path to further deepen / develop their use of Arm for datacenter solutions going forward.”

Let’s have a look at some of the DPU players, starting with AWS.

AWS Nitro

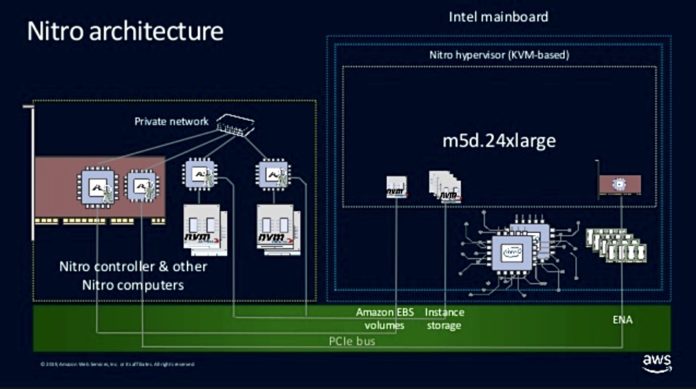

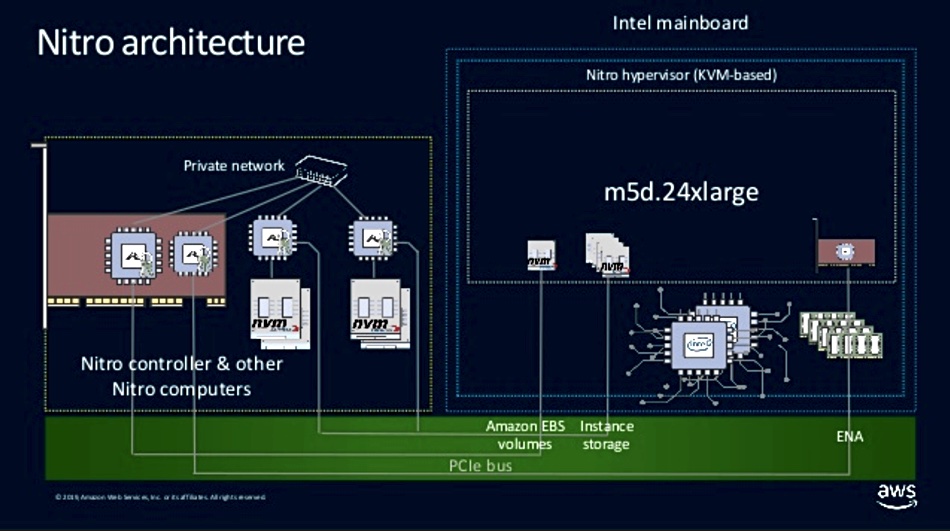

Amazon Web Services has decomposed traditional servers, adding Nitro IO acceleration cards (ASICs) to handle VPC (Virtual Private Cloud), EBS, Instance Storage, security and more with an overall Nitro Card Controller.

An AWS Elastic Compute Cloud instance is based on PCIe-connected Nitro cards plus X86 or Arm processors and DRAM. There are various EC2 instance types – either general purpose or optimised for compute, memory, storage, machine learning and scale-out use cases.

Diamanti

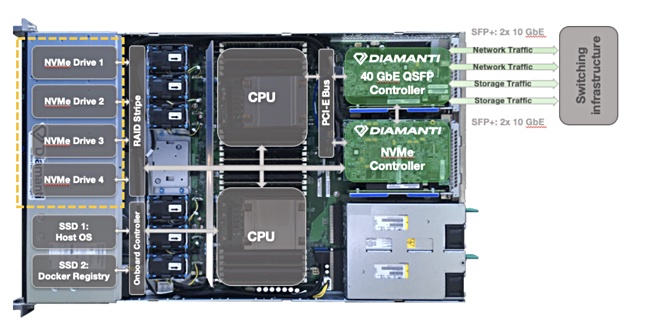

Diamanti is developing bare-metal hyperconverged server hardware to run Kubernetes-orchestrated containers more efficiently than standard servers. Diamanti D10, D20 and G20 servers are fitted with Ultima-branded network and storage offload cards – DPUs with PCIe interfaces.

They offload storage and networking traffic from the server’s Xeon CPUs to their dedicated processors, which have hardware-based queues.

The network card has a 40GbitE QSFP controller and is Kubernetes CNI and SR-IOV-enabled. It links the server to a switched infrastructure. The controller can integrate with standard enterprise data centre networking topologies, delivering overlay and non-overlay network interfaces, static and dynamic IP assignment and support for multiple interfaces per Kubernetes pod.

The storage card links the server to an NVMe storage infrastructure. It is an NVMe controller and Kubernetes CSI-enabled with data protection and disaster recovery features such as mirroring, snapshots, multi-cluster asynchronous replication, and backup and recovery. Replication is based on changed snapshot blocks to reduce network traffic.
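The idea behind replicating only changed snapshot blocks can be illustrated with a small sketch. This is not Diamanti’s implementation, which is not described here; the 4KB block size and the function names `changed_blocks` and `apply_delta` are assumptions made purely for illustration. A real system would more likely track changed blocks at write time than compare whole snapshots.

```python
BLOCK_SIZE = 4096  # assumed block size, for illustration only


def split_blocks(data, block_size=BLOCK_SIZE):
    """Cut a snapshot image into fixed-size blocks (the last may be short)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def changed_blocks(old, new, block_size=BLOCK_SIZE):
    """Return {block_index: block_bytes} for blocks that differ in `new`."""
    old_blocks = split_blocks(old, block_size)
    new_blocks = split_blocks(new, block_size)
    delta = {}
    for index, block in enumerate(new_blocks):
        # A block is sent if it is new (beyond the old snapshot) or modified.
        if index >= len(old_blocks) or block != old_blocks[index]:
            delta[index] = block
    return delta


def apply_delta(replica, delta, new_length, block_size=BLOCK_SIZE):
    """Bring a remote replica of the old snapshot up to the new snapshot."""
    blocks = split_blocks(replica, block_size)
    for index, block in sorted(delta.items()):
        if index < len(blocks):
            blocks[index] = block
        else:
            blocks.append(block)
    # Truncate in case the new snapshot is shorter than the old one.
    return b"".join(blocks)[:new_length]


if __name__ == "__main__":
    old = bytes(4 * BLOCK_SIZE)
    new = bytearray(old)
    new[2 * BLOCK_SIZE] = 1  # modify a single byte in block 2
    new = bytes(new)
    delta = changed_blocks(old, new)
    sent = sum(len(block) for block in delta.values())
    print(f"blocks sent: {sorted(delta)}, bytes sent: {sent} of {len(new)}")
```

In this toy case only one block out of four crosses the network, which is the traffic saving the changed-block approach is after.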

Both cards have Quality-of-Service features to ensure storage IOPS and network throughput consistency.

Fungible Inc

Startup Fungible Inc is developing a DPU that can compose server components and accelerate server IO.

According to Fungible, network and DPU devices can perform data-centric work better than general-purpose server CPUs. The idea is to dedicate the CPUs to application processing and have the DPUs look after the storage, security and network IO grunt work.

The startup is expected to formally launch its product later this year.

Pensando

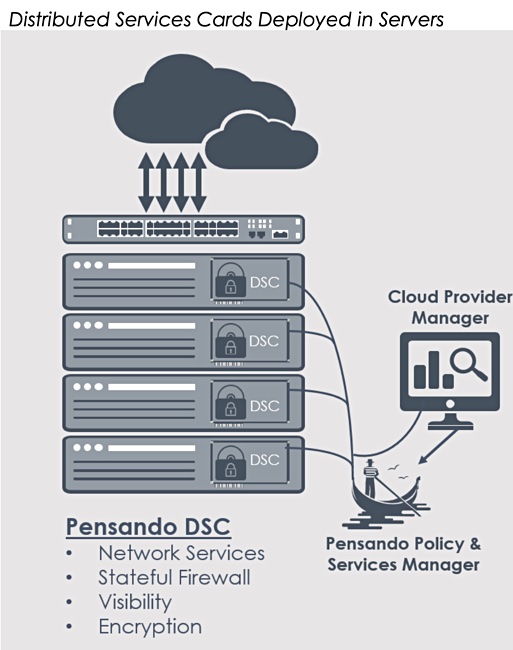

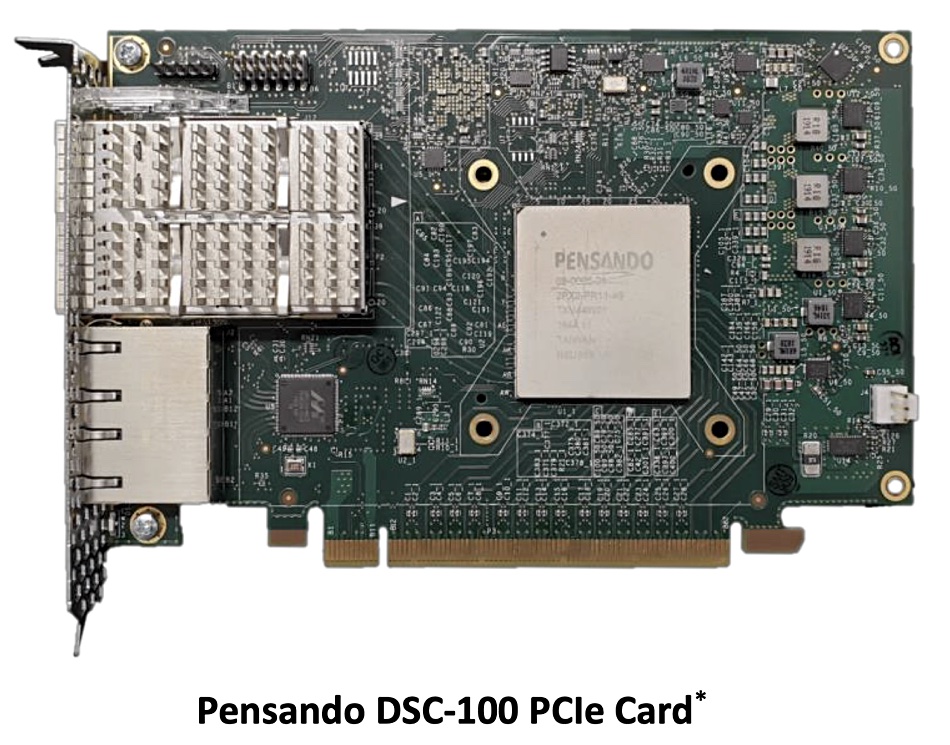

Pensando makes dedicated customisable P4 Capri processors which are located at a server’s edge, the place where it talks to networks and storage. They run a software stack delivering network, storage, and security services at cloud scale with, the company claims, minimal latency and jitter and low power requirements.

A Pensando Distributed Services Card (DSC-100) is installed in a host server to deliver the Capri processor hardware and software functions. This DSC-100 effectively combines the functionality of Diamanti’s separate network and storage cards.

Comment

None of the DPUs above can be classed as industry-standard. Unless included in a server or storage array on an OEM basis, they require separate purchase, management and support arrangements and add complexity. Therefore, the best scenario for enterprise customers is for the mainstream suppliers to treat DPUs as just another component to incorporate into their own systems. Intel’s position is also worth considering. The chipmaker already makes Ethernet switch silicon and Altera FPGAs and may be keen to protect its data centre silicon footprint from DPU interlopers.

Hyperscaler customers are a different story as they have the scale to buy direct from DPU suppliers and get DPUs customised for their needs. AWS Nitro ASICs are a classic example of this.