Sponsored

It’s one thing hearing a vendor talk about a new technology. The really interesting part comes when someone else puts that technology into real systems, at real customers, and starts running real-world applications.

One company that has been doing just that with Intel® Optane™ Technology is BroadBerry Data Systems, a UK-based company that is part of Intel’s very select group of Platinum Partners, giving it privileged access to the chip giant’s labs and engineers.

BroadBerry’s story began in 1989, when its founders started selling custom-made PCs and servers in the UK. Unlike many of the home-grown vendors that sprang up back then, BroadBerry navigated the dotcom bubble of the late 1990s and the crash that followed, focusing increasingly on industrial and rack-mountable server and storage systems and offering customers easy and extensive configuration.

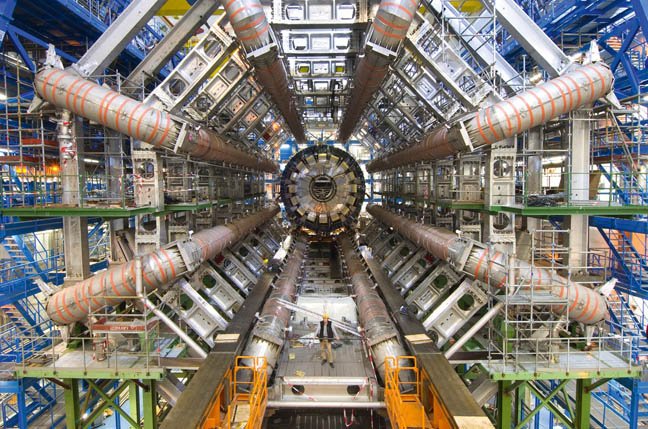

Over 30 years on, the company has expanded across Europe and the US, building a customer list that would turn much bigger rivals slightly green: England’s top ten universities, numerous research organisations including NASA and CERN, and commercial clients such as Google and Amazon.

One of BroadBerry’s advantages, says marketing manager Graham Hemson, is that as an independent it can put its customers’ needs first and take its pick of industry-leading components or software, such as Supermicro or Intel motherboards or the Open-E storage software stack, without being locked in to particular suppliers or technologies. At the same time, it is very close to its customers – it has been working with CERN since 2016, for example – and can build them a complete solution, including networking and storage, tuned precisely to the client’s needs and configured on site.

This approach also means it can move very quickly when it comes to validating and integrating new technology and components, something that can take months or more in larger, less nimble companies. This has meant it was particularly quick off the mark integrating Intel Optane into its server and storage solutions and putting the technology to work on some very demanding applications at some very interesting customers.

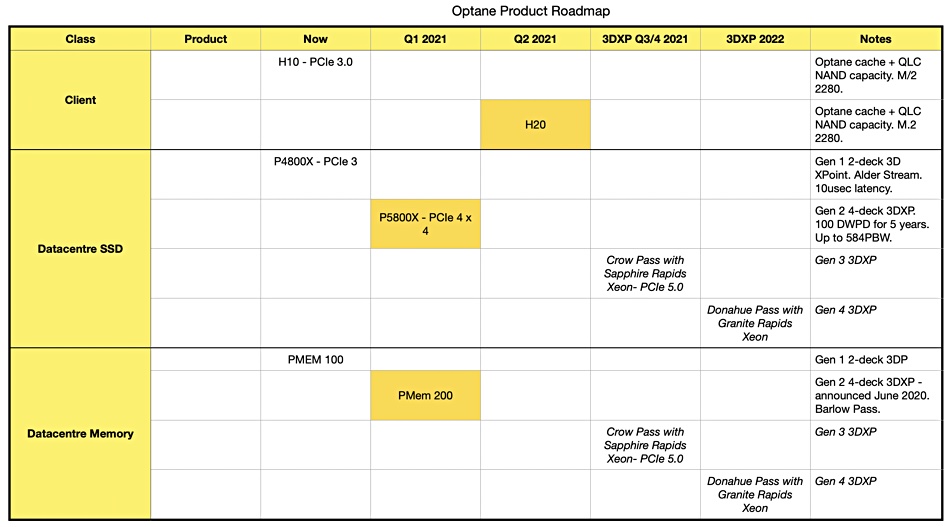

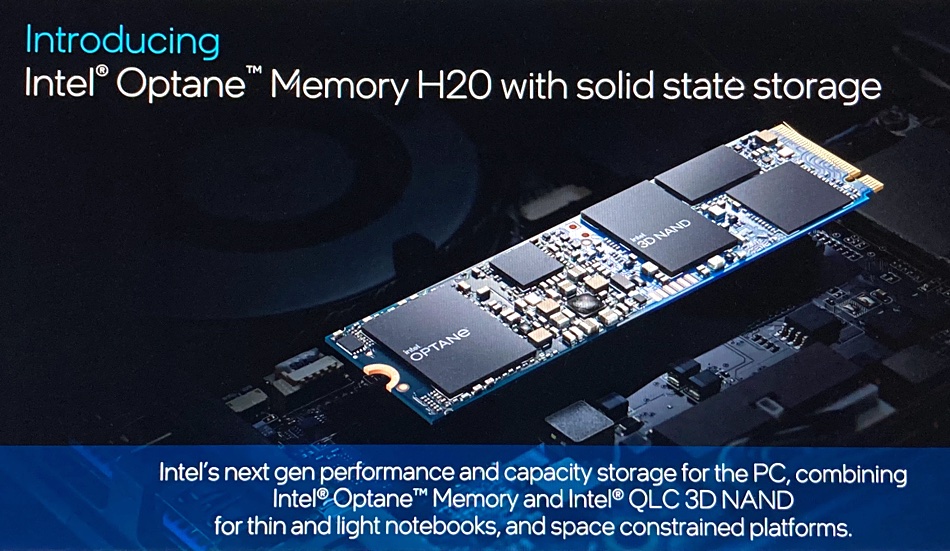

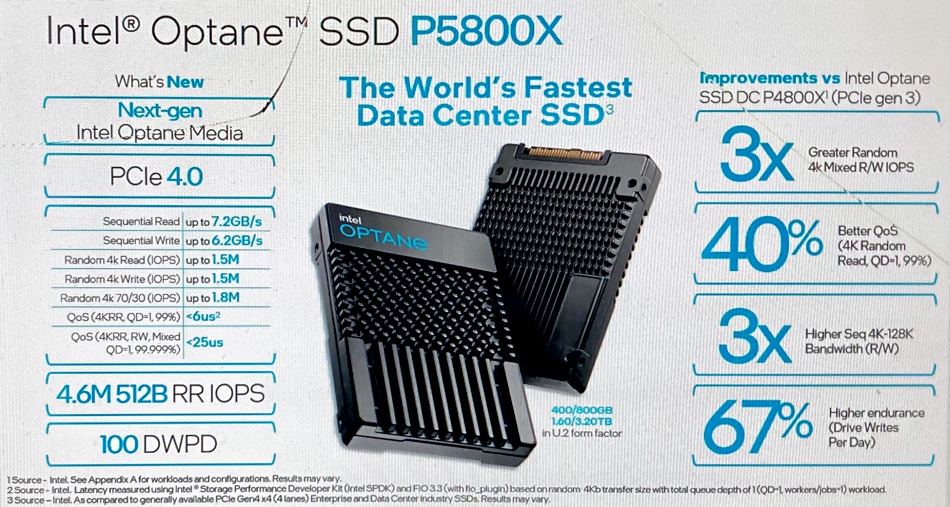

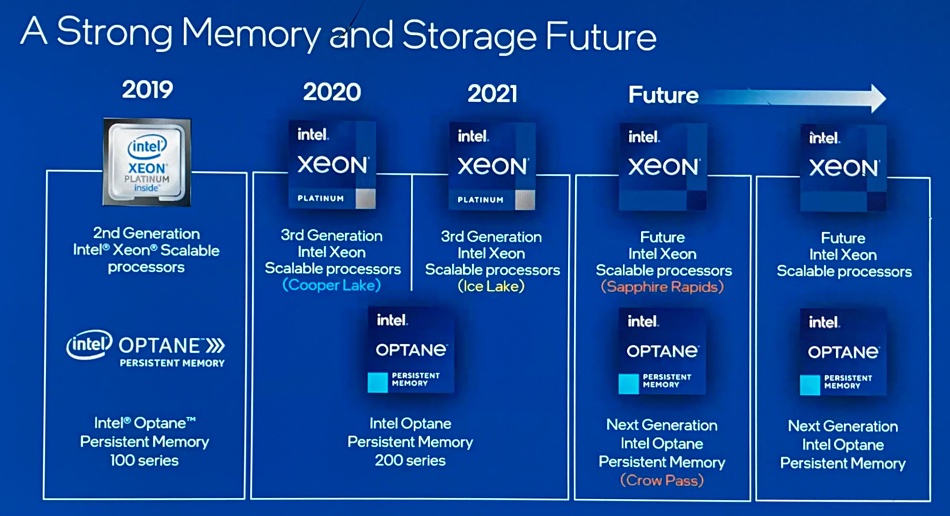

Intel Optane is a persistent memory technology based on 3D XPoint™ media. It offers non-volatile, high-capacity storage with low latency and near-DRAM performance, and it is both block- and byte-addressable. This means it can be packaged as NVMe SSDs, which replace or supplement conventional flash SSDs, or as DIMMs, offering the potential of a vast memory pool at a much lower cost than conventional DRAM.

More RAM or more storage? Yes, both

BroadBerry’s clients run demanding applications ranging from commercial big data to genome sequencing, geophysical analysis or, in the case of CERN, probing the very nature of matter itself. This usually means they need lots of DRAM and lots of very fast storage, so the appeal of Optane as both storage and memory should be fairly clear.

“If you’re looking at high capacity products, starting from, let’s say 500TB, then obviously the cost of an all-flash solution can go up quite high,” says Matthew Dytkowski, BroadBerry’s technical pre-sales manager, with remarkable understatement. Even as IT budgets come under pressure, he continues, “Everyone’s looking to see how they can … still have a decent amount of capacity whilst retaining performance and this is where Optane is coming into play.”

Dytkowski says one immediately obvious use case for Optane storage was as a write cache device in systems running databases and other workloads dominated by random I/O. This lets customers build a tiered hybrid setup that pairs Optane with either conventional flash drives or cheaper, higher-capacity hard disks. This delivers good results, he says, “for a fraction of the price of all-flash arrays.”
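To make the write-cache idea concrete, here is a minimal Python sketch of a write-back cache sitting in front of a slower capacity tier. It is a toy model, not how Open-E, vSAN or Storage Spaces Direct actually implement caching: the class name `WriteBackCache`, the LRU eviction policy and the block-level interface are all illustrative assumptions. It counts how many writes actually reach the capacity tier, which is what drives both performance and the wear on cheaper drives.

```python
from collections import OrderedDict


class WriteBackCache:
    """Hypothetical toy model: a small fast tier (standing in for Optane)
    absorbing writes destined for a larger, slower capacity tier."""

    def __init__(self, capacity_blocks: int):
        if capacity_blocks <= 0:
            raise ValueError("capacity_blocks must be positive")
        self.capacity_blocks = capacity_blocks
        self.cache = OrderedDict()  # block id -> data, oldest write first
        self.backing = {}           # stands in for the HDD/flash capacity tier
        self.backing_writes = 0     # writes that actually reach the capacity tier

    def write(self, block: int, data: bytes) -> None:
        # Overwriting a block already in the cache costs nothing on the
        # capacity tier; we just mark it as most recently written.
        if block in self.cache:
            self.cache.move_to_end(block)
        self.cache[block] = data
        if len(self.cache) > self.capacity_blocks:
            # Cache is full: evict the least recently written block
            # and destage it to the capacity tier.
            old_block, old_data = self.cache.popitem(last=False)
            self._destage(old_block, old_data)

    def read(self, block: int):
        # Serve from the fast tier if present, else from the capacity tier.
        if block in self.cache:
            return self.cache[block]
        return self.backing.get(block)

    def flush(self) -> None:
        # Destage every cached block, oldest first.
        while self.cache:
            block, data = self.cache.popitem(last=False)
            self._destage(block, data)

    def _destage(self, block: int, data: bytes) -> None:
        self.backing[block] = data
        self.backing_writes += 1


if __name__ == "__main__":
    cache = WriteBackCache(capacity_blocks=8)
    for i in range(1000):
        cache.write(i % 4, b"hot")  # 1,000 writes to four hot blocks
    print("before flush:", cache.backing_writes)
    cache.flush()
    print("after flush:", cache.backing_writes)
```

In this model, 1,000 writes cycling over four hot blocks with an eight-block cache never touch the capacity tier until `flush()` is called, at which point only four destage writes occur. Real caches add persistence guarantees, mirroring and smarter destaging policies, but the basic idea of absorbing repeated writes in the fast tier is similar.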

This was a surprise to some customers, he adds. Whilst they understood the concept of NVMe storage, they didn’t know about Optane. But once BroadBerry explained the technology, and its advantages over conventional flash drives in terms of endurance, he says, “it changed the game”.

BroadBerry has also been able to improve the performance of customers’ existing installations of its kit by adding Optane – something that might not be an option with other vendors’ hardware, due to warranty and certification issues.

Life enhancing, even for older drives

Customers are also increasingly looking at using Optane as a write cache device in VMware vSAN configurations, or with Microsoft Storage Spaces Direct, says Dytkowski. Using Optane in these applications reduces latency to microseconds, he says, “and latency is king.”

By using Optane as dedicated write cache, alongside other cheaper NVMe drives with lower endurance, the life of the latter is extended, he adds. “And so again, by bringing in Optane, we are achieving great performance, great results. And with a much better price point.”

This year has also seen customers get increasingly serious about virtual desktop infrastructure, says Dytkowski, as tech departments worked out how to respond to the shift to home working caused by the Covid-19 pandemic while keeping data within the data centre and ensuring security. Some customers even want to virtualize the workloads they would normally run on “heavyweight workstations”, he says.

This shift has played to the advantages of using Optane as NVRAM, says Dytkowski. “That allows customers to get more users or more virtual desktops on the one server, rather than buying multiple platforms.”

The year 2020 has also highlighted the importance of the biotech space, and the technical issues researchers face when running massive workloads. Gene processing, for example, eats up DRAM, which is very expensive. Meanwhile, many organisations in this field, being public sector or grant financed, have to be extremely careful with their budgets. Typically, clients are running 1TB of RAM per machine, so combining Optane memory modules with standard DRAM in a 7:3 ratio means a drastic cut in TCO.
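The 7:3 split is simple arithmetic: on a 1TB machine it means roughly 700GB of Optane and 300GB of DRAM. The minimal Python sketch that follows computes the cost of such a blend and the saving relative to an all-DRAM build. The per-GB prices are hypothetical caller-supplied inputs, not figures from BroadBerry or Intel, and the function names are illustrative. It also assumes capacities simply add up, which holds for configurations where both tiers are exposed as memory; in Intel’s Memory Mode the DRAM acts as a cache and does not add to visible capacity.

```python
from typing import Tuple


def memory_split(total_gb: float, pmem_fraction: float) -> Tuple[float, float]:
    """Split total capacity into (Optane GB, DRAM GB) for a given Optane share."""
    if not 0.0 <= pmem_fraction <= 1.0:
        raise ValueError("pmem_fraction must be between 0 and 1")
    pmem_gb = total_gb * pmem_fraction
    return pmem_gb, total_gb - pmem_gb


def blended_cost(total_gb: float, pmem_fraction: float,
                 dram_price_per_gb: float, pmem_price_per_gb: float) -> float:
    """Cost of a DRAM + Optane configuration; prices are assumptions supplied by the caller."""
    pmem_gb, dram_gb = memory_split(total_gb, pmem_fraction)
    return pmem_gb * pmem_price_per_gb + dram_gb * dram_price_per_gb


def saving_vs_all_dram(pmem_fraction: float, price_ratio: float) -> float:
    """Fractional saving versus all-DRAM, where
    price_ratio = (Optane price per GB) / (DRAM price per GB).

    Relative cost is (1 - f) + f * r, so the saving is f * (1 - r).
    """
    if not 0.0 <= pmem_fraction <= 1.0:
        raise ValueError("pmem_fraction must be between 0 and 1")
    return pmem_fraction * (1.0 - price_ratio)


if __name__ == "__main__":
    print(memory_split(1000, 0.7))           # ~700GB Optane, ~300GB DRAM
    print(saving_vs_all_dram(0.7, 0.5))      # hypothetical: Optane at half DRAM's $/GB
```

With a hypothetical Optane price at half of DRAM’s per-GB price, a 7:3 split cuts memory cost by about 35%; the real figure depends entirely on actual prices and on whether the workload tolerates Optane’s higher latency.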

In the case of one research institute looking to acquire a CPU/RAM intensive HPC cluster for genome work, says Dytkowski, by introducing Optane memory, BroadBerry was able to spec additional hardware for the same budget: “They were very pleased, because adding an additional machine to the entire cluster can shorten the research period.”

One important point, says Dytkowski, is to work with the customer to make sure the system is optimized for their workload, so they get the best out of Optane and the server or storage system in general. BroadBerry runs extensive stress tests on its machines, tailored to whatever workload the customer has specified.

But the customer may have to do some reworking on their older applications to take full advantage of the extra horsepower Optane potentially gives them, he adds. For example, older SQL queries or single-threaded applications might not see the full benefit, he explains, “but when you start to optimize, you unleash all the performance.”

And right now, unleashing all the performance you can get with storage is essential. As Dytkowski notes, “data demand is just crazy these days” and shows no sign of slowing down. At the same time, the sort of applications BroadBerry’s customers are running are going to demand ever more RAM. But BroadBerry’s experience at the sharp end shows a combination of a little optimization and a lot of Optane is closing the price-performance gap.

Sponsored by Intel