Security and content delivery network provider Akamai is launching a managed database service using Linode technology.

Akamai, which supplies high-availability services to enterprises, bought Linode in February this year for $900 million. Linode is a cloud hosting supplier with 11 datacenters in the US, Europe, and the Far East.

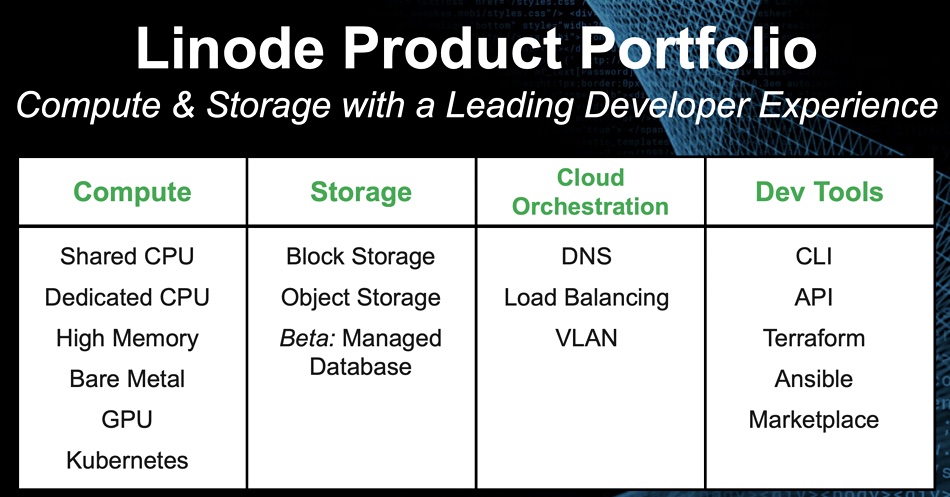

Linode provides a compute (virtual servers) and storage public cloud, with the storage component including Linode Block Storage, Linode Backup, and S3-compatible object storage. Linode had a managed database service in beta at the time of the Akamai acquisition, and this is now coming to market.

Will Charnock, senior director of engineering for Akamai's compute line of business, said: “Every web application needs a database. Being able to automate aspects of database management is critical for applications that need to be scalable, highly performant, and resilient.

“With the click of a button, developers can have a fully managed database deployed and ready to be populated.” The service is billed as a cloud operations (CloudOps) offering.

This Linode Managed Database (LMDB) service is the first product launch in Akamai’s compute line of business that was built around Linode’s portfolio. It supports MySQL now and will add PostgreSQL, Redis, and MongoDB by the end of June. LMDB offers flat-rate pricing, security and recovery measures, various deployment options, and high-availability cluster options.

Akamai claims users can hand common database deployment and maintenance tasks to Linode and save management time and effort. They can select high-availability configurations to ensure that database performance and uptime are never affected by server failure, Akamai added. Customers need less administration expertise to deploy applications, and the risk of downtime is lower than if they set up and ran a highly available database themselves, the company claimed.

Akamai delivers content to edge sites and can now deliver database services to them as well.

Akamai envisages e-commerce applications doing dynamic personalization using the managed database along with Kubernetes and FaaS (Function-as-a-Service). Another use case Akamai mentioned is healthcare, with trainers archiving surgery videos to help train new doctors. The object storage service would play a part here.

Akamai intends to expand into new managed services using Linode. There is obvious potential for adding a Backup-as-a-Service offering and, perhaps, one for file services.