Startup Lucidity claims its Autoscaler can automatically scale public cloud block storage up or down – depending on workload – without downtime, cutting storage costs by up to 70 percent with virtually no DevOps involvement.

Lucidity was founded in 2021 in Bangalore, India, by CEO Nitin Singh Bhadauria and Vatsal Rastogi, who drives the technical vision and manages engineering and development. Rastogi was previously a software developer involved in creating Microsoft Azure.

It has taken in $5.6 million in angel and VC pre-seed and seed funding. The investors were responding to a proposal that Lucidity develop auto-scaling software to manage the operations involved in growing and shrinking public cloud persistent block storage. Its autoscaler would place agents in each virtual machine running in AWS, Azure or GCP, monitor the metrics they produce, and then automatically grow or shrink storage capacity as required, with no downtime and no need for DevOps involvement.

Lucidity explains that there is a common storage management cycle for AWS, Azure and GCP. This cycle has four basic processes: plan capacity, monitor disks, set up alerts and respond to alerts. Lucidity says it has built an autonomous orchestration layer that manages this cycle and uses auto-scaling to cut both storage costs and DevOps involvement.

Its GCP and Azure autoscaler documentation claims that “Shrinking a disk without downtime especially has not been possible so far. Lucidity makes all of it possible with no impact on performance.”

The autoscalers rely on a concept of providing logical disks, made up from several smaller disks, which can be added to the logical disk pool or removed from it. A ‘disk’ here means a drive – which can be SSD or HDD-based.

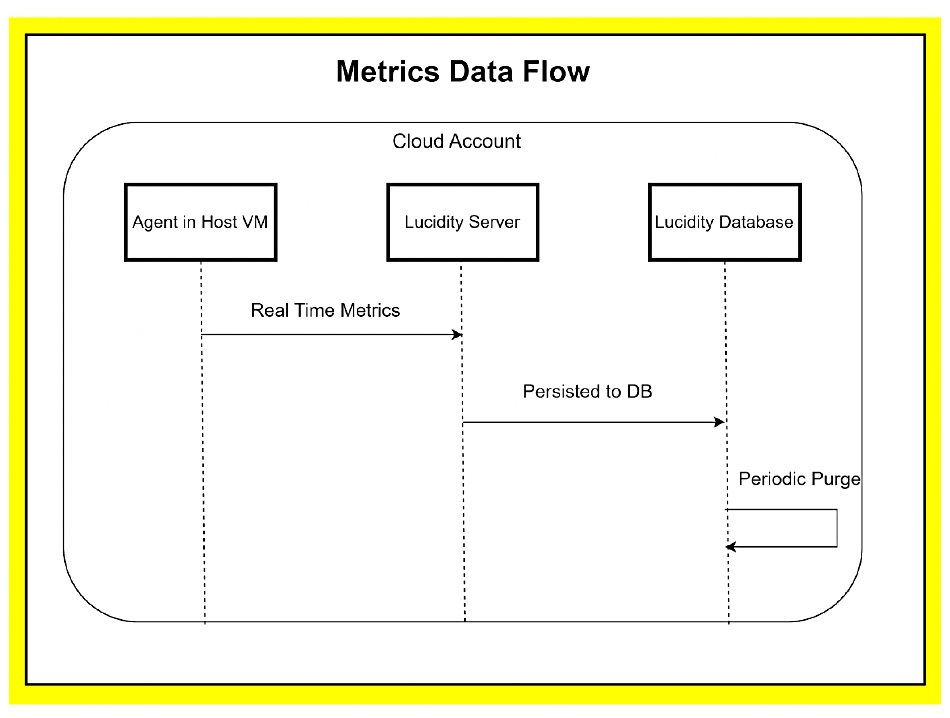

A Lucidity agent runs inside each VM and sends metrics to a Lucidity server which “analyzes the usage metrics for each VM instance to figure out the right storage configuration and keeps tuning it continuously to ensure that the workload always gets the resources it needs.”

This is an out-of-band process. The Lucidity server calculates when to scale capacity up or down. Lucidity GCP autoscaling documentation states: “The Lucidity Scaler is able to perform expand and shrink operations at a moment’s notice by using a group of disks attached to an VM instance as opposed to a single disk. The group of disks is composed together to form a single disk (or a logical disk).”
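The server-side decision can be pictured with a minimal Python sketch. It assumes a simple utilization-threshold rule; Lucidity has not published its actual logic, and the `DiskMetrics` type, the `recommend_action` function and the 80 percent and 50 percent thresholds are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DiskMetrics:
    """Hypothetical per-VM metrics an agent might report."""
    provisioned_gib: float
    used_gib: float


def recommend_action(metrics: DiskMetrics,
                     grow_above: float = 0.80,
                     shrink_below: float = 0.50) -> str:
    """Return "expand", "shrink" or "hold" from a utilization ratio.

    The thresholds are illustrative assumptions, not Lucidity's values.
    """
    if metrics.provisioned_gib <= 0:
        raise ValueError("provisioned capacity must be positive")
    utilization = metrics.used_gib / metrics.provisioned_gib
    if utilization > grow_above:
        return "expand"   # workload is close to running out of space
    if utilization < shrink_below:
        return "shrink"   # paying for capacity the workload does not use
    return "hold"


if __name__ == "__main__":
    for used in (90, 65, 30):
        print(used, recommend_action(DiskMetrics(provisioned_gib=100, used_gib=used)))
```

A production system would presumably also weigh growth trends and IO metrics rather than a single utilization snapshot.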

When a volume is shrunk, Lucidity’s server software works out which disk to remove, copies its data to the other disks to rebalance the system, and then removes the empty disk. Lucidity says there is no performance impact on a running application.
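The shrink sequence described above (pick a disk, move its data, detach it) can be sketched as a small in-memory simulation. This is an illustration under stated assumptions, not Lucidity's algorithm: the `shrink_pool` function, the pool layout, and the choice to evict the disk holding the least data are all hypothetical.

```python
from typing import Dict, Optional

Disk = Dict[str, float]  # {"capacity": GiB, "used": GiB}


def shrink_pool(pool: Dict[str, Disk]) -> Optional[str]:
    """Remove one disk from the pool, returning its name, or None if unsafe."""
    if len(pool) < 2:
        return None  # the last disk cannot be removed

    # Assumption: evict the disk with the least data, to minimise copying.
    victim = min(pool, key=lambda name: pool[name]["used"])
    others = [name for name in pool if name != victim]

    free = sum(pool[n]["capacity"] - pool[n]["used"] for n in others)
    to_move = pool[victim]["used"]
    if to_move > free:
        return None  # remaining disks cannot absorb the data

    # Copy the victim's data onto the other disks, emptiest first.
    by_free = sorted(others,
                     key=lambda n: pool[n]["capacity"] - pool[n]["used"],
                     reverse=True)
    for name in by_free:
        room = pool[name]["capacity"] - pool[name]["used"]
        moved = min(room, to_move)
        pool[name]["used"] += moved
        to_move -= moved
        if to_move <= 0:
            break

    del pool[victim]  # the now-empty disk can be detached
    return victim


if __name__ == "__main__":
    pool = {
        "disk-a": {"capacity": 100, "used": 60},
        "disk-b": {"capacity": 100, "used": 50},
        "disk-c": {"capacity": 100, "used": 10},
    }
    print(shrink_pool(pool), pool)
```

The capacity check before any data moves is the important design point: a shrink is only attempted when the remaining disks can hold everything.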

On Windows, this storage orchestration “is powered by Windows Storage Spaces behind the scenes,” with Lucidity software running on top. On Linux, it uses the BTRFS filesystem “to group disks and create a logical disk for the operating system. The operating system would be presented with one single disk and mount point which the applications would continue to use without any impact.”
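For readers unfamiliar with BTRFS, the standard `btrfs device add` and `btrfs device remove` subcommands grow and shrink a mounted multi-device filesystem, and `remove` relocates data off the device before releasing it. The Python sketch below only builds those command lines without running them. Whether Lucidity's agent invokes these exact commands is not stated in its documentation, and the device paths and mount point are hypothetical.

```python
import shlex
from typing import List


def btrfs_add_device(device: str, mount_point: str) -> List[str]:
    """Build the command that adds a block device to a mounted BTRFS filesystem."""
    return ["btrfs", "device", "add", device, mount_point]


def btrfs_remove_device(device: str, mount_point: str) -> List[str]:
    """Build the command that migrates data off a device and removes it."""
    return ["btrfs", "device", "remove", device, mount_point]


if __name__ == "__main__":
    # Hypothetical device names and mount point, printed as a dry run only.
    print(shlex.join(btrfs_add_device("/dev/sdd", "/mnt/data")))
    print(shlex.join(btrfs_remove_device("/dev/sdc", "/mnt/data")))
```

Running the resulting commands needs root privileges and a real BTRFS mount, which is why the sketch stops at printing them.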

The AWS autoscaler handles cost optimization by navigating the many and varied AWS EBS volume types. There are, for example, four SSD-backed EBS volume types and two HDD-backed ones. Lucidity states: “As a result of various performance benchmarks and uses of the various types of EBS volumes, the pricing of these can become challenging to grasp. This makes picking the right EBS type extremely crucial, and can cause 3x inflated costs than required, if not done correctly.”

There are also two types of idle volume, and AWS charges for provisioned – not used – capacity. Lucidity's ROI calculator can provide more information about this.
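The effect of billing on provisioned capacity is easy to illustrate with arithmetic. The Python sketch below uses a made-up price of $0.10 per GiB per month and made-up volume sizes. It is not Lucidity's ROI calculator, and `monthly_overspend` is a hypothetical helper.

```python
from typing import Tuple


def monthly_overspend(provisioned_gib: float,
                      used_gib: float,
                      price_per_gib_month: float) -> Tuple[float, float]:
    """Return (cost of unused capacity per month, idle fraction of the volume)."""
    if provisioned_gib <= 0 or not 0 <= used_gib <= provisioned_gib:
        raise ValueError("expected 0 <= used_gib <= provisioned_gib, provisioned_gib > 0")
    idle_gib = provisioned_gib - used_gib
    # The bill is driven by provisioned GiB, so idle space is pure overspend.
    return idle_gib * price_per_gib_month, idle_gib / provisioned_gib


if __name__ == "__main__":
    # Illustrative numbers only: 500 GiB provisioned, 200 GiB actually used.
    wasted, idle_fraction = monthly_overspend(500, 200, 0.10)
    print(f"idle share: {idle_fraction:.0%}, overspend: ${wasted:.2f}/month")
```

In this made-up case, more than half of the monthly bill pays for space the workload never touches, which is the gap an autoscaler that shrinks volumes aims to close.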

Lucidity Community Edition – providing a centrally managed Autoscaler hosted by Lucidity – is available now. A Business Edition – enabling users to “access the Autoscaler via a Private Service Connect that ensures no data is transferred over the internet” – is coming soon. An Enterprise Edition wherein the Autoscaler “is hosted within the cloud account and network of the user for exclusive access” is also coming soon.