Startup PEAK:AIO has had its top-tier GPU data delivery performance validated in testing by Hewlett Packard Enterprise.

PEAK:AIO is a UK startup providing storage software for the AI market that runs on storage servers from vendors such as Dell, HPE, Supermicro, and Gigabyte. It aims to deliver performance equal to or better than that of multi-node parallel file systems, such as IBM’s Storage Scale and DDN’s Lustre, using basic NFS, NVMe SSDs, and rewritten RAID software. HPE assessed a single PEAK:AIO AI Data Server and found it to surpass the recently published benchmarks of other AI GPUDirect storage vendors.

Mark Klarzynski, CEO and co-founder of PEAK:AIO, issued a statement: “AI is changing futures; it deserves more than a force fit square peg. PEAK:AIO’s view is AI has unique demands and requires an entirely new approach to storage.

“AI has thoroughly disrupted [the] traditional approach. After observing storage vendors attempting to adapt to the evolving AI market by merely rebranding existing solutions as AI-specific – akin to trying to fit a square peg in a round hole. I believed that AI required and deserved a reset, a fresh perspective and new thinking to match its entirely new use of technology.”

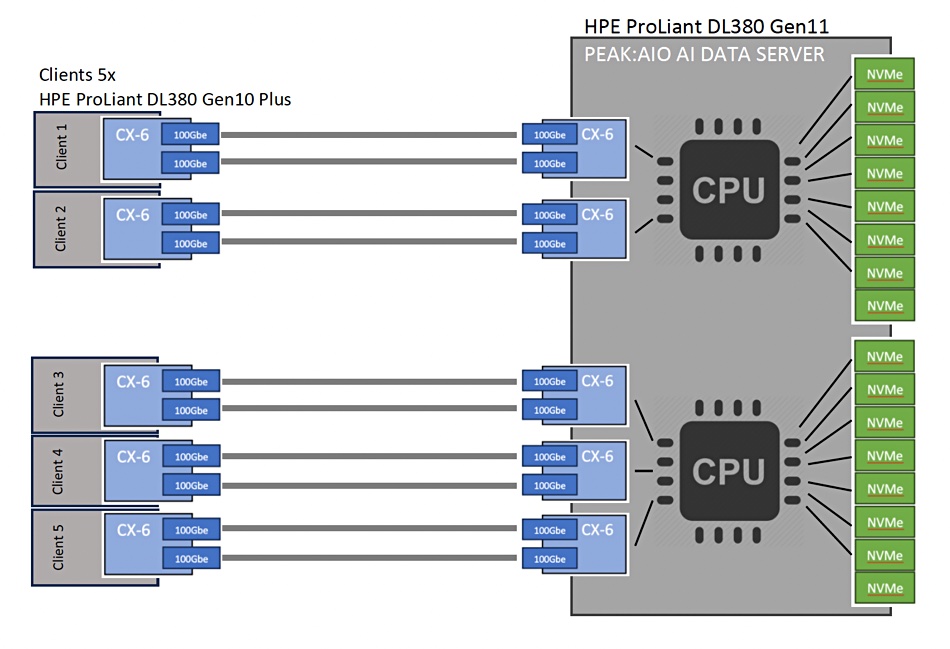

An HPE white paper evaluated PEAK:AIO’s AI Data Server software, running it on a ProLiant DL380 Gen11 server configured with 16x Samsung 3.2TB SFF PCIe 4 NVMe drives, each directly attached via four PCIe lanes. This enabled PEAK:PROTECT RAID to control the drives directly with no intervening controller software. There were 5x Nvidia ConnectX-6 dual-port 100 GbE HBAs for external connectivity.

HPE tested this configuration with Nvidia’s GPUDirect protocol, hooking up a single Nvidia DGX A100 GPU server via five 200 GbE/HDR ports, and recording a total read bandwidth of 118GB/sec. PEAK:AIO points out that the load on the ProLiant CPU “was not significant, leaving room for growth with … generation 5 NVMe drives coupled with ConnectX-7 cards and how they will perform when they are more available.”
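To put the 118GB/sec figure in context, here is a rough back-of-the-envelope sketch of the theoretical ceilings implied by the hardware described above. The link count, link speed, drive count, lane count, and measured bandwidth come from the article; the PCIe 4.0 per-lane rate (16 GT/s) and 128b/130b encoding are standard values. The function names are ours, and the sums ignore protocol overhead, so treat the output as an upper bound rather than an expected result.

```python
# Back-of-the-envelope bandwidth ceilings for the tested configuration.
# Uses decimal units (1 GB = 10^9 bytes) and ignores protocol overhead.

NIC_LINKS = 5                 # article: five 200 GbE/HDR links to the DGX A100
LINK_GBIT_PER_SEC = 200       # article
DRIVES = 16                   # article: 16 NVMe drives
LANES_PER_DRIVE = 4           # article: four PCIe lanes per drive
PCIE4_GT_PER_LANE = 16        # standard PCIe 4.0 raw rate per lane (GT/s)
PCIE4_ENCODING = 128 / 130    # standard PCIe 4.0 128b/130b line encoding
MEASURED_GB_PER_SEC = 118     # article: GPUDirect read bandwidth recorded by HPE


def network_ceiling_gb_per_sec(links: int, gbit_per_link: float) -> float:
    """Aggregate network line rate in GB/sec (8 bits per byte)."""
    return links * gbit_per_link / 8


def pcie_ceiling_gb_per_sec(drives: int, lanes: int,
                            gt_per_lane: float, encoding: float) -> float:
    """Aggregate drive-side PCIe bandwidth in GB/sec after line encoding."""
    return drives * lanes * gt_per_lane * encoding / 8


network = network_ceiling_gb_per_sec(NIC_LINKS, LINK_GBIT_PER_SEC)
pcie = pcie_ceiling_gb_per_sec(DRIVES, LANES_PER_DRIVE,
                               PCIE4_GT_PER_LANE, PCIE4_ENCODING)

# The tighter of the two ceilings bounds what a single node could deliver.
bottleneck = min(network, pcie)
utilisation = MEASURED_GB_PER_SEC / bottleneck

print(f"Network ceiling: {network:.1f} GB/sec")
print(f"PCIe ceiling:    {pcie:.1f} GB/sec")
print(f"Measured share of the tighter ceiling: {utilisation:.1%}")
```

On these assumptions the network and drive-side ceilings land within about 1GB/sec of each other, and the measured figure sits close to both, which is consistent with the interest in faster drives and network cards as the route to higher numbers.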

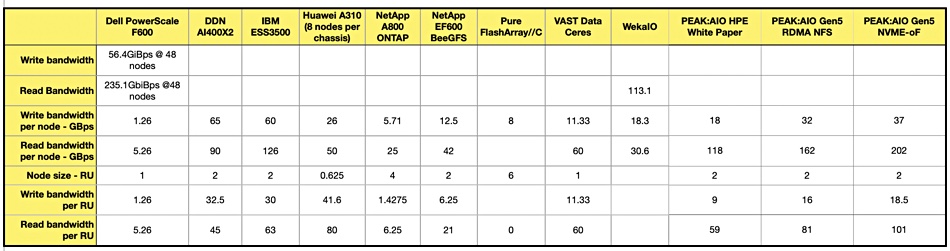

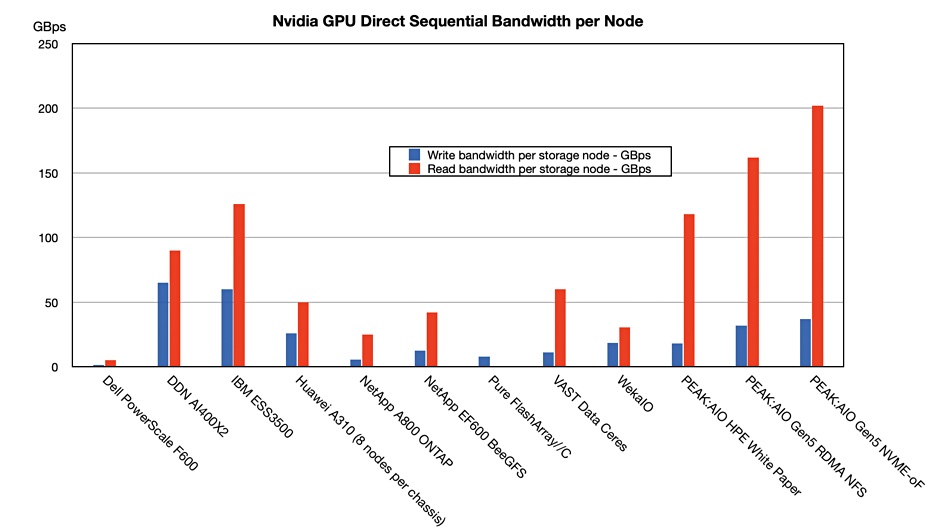

We added these numbers to a spreadsheet (see below) we maintain of suppliers’ GPUDirect performance numbers and charted the results:

With the PCIe 4 SSDs, the PEAK:AIO performance on a per-node basis (118GB/sec) was second to the 126GB/sec of IBM’s ESS3500 Storage Scale, with DDN third at 90GB/sec. The tester also looked at external RDMA NFS performance, achieving 119GB/sec.

PEAK:AIO tested the server with gen 5 SSDs and ConnectX-7 (CX7) hardware, and it went faster still. The company recorded 202GB/sec with GPUDirect and NVMe-oF, and 162GB/sec with GPUDirect and RDMA NFS. That gives the other suppliers something to aim for.

Klarzynski tells us “HPE utilized a genuine single-node setup” and not a multi-node system like the other suppliers. It’s more affordable and simpler to set up and manage, in other words. He reckons: “In terms of AI GPUDirect performance, our solution stands ahead in the field because that is its entire focus.”

Bootnote

Our spreadsheet table of GPUDirect supplier performance numbers: