Computational memory and CXL-connected external memory pools are tech development hotspots. DRAM and NAND fabricator SK hynix has combined the two in a joint development with sister company SK Telecom to speed machine learning and big data analytics.

The curse of computational memory and storage is that they don’t use x86 processors, so code from standard server applications can’t run on them. Computational storage requires customized code, and computational memory is even harder to build because it needs specialized hardware, instruction sets, and code. SK hynix has found machine learning applications used by sister company SK Telecom that, it says, can be accelerated by implementing them in computational memory system (CMS) hardware.

Park Kyoung, Head of Memory System Research at SK hynix, said: “Through the internalization of computational functions, CMS enabled performance several times faster than that of dozens of CPU cores in specific computations. Considering that this is just a prototype, we think we can improve the performance even further and are considering applying the technology to other applications such as big data.”

The memory maker claims that CMS also avoids much of the data transfer between DRAM and a host server’s x86 CPU, which saves electrical energy.

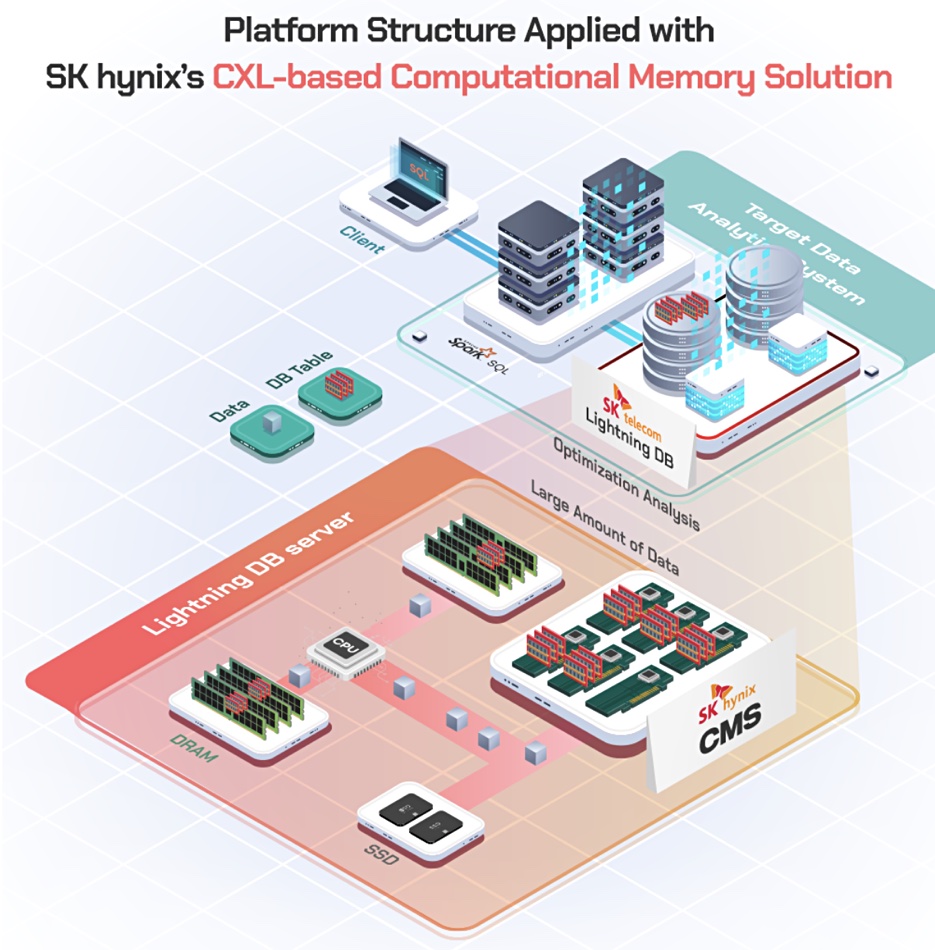

SK Telecom has developed its own Lightning DB, an in-memory data analytics platform for processing large amounts of data, and runs it in various commercial services. Lightning DB divides large datasets into smaller units, stores them, and then processes these units in parallel in an optimized way. SK hynix and SK Telecom developed the CMS and the CMS-applied Lightning DB simultaneously.
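The divide-then-process-in-parallel pattern can be illustrated with a small Python sketch. This is not Lightning DB code: the round-robin `partition` scheme, the `scan_partition` aggregate, and the `parallel_query` function are hypothetical names chosen only to show how per-partition partial results are computed in parallel and then merged.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(rows, num_partitions):
    """Split rows into num_partitions chunks, round-robin (hypothetical scheme)."""
    parts = [[] for _ in range(num_partitions)]
    for i, row in enumerate(rows):
        parts[i % num_partitions].append(row)
    return parts


def scan_partition(part, threshold):
    """Per-partition work: count and sum the values above a threshold."""
    hits = [v for v in part if v > threshold]
    return len(hits), sum(hits)


def parallel_query(rows, threshold, num_partitions=4):
    """Scan all partitions in parallel, then merge their partial aggregates."""
    parts = partition(rows, num_partitions)
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = list(pool.map(lambda p: scan_partition(p, threshold), parts))
    # Merge step: combine the partial (count, sum) pairs from every partition
    count = sum(c for c, _ in partials)
    total = sum(s for _, s in partials)
    return count, total


print(parallel_query(list(range(10)), threshold=5))  # values 6..9 -> (4, 30)
```

Because each partition produces an independent partial aggregate, the units can be scanned by separate workers; only the small merge step needs to see all of the results.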

Yang Seung-ji, VP and Head of Vision R&D at SK Telecom, said: “Meaningful computations for SK Telecom’s actual application services were selected, which helped us save a significant amount of time by jointly performing all processes from structure design of hardware-software to development and verification.

“As we verified the performance improvements of the solutions, we plan to apply them to screening tasks that increase accuracy in large-scale AI learning data in the future and use them to strengthen the competitiveness of SK Telecom’s AI service.”

The technology

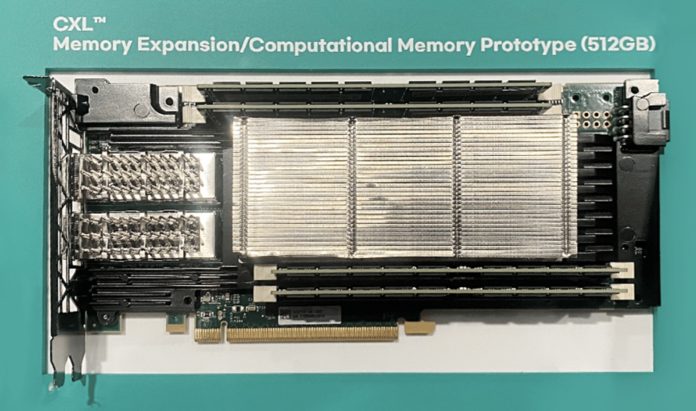

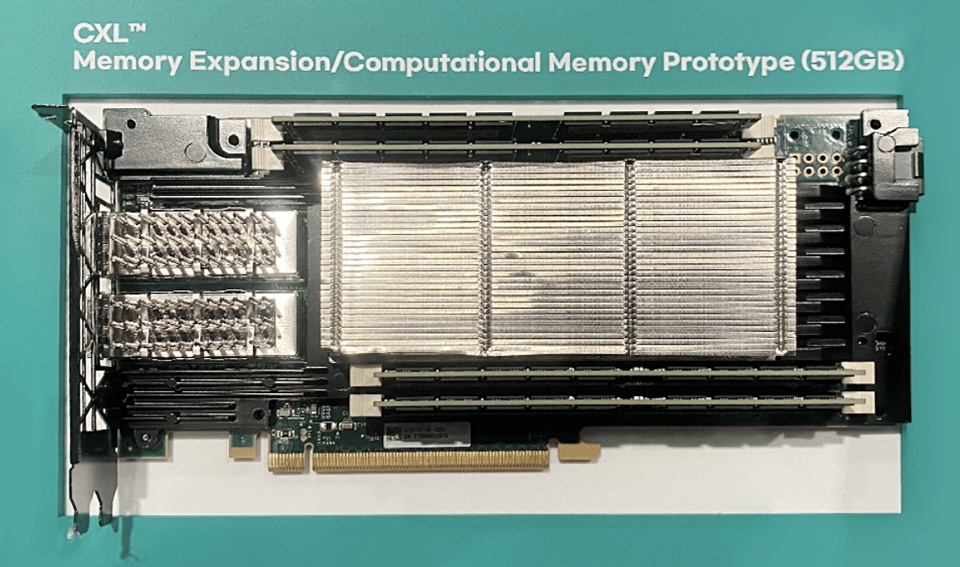

SK hynix has already developed CXL-connected memory. CXL, the Compute Express Link, is a developing standard for interconnecting server CPUs and DRAM with accelerators and pools of DRAM across a PCIe 5 bus using CXL protocol messages. This external DRAM can be combined with a server’s directly attached DRAM to form a single, larger memory pool.
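To make the "single, larger memory pool" idea concrete, here is a minimal Python model, assuming a simple fastest-tier-first placement policy. The `Tier` and `MemoryPool` classes and the capacities used are hypothetical; in a real server the operating system and hardware, not application code like this, decide where pages land.

```python
from dataclasses import dataclass


@dataclass
class Tier:
    """One source of memory capacity, e.g. local DRAM or CXL-attached DRAM."""
    name: str
    capacity_gib: int
    used_gib: int = 0

    @property
    def free_gib(self):
        return self.capacity_gib - self.used_gib


@dataclass
class MemoryPool:
    """Hypothetical pool that presents several tiers as one capacity."""
    tiers: list  # ordered fastest first (assumed policy)

    @property
    def capacity_gib(self):
        return sum(t.capacity_gib for t in self.tiers)

    def allocate(self, size_gib):
        """Fill the fastest tier first, spilling to slower tiers; return placements."""
        if size_gib > sum(t.free_gib for t in self.tiers):
            raise MemoryError("pool exhausted")
        placements = []
        remaining = size_gib
        for tier in self.tiers:
            take = min(remaining, tier.free_gib)
            if take > 0:
                tier.used_gib += take
                placements.append((tier.name, take))
                remaining -= take
            if remaining == 0:
                break
        return placements


pool = MemoryPool([Tier("local-dram", 512), Tier("cxl-dram", 256)])
print(pool.capacity_gib)   # 768
print(pool.allocate(600))  # [('local-dram', 512), ('cxl-dram', 88)]
```

The application sees one 768 GiB capacity; the spill from local DRAM into the CXL tier is the point at which the extra, externally attached memory pays off.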

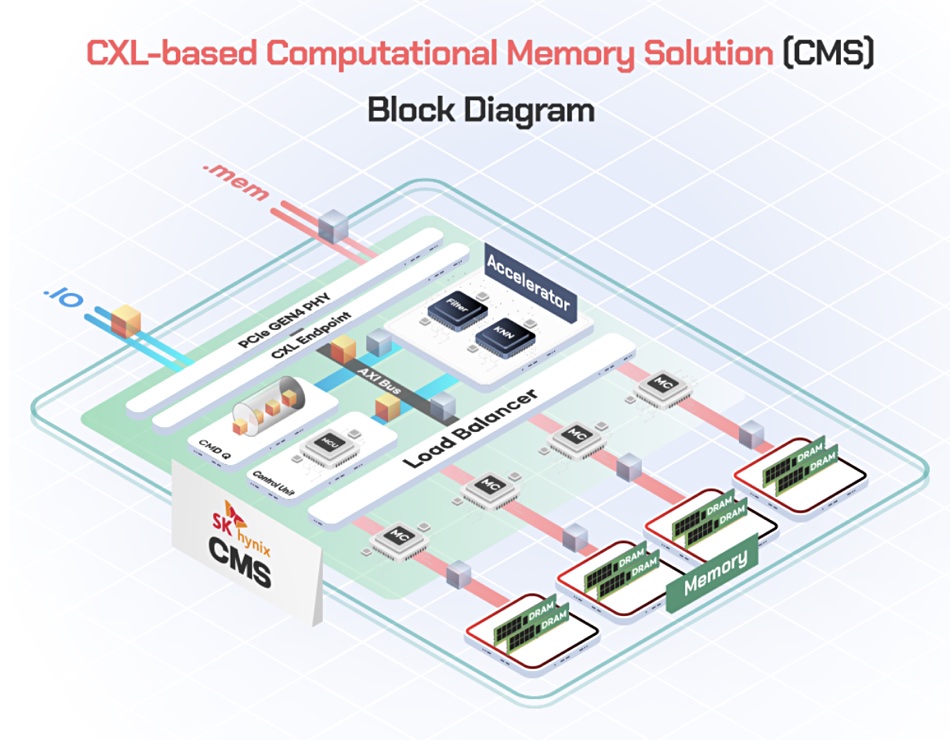

For computational CXL memory, think of an embedded processor card with a PCIe 4 endpoint followed by a CXL endpoint. Data and commands come in across these, and a control unit processes the commands in a queue. An AXI bus interconnects the control unit and command queue with a filter, a KNN (k-nearest neighbors) accelerator, and a Load Balancer, which is the on-board memory interface. There are four memory banks, each with its own control unit, as a diagram illustrates.

This is not computational memory in the Samsung PIM sense, with a programmable computing unit (PCU) embedded inside each memory bank. It is more like computational storage with a processor located in a storage drive, adjacent to the storage media.
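The division of labor described above, where commands are queued, executed by filter and KNN units sitting next to the data, and only the results are returned to the host, can be modeled in a few lines of Python. This is a toy software sketch, not SK hynix’s design: the `NearMemoryDevice` class, the tuple command format, the round-robin striping of vectors across the four banks, and the per-bank top-k merge are all assumptions made for illustration.

```python
import heapq
from collections import deque

NUM_BANKS = 4  # the article describes four memory banks


class NearMemoryDevice:
    """Toy model: vectors are striped across banks and commands run beside them."""

    def __init__(self, vectors):
        # Stripe vectors across banks round-robin, keeping each global index
        self.banks = [[] for _ in range(NUM_BANKS)]
        for idx, vec in enumerate(vectors):
            self.banks[idx % NUM_BANKS].append((idx, vec))
        self.queue = deque()

    def submit(self, command):
        """Host side: enqueue a command tuple such as ("knn", query, k)."""
        self.queue.append(command)

    def _filter(self, predicate):
        # Return only the indices of matching vectors, not the vectors themselves
        return sorted(idx for bank in self.banks for idx, vec in bank if predicate(vec))

    def _knn(self, query, k):
        def dist2(v):
            return sum((a - b) ** 2 for a, b in zip(v, query))

        # Each bank computes a local top-k; the merged list is reduced to a global top-k
        candidates = []
        for bank in self.banks:
            candidates.extend(heapq.nsmallest(k, ((dist2(v), idx) for idx, v in bank)))
        return [idx for _, idx in heapq.nsmallest(k, candidates)]

    def run(self):
        """Control unit: drain the command queue in order and return the results."""
        results = []
        while self.queue:
            op, *args = self.queue.popleft()
            if op == "filter":
                results.append(self._filter(*args))
            elif op == "knn":
                results.append(self._knn(*args))
            else:
                raise ValueError(f"unknown command {op!r}")
        return results


dev = NearMemoryDevice([(0, 0), (1, 1), (5, 5), (2, 2), (9, 9), (3, 3)])
dev.submit(("filter", lambda v: v[0] > 2))
dev.submit(("knn", (2.1, 2.1), 2))
print(dev.run())  # [[2, 4, 5], [3, 5]]
```

The host only ever receives short index lists, which mirrors the claim that keeping computation beside the memory cuts traffic between the DRAM and the host CPU.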

SK hynix and SK Telecom have co-developed a Lightning DB server that includes the SK hynix CMS in its system.

SK hynix’s CXL memory system prototype and the Lightning DB big data analysis platform, optimized with SK Telecom, are being unveiled at the Open Compute Project (OCP) Global Summit, October 18-20, in San Jose, and will also be demonstrated at the SK Tech Summit in Korea in early November.