Eighteen storage news bites today for your delectation: four are light bites and 14 are snacks. Cloudiness, containerisation, data backup and predictions feature in the light bites section of this week’s collection of storage news stories.

The predictions are for 2019 and come from Western Digital. They show how far Western Digital is casting its expansionary gaze beyond basic disk drives, NAND chips and SSDs, towards systems.

The stories are organised alphabetically and we start with a backup supplier.

NAKIVO automates backup and improves recovery

NAKIVO has released NAKIVO Backup & Replication v8.1. It provides more automation and universal recovery of application objects.

Automation comes from NAKIVO introducing policy-based data protection for VMware, Hyper-V and AWS infrastructure. Customers can create backup, replication, and backup copy policies to fully automate data protection processes.

A policy can be based on VM name, size, location, power state, tag, or a combination of multiple parameters. Once set up, policies can regularly scan the entire infrastructure for VMs that match the criteria and protect the VMs automatically.
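The matching step can be sketched as follows. This is a minimal illustration of how a policy might select VMs by combining criteria, not NAKIVO’s implementation: the `VM` and `Policy` classes, their fields and the `scan` function are hypothetical, and we assume a VM must meet every criterion a policy sets.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase
from typing import Optional


@dataclass
class VM:
    # Hypothetical inventory record; not a NAKIVO data structure.
    name: str
    size_gb: int
    location: str       # e.g. a host, cluster or region name
    powered_on: bool
    tags: set[str] = field(default_factory=set)


@dataclass
class Policy:
    # Every criterion is optional; a VM must satisfy all criteria that are set.
    name_pattern: Optional[str] = None      # shell-style wildcard, e.g. "sql-*"
    max_size_gb: Optional[int] = None
    location: Optional[str] = None
    powered_on: Optional[bool] = None
    required_tags: set[str] = field(default_factory=set)

    def matches(self, vm: VM) -> bool:
        if self.name_pattern is not None and not fnmatchcase(vm.name, self.name_pattern):
            return False
        if self.max_size_gb is not None and vm.size_gb > self.max_size_gb:
            return False
        if self.location is not None and vm.location != self.location:
            return False
        if self.powered_on is not None and vm.powered_on != self.powered_on:
            return False
        # All required tags must be present on the VM (subset test).
        return self.required_tags <= vm.tags


def scan(inventory: list[VM], policy: Policy) -> list[str]:
    """Return the names of VMs the policy would protect on this scan."""
    return [vm.name for vm in inventory if policy.matches(vm)]
```

Running `scan` on every scheduled pass would pick up newly created VMs that match, without anyone adding them to a job by hand, which is the point of the policy approach.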

NAKIVO’s Universal Object Recovery enables customers to recover any application objects back to source, a custom location, or even a physical server. Customers can recover individual items from any file system or application by mounting VM disks from backups to a target recovery location, with no need to restore the entire VM first.

NAKIVO Backup & Replication runs natively on QNAP, Synology, ASUSTOR, Western Digital, and NETGEAR storage systems, which NAKIVO claims delivers up to a 2X performance advantage. It also supports high-end deduplication appliances, including Dell EMC Data Domain and NEC HYDRAstor.

Portworx powers up multi-cloud containerisation

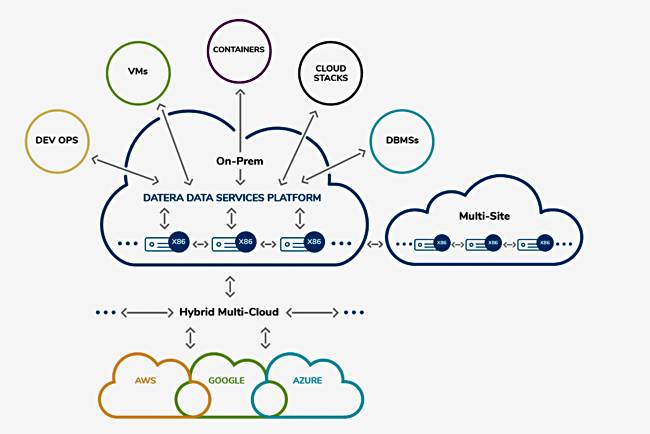

Stateful container management supplier Portworx has announced the availability of PX-Enterprise 2.0, featuring PX-Motion and PX-Central.

According to Portworx’s 2018 Annual Container Adoption Survey, one-third of respondents report running containers in more than one cloud, with 23 per cent running in two clouds and 13 per cent running in three clouds.

However, 39 per cent of respondents cite data management as one of their top three barriers to container adoption. Also, 34 per cent cite multi-cloud and cross-data centre management as a barrier.

Portworx argues that enterprises must be able to move their application data across clouds safely and securely without disrupting operations. It says it integrates with more container platforms than any other solution, including Kubernetes services running on AWS, Microsoft Azure, Google Cloud, IBM Cloud and Pivotal, as well as Red Hat OpenShift, Mesosphere DC/OS, Heptio and Rancher.

Murli Thirumale, co-founder and CEO of Portworx, said: “Kubernetes does not inherently provide data management. Without solving data mobility, hybrid- and multi-cloud Kubernetes deployments will never be mainstream for the vast majority of enterprise applications.” PX-Motion and PX-Central have been designed to fix that.

PX-Motion allows Kubernetes users to migrate application data and Kubernetes pod configuration between clusters across hybrid- and multi-cloud environments. This enables entirely automated workflows like backup and recovery of Kubernetes applications, blue-green deployments for stateful applications, and easier reproducibility for debugging errors in production.

PX-Central is a single pane of glass for management, monitoring and metadata services across multiple Portworx clusters in hybrid- and multi-cloud environments built on Kubernetes.

With PX-Central, enterprises can manage the state of their hybrid- and multi-cloud Kubernetes applications with embedded monitoring and metrics directly in the Portworx user interface. Additionally, DevOps users can control and visualize the state of an ongoing migration at a per-application level using custom policies.

Go to Google Cloud Platform, quoth Quobyte

Quobyte’s Data Center File System is now available via Google Cloud Platform marketplace, enabling Google Cloud users to configure a hyperscale, high-performance distributed storage platform in a few clicks. It has native support for all Linux, Windows, and NFS applications.

Quobyte says its Data Center File System provides a massively scalable and fault-tolerant storage infrastructure. It decouples and abstracts commodity hardware to deliver low-latency, parallel throughput for cloud services and apps, with the elasticity to scale to thousands of servers and grow to hundreds of petabytes with little or no added administrative burden. With Quobyte, databases, scale-out applications, containers and even big data analytics can run on a single infrastructure.

Users can run entire workloads in the cloud, or burst peak workloads; start with a single storage node and add additional capacity and nodes on the fly; and dynamically downsize the deployment when resources are no longer needed. Quobyte storage nodes run on CentOS 7.

Two of the company’s founders are Google infrastructure alumni, so they should understand hyperscale needs.

Western Digital’s 2019 predictions

Western Digital has made ten predictions for 2019. They are (with our comments in brackets):

- Open composability. Western Digital’s composable systems scheme will start to go mainstream and make headway against proprietary schemes (meaning, we think, ones from Dell EMC with its MX7000, DriveScale, HPE with Synergy, and Liqid).

- Orchestration of large-scale containerization. We will see further disaggregation of all pieces – memory, compute, networking and storage – and the adoption of broad-based container capabilities. With this further disaggregation, organizations will move toward the orchestration of large-scale containerization.

- Proliferation of RISC-V-based silicon, driven by increased demand from organizations looking to tailor their embedded IoT devices to specific workloads while reducing the costs and security risks associated with silicon that is not open source. (Meaning not ARM, MIPS or x86.)

- Move towards fabric infrastructure, including fabric-attached memory, with the widespread adoption of fabric-attached storage (NVMe-oF). This lets compute move closer to where the data is stored, rather than the data being several steps away from compute.

- Beginning of the adoption of energy-assisted storage (meaning MAMR disk drives giving customers capacities greater than 16TB).

- Devices such as autonomous cars and medical diagnostic equipment will come alive at the edge. In 2019, compute power will get closer to the data produced by these devices, allowing it to be processed in real time and letting the devices awaken and realise their full potential. Cars will be able to tap into machine learning to make the instantaneous decisions needed to manoeuvre on roads and avoid accidents.

- Sprouting of smaller clouds at the edge. With the proliferation of connected “things,” we have an explosion of data repositories. As a result, in 2019 we will see smaller clouds at the edge, or zones of micro clouds, sprout across devices to process and consolidate the data produced not only by the “thing” but by all of the applications running on it.

- Expansion of a new platform ecosystem as the demands associated with 5G increase. Because 5G won’t provide enough bandwidth for all of the IoT devices, machine learning will need to happen at the edge to ensure optimization of the data. The new platform will be a complete edge platform supported by RISC-V processors, ensuring compute moves as close to the data as possible.

- Adoption of machine learning into the business revenue stream. Until now, machine learning has been a concept for most organizations, but in 2019 we will see real production installations. As a result, organizations will adopt machine learning at scale, and it will have a direct impact on the business revenue stream.

- More data scientists will be needed. As noted in the 451 Research report, Addressing the Changing Role of Unstructured Data With Object Storage, “the interest in and availability of analytics is rapidly becoming universal across all vertical markets and companies of nearly every size, creating the need for a new generation of data specialists with new capabilities for understanding the nature of data and translating business needs into actionable insight.” As a result of this demand to turn data into action, organizations will prioritize hiring data scientists, and in the next three years, 4 out of 10 new software engineering hires will be data scientists.

The first five points are all things Western Digital is betting on for its growth, so we can be sure it is putting its money where these predictions point.

Storage roundup

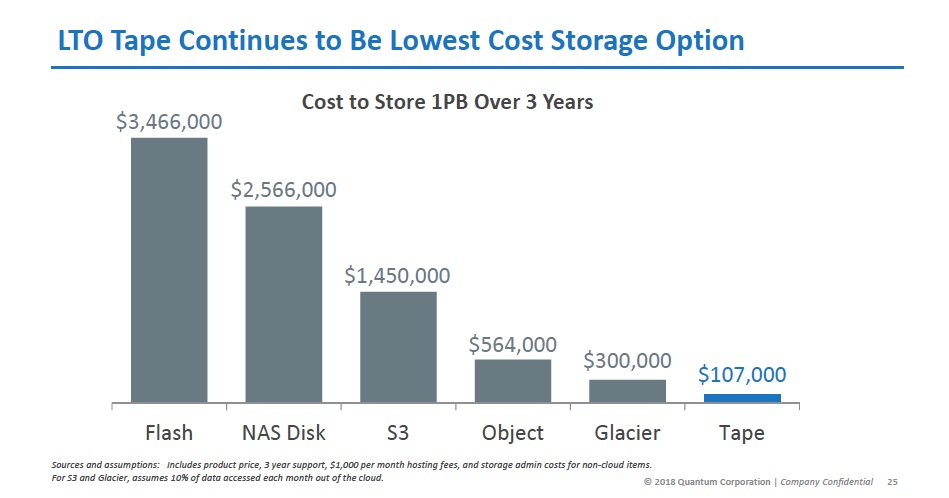

Backblaze, a public cloud storage supplier, has a cloud storage vs LTO tape cost calculator. You can download the spreadsheet, test the assumptions and try it yourself.

Barracuda Networks is providing Leeds United Football Club with its Message Archiver to make storing and accessing emails simpler, quicker and more secure. It lets Leeds United combine on-site hardware with cloud-based replication, so email data is easy to recover in the event of an attack or data loss.

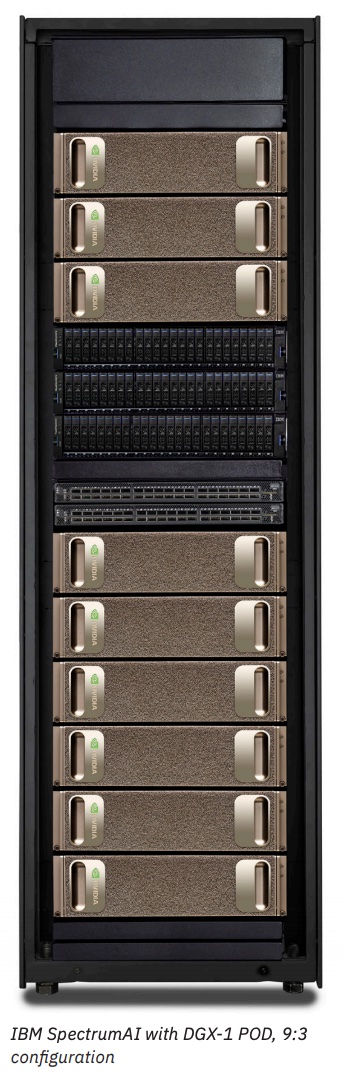

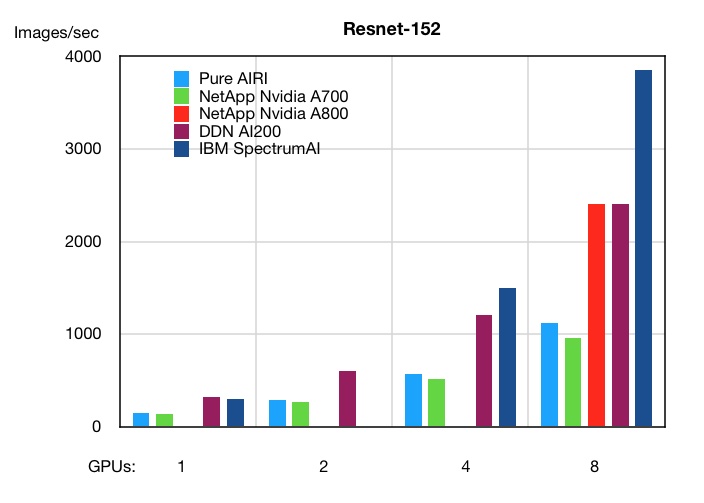

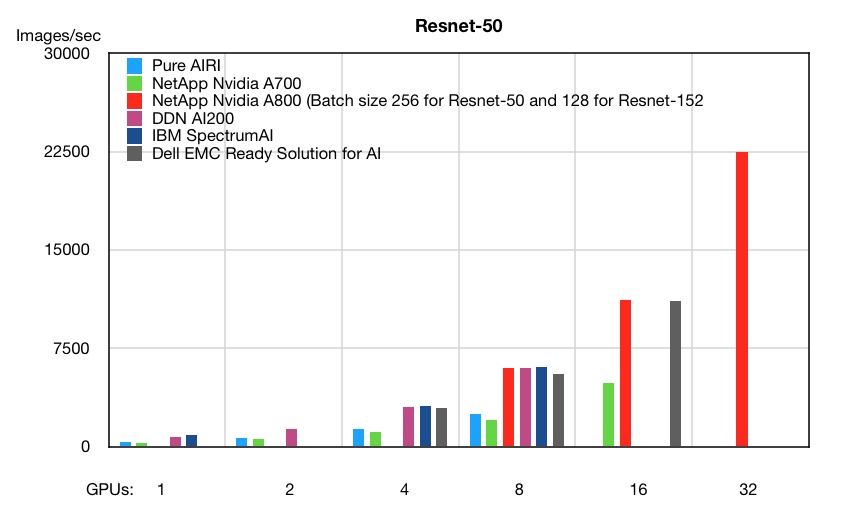

DDN’s AI200 AI-focused storage box, twinned with Nvidia’s DGX-1 GPU server and Microvolution software, has delivered a 1,600-fold better yield than traditional microscopy workflows. We’re told researchers use sophisticated deconvolution algorithms to increase signal-to-noise and remove blurring in 3D images from Lattice LightSheet microscopes and other high-data-rate instruments. The DDN-Nvidia pairing enables real-time deconvolution during image capture in areas such as neuroscience, developmental biology, cancer research and biomedical engineering.

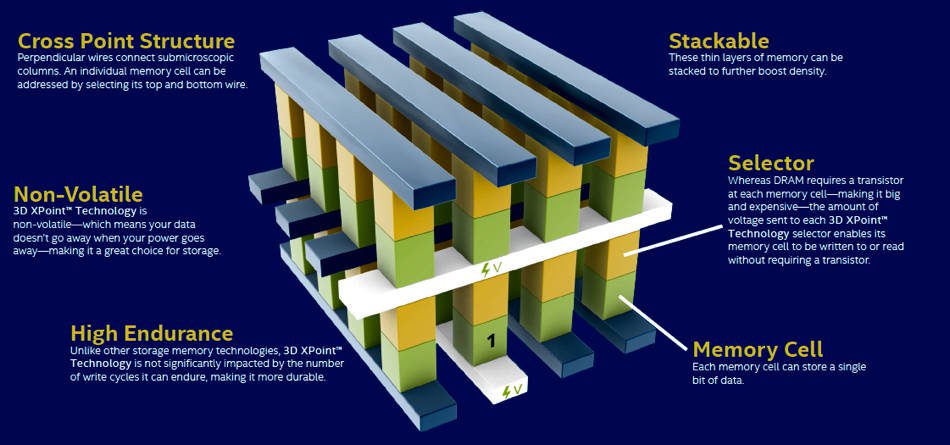

G2M Research has released a Fall 2018 NVM Express Market Sizing Report. It says the market for NVMe SSDs (U.2, M.2 and PCIe AOCs) will reach $9bn by 2022, growing from just under $2bn in 2017. More than 60 per cent of AFAs will use NVMe storage media by 2022, and more than 30 per cent of all AFAs will use NVMe over Fabrics (NVMe-oF) in front-side (connection to host) and/or back-side (connection to expansion shelves) fabrics in the same timeframe. The market for NVMe-oF adapters will exceed 1.75 million units by 2022, and the bulk of the revenue will be for offloaded NVMe-oF adapters (either FPGA- or SoC-based). More information here.
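As a back-of-the-envelope check (our arithmetic, not G2M’s), growing from about $2bn in 2017 to $9bn in 2022 implies a compound annual growth rate of roughly 35 per cent. The sketch assumes a start value of exactly $2bn; because G2M says just under $2bn, the true implied rate is slightly higher.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# G2M's figures: just under $2bn in 2017 rising to $9bn in 2022 (five years).
# Using $2bn as the start value slightly understates the implied rate.
rate = cagr(2.0, 9.0, 2022 - 2017)
print(f"Implied CAGR: at least {rate:.1%}")
```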

In-memory computing supplier GridGain has announced that the GridGain In-Memory Computing Platform is now available in the Oracle Cloud Marketplace and is fully compatible with Oracle Cloud Infrastructure.

Hitachi Vantara has upgraded its Pentaho analytics software to v8.2, integrating it with the Hitachi Content Platform (HCP). Users can onboard data into HCP, which functions as a data lake, and use Pentaho to prepare, cleanse and normalize the data. Pentaho can then be used to determine which prepared data is appropriate for each cloud target (AWS, Azure or Google).

The new Pentaho version has AMQP and OpenJDK support, plus improved Google Cloud security and Python functionality.

HubStor, which provides data protection as a service from within Azure, has announced a new continuous backup capability for its cloud storage platform, enabling users to capture file changes as they happen on network-based file systems and within virtual machines. File changes can be captured either as a backup with a very short recovery point objective (RPO) or as a WORM archive for compliance.

It has also added version control: as it captures incremental changes, it builds a version history for each file and maintains point-in-time awareness in the cloud, allowing restoration to a known healthy point in time. Version control settings reduce the number of versions held as data ages.
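HubStor’s actual thinning rules aren’t described here, so the following is only a sketch of how age-based version thinning could work. The tiers (keep everything under a day old, one version per calendar day up to 30 days, one per ISO week after that) and the `thin_versions` function are our assumptions, not HubStor’s settings.

```python
from datetime import datetime, timedelta


def thin_versions(version_times: list[datetime], now: datetime) -> list[datetime]:
    """Return the version timestamps to keep under a hypothetical tiered policy:
    - younger than 1 day: keep every version
    - 1 to 30 days old: keep the newest version per calendar day
    - older than 30 days: keep the newest version per ISO week
    """
    kept: list[datetime] = []
    seen_buckets: set[tuple] = set()
    # Walk newest-first so the first version seen in each bucket is the newest one.
    for t in sorted(version_times, reverse=True):
        age = now - t
        if age < timedelta(days=1):
            kept.append(t)
            continue
        if age <= timedelta(days=30):
            bucket = ("day", t.date())
        else:
            iso_year, iso_week, _ = t.isocalendar()
            bucket = ("week", iso_year, iso_week)
        if bucket not in seen_buckets:
            seen_buckets.add(bucket)
            kept.append(t)
    return sorted(kept)
```

Walking the history newest-first means the survivor in each bucket is the most recent version, which is usually the one you would want to restore.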

Nutanix and GCP-focused data protector HYCU has announced a global reseller agreement with Lenovo, enabling Nutanix customers to buy Lenovo offerings and HYCU Data Protection for Nutanix directly from Lenovo and its resellers in a single transaction.

Immuta, which ships enterprise data management products for AI, has new features to reduce the cost and risk of running data science programs in the cloud. It has eliminated the need for enterprises to create and prepare dedicated data copies in Amazon S3 for each compliance-type policy that needs to be enforced. Data owners can enforce complex data policy controls where storage and compute are separated, allowing transient workloads in the cloud.

The NVM Express organisation has ratified TCP/IP as an approved transport layer for non-volatile memory express (NVMe), and Lightbits Labs says its hyperscale storage platform is the industry’s first to take full advantage of this transport standard. Lightbits participated in the November 12-15 NVMe over Fabrics (NVMe-oF) Plugfest at the University of New Hampshire’s InterOperability Laboratory (UNH-IOL) and showcased interoperability with multiple NIC vendors.

NVM Express Inc. announced the pending availability of the NVMe Management Interface (NVMe-MI) specification. The NVMe-MI 1.1 release standardizes NVMe enclosure management, provides the ability to access NVMe-MI functionality in-band and delivers new management features for multiple NVMe subsystem solid-state drive (SSD) deployments.

The in-band NVMe-MI feature allows operating systems and applications to tunnel NVMe-MI commands through an NVMe driver. The primary use for in-band NVMe-MI is to let operating systems and applications achieve parity with the management capabilities available out-of-band through a Baseboard Management Controller (BMC).

Rambus says Micron has selected its CryptoManager Platform for Micron’s Authenta secure memory product line. This will enable Micron to securely provision cryptographic information at any point in the extended manufacturing supply chain and throughout the IoT device lifecycle, enhancing platform protection while enabling new silicon-to-cloud services.

Snowflake Computing, which supplies a data warehouse in the AWS and Azure clouds, has announced the general availability of Snowflake on Microsoft Azure in Europe.

Unitrends has published a white paper comparing its backup and recovery options to those from Veeam. Get the white paper here.