HPE has announced Optane caching for its 3PAR arrays, which makes them chew through Oracle database workloads faster.

According to HPE, “92% of all 3PAR install base reads and writes are met below 1 millisecond”. Some 72 per cent are dealt with in 0.25ms (250μs).

HPE is using NVMe-accessed Intel Optane drives, which form a persistent memory pool and are addressed with Load/Store memory semantics instead of the slower storage-stack Reads and Writes used in storage drive accesses.

HPE calls this Memory-Driven Flash (MDF). The Optane capacity can serve as a cache for transient hot data and metadata, or as a storage tier for hot data.

Intel’s Optane drives use 3D XPoint media, which has a 7-10μs latency, faster than flash SSDs’ 75-120μs. With a 3PAR 3D XPoint cache, average latency is reduced by 50 per cent and 95 per cent of IOs are serviced within 300μs.

Memory eccentric

HPE says this is an example of a memory-centric compute fabric design, with multiple CPUs accessing a central memory pool, thereby bringing storage closer to compute and increasing the workload capability of a set of servers.

You may think that’s a bit of a stretch from adding Optane caches to a shared external array with dual controllers. To be truly memory-centric, HPE should surely add Optane SCM to HPE servers, and then aggregate the Optane SCM between the servers.

In the meantime the company uses Optane as storage class memory (SCM) in 3PAR array controllers to deliver more data accesses faster in response to requests coming in across iSCSI or Fibre Channel networks.

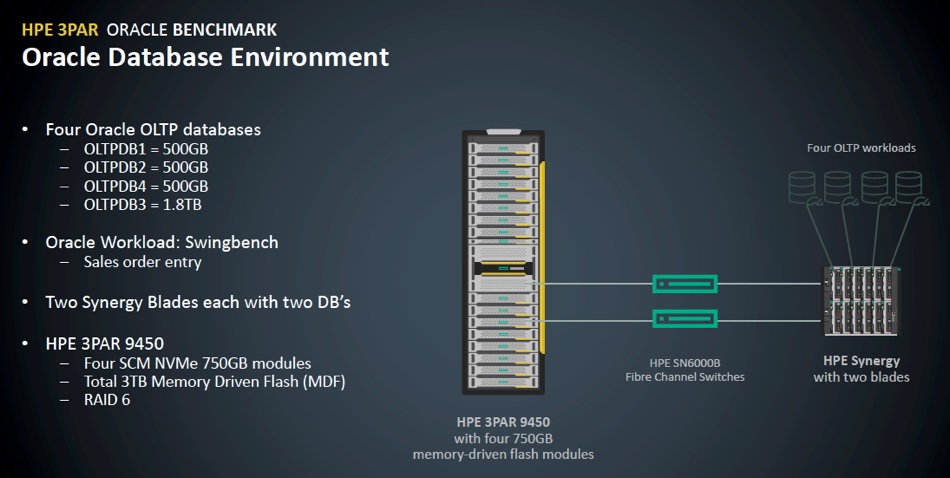

HPE has benchmarked the set-up in an Oracle environment, using a 3PAR 9450 array equipped with 3TB of Optane capacity in the form of 4 x 750GB XPoint drives. The workload was Oracle Swingbench (sales order entry) and the 3PAR box was linked across 16Gbit/s Fibre Channel (FC) to a 2-blade HPE Synergy composable system servicing four OLTP workloads.

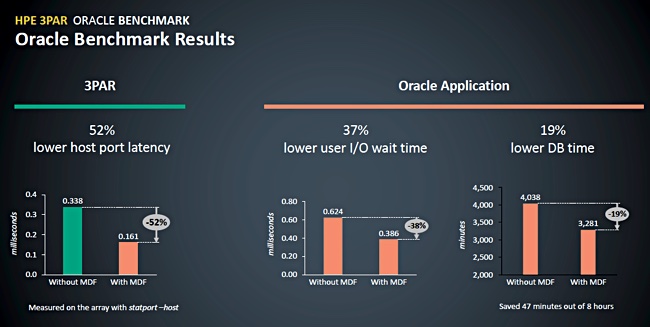

HPE recorded a 52 per cent lower port latency on the array, plus a 37 per cent lower user I/O wait time and 19 per cent lower database time. There was a 27 per cent increase in SQL select executions per second, meaning more database queries were run.

HPE says the lower user I/O wait time means a lower read transaction latency, and that reduced database time means more workloads are completed. The company suggests you could lower Oracle licensing costs or increase your Oracle workloads.

This is good but…

A Broadcom document states that you could reduce Oracle 12c data warehouse workload query time by half with 32Gbit/s FC compared to 16Gbit/s FC. This suggests that upgrading the 3PAR 9450 to 32Gbit/s FC, and so cutting network transit time, would be more effective than using SCM.

A Lenovo document says you can get a 2.1x increase in IOPS by moving from Fibre Channel to NVMe over Fibre Channel; again getting a bigger performance boost than by using Optane SCM caching in the array.
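To put these claims on one scale, a "per cent less time" figure can be converted into an equivalent speed-up factor: saving a fraction f of the time is a 1/(1 - f) speed-up. The sketch below applies that to the figures quoted in this article. The function name and labels are ours, and since the numbers come from different workloads and metrics (database time, query time, IOPS), treat the result as a rough comparison only, not a like-for-like benchmark.

```python
def speedup_from_time_reduction(fraction_saved: float) -> float:
    """Convert 'X per cent less time' into an equivalent speed-up factor."""
    if not 0 <= fraction_saved < 1:
        raise ValueError("fraction_saved must be in [0, 1)")
    return 1.0 / (1.0 - fraction_saved)


# Figures as quoted in the article; the workloads and metrics differ,
# so the ordering is indicative only.
claims = {
    "Optane cache: 19% lower database time": speedup_from_time_reduction(0.19),
    "Optane cache: 27% more SQL selects/sec": 1.27,
    "32Gbit/s FC: query time halved": speedup_from_time_reduction(0.50),
    "NVMe over FC: 2.1x IOPS": 2.1,
}

# Print the claims from smallest to largest speed-up factor.
for label, factor in sorted(claims.items(), key=lambda kv: kv[1]):
    print(f"{label:42s} ~{factor:.2f}x")
```

On this view the Optane cache figures sit at roughly a 1.2x to 1.3x gain, while the two network upgrades are quoted at about 2x.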

In August 2016 an anonymous HPE blogger said NVMe-over-Fabrics with RDMA and FC was on the 3PAR roadmap. It isn’t here yet, and surely it must be coming soon.