FlashBlade gets file and object replication

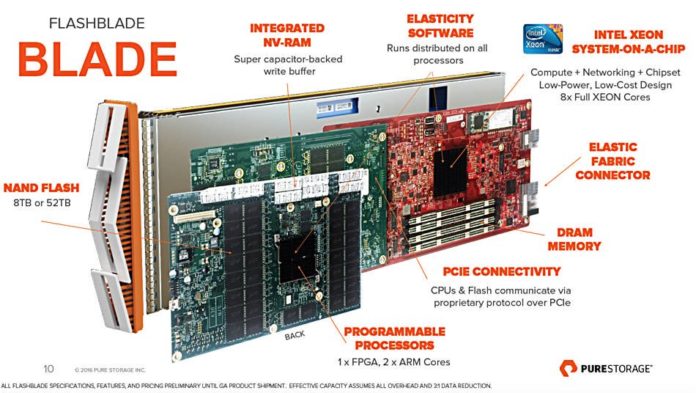

Pure Storage has announced V3.0 of its Purity//FB FlashBlade operating system. FlashBlade is Pure’s unified, scale-out all-flash file and object storage system. New features include:

- File Replication – replication of file systems for disaster recovery. Data at the target replication site is read-only, which enables data validation and DR testing.

- Object Replication – replication of object data between two FlashBlades improves the experience for geographically distributed users by lowering access latency and increasing read throughput. Replicating object data in native format from FlashBlade to Amazon S3 means customers can use the cloud for a secondary copy, or use public cloud services to access data generated on-premises.

- S3 Fast Copy – S3 copy operations within the same FlashBlade bucket now use “reference-based copies”: data is not physically copied, so the process is faster. Fast Copy does not apply to S3 uploads, or to copies between different buckets.

- Zero-touch provisioning (ZTP) – After FlashBlade hardware is installed, ZTP completes the setup remotely: the management port automatically obtains an IP address via DHCP, and a REST token (“PURESETUP”) gives access to the array through a set of released APIs to perform basic configuration and set up the static management network. When setup completes, the “PURESETUP” token becomes invalid and DHCP is terminated.
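To illustrate what a “reference-based copy” means for S3 Fast Copy, here is a toy in-memory model in Python. It is a hypothetical sketch, not Pure’s implementation, and all names in it (`ToyObjectStore`, `Blob`, `put`, `copy`, `bytes_written`) are invented for illustration. A copy within the same bucket just points the new key at the existing data and bumps a reference count, while a copy to another bucket writes the bytes again, mirroring the restriction described above.

```python
from dataclasses import dataclass


@dataclass
class Blob:
    """Physically stored object data plus a count of keys referencing it."""
    data: bytes
    refs: int = 0


class ToyObjectStore:
    """Hypothetical toy model of reference-based copying, not Pure's design."""

    def __init__(self):
        self.buckets = {}        # bucket name -> {key: Blob}
        self.bytes_written = 0   # physical bytes written to storage

    def _bind(self, bucket, key, blob):
        # Point a key at a blob, releasing any blob the key referenced before.
        objects = self.buckets.setdefault(bucket, {})
        blob.refs += 1
        old = objects.get(key)
        if old is not None:
            old.refs -= 1
        objects[key] = blob

    def put(self, bucket, key, data):
        # An upload always writes the data physically.
        self.bytes_written += len(data)
        self._bind(bucket, key, Blob(data))

    def copy(self, src_bucket, src_key, dst_bucket, dst_key):
        src = self.buckets[src_bucket][src_key]
        if src_bucket == dst_bucket:
            # Same bucket: reference-based copy, no data is moved.
            self._bind(dst_bucket, dst_key, src)
        else:
            # Different bucket: fall back to a full physical copy.
            self.put(dst_bucket, dst_key, src.data)

    def get(self, bucket, key):
        return self.buckets[bucket][key].data


store = ToyObjectStore()
store.put("media", "a.mp4", b"x" * 1000)
store.copy("media", "a.mp4", "media", "b.mp4")     # same bucket: 0 new bytes
store.copy("media", "a.mp4", "archive", "a.mp4")   # other bucket: 1000 new bytes
print(store.bytes_written)  # 2000
```

The reference count is what makes a shared blob safe: the data can only be reclaimed once no key in the bucket points at it any more.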

V3.0 also adds File System Rollback, a data protection feature that enables fast recovery of file systems from snapshots, and NFS v4.1 Kerberos authentication. Enhancements to audit logs and SNMP support improve security, alerting, and monitoring capabilities.

FlashBlade now has a peak backup speed of 90TB/hour and a peak restore speed of 270TB/hour.
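The quoted peak rates are easier to compare with network and drive bandwidth figures when expressed per second. A minimal Python sketch of the conversion, assuming decimal units (1 TB = 1,000 GB), since the announcement does not say whether TB or TiB is meant:

```python
SECONDS_PER_HOUR = 3600
GB_PER_TB = 1000  # assumption: decimal (SI) units, not TiB/GiB


def tb_per_hour_to_gb_per_s(tb_per_hour):
    """Convert a rate in TB/hour to GB/second."""
    return tb_per_hour * GB_PER_TB / SECONDS_PER_HOUR


for label, rate in [("backup", 90), ("restore", 270)]:
    print(f"peak {label}: {rate} TB/hour = {tb_per_hour_to_gb_per_s(rate):.0f} GB/s")
# peak backup: 90 TB/hour = 25 GB/s
# peak restore: 270 TB/hour = 75 GB/s
```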

Public cloud disk drive and SSD ships

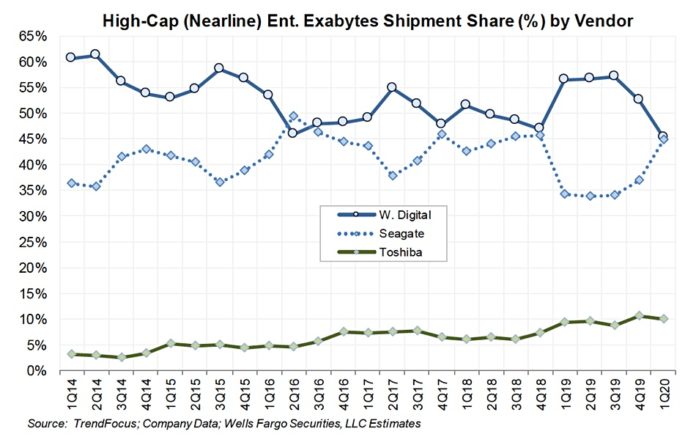

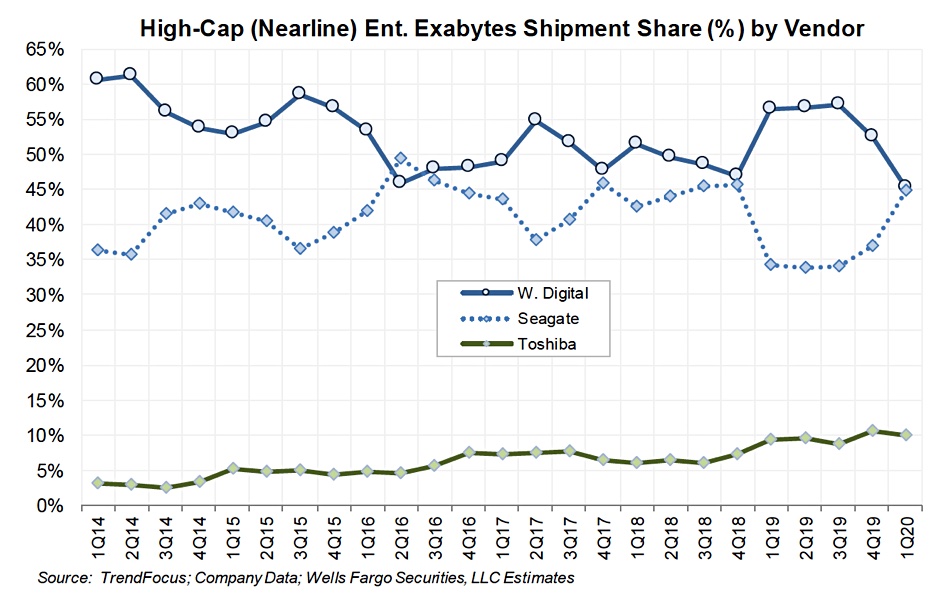

Wells Fargo analyst Aaron Rakers told subscribers that cloud-driven nearline HDD units now account for nearly 70 per cent of total HDD industry capacity shipped, and more than 60 per cent of total HDD industry revenue.

Enterprise SSDs are also estimated to account for 20-25 per cent of total NAND flash industry bits shipped, with cloud accounting for 50-60 per cent or more of total bit consumption.

Shorts

DigiTimes has reported (paywalled) that Western Digital is raising enterprise disk drive prices by up to 10 per cent because of pandemic-related increases in production and supply chain costs. A WD spokesperson told Blocks & Files the company does not comment on its pricing.

NetApp is partnering with Iguazio so that NetApp’s ONTAP AI on-premises storage and its Cloud Volumes public cloud storage can take part in Iguazio’s machine learning data pipeline software. Iguazio is compatible with Kubeflow 1.0 machine learning software.

Alluxio, which supplies open source cloud data orchestration software, has announced, in collaboration with Intel, an in-memory acceleration layer built on 2nd Gen Intel Xeon Scalable processors and Intel Optane persistent memory (PMem). Benchmark results show decision support queries completing 2.1x faster with Alluxio and PMem than with disaggregated S3 object storage alone. An I/O-intensive benchmark shows a 3.4x speedup over disaggregated S3 object storage and a 1.3x speedup over a co-located compute and storage architecture.
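Taken together, the two I/O-intensive figures also imply how the co-located baseline compares with disaggregated S3. Assuming both speedups are ratios of completion times $T$ measured on the same benchmark (an assumption; the announcement does not give the raw times), the arithmetic is:

$$
\frac{T_{\text{S3}}}{T_{\text{co-located}}}
= \frac{T_{\text{S3}} / T_{\text{Alluxio+PMem}}}{T_{\text{co-located}} / T_{\text{Alluxio+PMem}}}
= \frac{3.4}{1.3} \approx 2.6
$$

On that reading, co-located compute and storage would already run roughly 2.6x faster than disaggregated S3 on this benchmark, with Alluxio and PMem adding a further 1.3x on top.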

Broadcom’s Emulex Fibre Channel host bus adapters (HBAs) support ESXi v7.0 and provide NVMe over Fibre Channel (NVMe/FC) connectivity to and from ESXi v7.0 hosts. NetApp, Broadcom and VMware have validated an NVMe/FC server and storage SAN configuration.

China’s CXMT (ChangXin Memory Technologies) has signed a DRAM patent license agreement with Rambus, strengthening its potential as a DRAM chip supplier.

FileShadow has announced an integration partnership with Fujitsu that lets consumers scan documents from Fujitsu scanners directly into their FileShadow Cloud Storage Vault. FileShadow collects each file, preserves it in its secure cloud vault and curates it further with machine learning (ML)-generated tags for images and optical character recognition (OCR) of written text.

GigaSpaces, the provider of InsightEdge, an in-memory real-time analytics and data processing platform, has closed a $12m round of funding. Fortissimo Capital led the round, joined by existing investors Claridge Israel and BRM Group. Total funding is now $53m.

MemSQL has announced that it has been selected as a Red Hat Marketplace certified vendor.

Supermicro has introduced BigTwin SuperServers and Ultra systems validated to work with Red Hat’s hyperconverged infrastructure software.