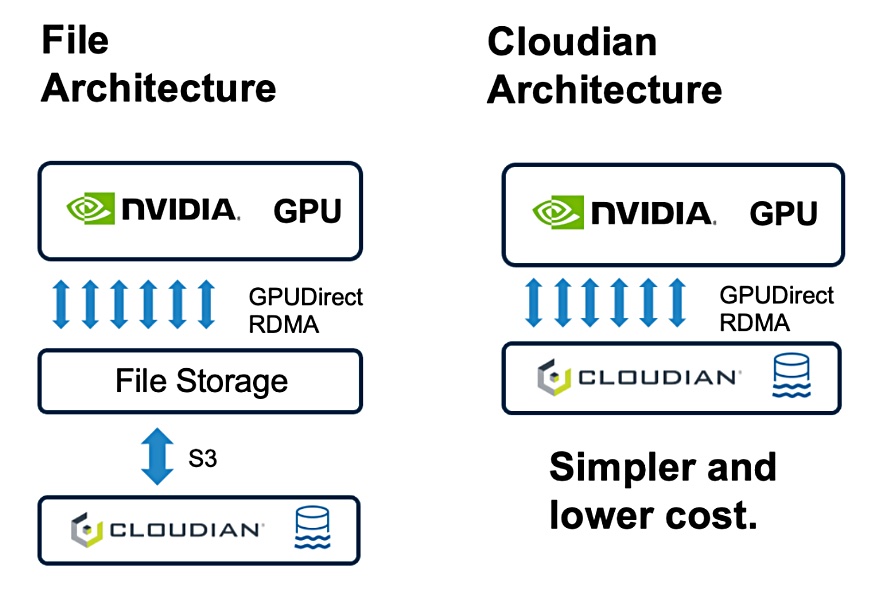

Cloudian has announced integration with Nvidia Magnum IO GPUDirect Storage technology, using RDMA parallel transfers to put object storage on an equal footing with file storage in serving data to Nvidia GPUs.

Cloudian says this “simplifies the management of AI training and inference datasets – at petabyte and exabyte scales – while reducing costs by eliminating the need for complex data migrations and legacy file storage layers.”

Cloudian CEO Michael Tso said of the move: “For too long, AI users have been saddled with the unnecessary complexity and performance bottlenecks of legacy storage solutions. With GPUDirect Storage integration, we are enabling AI workflows to directly leverage a simply scalable storage architecture so organizations can unleash the full potential of their data.”

Rob Davis, Nvidia VP of storage technology, said: “Fast, consistent, and scalable performance in object storage systems is crucial for AI workflows. It enables real-time processing and decision-making, which are essential for applications like fraud detection and personalized recommendations.”

Until now, GPUDirect has been a file-focused Nvidia protocol that eliminates needless file data movement within a server. Normally a storage server CPU copies data from a storage drive into its memory and then authorizes its transmission over a network link into a GPU’s DRAM. The GPUDirect protocol enables a direct connection between the storage drive and GPU memory, cutting out the copy into the storage server’s memory, lowering file data access latency, and keeping GPUs busy rather than waiting on file IO.

However, GPUs still wait for object data IO, rendering the use of object data for GPU AI processing impractical. Cloudian and MinIO have broken this bottleneck, enabling stored object data to be used directly in AI processing rather than indirectly via migration into file data, which can then travel via GPUDirect to the GPUs.

The HyperStore GPUDirect object transfer relies on Nvidia ConnectX and BlueField DPU networking technologies, enabling direct communication between Nvidia GPUs and multiple Cloudian storage nodes.

Cloudian says its GPUDirect-supporting HyperStore delivers over 200 GBps from a single system with performance sustained over a 30-minute period without the use of data caching. It “slashes CPU overhead by 45 percent during data transfers.”
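To put those figures in perspective, the short calculation below works out how much data a system would move at the quoted rate over the quoted period. It is a back-of-envelope sketch, not a Cloudian benchmark: it assumes "GBps" means decimal gigabytes per second and treats "over 200 GBps" as exactly 200, so the result is a lower bound.

```python
# Back-of-envelope estimate of data moved during the quoted test window.
# Assumptions (not from Cloudian): GBps = decimal gigabytes per second,
# and throughput is held at exactly the 200 GBps floor for the full run.
THROUGHPUT_GB_PER_S = 200
DURATION_MINUTES = 30

duration_s = DURATION_MINUTES * 60            # 1,800 seconds
total_gb = THROUGHPUT_GB_PER_S * duration_s   # 360,000 GB
total_tb = total_gb / 1000                    # 360 TB
total_pb = total_tb / 1000                    # 0.36 PB

print(f"{total_gb:,} GB = {total_tb:g} TB = {total_pb:g} PB")
```

At that rate the run moves at least 360 TB, or roughly a third of a petabyte, in half an hour.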

The integration requires no Linux kernel modifications, which Cloudian says eliminates “the security exposure of vendor-specific kernel modifications.”

CMO Jon Toor wrote in a blog post: “Cloudian has spent months collaborating closely with Nvidia to create GPUDirect for Object Storage. With GPUDirect for Object, data can now be consolidated on one high-performance object storage layer. The significance is simplification: a single source of truth that connects directly to their GPU infrastructure. This reduces cost and accelerates workflows by eliminating data migrations.”

Cloudian HyperStore’s effectively limitless scalability enables AI data lakes to grow to exabyte levels, and its centralized management ensures “simple, unified control across multi-data center and multi-tenant environments.”

The company says it accelerates AI data searches with integrated metadata support, allowing “easy tagging, classification, and indexing of large datasets.” It says file-based systems depend on “rigid directory structures and separate databases for metadata management.” With Cloudian and other object stores, metadata is natively handled within the object storage platform, “simplifying workflows and speeding up AI training and inference processes.”
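The idea of metadata living alongside the object can be illustrated with a toy in-memory store. This is a hypothetical sketch for illustration only: `ToyObjectStore`, `put`, and `find` are invented names, not the HyperStore or S3 API, and a real object store would index metadata rather than scan every object.

```python
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    """An object plus the key-value metadata stored with it."""
    key: str
    data: bytes
    metadata: dict = field(default_factory=dict)


class ToyObjectStore:
    """Hypothetical in-memory stand-in for an object store (not a real API)."""

    def __init__(self):
        self._objects = {}

    def put(self, key, data, **metadata):
        # Tags travel with the object; no separate metadata database.
        self._objects[key] = StoredObject(key, data, dict(metadata))

    def find(self, **wanted):
        # Return keys whose metadata matches every requested tag.
        return sorted(
            key
            for key, obj in self._objects.items()
            if all(obj.metadata.get(k) == v for k, v in wanted.items())
        )


store = ToyObjectStore()
store.put("img/0001.jpg", b"...", label="cat", split="train")
store.put("img/0002.jpg", b"...", label="dog", split="train")
store.put("img/0003.jpg", b"...", label="cat", split="validation")

# Select a training subset by tag rather than by directory layout.
training_cats = store.find(label="cat", split="train")
print(training_cats)
```

The point of the sketch is that dataset selection is a query over tags, so the same objects can belong to several logical groupings without being copied or moved between directories.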

Michael McNerney, SVP of Marketing and Network Security at Supermicro, said: “Cloudian’s integration of Nvidia GPUDirect Storage with the HyperStore line of object storage appliances based on Supermicro systems – including the Hyper 2U and 1U servers, the high-density SuperStorage 90-bay storage servers, and the Simply Double 2U 24-bay storage servers – represents a significant innovation in the use of object storage for AI workloads. This will enable our mutual customers to deploy more powerful and cost-effective AI infrastructure at scale.”

David Small, Group Technology Officer of Softsource vBridge, said: “Cloudian’s GPUDirect for Object Storage will simplify the entire AI data lifecycle, which could be the key to democratizing AI across various business sectors, allowing companies of all sizes to harness the power of their data. We’re particularly excited about how this could accelerate AI projects for our mid-market clients who have previously found enterprise AI solutions out of reach.”

Nvidia is working with other object storage suppliers. Toor tells us: “GPUDirect is an Nvidia technology that we integrate with. We did foundational work on the code to support object, so we believe we are on the forefront. But like any industry standard, it will be driven by ecosystem growth. And that growth is a good thing. It will cement the position of object storage as an essential enabler for large-scale AI workflows.”

MinIO announced its AIStor with GPUDirect-like S3 over RDMA earlier this month. Scality introduced faster object data transfer to GPUs with its Ring XP announcement in October.

Cloudian HyperStore with Nvidia Magnum IO GPUDirect Storage technology is available now. To learn more, explore Cloudian’s views on GPUDirect in its latest blog post.