VAST Data is hooking up with AI stack orchestrator Run:ai in a full-stack deal, providing storage and data infrastructure services for Run:ai’s AI toolchain and GPU framework management.

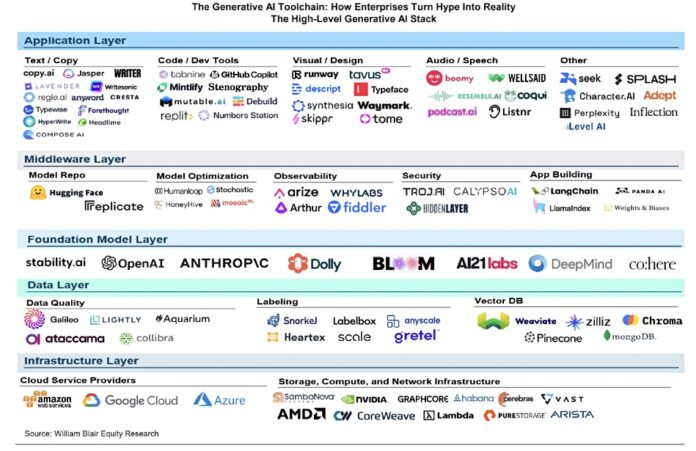

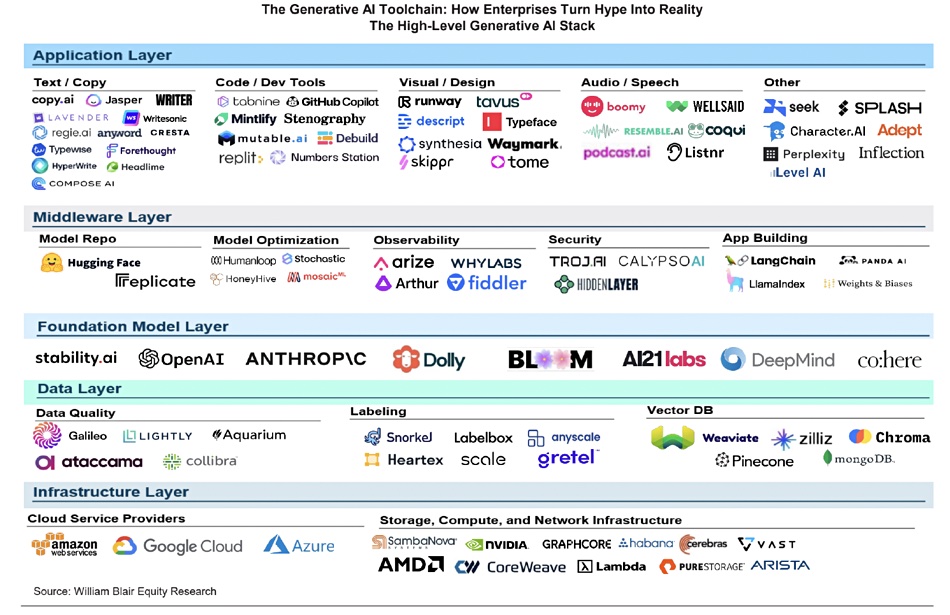

Processing AI models for training and inference involves much, much more than sending a large data set into a job that the GPUs can process. William Blair analyst Jason Ader has diagrammed some of this complexity, showing the AI job stack as a series of layers running from a base infrastructure layer, including storage, up through data, foundation model, middleware, and application layers.

There are myriad elements at each layer, and Run:ai orchestrates them. VAST CEO and co-founder Renen Hallak said in a statement: “We’ve recognized that customers need a more holistic approach to AI operations. Our partnership with Run:ai transcends traditional, disparate AI solutions, integrating all of the components necessary for an efficient AI pipeline. Today’s announcement offers data-intensive organizations across the globe the blueprint to deliver more efficient, effective, and innovative AI operations at scale.”

Run:ai was founded in Tel Aviv, Israel, in 2018 by CEO Omri Geller and CTO Ronen Dar, and has raised $118 million so far. Its containerized software provides AI optimization and orchestration services, letting customers train and deploy AI models with scalable, optimized access to AI compute resources. For example, it provides so-called fractional GPU services, in which a GPU is virtualized so that separate AI jobs can share a single physical GPU, in the same way that server virtualization lets separate virtual machines (VMs) share the same physical CPU.

Geller said: “A key challenge in the market is providing equitable access to compute resources for diverse data science teams. Our collaboration with VAST emphasizes unlocking the maximum performance potential within complex AI infrastructures and greatly extends visibility and data management across the entire AI pipeline.”

VAST and Run:ai say the joint offer will include:

- Full-Stack Visibility for Resource and Data Management, encompassing compute, networking, storage, and workload management across AI operations.

- Cloud Service Provider-Ready Infrastructure, with a CSP blueprint to deploy and manage AI cloud environments efficiently across a single shared infrastructure, using a Zero Trust approach to compute and data isolation.

- Optimized End-to-End AI Pipelines, from multi-protocol ingest through data processing to model training and inferencing. These use the Nvidia RAPIDS Accelerator for Apache Spark, along with other AI frameworks and libraries available in the Nvidia AI Enterprise software platform for developing and deploying production-grade AI applications, while the VAST DataBase handles data pre-processing.

- Simple AI Deployment and Infrastructure Management, with fair-share scheduling that lets users share GPU clusters without memory overflows or processing clashes, paired with simplified multi-GPU distributed training. The VAST DataSpace provides data access across geographies and multi-cloud environments, with encryption, access-based controls, and data security.

The partnership should encourage VAST customers to use Run:ai, and Run:ai users to consider storing their data on VAST. The deal should also appeal to CSPs looking to offer AI training and inferencing services.

VAST plus Run:ai blueprints, solution briefs, and demos will be available at Nvidia GTC 2024, booth #1424.