…

Marvell is sampling its Octeon 10 DPU (Data Processing Unit) with between 8 and 36 Arm Neoverse N2 cores based on Arm’s Armv9 architecture. The chip is built using TSMC’s 5nm manufacturing process and is said to be 3x more powerful than the prior Octeon TX2 product yet draw half as much electricity. The Octeon 10 includes cryptography-acceleration units, packet parsers, DDR5 support, PCIe 5.0 interconnects, up to 400Gbit/sec Ethernet, functions for IPSec, and vector packet processing (VPP). It is destined for edge-network processing work such as packet filtering and machine learning on a 5G wireless network and should be available by year-end. We now have Fungible, Intel, Marvell and Pensando producing specialised DPU/SmartNIC chips.

…

Samsung is producing DDR5 DRAM using a 14nm process and extreme ultraviolet (EUV) lithography. It can operate at up to 7.2Gbit/sec, more than 2x the DDR4 speed of up to 3.2Gbit/sec. Samsung claims it is the industry’s highest-density DRAM chip. DDR5 DIMMs can have up to 2TB capacity per DIMM vs the 128GB DIMMs available currently. Samsung expects growing DDR5 adoption in 2022, which could mean servers with far higher memory capacity.
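For a rough sense of scale, the quoted figures can be turned into ratios. This is a minimal sketch that only uses the numbers in the paragraph above; the 16-slot server is a hypothetical configuration chosen for illustration, and 2TB is treated as 2,048GB, which is also an assumption.

```python
# Figures quoted in the text above.
DDR5_GBPS = 7.2          # DDR5 top speed, Gbit/sec
DDR4_GBPS = 3.2          # DDR4 top speed, Gbit/sec
DDR5_DIMM_GB = 2 * 1024  # 2TB per DIMM, taken as 2,048GB (assumption)
CURRENT_DIMM_GB = 128    # largest DIMM said to be available currently

speed_ratio = DDR5_GBPS / DDR4_GBPS
capacity_ratio = DDR5_DIMM_GB / CURRENT_DIMM_GB


def server_capacity_tb(dimm_gb: int, slots: int) -> float:
    """Total memory in TB for a server with `slots` identical DIMMs."""
    return dimm_gb * slots / 1024


HYPOTHETICAL_SLOTS = 16  # illustrative only; not a figure from the article

print(f"Speed ratio: {speed_ratio:.2f}x")
print(f"Per-DIMM capacity ratio: {capacity_ratio:.0f}x")
print(f"{HYPOTHETICAL_SLOTS} slots today: "
      f"{server_capacity_tb(CURRENT_DIMM_GB, HYPOTHETICAL_SLOTS):.0f}TB")
print(f"{HYPOTHETICAL_SLOTS} slots with 2TB DIMMs: "
      f"{server_capacity_tb(DDR5_DIMM_GB, HYPOTHETICAL_SLOTS):.0f}TB")
```

On these numbers the per-DIMM capacity jump is far larger than the speed jump, which is why the capacity point matters more for server memory sizing.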

…

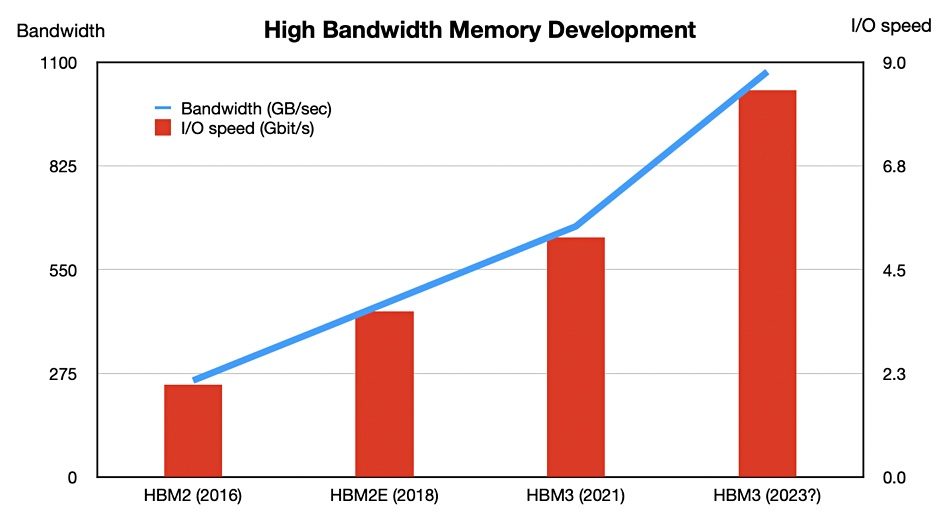

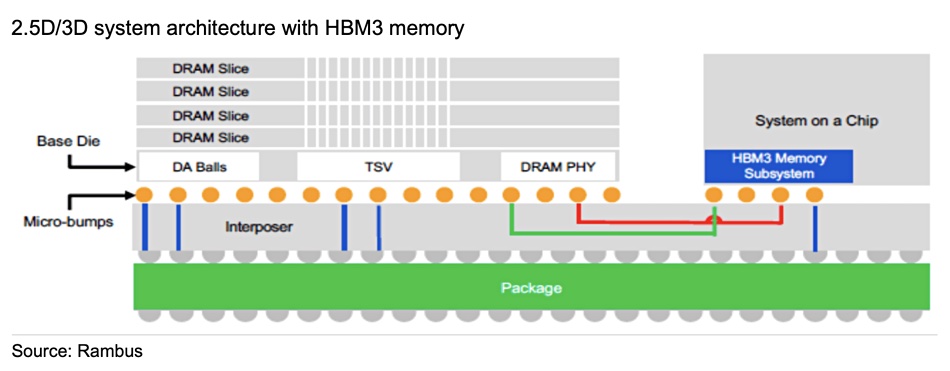

A third generation of high-bandwidth memory (HBM3) is emerging and was discussed in Semiconductor Engineering. AI training models need more memory, and HBM3 can offer a high-capacity, high-bandwidth and power-efficient tier of memory below DRAM in the memory hierarchy. A couple of diagrams illustrate HBM generation developments and interposer use.

HBM relies on an interposer layer connecting it to a closely-located System-on-Chip (SoC) containing a processor. It’s unclear whether, or how, such a pool of memory could work in a CXL environment, since the interposer functions as a CPU-memory link instead of CXL.

…

Italian oil company ENI is upgrading its HPC4 supercomputer under an HPE GreenLake utility pricing scheme, with existing nodes being replaced by ProLiant DL385 Gen10 Plus nodes, each with 2x AMD Rome 24-core processors. Of these, 1,375 nodes will have 2x Nvidia V100 GPUs, 122 nodes will have 2x Nvidia A100 GPUs and 22 nodes will carry 2x AMD Instinct MI100 GPUs. There will be 22 log-in-only CPU nodes. The whole processing bundle will use data from a ten-petabyte ClusterStor E1000 parallel file system, which is almost twice the capacity of the current HPC4 system.
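The node counts above can be tallied to get an overall GPU figure. This is a back-of-the-envelope sketch, assuming the three GPU node groups do not overlap and that the log-in nodes carry no GPUs; the totals are derived here and are not stated in the announcement.

```python
# Node counts as quoted in the text; each GPU node carries two GPUs.
gpu_nodes = {
    "Nvidia V100": 1375,
    "Nvidia A100": 122,
    "AMD Instinct": 22,
}
GPUS_PER_NODE = 2
LOGIN_NODES = 22  # log-in-only CPU nodes, assumed to have no GPUs

# Per-type GPU counts, then overall totals.
gpus_by_type = {name: count * GPUS_PER_NODE for name, count in gpu_nodes.items()}
total_gpu_nodes = sum(gpu_nodes.values())
total_gpus = sum(gpus_by_type.values())
total_nodes = total_gpu_nodes + LOGIN_NODES

for name, count in gpus_by_type.items():
    print(f"{name}: {count} GPUs")
print(f"GPU nodes: {total_gpu_nodes}, GPUs: {total_gpus}, all nodes: {total_nodes}")
```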

…

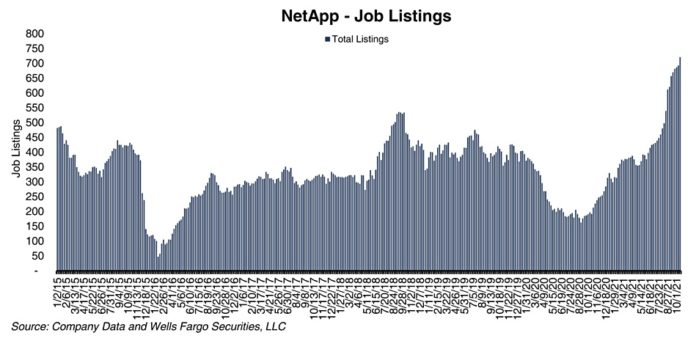

Wells Fargo analyst Aaron Rakers tracks some storage company job listings. He’s noticed NetApp’s open job positions have risen by 28 since last week to a total of 721. This compares to 192 a year ago. A chart shows a recent spurt with NetApp recruiting heavily and steadily since September 2020.

We think this is due in whole or part to the rise of its cloud business unit.

…

JEDEC is developing a Crossover Flash Memory (XFM) Embedded and Removable Memory Device (XFMD) standard whereby soldered NVMe/PCIe NAND chips can be replaced. These chips are soldered into devices such as gaming consoles, virtual and augmented reality glasses/headsets, drones and surveillance video recorders, and in the automotive industry. An XFMD unit will measure 13mm x 18mm x 1.4mm high, making it smaller than a standard SD card and larger than a microSD card.
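The size comparison can be checked with simple footprint arithmetic. In this sketch the XFMD dimensions come from the text, while the SD (32mm x 24mm x 2.1mm) and microSD (15mm x 11mm x 1.0mm) dimensions are nominal card sizes supplied here as assumptions, not figures from the JEDEC announcement.

```python
from typing import NamedTuple


class Card(NamedTuple):
    """Nominal outline of a memory card or module, in millimetres."""
    width_mm: float
    length_mm: float
    height_mm: float

    @property
    def footprint_mm2(self) -> float:
        """Board area taken up by the card."""
        return self.width_mm * self.length_mm


xfmd = Card(13, 18, 1.4)      # from the text above
sd = Card(24, 32, 2.1)        # nominal SD card size (assumption)
micro_sd = Card(11, 15, 1.0)  # nominal microSD card size (assumption)

for name, card in (("microSD", micro_sd), ("XFMD", xfmd), ("SD", sd)):
    print(f"{name}: {card.footprint_mm2:.0f} mm^2, {card.height_mm} mm high")
```

On these nominal figures the XFMD footprint sits between the two card formats, consistent with the description above.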

…

Metrolink.ai, a data management omniplatform, officially launches today. It has completed a $22 million seed round within a few months of its inception. Backed by Grove Ventures and Eclipse Ventures, Metrolink.ai plans to expand its R&D team to make data extractable and accessible through a dedicated generic platform, allowing customer companies to:

- Free data scientists from cleaning up messy data and cataloguing;

- Design their own data pipelines by mixing ready-made ‘building blocks’;

- Support a ‘no code’ model that covers a wide variety of automated, advanced data transformations;

- Use a white-label product that integrates with existing business processes and databases and can be deployed on-premises or in the cloud;

- Retrieve and process complex data in hours.

…