Caringo, a niche object storage supplier, has hit the big time at UK supercomputer outfit JASMIN.

JASMIN is a clustered supercomputer at the Science and Technology Facilities Council’s (STFC) Rutherford Appleton Laboratory (RAL) in Oxfordshire, UK.

It is managed by RAL Space’s Centre for Environmental Data Analysis (CEDA). In 2017 it had 20PB of usable capacity, mostly Panasas HPC storage, described as the largest single-site Panasas installation and Panasas realm worldwide.

In June this year JASMIN added 30PB of usable capacity with a Quobyte software-defined scale-out parallel file system using Dell and Super Micro hardware.

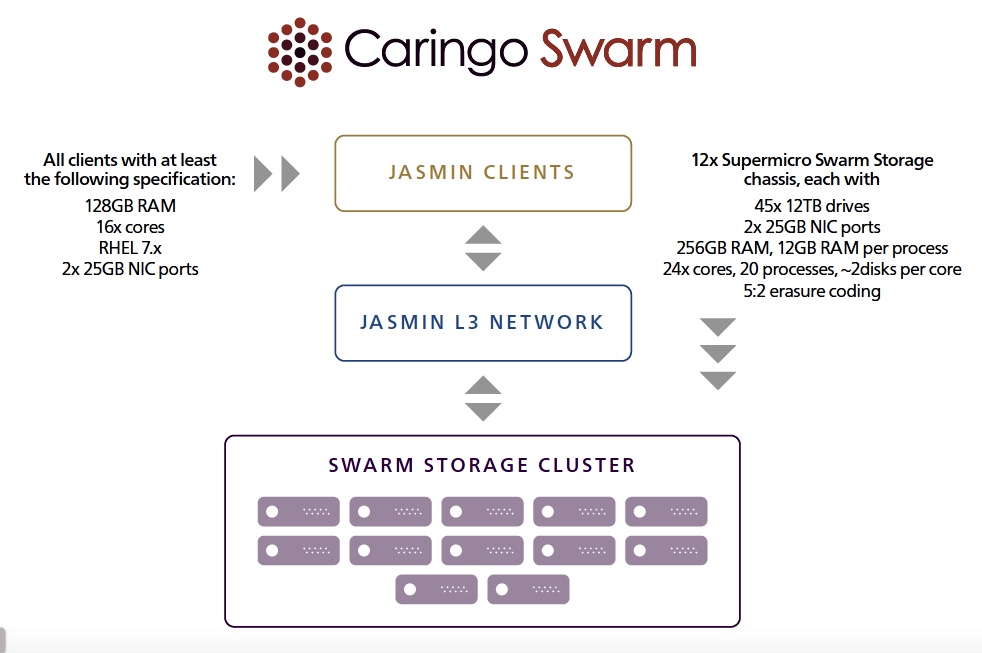

A sixth of this, 5PB, is interchangeable between file and S3-format object storage. A separate 5PB of dedicated S3 Swarm object storage, with an NFS interface, was bought from Caringo and uses Super Micro hardware.

JASMIN also added 500TB of Pure Storage FlashBlade capacity with a NAS interface, to be used for home and scratch space serving small-file, compilation and metadata-heavy workloads.

Caringo announced that, in JASMIN benchmark testing, Swarm delivered 35GB/sec read and 12.5GB/sec write aggregate S3 throughput.

In NFS throughput benchmarks, a single SwarmNFS instance delivered 1.6GB/sec of sustained streaming (3PB+ per month) with no caching or spooling.
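As a quick sanity check on the monthly figure, the sketch below converts a sustained 1.6GB/sec into petabytes per month. It assumes decimal units (1PB = 1,000,000GB) and a 30-day month; both are our assumptions, not Caringo's stated basis. On those terms the rate works out to roughly 4.1PB, so "3PB+" looks like a conservative rounding.

```python
# Back-of-the-envelope check of the SwarmNFS streaming figure.
# Assumptions (ours, not Caringo's): decimal units, 1PB = 1,000,000GB,
# and a 30-day month of uninterrupted streaming.
GB_PER_SEC = 1.6
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400
DAYS_PER_MONTH = 30


def pb_per_month(gb_per_sec: float, days: int = DAYS_PER_MONTH) -> float:
    """Convert a sustained GB/sec rate into petabytes moved per month."""
    total_gb = gb_per_sec * SECONDS_PER_DAY * days
    return total_gb / 1_000_000


print(f"{pb_per_month(GB_PER_SEC):.2f} PB per month")  # prints "4.15 PB per month"
```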

Tony Barbagallo, Caringo’s CEO, said: “These results are similar to what parallel file systems achieved in the same environment and we have a solid roadmap to continued performance improvements in upcoming Swarm releases.”

Parallel bars

The parallel file system in question is understood to be Panasas’ PanFS. “Similar” means that Caringo did 35GB/sec on S3 reads while the parallel file system did 37GB/sec on reads. For writes the numbers were 12.5GB/sec for Caringo over S3 and 37GB/sec for the parallel file system.
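Expressed as ratios, using only the throughput numbers quoted above, the gap is small on reads and large on writes. This short sketch does the division; the dictionary layout and function name are illustrative.

```python
# Throughput figures as quoted in the article, in GB/sec.
results = {
    "read": {"swarm_s3": 35.0, "parallel_fs": 37.0},
    "write": {"swarm_s3": 12.5, "parallel_fs": 37.0},
}


def relative_throughput(swarm: float, pfs: float) -> float:
    """Return Swarm throughput as a percentage of the parallel file system's."""
    return 100.0 * swarm / pfs


for op, r in results.items():
    pct = relative_throughput(r["swarm_s3"], r["parallel_fs"])
    print(f"{op}: {pct:.1f}% of parallel file system throughput")
# prints:
# read: 94.6% of parallel file system throughput
# write: 33.8% of parallel file system throughput
```

In other words, Swarm reached about 95 per cent of the parallel file system's read rate but only about a third of its write rate.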

Even so, this rough equivalence to parallel file system read throughput is unexpected.

A Caringo case study (registration required) states: “The performance requirements defined by STFC using 2 Gigabyte objects with sequential access and erasure-coded data via the S3 and NFSv4 protocols were set by a minimum requirement and targets based around the parallel file system performance for similar number of HDD spindles.

“JASMIN data analyses work flows are mostly WORM (Write once read many), so the STFC was less concerned with matching the write performance of the parallel file system than the read performance.”

Panasas this month quadrupled PanFS performance with v8 software and new ActiveStor Ultra hardware.

The specific improvements for S3 access are not known, but Caringo might fare less well in a bake-off with Panasas if it were run today.

Object lesson

Swarm read bandwidth is higher than expected because, Caringo says, data is written raw to disk together with its metadata.

Identifying attributes such as the unique ID, object name and location on disk are published from the metadata into a patented, dynamic, shared in-memory index that handles lookups. Caringo says this design is quite ‘flat’, infinitely scalable and very low latency, as there are zero IOPS (input/output operations per second) to first byte: locating an object needs no disk operation before its data is read.

There is no need to look up metadata in an external metadata database. In addition, automatic load balancing of requests, using a patented algorithm, allows all nodes and all drives to be addressed in parallel.
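To illustrate the general idea of "zero IOPS to first byte", here is a toy sketch in which each object is stored raw alongside its metadata, and its ID, name and location are published into an in-memory index. A read then costs a dictionary lookup plus a single simulated disk read. This is a hypothetical illustration only: the `Drive` and `Cluster` classes are invented for this example, and the "fewest objects" placement rule is a simple stand-in, not Caringo's patented index or load-balancing algorithm.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Drive:
    """Hypothetical simulated disk: objects are stored raw with their metadata."""
    blobs: list = field(default_factory=list)
    reads: int = 0  # counts simulated disk I/O operations

    def append(self, metadata: dict, data: bytes) -> int:
        self.blobs.append((metadata, data))
        return len(self.blobs) - 1  # offset of the new object

    def read(self, offset: int) -> bytes:
        self.reads += 1
        return self.blobs[offset][1]


class Cluster:
    """Hypothetical cluster with a shared in-memory index (illustrative only)."""

    def __init__(self, n_drives: int):
        self.drives = [Drive() for _ in range(n_drives)]
        # object ID -> name and location; lookups never touch disk
        self.index: dict = {}

    def put(self, name: str, data: bytes) -> str:
        obj_id = uuid.uuid4().hex
        # Stand-in for load balancing: pick the drive holding the fewest objects
        drive_no = min(range(len(self.drives)), key=lambda i: len(self.drives[i].blobs))
        metadata = {"id": obj_id, "name": name, "size": len(data)}
        offset = self.drives[drive_no].append(metadata, data)
        # Publish identifying attributes into the in-memory index
        self.index[obj_id] = {"name": name, "drive": drive_no, "offset": offset}
        return obj_id

    def get(self, obj_id: str) -> bytes:
        entry = self.index[obj_id]  # in-memory lookup, no disk I/O
        return self.drives[entry["drive"]].read(entry["offset"])  # the only disk read


cluster = Cluster(n_drives=4)
ids = [cluster.put(f"obj-{i}", bytes([i]) * 8) for i in range(8)]
data = cluster.get(ids[3])
print(sum(d.reads for d in cluster.drives))  # prints 1: one data read, no metadata reads
```

Because every drive is reachable through the same index, independent reads can be spread across all drives at once; the sketch does not model that concurrency.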

That object storage performance can be compared with parallel file system performance in the same breath is somewhat surprising.

If resource-limited Caringo can produce such a good result, then larger object storage suppliers might be able to improve on it with, for example, metadata stored in flash.