At a press briefing yesterday to mark the company’s tenth birthday, Scality co-founder and CEO Jérôme Lecat talked about competition, its roadmap, and a new version of its RING object storage software.

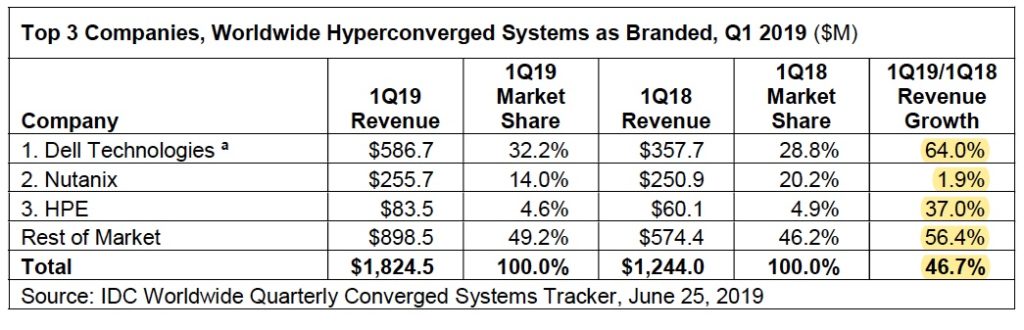

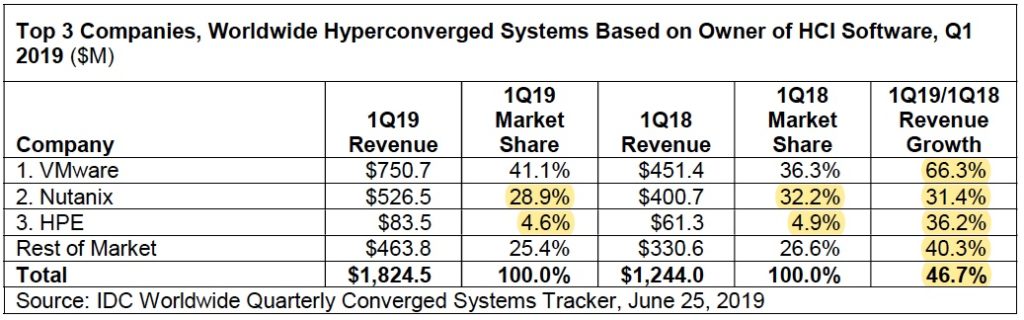

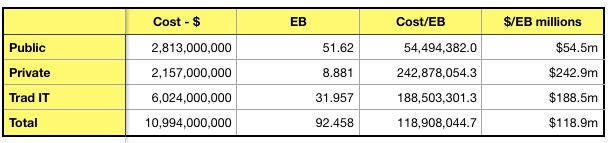

In no particular order, let’s start with Scality’s competitive landscape, as the company sees it. According to Lecat, Dell EMC is Scality’s biggest competitor, with NetApp StorageGRID in second place. Scality encounters NetApp about half as often as it meets Dell EMC.

The next two competitors are IBM Cloud Object Store and companies touting solutions based on Ceph. Collectively, Scality encounters them half the time it competes against NetApp.
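Taken together, these ratios put the competitors on a rough relative scale. The back-of-envelope sketch below normalises everything to Dell EMC. The figure of 100 Dell EMC encounters is hypothetical, and because no split between IBM and the Ceph-based vendors was given, the two are kept as one combined figure.

```python
# Relative encounter rates implied by Lecat's comments, normalised so that
# Dell EMC = 1.0. These are ratios only; no absolute deal counts were given.
DELL_EMC = 1.0
NETAPP_STORAGEGRID = 0.5 * DELL_EMC           # "about half the time it meets Dell EMC"
IBM_COS_PLUS_CEPH = 0.5 * NETAPP_STORAGEGRID  # collectively, half the NetApp rate


def encounters_per(dell_emc_encounters: float) -> dict[str, float]:
    """Scale the ratios to a hypothetical number of Dell EMC encounters."""
    return {
        "Dell EMC": dell_emc_encounters * DELL_EMC,
        "NetApp StorageGRID": dell_emc_encounters * NETAPP_STORAGEGRID,
        "IBM COS + Ceph-based (combined)": dell_emc_encounters * IBM_COS_PLUS_CEPH,
    }


print(encounters_per(100))
# {'Dell EMC': 100.0, 'NetApp StorageGRID': 50.0, 'IBM COS + Ceph-based (combined)': 25.0}
```

In other words, for every four encounters with Dell EMC, Scality meets NetApp about twice and IBM or a Ceph-based supplier about once.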

Roadmap

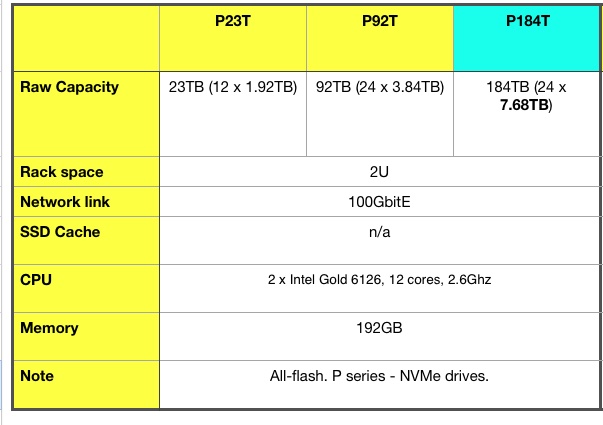

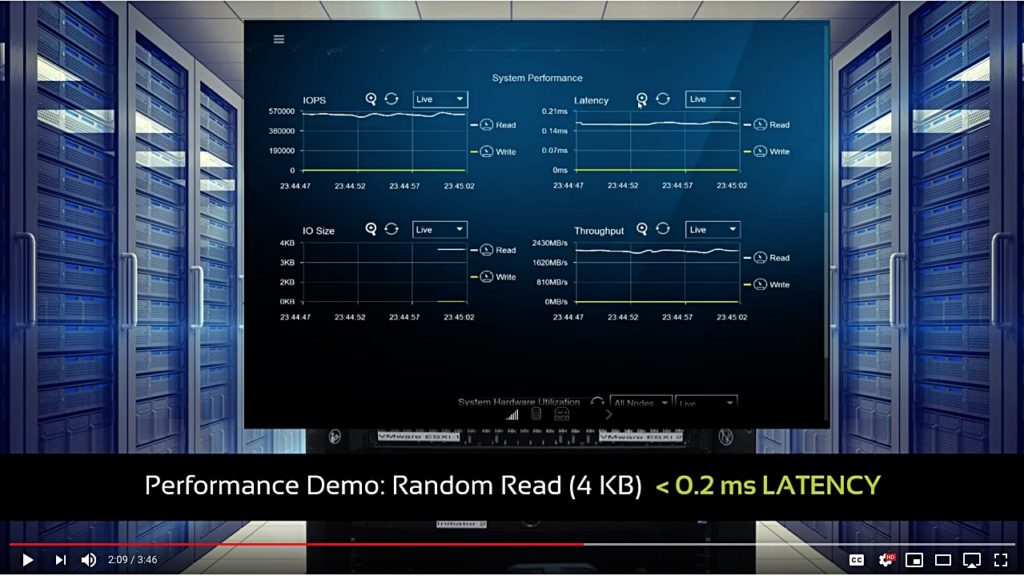

Scality is testing all-flash RINGs with QLC (4 bits/cell) flash in mind. NAND-based RINGs would need less electricity than disk-based counterparts and may be more reliable in a hardware sense.

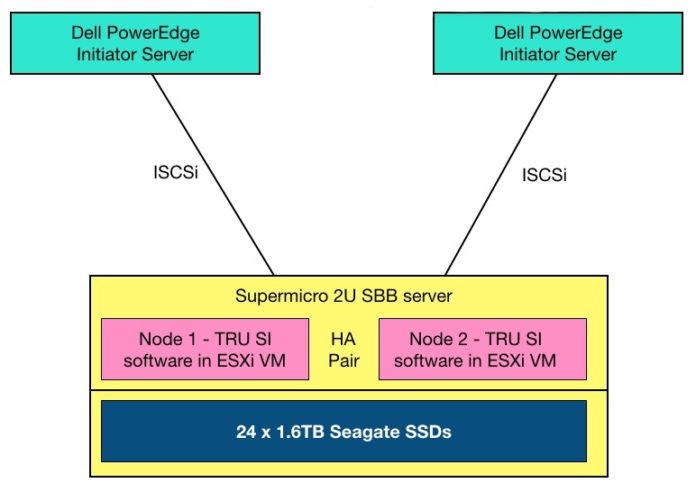

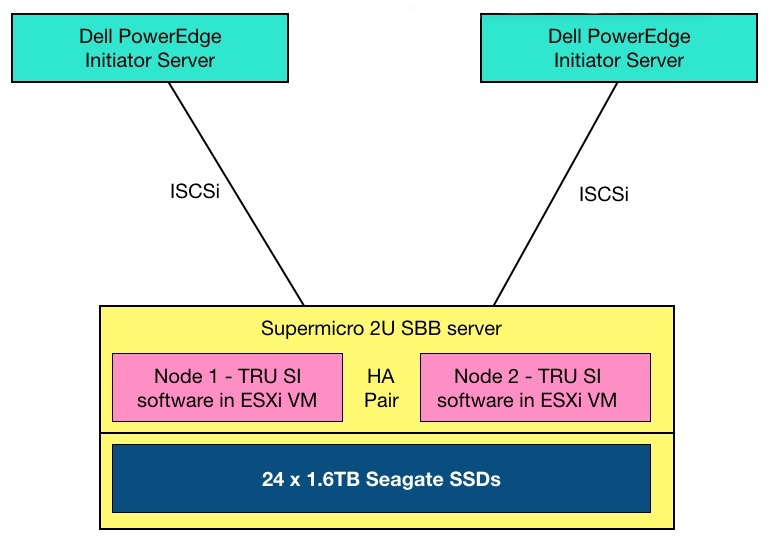

Scality is working with HPE to integrate RING with InfoSight, HPE’s analytics and management platform. HPE has also launched a tiered AI Data Node that pairs WekaIO software, for high-speed data access, with Scality RING software for longer-term data storage.

Read a reference config document for the AI Data Node here.

RING8

Scality has updated its RING object storage software, adding management features that span multiple RINGs and public clouds, plus new API support.

RING8, the eighth-generation RING, has:

- Improved security with added role-based access control and encryption support,

- Enhanced multi-tenancy for service providers,

- Broader AWS S3 API support and support for legacy NFS v4,

- eXtended Data Management (XDM) and mobility across multiple edge and core RINGs, and public clouds, with lifecycle tiering and Zenko data orchestrator integration.
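Lifecycle tiering generally means moving objects between storage locations as they age. How XDM expresses its policies is not described here, so the sketch below is a generic, hypothetical age-based policy rather than Scality’s or Zenko’s API: the tier names, the 30- and 365-day thresholds and the `placement` helper are all invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical tier names for illustration; these are not RING8 or Zenko identifiers.
EDGE, CORE, CLOUD = "edge-ring", "core-ring", "public-cloud"


@dataclass(frozen=True)
class TieringRule:
    min_age_days: int  # objects at least this old...
    target: str        # ...should live on this tier


# Ordered from the oldest threshold to the youngest; the first matching rule wins.
RULES = [
    TieringRule(min_age_days=365, target=CLOUD),
    TieringRule(min_age_days=30, target=CORE),
]


def placement(age_days: int, rules=RULES, default=EDGE) -> str:
    """Return the tier an object of the given age should occupy."""
    for rule in rules:
        if age_days >= rule.min_age_days:
            return rule.target
    return default  # young data stays where it was written


for age in (3, 45, 400):
    print(age, placement(age))
# 3 edge-ring
# 45 core-ring
# 400 public-cloud
```

Keeping the rules as ordered data rather than hard-coded branches makes it easy to add a tier without touching the placement logic.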

Details can be found on a datasheet downloadable here (registration needed).

An analyst at the briefing suggested Scality is making pre-emptive moves in case Amazon produces an on-premises S3 object storage product.

Edge and Core RINGs

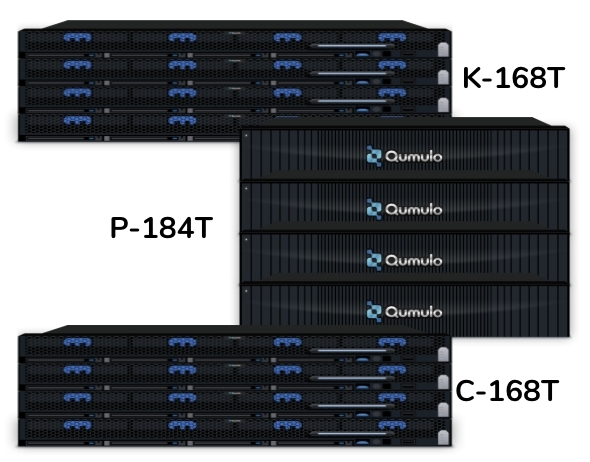

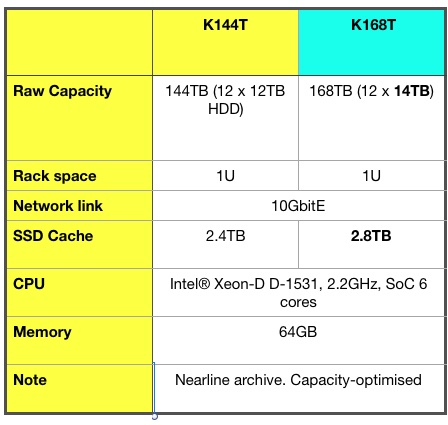

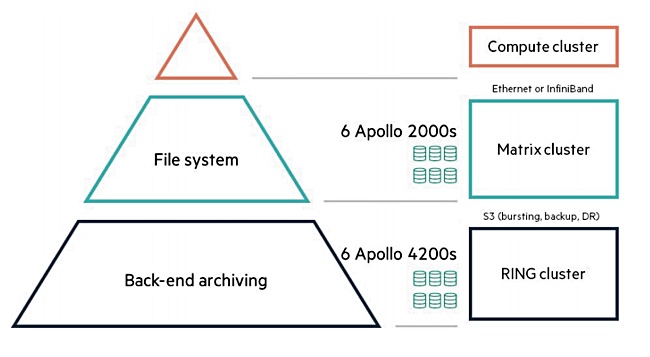

An edge RING site will be a smaller RING, say 200TB, with lower durability, such as a 9:3 erasure coding scheme. It will be used in remote office/branch office and embedded environments with large data requirements. Scality calls this a service edge; we might think of such sites as RINGlets.
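The 9:3 figure can be unpacked with a little arithmetic. The sketch below assumes 9:3 means 9 data fragments plus 3 parity fragments per object, using a maximum-distance-separable code such as Reed-Solomon, so that any 3 fragments can be lost without losing data. It also treats the "say 200TB" as raw capacity, which was not specified. `ec_profile` is a hypothetical helper, not part of any Scality tool.

```python
from fractions import Fraction


def ec_profile(data_fragments: int, parity_fragments: int) -> dict:
    """Basic properties of a k:m erasure-coding scheme (k data + m parity fragments).

    Assumes an MDS code (e.g. Reed-Solomon): any k of the k+m fragments
    are enough to rebuild the object.
    """
    total = data_fragments + parity_fragments
    return {
        "fragments_per_object": total,
        "tolerated_fragment_losses": parity_fragments,
        "storage_overhead": Fraction(total, data_fragments),  # raw bytes per usable byte
        "usable_fraction": Fraction(data_fragments, total),
    }


edge = ec_profile(9, 3)
raw_tb = 200  # hypothetical: treat the "say 200TB" edge RING as raw capacity
print(edge["fragments_per_object"], edge["tolerated_fragment_losses"])  # 12 3
print(float(edge["storage_overhead"]))  # 1.3333333333333333
print(raw_tb * edge["usable_fraction"])  # 150
```

Under these assumptions a 200TB edge RING would hold about 150TB of user data and survive three simultaneous fragment losses.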

The edge RINGs replicate their data to a central, much larger RING with higher durability, such as 7:5 erasure coding, which can withstand more hardware component failures.
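The cost of the core’s higher durability shows up as capacity overhead. Assuming 7:5 means 7 data plus 5 parity fragments, each object is split into 12 fragments and can survive the loss of any 5 of them, at roughly 1.71 raw bytes per usable byte, compared with 1.33 for a 9:3 scheme that survives 3 losses. The sketch sizes a core RING for a hypothetical fleet of ten edge sites each holding 150TB of data; the fleet size, the per-site figure and the `raw_needed` helper are illustrative assumptions, and replication is modelled simply as one full copy of each edge site’s data.

```python
def raw_needed(usable_tb: float, data_fragments: int, parity_fragments: int) -> float:
    """Raw capacity needed to store `usable_tb` under k:m erasure coding."""
    return usable_tb * (data_fragments + parity_fragments) / data_fragments


# Hypothetical fleet: 10 edge RINGs, each holding 150TB of user data.
edge_sites, usable_per_edge_tb = 10, 150
replicated_tb = edge_sites * usable_per_edge_tb  # 1500TB arrives at the core

core_raw_tb = raw_needed(replicated_tb, 7, 5)
print(f"core raw capacity at 7:5: {core_raw_tb:.0f}TB")                # 2571TB
print(f"same data at 9:3: {raw_needed(replicated_tb, 9, 3):.0f}TB")    # 2000TB
```

Moving from 9:3 to 7:5 at the core costs roughly 570TB of extra raw capacity in this example, in exchange for tolerating two more simultaneous fragment losses.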
