Data protector Arcserve has appointed Christian van den Branden as CTO, leading the global research and development group, advancing Arcserve’s technology innovation, and driving the strategic direction of the product roadmap. Most recently, van den Branden served as CTO at Qualifacts, a healthcare technology company. Prior to this, he held leadership roles including head of engineering at ZeroNorth, XebiaLabs, SimpliVity, DynamicOps, and EMC Corporation. Prior Arcserve CTO Ivan Pittaluga is still listed on LinkedIn in that role.

…

Flash controller firmware startup Burlywood announced on November 1 that Mike Jones has become its CEO, with founder and original CEO Tod Earhart stepping aside to be CTO – a classic tech startup founding CEO move. Funny that: we had him in place in August as a Burlywood press release confirms.

…

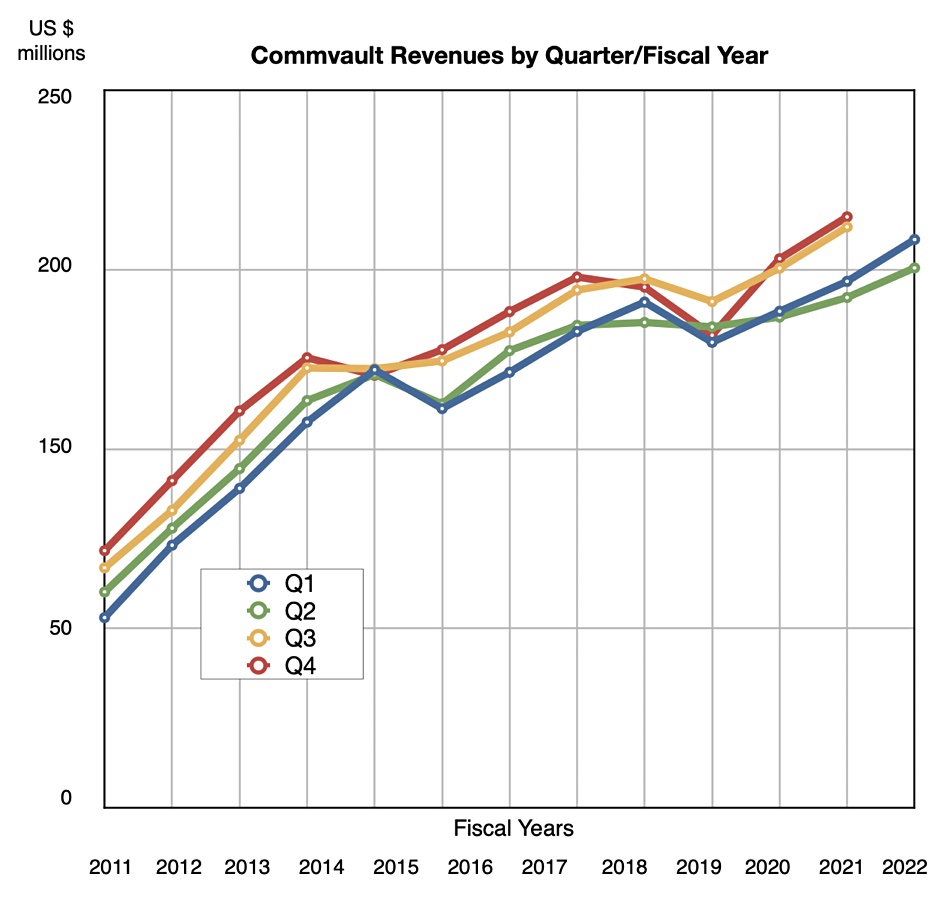

Data protector Commvault has announced the Metallic File & Object Archive. It’s a Data Management as a Service (DMaaS) offering designed to help organizations deal with Governance, Risk, and Compliance (GRC) requirements. Commvault says it uses data insights, access controls, tagging, metadata search, audit trails, and reports to help manage compliance needs over a large amount of unstructured data. The service provides predictable cost modelling, actionable data insights, flexible bring your own storage (BYOS) options, and compliance-ready operations. It’s virtually air-gapped with logical segmentation of the access keys. More information here.

…

Data protector Druva has earned ISO/IEC 27001:2013 certification, a standard published by the International Organization for Standardization. This milestone, it says, proves the company’s commitment to data security and ability to meet the highest standards for security excellence. ISO/IEC 27001 includes more than 100 requirements for establishing, implementing, maintaining and continually improving an information security management system.

…

World Digital Preservation Day is taking place today, November 3, 2022 – so designated by the Digital Preservation Coalition. The members are listed here, and don’t include any storage supplier. Steve Santamaria, CEO of Folio Photonics, said about the day: “Storage that is highly reliable, long-lived, easily accessible, and cost-efficient is crucial to any data preservation strategy. We have yet to see an ideal storage technology developed that strikes the right balance between these vectors. However, new technologies such as next-generation tape storage, advanced optical storage, and DNA storage are all currently being developed to sit at the center of data preservation strategies around the globe.”

…

MRAM developer Everspin says its EMxxLX chips are now generally available with an xSPI serial interface, and claims they are the highest-performing persistent memory available today. They offer SRAM-like performance with low latency, and have a full read and write bandwidth of 400MB/sec via eight I/O signals with a clock frequency of 200MHz. The chips are offered in densities from 8 to 64 megabits and can replace alternatives such as SRAM, BBSRAM, FRAM, NVSRAM, and NOR flash devices. Everspin says these are low-powered devices and deliver the best combination of performance, endurance, and retention available today. Data is retained for more than ten years at 85°C and more than 100 years at 70°C. Write speeds more than 1,000 times faster than most NOR flash memories are claimed.
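
As a back-of-envelope check on the 400MB/sec figure: eight I/O lines clocked at 200MHz move 200MB/sec if each line transfers one bit per clock, so the quoted number is consistent with double data rate signaling (two transfers per clock). The DDR assumption is ours and is not stated in Everspin’s claim as reported here; the function name and parameters in this Python sketch are purely illustrative.

```python
def peak_bandwidth_mb_per_s(io_lines: int, clock_mhz: float, transfers_per_clock: int) -> float:
    """Raw peak bandwidth in MB/s for a bus with `io_lines` data signals.

    Each line carries one bit per transfer, so the bus moves
    io_lines * clock_mhz * transfers_per_clock megabits per second.
    Dividing by 8 converts megabits to megabytes.
    """
    megabits_per_second = io_lines * clock_mhz * transfers_per_clock
    return megabits_per_second / 8


# Single data rate: one transfer per clock cycle.
sdr = peak_bandwidth_mb_per_s(io_lines=8, clock_mhz=200, transfers_per_clock=1)

# Double data rate (ASSUMPTION, not stated in the source): two transfers per clock.
ddr = peak_bandwidth_mb_per_s(io_lines=8, clock_mhz=200, transfers_per_clock=2)

print(f"SDR: {sdr:.0f} MB/s, DDR: {ddr:.0f} MB/s")
```

This is raw bus arithmetic only; it ignores command and address overhead, so sustained throughput on a real device may differ.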

…

Too many companies with no product news and nothing else to say run reports with findings that customers need their products. Today’s example is Kaseya with a Datto Global State of the MSP Report – “a report full of data and insights on topics that range from how MSPs run their business, the services they offer, and what is driving growth in today’s changing landscape.” The hybrid workforce is here to stay (you didn’t read it here first), ransomware and phishing are getting worse (you don’t say) and the biggest challenge facing MSPs is … wait for it … competition (as opposed to the challenges facing literally every other type of business in the world). Yawn. We’re told the most surprising finding is that the revenue generated from break-fix is increasing.

…

FD Technologies announced the appointment of Ashok Reddy as CEO of its KX business unit, reporting to Group CEO Seamus Keating. KX is a data analysis software developer and vendor with its KX Insights platform built upon the proprietary time series database kdb+ and its programming language q. The company was founded in 1993 by Janet Lustgarten and Arthur Whitney, developer of the k language. Ireland-based investor First Derivatives (FD) increased its stake to 65 percent in 2014 and bought the company outright in 2018.

…

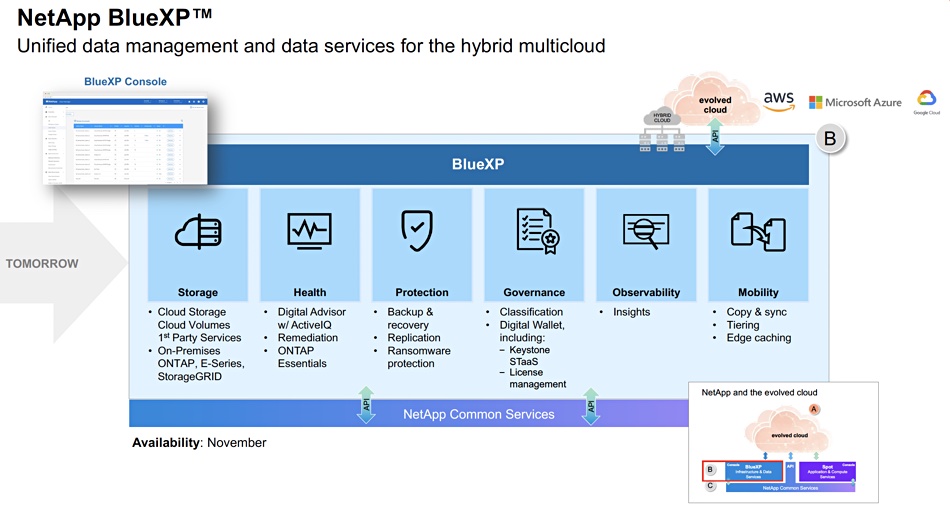

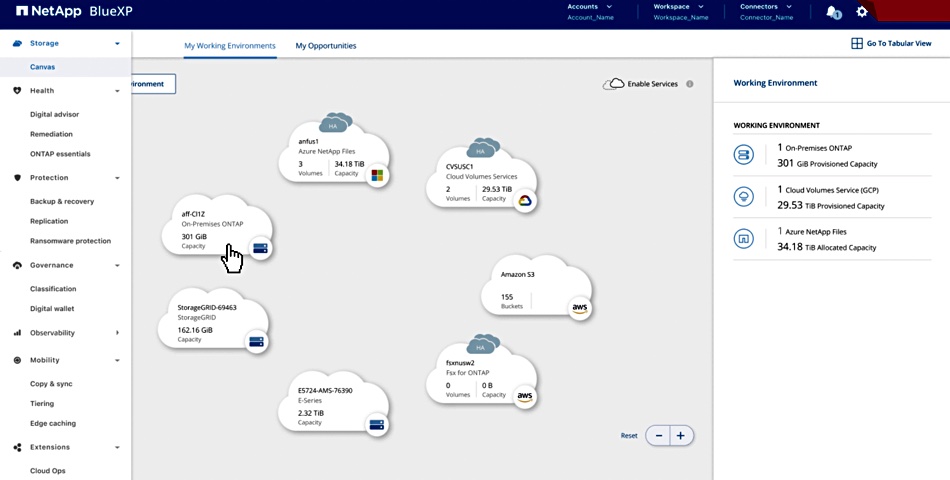

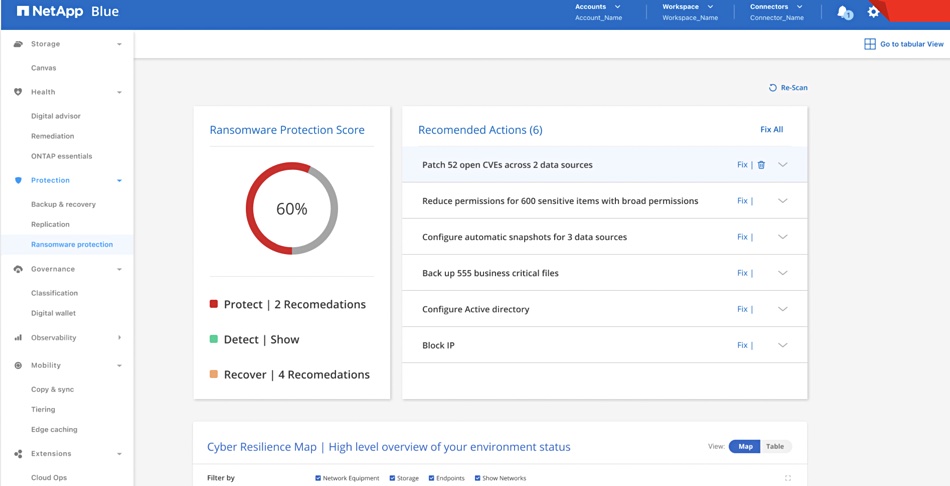

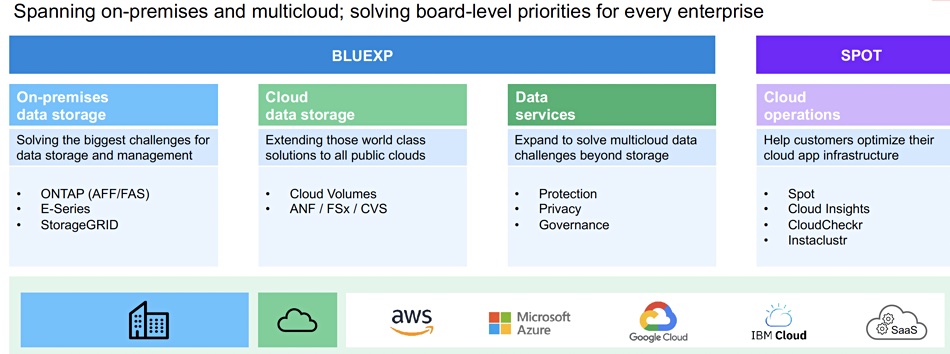

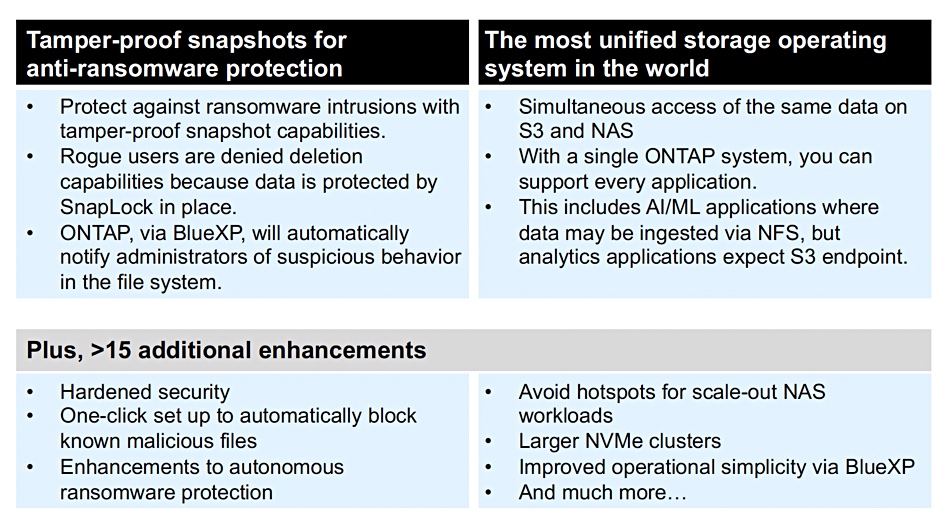

NetApp is going even greener. It has announced availability of new ways (BlueXP, Cloud Insights Dashboard, LCA reports, storage efficiency guarantee) for customers to monitor, manage, and optimize their carbon footprints across hybrid, multi-cloud environments. It has a commitment to achieve a 50 percent intensity reduction of Scope 3 Greenhouse Gas (GHG) emissions (those produced by an organization’s value chain) by 2030, and a 42 percent reduction of Scope 1 (emissions controlled or owned by an organization) and Scope 2 (electricity, heat, and cooling purchases) through adoption of a science-aligned target.

The Lifecycle Assessment (LCA) carbon footprint report uses Product Attribute Impact Algorithm (PAIA) methodology. PAIA is a consortium of information and communication technology peers, sponsored by MIT. The PAIA consortium allows NetApp to produce a streamlined LCA for its products that is aligned with leading standards including EPEAT, IEEE 1680.1 and the French product labeling initiative Grenelle.

…

Nutanix global channel sales boss, Christian Alvarez, is leaving for another opportunity after spending more time with his family. SVP Global Customer Success Dave Gwynn is taking over the position.

…

Pure Storage announced continued momentum within the financial services industry as global banks, asset management firms and financial technology organizations become customers. To date, nine of the top ten global investment banks, seven of the top ten asset management companies, and five of the top ten insurance organizations use Pure Storage products. Pure Storage’s financial services business reached an all-time high last year, growing more than 30 percent year-over-year in FY22.

…

In-cloud database accelerator Silk has promoted Chris Buckel from VP business development and alliances to VP strategic partnerships.

…

There are press rumors that Toshiba will finally be bought by a Japan Industrial Partners-led consortium for $16.1 billion in cash and loans. The consortium includes domestic Japanese companies Orix Corp and Chubu Electric Power Co, plus global investment firms Baring Private Equity Asia, CVC Capital Partners and others. A Bain-led consortium is also involved in the current (second) round of bidding.