Vector database startup Pinecone has launched Pinecone Assistant, an AI agent-building API service to speed RAG development.

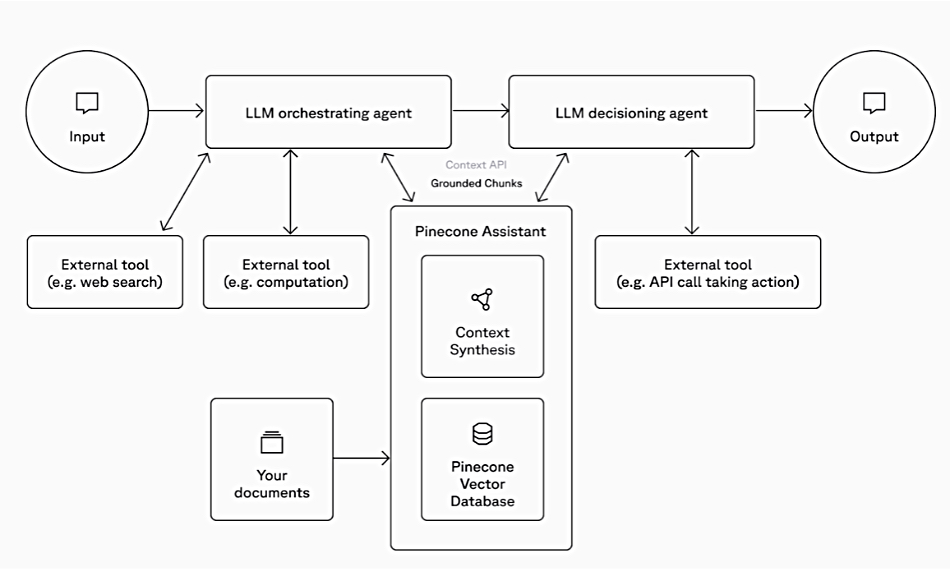

RAG applications ground the responses of large and small generative AI language models (LLMs) by looking for stored items similar to a request, using mathematically encoded representations of an item’s many aspects or dimensions, known as vector embeddings. These are stored in a vector database with facilities for similarity search. AI agents provide automated, intelligent responses to text input and can invoke LLMs to do their work. The LLM provides natural language processing capabilities to an agent, enabling it to interact with users in a human-like manner, understand complex queries, and generate detailed responses. Pinecone Assistant is designed to help build AI agents.
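The similarity lookup over vector embeddings can be illustrated with a toy example. This is a minimal sketch in plain Python, not Pinecone's implementation: the three-dimensional vectors, the item names, and the `top_k` helper are all made up for illustration, and cosine similarity is assumed as the distance measure.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, store, k=2):
    """Return the ids of the k stored vectors most similar to the query."""
    ranked = sorted(
        store,
        key=lambda item_id: cosine_similarity(query, store[item_id]),
        reverse=True,
    )
    return ranked[:k]

# Hypothetical 3-dimensional embeddings; real embeddings typically have
# hundreds or thousands of dimensions and come from an embedding model.
store = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "api-reference": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]  # hypothetical embedding of the user's request

print(top_k(query, store))  # ['refund-policy', 'shipping-times']
```

A production vector database replaces the exhaustive sort with an approximate nearest-neighbor index, but the ranking principle is the same.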

The company says: “Pinecone Assistant is an API service built to power grounded chat and agent-based applications with precision and ease.” It claims the service abstracts away the chunking, embedding, file storage, query planning, vector search, model orchestration, reranking, and other steps needed to build retrieval-augmented generation (RAG) applications.

Pinecone Assistant includes:

- Optimized interfaces with new chat and context APIs for agent-based applications

- Custom instructions to tailor your assistant’s behavior and responses to specific use cases or requirements

- New input and output formats, now supporting JSON, .md, .docx, PDF, and .txt files

- Region control with options to build in the EU or US

There is an Evaluation API and a Chat API that “delivers structured, grounded responses with citations in a few simple steps. It supports both streaming and batch modes, allowing citations to be presented in real time or added to the final output.”
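The difference between the two citation modes can be sketched as follows. This is an illustration only: the `TextChunk` and `Citation` event types and the `fake_stream` generator are hypothetical stand-ins, not the Pinecone Chat API's actual response format.

```python
from dataclasses import dataclass
from typing import Iterator, Union

@dataclass
class TextChunk:
    text: str

@dataclass
class Citation:
    source: str

Event = Union[TextChunk, Citation]

def fake_stream() -> Iterator[Event]:
    """Hypothetical event stream standing in for a streamed chat response."""
    yield TextChunk("Refunds are issued within 14 days")
    yield Citation("refund-policy.pdf")
    yield TextChunk(" and shipping is free.")
    yield Citation("shipping.md")

def render_streaming(events: Iterator[Event]) -> Iterator[str]:
    """Emit output piece by piece, showing each citation as soon as it arrives."""
    count = 0
    for event in events:
        if isinstance(event, TextChunk):
            yield event.text
        else:
            count += 1
            yield f" [{count}: {event.source}]"

def render_batch(events: Iterator[Event]) -> str:
    """Consume the whole response, then append the citations to the final output."""
    text_parts, sources = [], []
    for event in events:
        if isinstance(event, TextChunk):
            text_parts.append(event.text)
        else:
            sources.append(event.source)
    footer = "".join(f"\n[{i}] {s}" for i, s in enumerate(sources, start=1))
    return "".join(text_parts) + footer

for piece in render_streaming(fake_stream()):
    print(piece, end="")
print()
print(render_batch(fake_stream()))
```

Streaming suits chat interfaces where users read as the answer arrives; batch suits pipelines that post-process a complete answer.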

There is also a Context API that delivers structured context (i.e. a collection of the most relevant data for the input query) as a set of expanded chunks with relevancy scores and references. Pinecone says this makes it a powerful tool for agentic workflows, providing the necessary context to verify source data, prevent hallucinations, and identify the most relevant data for generating precise, reliable responses.

The Context API can be used with a customer’s preferred LLM, combined with other data sources, or integrated into agentic workflows as the core knowledge layer.
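One way to picture feeding retrieved context into a preferred LLM is shown below. It is a sketch under stated assumptions: the `Snippet` fields, the `min_score` threshold, and the prompt wording are hypothetical, chosen only to mirror the "chunks with relevancy scores and references" described above, and no real LLM or Pinecone call is made.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Snippet:
    content: str     # the expanded chunk of text
    score: float     # relevancy score, higher means more relevant
    reference: str   # where the chunk came from, e.g. file and page

def build_prompt(question: str, snippets: List[Snippet], min_score: float = 0.5) -> str:
    """Keep sufficiently relevant snippets, best first, and format a grounded prompt."""
    kept = sorted(
        (s for s in snippets if s.score >= min_score),
        key=lambda s: s.score,
        reverse=True,
    )
    lines = ["Answer using only the numbered sources below and cite them by number.", ""]
    for i, s in enumerate(kept, start=1):
        lines.append(f"[{i}] ({s.reference}) {s.content}")
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)

# Hypothetical retrieved context for illustration.
snippets = [
    Snippet("Employees accrue 1.5 vacation days per month.", 0.82, "handbook.pdf, p. 4"),
    Snippet("The cafeteria opens at 8 am.", 0.21, "facilities.md"),
    Snippet("Unused vacation days roll over for one year.", 0.67, "handbook.pdf, p. 5"),
]

prompt = build_prompt("How many vacation days do I get?", snippets)
print(prompt)  # this string would then be sent to whichever LLM the customer prefers
```

Because the references travel with each snippet, the application can verify a cited source before showing the answer.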

Pinecone Assistant includes metadata filters that restrict vector search by user, group, or category. It also supports custom instructions, which let users tailor responses by providing short descriptions or directives. For example, you can set your assistant to act as a legal expert for authoritative answers or as a customer support agent for troubleshooting and user assistance.
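The effect of a metadata filter can be sketched with a toy example. This is not Pinecone's filter syntax or implementation: the `matches` and `filtered_search` helpers, the exact-match semantics, and the precomputed scores are assumptions made for illustration.

```python
def matches(metadata, flt):
    """True if every key in the filter equals the document's metadata value."""
    return all(metadata.get(key) == value for key, value in flt.items())

def filtered_search(docs, flt, k=2):
    """Drop documents that fail the filter, then return the top-k ids by score."""
    candidates = [d for d in docs if matches(d["metadata"], flt)]
    candidates.sort(key=lambda d: d["score"], reverse=True)
    return [d["id"] for d in candidates[:k]]

# Hypothetical documents with similarity scores already computed for one query.
documents = [
    {"id": "doc-1", "metadata": {"group": "legal", "user": "alice"}, "score": 0.91},
    {"id": "doc-2", "metadata": {"group": "support", "user": "bob"}, "score": 0.88},
    {"id": "doc-3", "metadata": {"group": "legal", "user": "bob"}, "score": 0.75},
]

print(filtered_search(documents, {"group": "legal"}))                 # ['doc-1', 'doc-3']
print(filtered_search(documents, {"group": "legal", "user": "bob"}))  # ['doc-3']
```

Note that `doc-2` is excluded from the legal-only search despite its high score, which is the point of filtering: relevance is ranked only within the permitted subset.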

It has a serverless architecture, an intuitive interface, and a built-in evaluation and benchmarking framework. Pinecone says it’s easy to get started as you “just upload your raw files via a simple API,” and it’s quick to experiment and iterate.

Pinecone estimates in its own benchmark that Pinecone Assistant delivers results up to 12 percent more accurate than those of OpenAI assistants.

Pinecone Assistant is now generally available in the US and EU for all users, with more information available here. It is powered by Pinecone’s fully managed vector database. The company says that customer data is encrypted at rest and in transit, never used for training, and can be permanently deleted at any time.