Open-source object storage supplier MinIO has tweaked its code so AI, ML, and other data-intensive apps running on Arm chipsets go faster.

Arm servers are making inroads against Intel and AMD in the datacenter. In MinIO’s view, object storage has become foundational to AI infrastructure, with all the big LLMs (Large Language Models) being built on object storage. The company released its DataPOD reference architecture in August to help customers build exascale MinIO stores for data that needs to be pumped out to AI workload GPUs. MinIO is also working with Intel, providing the core object store within Intel’s end-to-end Tiber AI Cloud development platform.

Manjusha Gangadharan, head of Sales and Partnerships at MinIO, stated: “As the technology world re-architects itself around GPUs and DPUs, the importance of computationally dense, yet energy efficient compute cannot be overstated. Our benchmarks and optimizations show that Arm is not just ready but excels in high-performance data workloads.”

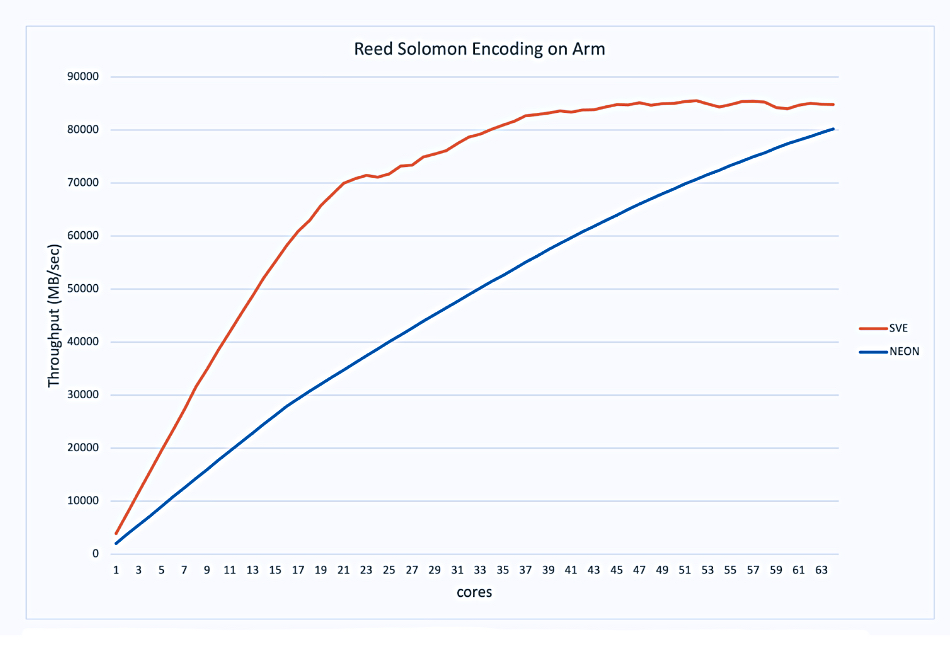

MinIO said it used Arm’s Scalable Vector Extension (SVE), which improves vector operation performance and efficiency, to speed up its Reed-Solomon erasure coding library. The result was 2x faster throughput compared to its previous NEON instruction set implementation, it claimed.

The new code uses a quarter of the available cores (16) to consume half the memory bandwidth used by NEON.
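For readers unfamiliar with what is being vectorized here, the inner loop of Reed-Solomon encoding is a multiply-accumulate over the finite field GF(2^8). The Go sketch below is a plain scalar illustration and not MinIO's code: the function names `gfMul`, `mulAdd`, and `encodeParity` are hypothetical, and the reducing polynomial 0x11d is an assumption chosen for the example. SIMD instruction sets such as NEON and SVE speed up this kind of loop by processing many bytes per instruction.

```go
package main

import "fmt"

// gfMul multiplies two elements of GF(2^8) bit by bit.
// The reducing polynomial x^8+x^4+x^3+x^2+1 (0x11d) is an assumption for this sketch.
func gfMul(a, b byte) byte {
	var p byte
	for b > 0 {
		if b&1 == 1 {
			p ^= a // addition in GF(2^8) is XOR
		}
		carry := a & 0x80
		a <<= 1
		if carry != 0 {
			a ^= 0x1d // reduce modulo the field polynomial
		}
		b >>= 1
	}
	return p
}

// mulAdd computes dst[i] ^= coeff * src[i] over GF(2^8).
// This is the byte-wise loop that vector instructions accelerate.
func mulAdd(dst, src []byte, coeff byte) {
	for i := range src {
		dst[i] ^= gfMul(coeff, src[i])
	}
}

// encodeParity builds one parity shard as a linear combination of
// equally sized data shards, one coefficient per shard.
func encodeParity(data [][]byte, coeffs []byte) []byte {
	parity := make([]byte, len(data[0]))
	for j, shard := range data {
		mulAdd(parity, shard, coeffs[j])
	}
	return parity
}

func main() {
	data := [][]byte{[]byte("hello"), []byte("world")}
	parity := encodeParity(data, []byte{1, 2})
	fmt.Printf("%x\n", parity)
}
```

With every coefficient set to 1 the parity shard reduces to a simple XOR of the data shards. A real Reed-Solomon code picks distinct coefficients per parity shard so that several lost shards can be rebuilt.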

SVE supports lane masking via predicated execution, allowing more efficient use of the vector units. Its scatter and gather instructions allow more flexible, efficient memory access.
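Lane masking is easier to picture with a small simulation. Go has no SVE intrinsics, so the sketch below only mimics the control flow in scalar code: the constant `lanes` is a hypothetical vector width, and `whileLT` imitates the idea behind SVE's WHILELT instruction, which marks a lane active while its index is below the loop bound. The point is that the final, partial chunk is handled by the same loop body with some lanes switched off, rather than by a separate scalar tail loop.

```go
package main

import "fmt"

// lanes is a hypothetical vector width in elements. Real SVE vector
// lengths are chosen by the hardware implementation.
const lanes = 8

// whileLT builds a predicate: lane k is active when i+k < n.
func whileLT(i, n int) [lanes]bool {
	var p [lanes]bool
	for k := 0; k < lanes; k++ {
		p[k] = i+k < n
	}
	return p
}

// xorInto XORs src into dst one simulated vector at a time.
// Inactive lanes are skipped, so no tail loop is needed.
// dst must be at least as long as src.
func xorInto(dst, src []byte) {
	n := len(src)
	for i := 0; i < n; i += lanes {
		p := whileLT(i, n)
		for k := 0; k < lanes; k++ {
			if p[k] {
				dst[i+k] ^= src[i+k]
			}
		}
	}
}

func main() {
	dst := make([]byte, 11) // 11 is deliberately not a multiple of lanes
	src := []byte("hello world")
	xorInto(dst, src)
	fmt.Println(string(dst))
}
```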

MinIO also boosted performance for the HighwayHash algorithm it uses for bit-rot detection, using SVE for the core hashing update function. The algorithm is run frequently during both GET (read) and PUT (write) operations.
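The reason the hash runs on both paths can be shown with a minimal sketch: a checksum is computed and stored on PUT, then recomputed and compared on GET. This is not MinIO's implementation. SHA-256 from the Go standard library stands in for HighwayHash, and `storedObject`, `put`, `get`, and `errBitRot` are hypothetical names used only for illustration.

```go
package main

import (
	"crypto/sha256"
	"errors"
	"fmt"
)

// storedObject pairs the payload with the checksum recorded at write time.
type storedObject struct {
	data []byte
	sum  [sha256.Size]byte
}

var errBitRot = errors.New("checksum mismatch: possible bit rot")

// put hashes the payload once on the write path and stores both.
func put(data []byte) storedObject {
	return storedObject{
		data: append([]byte(nil), data...), // private copy of the payload
		sum:  sha256.Sum256(data),
	}
}

// get re-hashes the payload on the read path and refuses to return
// data whose checksum no longer matches the stored one.
func get(o storedObject) ([]byte, error) {
	if sha256.Sum256(o.data) != o.sum {
		return nil, errBitRot
	}
	return o.data, nil
}

func main() {
	obj := put([]byte("payload"))
	obj.data[0] ^= 0x01 // simulate a single flipped bit on disk
	_, err := get(obj)
	fmt.Println(err)
}
```

Because every read and every write pays for one hash pass over the data, a faster hash update function translates directly into object throughput.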

NVIDIA’s BlueField-3 smartNIC/DPU (Data Processing Unit), which can effectively front-end storage drives, has an on-board 16-core Arm CPU, and MinIO’s software can run on it. MinIO says that, with 400Gbps Ethernet, BlueField-3 DPUs offload, accelerate, and isolate software-defined networking, storage, security, and management functions. “Given the criticality of disaggregating storage and compute in modern AI architectures, the pairing is remarkably powerful.”

Eddie Ramirez, VP of marketing and ecosystem development, Infrastructure Line of Business, Arm, played the power efficiency card, saying: “Delivering performance gains while improving power efficiency is critical to building a sustainable infrastructure for the modern, intensive data processing workloads demanded by AI. Performance efficiency is a key factor in why Arm has become pervasive across the datacenter and in the cloud, powering server SoCs and DPUs.”

We think that MinIO and Arm could play a role in AI inferencing workloads at edge IT locations. Their presence in the datacenter relies on operators adopting Arm servers over x86 boxes, and that could happen as Arm server performance and power efficiency match the needs of power-constrained datacenters.

A MinIO blog discusses its Arm work.