Parallel file system supplier WEKA has devised an on-premises WEKApod storage appliance to plug into Nvidia’s SuperPOD.

Update: SuperPOD details and image updated, 19 March 2024.

SuperPOD is Nvidia’s rack-scale architecture for deploying its GPUs. It houses 32 to 256 DGX H100 AI-focused GPU servers connected over an InfiniBand NDR network. A DGX H100 is an 8RU chassis containing 8 x H100 GPUs, meaning up to 2,048 in a SuperPOD, along with 640 GB of GPU memory, dual Xeon 8480C CPUs, and BlueField IO-accelerating DPUs.
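As a quick check on the SuperPOD sizing arithmetic, here is a minimal Python sketch. The constants come from the paragraph above; the variable and function names are illustrative, and the per-GPU figure assumes the 640 GB is GPU memory split evenly across the eight GPUs in a chassis.

```python
# Figures quoted in the article; names are illustrative only.
GPUS_PER_DGX = 8             # H100 GPUs per DGX H100 chassis
MIN_DGX_SYSTEMS = 32         # smallest SuperPOD
MAX_DGX_SYSTEMS = 256        # largest SuperPOD
GPU_MEMORY_PER_DGX_GB = 640  # assumed to be total GPU memory per chassis


def gpu_count(dgx_systems: int) -> int:
    """Total GPUs for a given number of DGX systems."""
    return dgx_systems * GPUS_PER_DGX


min_gpus = gpu_count(MIN_DGX_SYSTEMS)
max_gpus = gpu_count(MAX_DGX_SYSTEMS)

# Assumption: memory is divided evenly across the GPUs in one chassis.
memory_per_gpu_gb = GPU_MEMORY_PER_DGX_GB // GPUS_PER_DGX

print(f"GPUs per SuperPOD: {min_gpus} to {max_gpus}")
print(f"GPU memory per GPU: {memory_per_gpu_gb} GB")
```

The upper bound matches the 2,048 GPUs quoted for a full 256-system SuperPOD.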

WEKA chief product officer Nilesh Patel said: “WEKA is thrilled to achieve Nvidia DGX SuperPOD certification and deliver a powerful new data platform option for enterprise AI customers … Using the WEKApod Data Platform Appliance with DGX SuperPOD delivers the quantum leap in the speed, scale, simplicity, and sustainability needed for enterprises to support future-ready AI projects quickly, efficiently, and successfully.”

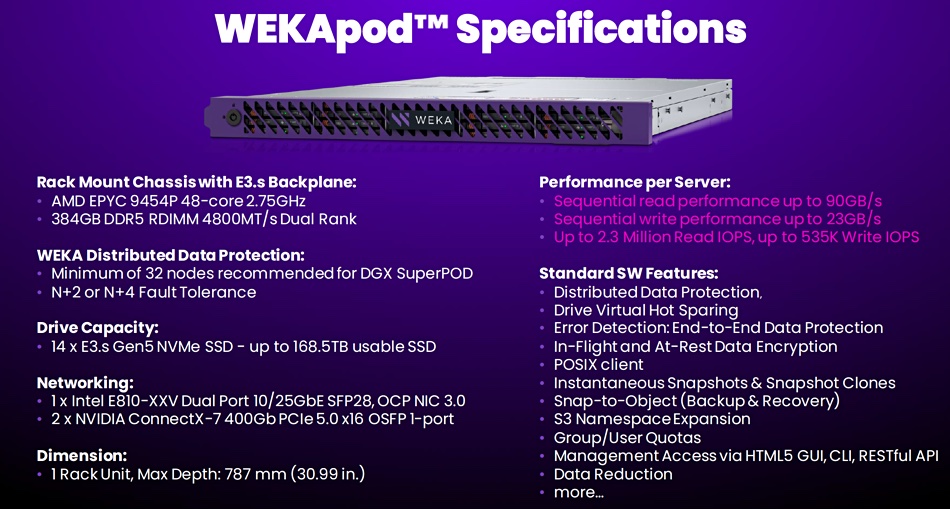

WEKApod is a turnkey hardware and software appliance, purpose-built as a high-performance data store for the DGX SuperPOD. Each appliance consists of pre-configured storage nodes and software for simplified and faster deployment. A 1 PB WEKApod configuration starts with eight storage nodes and scales up to hundreds. It uses Nvidia’s ConnectX-7 400 Gbps InfiniBand network card and integrates with Nvidia’s Base Command Manager for observability and monitoring.

WEKA has already supplied storage for BasePOD use, and its WEKApod is certified for the SuperPOD system, delivering up to 18.3 million IOPS, 720 GBps sequential read bandwidth, and 186 GBps write bandwidth from eight nodes. That’s 90 GBps/node when reading and 23.3 GBps/node when writing.
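The per-node figures follow from dividing the eight-node totals evenly. A minimal Python sketch of that arithmetic, using the numbers quoted above (the names are illustrative, and the even split across nodes is an assumption rather than a WEKA statement):

```python
# Eight-node totals quoted in the article; names are illustrative only.
NODES = 8
READ_GBPS = 720.0     # sequential read bandwidth, gigabytes per second
WRITE_GBPS = 186.0    # write bandwidth, gigabytes per second
IOPS_MILLIONS = 18.3  # IOPS, in millions


def per_node(total: float, nodes: int = NODES) -> float:
    """Divide a cluster-wide total evenly across the nodes."""
    return total / nodes


read_per_node = per_node(READ_GBPS)
write_per_node = per_node(WRITE_GBPS)
iops_per_node = per_node(IOPS_MILLIONS)

print(f"Read per node:  {read_per_node:.2f} GBps")
print(f"Write per node: {write_per_node:.2f} GBps")
print(f"IOPS per node:  {iops_per_node:.2f} million")
```

The write figure works out to 23.25 GBps per node, which the article rounds to 23.3, and the IOPS figure to roughly 2.3 million per node.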

WEKA claims its Data Platform’s AI-native architecture delivers the world’s fastest AI storage, based on SPECstorage Solution 2020 benchmark scores. The WEKApod’s performance numbers for an 8-node 1 PB cluster are 720 GBps read bandwidth and 18.3 million IOPS. Per 1RU node, that means 90 GBps read bandwidth, 23.3 GBps write bandwidth, and 2.3 million IOPS. A WEKA slide shows the hardware spec:

A WEKA blog declares: “Over the past three decades, computing power has experienced an astonishing surge, and more recent advancements in compute and networking have democratized access to AI technologies, empowering researchers and practitioners to tackle increasingly complex problems and drive innovation across diverse domains.”

But with storage, WEKA says: “Antiquated legacy storage systems, such as those relying on the 30-year-old NFS protocol, present significant challenges for modern AI development. These systems struggle to fully utilize the bandwidth of modern networks, limiting the speed at which data can be transferred and processed.”

They also struggle with large numbers of small files. WEKA says it fixes these problems. In fact, it claims: “WEKA brings the storage leg of the triangle up to par with the others.”

Find out more about the SuperPOD-certified WEKApod here.

Comment

Dell, DDN, Hitachi Vantara, HPE, NetApp, Pure Storage, and VAST Data have all made announcements at GTC relating to their products’ support for and integration with Nvidia’s GPU servers. Nvidia GPU hardware and software support is now table stakes for storage suppliers wanting to play in the GenAI space, particularly for GenAI training workloads. Any supplier without such support faces being knocked out of a storage bid for AI workloads running on Nvidia gear.