DataStax 2024 predictions:

- AI will become more deeply regulated in the wake of consumer and regulator backlash.

- The rise of Dark AI will cause societal and/or business disruption.

- AI companies will shake out and only those capable of managing the governance, risk, and compliance requirements will remain.

- GenAI will drive deeper operational efficiencies in the SMB market, fueling transformation and disruption.

- The “Instagrams” of GenAI apps will emerge in 2024.

…

IBM released Cloud Pak for Data v4.8.1 just before Christmas. It adds support for Red Hat OpenShift Container Platform version 4.14 and has new features for services such as Cognos Analytics, DataStage, IBM Match 360, and watsonx.ai. Full details here.

…

Intel has recruited Justin Hotard, HPE EVP and GM of High-Performance Computing, AI and Labs, to be EVP and GM of its Data Center and AI Group (DCAI), effective February 1. He’ll report directly to Intel CEO Pat Gelsinger. Hotard, who replaces Sandra Rivera, will be responsible for Intel’s suite of datacenter products spanning enterprise and cloud, including the Xeon processor family, graphics processing units (GPUs) and accelerators. He is expected to play an integral role in driving the company’s effort to introduce AI everywhere. Rivera became the CEO of Intel’s standalone Programmable Solutions Group on January 1.

…

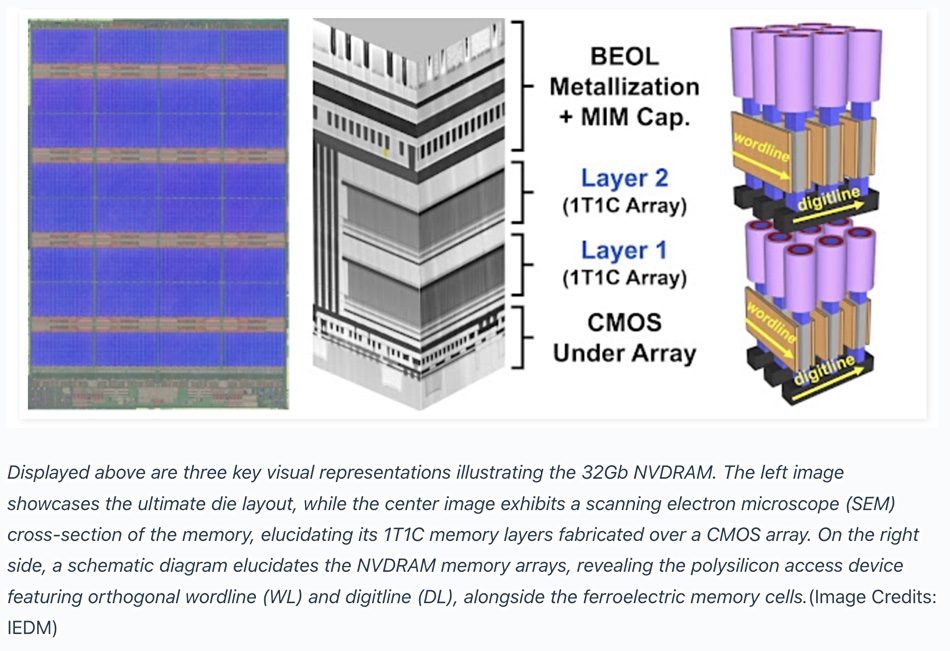

Micron presented its 32Gbit dual-layer stackable ferroelectric nonvolatile memory tech, NVDRAM, at the December 2023 IEDM meeting. It has FeRAM non-volatility and endurance, better-than-NAND retention, and read/write speed similar to DRAM. A paper entitled “NVDRAM: A 32Gbit Dual Layer 3D Stacked Non-Volatile Ferroelectric Memory with Near-DRAM Performance for Demanding AI Workloads” was presented by lead author Nirmal Ramaswamy, Micron VP of advanced DRAM (thanks to Techovedas for the information).

This is an alternative to the failed Optane storage-class memory, and like Optane comes in a stack of two layers. According to Bald Engineering, it uses a 5.7nm ferroelectric capacitor for charge retention in a 1T1C DRAM structure, while dual-gated polycrystalline silicon transistors control access. The stacked double memory layer resides above a CMOS access circuit layer on a 48nm pitch. The paper’s abstract states: “To achieve high memory density, two memory layers are fabricated above CMOS circuitry in a 48nm pitch, 4F² architecture. Full package yield is demonstrated from -40°C to 95°C, along with reliability of 10 years (for both endurance and retention).”

NVDRAM utilizes the LPDDR5 command protocol. It achieves a bit density of 0.45Gb/mm², higher than Micron’s 1b planar DRAM technology. The cost was not mentioned but could be high.
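As a rough sanity check on those figures, the stated capacity and bit density imply an approximate silicon area. The sketch below is only back-of-envelope arithmetic: it assumes the 0.45Gb/mm² figure is averaged over the area holding all 32Gbit, which the source does not confirm, and the function name is made up for illustration.

```python
# Back-of-envelope estimate of the area implied by capacity and bit density.
# Assumption (not confirmed by the source): the 0.45 Gb/mm^2 density is
# averaged over the whole area that holds the 32 Gbit.

CAPACITY_GBIT = 32.0          # capacity quoted in the paper title
DENSITY_GBIT_PER_MM2 = 0.45   # bit density quoted in the text


def implied_area_mm2(capacity_gbit: float, density_gbit_per_mm2: float) -> float:
    """Return the area in mm^2 needed to hold the capacity at the given density."""
    if density_gbit_per_mm2 <= 0:
        raise ValueError("density must be positive")
    return capacity_gbit / density_gbit_per_mm2


if __name__ == "__main__":
    area = implied_area_mm2(CAPACITY_GBIT, DENSITY_GBIT_PER_MM2)
    print(f"Implied area: {area:.1f} mm^2")  # prints "Implied area: 71.1 mm^2"
```

Under that assumption the device works out to roughly 71mm². If the density figure covers only the memory array, the full die would be larger.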

…

Storage array supplier Nexsan thinks that in 2024 there will be more cloud repatriation as organizations face inflated cloud costs. Organizations will need immutable data stores for protection and will increasingly adopt NVMe and NVMe-oF. Storage options that can decrease energy consumption per TB will become increasingly important. With the growth in unstructured data and data archives, cloud remorse, and extended retention policies, Andy Hill, EVP and worldwide pre-sales, says: “We see HDD continuing to play an essential role as a complement to solid-state options such as NVMe for organizations.”

…

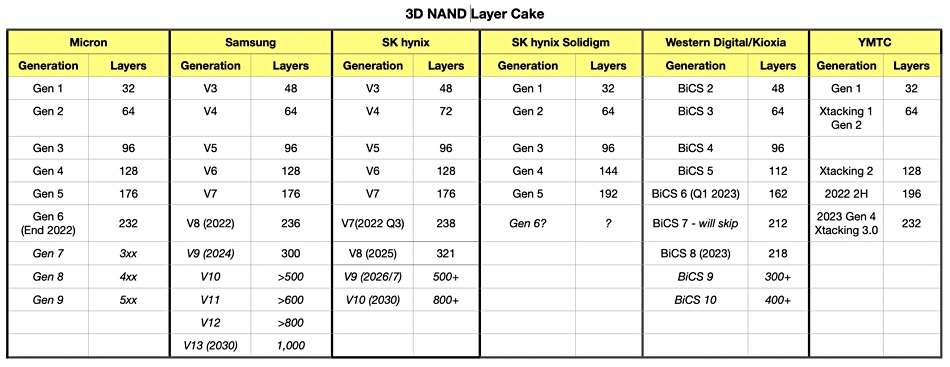

Samsung is expected to scale its V-NAND 3D NAND technology by more than 100 layers per generation and reach 1,000 layers by 2030.

…

SK hynix plans to supply HBM3E, the memory product it developed in August 2023, to AI technology companies, with mass production starting in the first half of 2024. The company will showcase Compute Express Link (CXL) interface technology, a test Computational Memory Solution (CMS) product based on CXL, and its Accelerator-in-Memory AiMX project – a low-cost, high-efficiency accelerator card for generative AI built on processing-in-memory chips – at CES 2024 in Las Vegas, January 9-12.

SK hynix plans to commercialize 96 GB and 128 GB CXL 2.0 memory solutions based on DDR5 in the second half of 2024 for shipments to AI customers. AiMX is SK hynix’s accelerator card product that specializes in large language models using GDDR6-AiM chips.

…

Reuters reports SK hynix aims to raise about $1 billion in a dollar bond deal. The company reported combined operating losses of 8.1 trillion won ($6.19 billion) during the first three quarters of 2023, caused by a demand slowdown for memory and NAND chips in smartphones and computers. Memory chip prices are expected to recover this year after supplier production cuts. SK hynix is investing to retain its lead in high bandwidth memory (HBM) chips.