Software RAID house Xinnor has developed xiSTORE, a storage software stack for the HPC and AI markets that combines its xiRAID engine with the Lustre parallel filesystem on off-the-shelf hardware.

Xinnor’s xiRAID software is among the fastest software RAID products available. It supports NVMe, SAS, and SATA drives, and works with local or remote block devices over any transport: PCIe, NVMe-oF or SPDK target, Fibre Channel, or InfiniBand. The software presents a local block device to the system and has a declustered RAID feature for HDDs. This distributes spare zones across all drives in the array and restores a failed drive’s data to those zones, making drive rebuilds faster.

Lustre (Linux + cluster) is an open source parallel filesystem used in HPC and supercomputing. It is the filesystem software used in DDN’s ExaScaler and HPE Cray ClusterStor arrays.

Xinnor says xiSTORE performance is optimized for HPC and AI workloads, and supports both HDD and NVMe SSD configurations. It integrates with Lustre clustered filesystems, includes virtual machine management, and has no hardware lock-in. For HDDs it uses a declustered RAID approach that delivers drive rebuilds up to 2.6 times faster than ZFS and up to ten times faster than conventional RAID systems, we’re told.
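To see why spreading spare zones across every drive shortens rebuilds, consider a back-of-the-envelope model. The Python sketch below is our own illustration, not Xinnor's algorithm: the drive capacity, per-drive throughput, array size, and stripe width are hypothetical values, and the model ignores foreground I/O, parity computation, and controller limits. It makes no attempt to reproduce the vendor's 2.6x or 10x figures.

```python
def conventional_rebuild_hours(capacity_tb, drive_mbps):
    """Dedicated hot spare: one spare drive must absorb every rebuilt byte,
    so its write speed is the bottleneck."""
    seconds = capacity_tb * 1e12 / (drive_mbps * 1e6)
    return seconds / 3600


def declustered_rebuild_hours(capacity_tb, drive_mbps, total_drives, data_strips):
    """Spare zones on all drives: rebuild reads and writes are shared
    evenly by the surviving drives (idealized assumption)."""
    survivors = total_drives - 1
    # Reconstructing one lost strip reads `data_strips` strips and writes one.
    io_bytes = (data_strips + 1) * capacity_tb * 1e12
    seconds = io_bytes / (survivors * drive_mbps * 1e6)
    return seconds / 3600


# Hypothetical inputs: 20 TB HDDs at 200 MB/s, 84 drives, 8 data strips per stripe.
conventional = conventional_rebuild_hours(20, 200)
declustered = declustered_rebuild_hours(20, 200, total_drives=84, data_strips=8)
print(f"conventional: {conventional:.1f} h")
print(f"declustered:  {declustered:.1f} h")
print(f"speedup:      {conventional / declustered:.1f}x")
```

In this idealized model the speedup grows with the number of surviving drives and shrinks with stripe width, which is why the benefit shows up mainly in large HDD enclosures.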

The xiSTORE software supports multiple RAID configurations, including RAID 5, 6, 7.3, N+M, nested, and declustered layouts, and is composed of dual-controller building blocks deployed in an HA cluster to eliminate single points of failure. It includes a silent data corruption protection mechanism that scans for and fixes errors in the background, with insignificant performance loss.

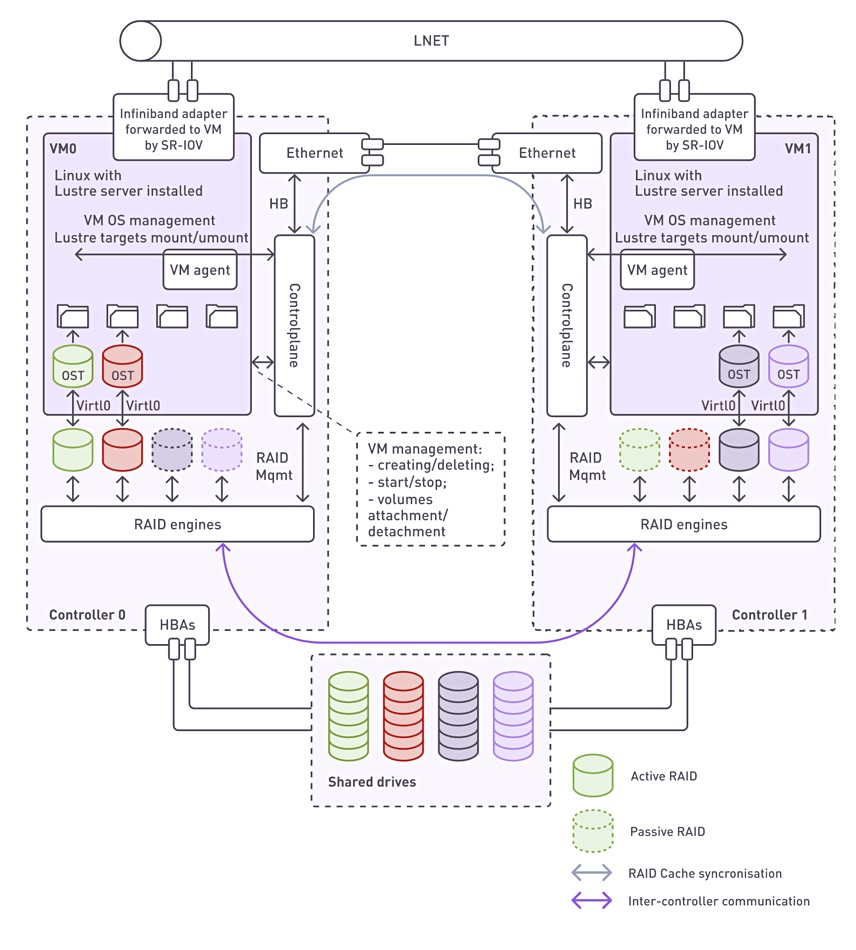

Users can add more JBODs to one node (scale-up) or increase both capacity and performance by adding more building blocks (scale-out). Here is an architecture diagram:

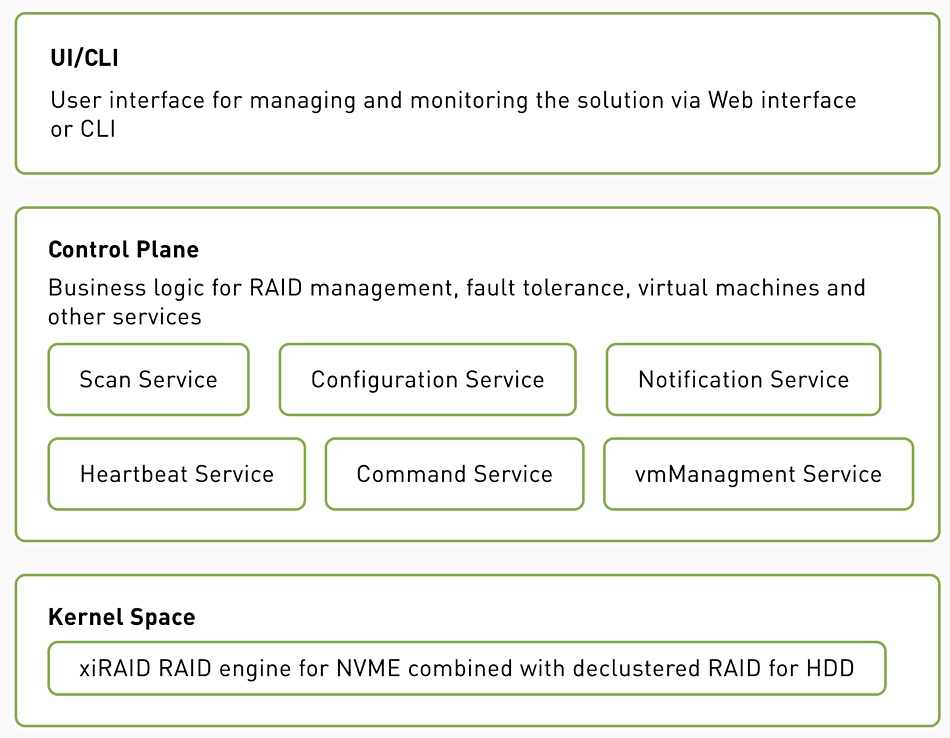

It can deliver over 80 GBps sequential read and write from 384 disk drives, a claimed 83 percent efficiency compared to raw device performance, and more than 60 GBps write and more than 80 GBps read from 16 NVMe SSDs, a claimed 94 percent efficiency. A node block diagram shows the controller components:

The RAID engine specifically supports RAID 0, 1, 10, 5, 6, 7.3, 50, 60, and 70. The declustered RAID feature supports dRAID1, dRAID5 (4D+1P, 8D+1P), dRAID6 (8D+2P, 16D+2P), and dRAID7.3 (16D+3P).
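The xD+yP notation gives the number of data (D) and parity (P) strips per stripe, which fixes both the number of drive failures a stripe tolerates and the share of raw capacity left for data. The short Python sketch below works that out for the declustered layouts listed above. It is an illustration only: the `usable_fraction` helper is our own, and the calculation ignores the capacity set aside for distributed spare zones, which the source does not quantify.

```python
import re

# Declustered layouts named in the article.
LAYOUTS = ["4D+1P", "8D+1P", "8D+2P", "16D+2P", "16D+3P"]


def parse_layout(layout):
    """Split an 'xD+yP' string into (data_strips, parity_strips)."""
    match = re.fullmatch(r"(\d+)D\+(\d+)P", layout.replace(" ", ""))
    if match is None:
        raise ValueError(f"unrecognized layout: {layout!r}")
    data, parity = map(int, match.groups())
    return data, parity


def usable_fraction(layout):
    """Fraction of each stripe that holds data rather than parity."""
    data, parity = parse_layout(layout)
    return data / (data + parity)


for layout in LAYOUTS:
    _, parity = parse_layout(layout)
    print(f"{layout:>7}: {usable_fraction(layout):.1%} usable, "
          f"tolerates {parity} drive failure(s) per stripe")
```

Wider stripes such as 16D+2P give up less capacity to parity than 8D+2P for the same fault tolerance, at the cost of reading more strips to reconstruct a lost one.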

The minimal node config is two servers with either AMD EPYC or Intel Nehalem (or newer) CPUs, each with:

- 1x SAS HBA SAS 9500-16e per NUMA node

- 1x InfiniBand 200 Gbps adapter per NUMA node

- 1x SAS JBOD with 84 SAS HDD + 4 SAS SSD

The use of off-the-shelf hardware means that the system should be more affordable than commercially available Lustre systems, and not be limited by hardware lock-in.

We referred to an xiSTORE microsite for the information in this article. Xinnor will present xiSTORE at booth 389 at the upcoming Supercomputing Conference (SC23), to be held in Denver, CO, November 12-17. Xinnor told us about a coming November 29 webinar at which it will showcase two recommended reference architectures: one based on HDD to address the workload of traditional HPC applications and another based on NVMe SSD to address the new challenge created by AI workloads. Xinnor says both can be combined in large installations that require both high capacity and performance.