Nvidia devised GPUDirect as a way to send data quickly from storage arrays to its GPU servers. Should MinIO support GPUDirect so that its object storage can also feed data quickly to Nvidia GPU servers?

GPUDirect is an IO model that bypasses the host server's CPU and DRAM. The remote NVMe-accessed all-flash array or filer communicates directly with the GPU server over an RDMA link, rather than having a host server control and manage the IO session.
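
To make the difference concrete, here is a toy Python model of the two data paths. It was written for this article and is not based on any Nvidia API. It assumes that a conventional read stages each payload in a host DRAM buffer (written once, then read back once) before copying it to GPU memory, while a GPUDirect-style transfer goes straight from storage to GPU memory. The hop names are illustrative labels, not real device or driver identifiers.

```python
# Toy model of two storage-to-GPU data paths. Hop names are illustrative
# labels only; they are not real device, driver or API identifiers.
CONVENTIONAL = ("storage", "host_dram", "gpu_memory")  # staged in host DRAM
GPUDIRECT = ("storage", "gpu_memory")                  # DMA/RDMA straight to GPU


def copies(path):
    """Number of hop-to-hop copies the payload makes along the path."""
    return len(path) - 1


def host_dram_traffic(path, nbytes):
    """Bytes written into and read back out of host DRAM.

    Assumes each intermediate host DRAM hop is written once and read once.
    """
    staged = sum(1 for hop in path[1:-1] if hop == "host_dram")
    return 2 * nbytes * staged


if __name__ == "__main__":
    one_gib = 1 << 30
    for name, path in (("conventional", CONVENTIONAL), ("gpudirect", GPUDIRECT)):
        print(name, copies(path), host_dram_traffic(path, one_gib))
```

For a 1 GiB read, the model counts two copies and 2 GiB of host DRAM traffic on the conventional path, against one copy and no host DRAM traffic on the direct path. It ignores protocol overhead, caching and the metadata exchange, so it only illustrates why removing the staging step relieves the host CPU and memory.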

Participating storage array vendors include Excelero, DDN, IBM (with Spectrum Scale), Pavilion Data and VAST Data (NFS over RDMA).

WekaIO also sends files from its filesystem to Nvidia’s GPU servers via GPUDirect, and NetApp supports GPUDirect Storage with ONTAP AI and (non-ONTAP) EF600 arrays.

MinIO positions its object storage as fast enough for performance-sensitive applications, citing benchmark results of 183.2 GB/sec (1.46 Tbit/sec) on reads and 171.3 GB/sec (1.37 Tbit/sec) on writes, using 32 AWS i3en.24xlarge instances, each with eight NVMe drives.
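
As a quick check on these figures, the sketch below converts the aggregate rates to Tbit/sec (decimal units, 8 bits per byte) and divides them across the 32 nodes and 256 drives. The even split across nodes and drives is an assumption made for illustration; the benchmark figures are cluster-wide totals.

```python
NODES = 32
DRIVES_PER_NODE = 8
READ_GB_S = 183.2   # aggregate read throughput quoted by MinIO
WRITE_GB_S = 171.3  # aggregate write throughput quoted by MinIO


def to_tbit_s(gb_s: float) -> float:
    """Convert GB/sec to Tbit/sec using decimal units (1 GB = 8 Gbit)."""
    return gb_s * 8 / 1000


def per_node(gb_s: float) -> float:
    """Throughput per node, assuming load is spread evenly across nodes."""
    return gb_s / NODES


def per_drive(gb_s: float) -> float:
    """Throughput per NVMe drive, assuming an even spread across all drives."""
    return gb_s / (NODES * DRIVES_PER_NODE)


if __name__ == "__main__":
    for label, rate in (("read", READ_GB_S), ("write", WRITE_GB_S)):
        print(f"{label}: {to_tbit_s(rate):.4f} Tbit/s, "
              f"{per_node(rate):.3f} GB/s per node, "
              f"{per_drive(rate) * 1000:.0f} MB/s per drive")
```

On these assumptions the reads work out to roughly 5.7 GB/sec per node and about 716 MB/sec per drive, and the writes to roughly 5.4 GB/sec per node and about 669 MB/sec per drive. The read conversion comes to 1.4656 Tbit/sec, so the quoted 1.46 figure appears to be truncated rather than rounded.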

While on an IT Press Tour, we asked MinIO co-founder and CEO AB Periasamy whether MinIO would support GPUDirect. He said “I’m looking at it,” but he was not convinced it was necessary.

We also asked storage architect Chris Evans what he thought about the idea, and we quote his answer in full because it explains a lot about how GPUDirect works: “I don’t think MinIO could support GPUDirect Storage in the current incarnation.

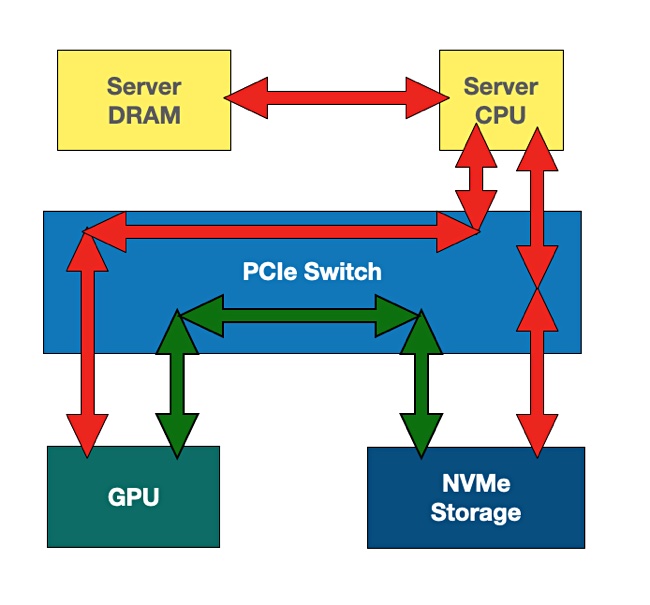

“The aim of the GPUDirect technology is to relieve the overhead of pushing data through the main CPU – eliminating the need for a ‘bounce buffer’.

“So splitting a pool of data into two components, we have the metadata and the content/data itself. In the models I’ve seen, the physical data is expected to be on NVMe devices or NVMe-oF devices. The bulk of the physical data moves directly between the GPU and the source storage (an NVMe-oF device) using DMA (direct memory access). I expect the mechanism to achieve this is that the GPU and application share metadata that indicates where the physical data is in the memory space, then the GPU reads it directly across the PCIe bus using DMA.

“For MinIO to use GPUDirect, the software would need to be storing data on NVMe devices. Data on SAS/SATA isn’t going to work. However, even with PCIe NVMe drives, I suspect that MinIO will need to be reading/writing to them directly via PCIe (a bit like WEKA or StorPool does), not using the Linux kernel for I/O.

“As the MinIO executable is only about 50MB in size and can be easily deployed everywhere, I would expect that there are no special I/O routines included to bypass the NVMe kernel support. As a result, in the current form, MinIO probably won’t support GPUDirect.

“To build support in, there would need to be some serious development to write directly to NVMe devices. At that point, the simplicity and flexibility of the solution is lost.”

We have put these points to AB and will add his reply as soon as we receive it.

Maybe we’ll get a MinIO Edge distribution and a separate MinIO GPUDirect one in the future.