IBM and Nvidia are building a DGX SuperPOD system based on IBM’s ESS 3200 storage server and Spectrum Scale. IBM has also updated its reference architecture for DGX PODs and released benchmark data for the ESS 3200 with a DGX POD.

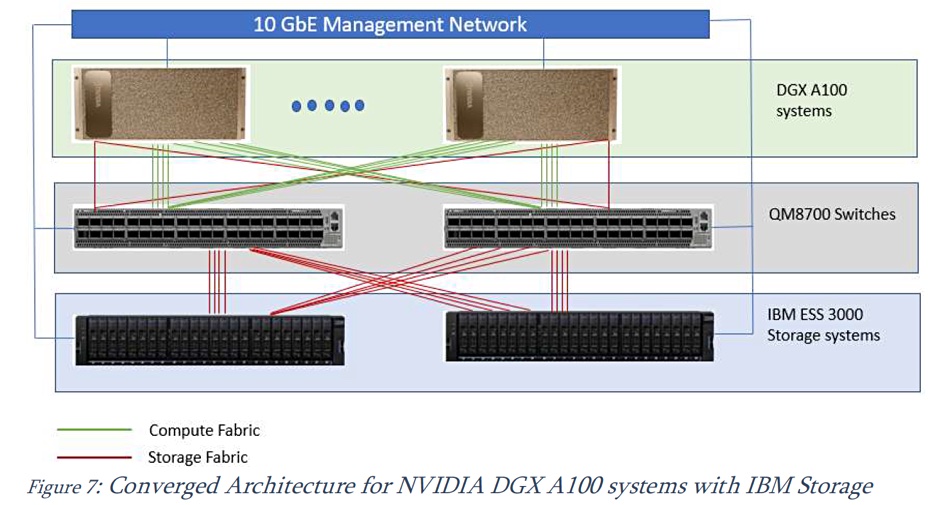

A DGX POD is a reference architecture for AI processing and contains up to nine Nvidia DGX-1 servers, twelve storage servers (from Nvidia partners), and three networking switches to support single- and multi-node AI model training and inference using Nvidia AI software. A SuperPOD is an updated and larger POD architecture that starts at 20 Nvidia DGX A100 systems and scales to 140 of them.

Douglas O’Flaherty, Global Ecosystems Leader for IBM Storage, has written a blog, in which he states: “IBM Storage is showcasing the latest in Magnum IO GPU-Direct Storage (GDS) performance with new benchmark results, and is announcing an updated reference architecture (RA) developed for Nvidia 2-, 4-, and 8-node DGX POD configurations. Nvidia and IBM are also committed to bringing a DGX SuperPOD solution with IBM Elastic Storage System 3200 (ESS 3200) by the end of Q3 of this year.”

He writes that “With future integration of the scalable ESS 3200 into Nvidia Base Command Manager, and including support for Nvidia Bluefield DPUs, networking and multi-tenancy will be simplified.”

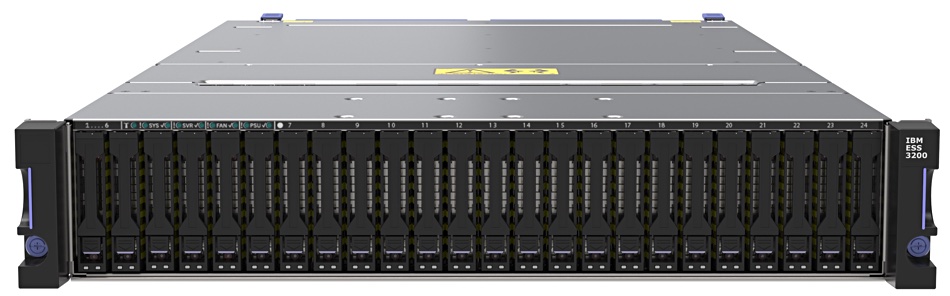

The ESS 3200 is a 2U × 24-bay all NVMe SSD system supporting up to eight InfiniBand HDR-200 or Ethernet-100 ports.

O’Flaherty says that IBM has “issued updated IBM Storage Reference Architectures with NVIDIA DGX A100 Systems,” and this is, he told us, “to reflect the increased capability of the ESS 3200 (2x that of the 3000)”. The Reference Architecture uses Nvidia’s CPU/DRAM bypass GPU-Direct scheme to set up RDMA links between the ESS 3200 storage and the DGX-A100 GPUs so that Spectrum Scale can read and write to these GPUs in the DGX POD at high speed.

According to a 2020 IBM reference architecture (RA) doc, and using GDS-enabled Fio Bandwidth testing, “the total system read throughput scales to deliver[y] of 94GB/sec with two ESS 3000 units and eight DGX A100 systems,” and “the total system write throughput scales to 62GB/sec with two ESS units and eight DGX A100 systems.”

The ESS 3200 is much faster than the ESS 3000, and that edition of the RA did not use GPU-Direct Storage, only using the Storage Fabric in keeping with the NVIDIA guidelines.

DGX POD network views

There are two views of the network in a DGX POD: the storage fabric view and the compute fabric view.

The storage fabric network is 2x HDR/200GbitE NICs connected through the CPU, while the compute fabric is 8x HDR NICs closely connected to the GPU. The storage fabric in the IBM ESS 3200 reference architecture has a theoretical maximum of 50GB/sec (2x HDR) to a single DGX and actually delivered 43GB/sec, 86 per cent of the theoretical maximum.

The compute fabric on a single DGX has a theoretical maximum of 200GB/sec (8x HDR). With GPU-Direct Storage, a pair of ESS 3200s demonstrated 191GB/sec, or 95.5 per cent of the theoretical maximum, much faster than the ESS 3000’s 94GB/sec.
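
The fabric figures above follow from simple line-rate arithmetic, which the short Python sketch below reproduces. It assumes each HDR port runs at 200Gbit/sec, as the article states, and ignores protocol overhead. The function and variable names are illustrative and do not come from IBM or Nvidia material.

```python
# Illustrative arithmetic only; names are hypothetical, figures are from the article.
HDR_GBIT_PER_SEC = 200  # one HDR InfiniBand port


def theoretical_gbytes_per_sec(ports, gbit_per_port=HDR_GBIT_PER_SEC):
    """Aggregate line rate in GB/sec (8 bits per byte, no protocol overhead)."""
    return ports * gbit_per_port / 8


def efficiency_pct(measured_gbytes, theoretical_gbytes):
    """Measured throughput as a percentage of the theoretical maximum."""
    return 100 * measured_gbytes / theoretical_gbytes


storage_max = theoretical_gbytes_per_sec(2)  # storage fabric: 2x HDR
compute_max = theoretical_gbytes_per_sec(8)  # compute fabric: 8x HDR

storage_eff = efficiency_pct(43, storage_max)   # 43GB/sec delivered
compute_eff = efficiency_pct(191, compute_max)  # 191GB/sec with GPU-Direct Storage

print(f"storage fabric: {storage_max:.0f} GB/sec max, {storage_eff:.1f}% achieved")
print(f"compute fabric: {compute_max:.0f} GB/sec max, {compute_eff:.1f}% achieved")
```

Run as written, this gives 50GB/sec and 86 per cent for the storage fabric, and 200GB/sec and 95.5 per cent for the compute fabric, matching the numbers quoted above.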

Bootnote 1. Nvidia GPU-Direct release notes for May 2021 (Open Beta Release v0.95.1) indicate support has been added for Excelero’s NVMesh devices and ScaleFlux computational storage. Support has also been added for Mellanox OpenFabrics Enterprise Distribution for Linux (MLNX_OFED), which can use distributed file systems from WekaIO (WekaFS v3.8.0), DDN (Exascaler v5.2) and VAST Data (VAST v3.4). Other supported block access and filesystem access systems are ScaleFlux CSD, Excelero NVMesh and PavilionData.