Analysis: Nvidia has updated its SuperPOD GPU server for AI by adding BlueField DPU support to accelerate and secure access to data. DDN is the first Nvidia-certified SuperPOD-BlueField storage supplier. The road is now open to a BlueField fabric future that interconnects x86/VMware servers, Nvidia GPU servers and shared storage arrays in a composable pool of data centre resources.

Nvidia announced general availability of the BlueField-2 DPU (Data Processing Unit) this week and heralded its more powerful successor, BlueField-3, which will start sampling in the first quarter of 2022.

Charlie Boyle, Nvidia’s VP and GM for DGX systems, issued a statement: “The new DGX SuperPOD, which combines multiple DGX systems, provides a turnkey AI data centre that can be securely shared across entire teams of researchers and developers.”

A SuperPOD is a rack-based AI supercomputer system with 20 to 140 DGX A100 GPU systems and HDR (200Gbit/s) InfiniBand network links. An Nvidia image shows an 18-rack SuperPOD system.

DDN has integrated its EXAScaler parallel filesystem array with BlueField-2. NetApp and WekaIO are also supporting BlueField.

The secure sharing that Nvidia’s Boyle refers to is supplied courtesy of SuperPOD having a pair of BlueField-2 DPUs integrated with each DGX A100. This means that multiple clients (tenants) can share access to a SuperPOD system, each with secure data access. It also means that stored data is shipped to and from the DGX A100s across BlueField-controlled links.

The SuperPOD system is managed by Nvidia Base Command software, which provisions and schedules workloads and monitors system health, utilisation and performance. Workloads can use one, many or all of the A100s in the SuperPOD, facilitating what Tony Paikeday, Nvidia senior director for product marketing, calls “cloud-native supercomputing.”

“IT administrators can use the offload, accelerate and isolate capabilities of NVIDIA BlueField DPUs to implement secure multi-tenancy for shared AI infrastructure without impacting the AI performance of the DGX SuperPOD.”

BlueField and x86 servers

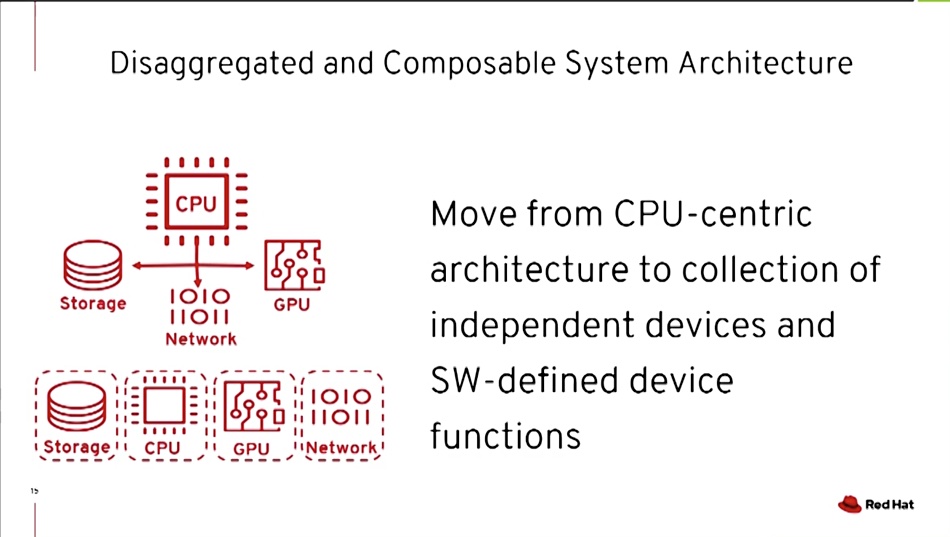

Last September, VMware signalled its intention to support BlueField via the company’s Project Monterey. This entails vSphere handling networking and storage IO functions on the Arm-based BlueField DPU, while applications run in virtual machines on the host x86 servers. At the time, Paul Perez, Dell Technologies CTO, said: “We believe the enterprise of the future will comprise a disaggregated and composable environment.”

Red Hat is also supporting BlueField with its Red Hat Enterprise Linux and OpenShift products. A blog by three Red Hat staffers states: “In this post we’ll look at using Nvidia BlueField-2 data processing units (DPU) with Red Hat Enterprise Linux (RHEL) and Red Hat OpenShift to boost performance and reduce CPU load on commodity x64 servers.”

Red Hat Enterprise Linux 8 is installed both on the BlueField card, along with Open vSwitch software, and on the host server. The Red Hat authors write: “The BlueField-2 does the heavy lifting in the following areas: Geneve encapsulation, IPsec encapsulation/decapsulation and encryption/decryption, routing, and network address translation. The x64 host and container see only simple unencapsulated, unencrypted packets.”

They say: “A single BlueField-2 card can reduce CPU utilisation in an [x86] server by 3x, while maintaining the same network throughput.”

In a video of his presentation at Nvidia’s GTC’21 virtual conference, Red Hat CTO Chris Wright discusses composable compute, saying this includes “resource virtualisation and disaggregation”.

Wright says Red Hat sees a trend towards a composable compute model in which compute capabilities are software-defined.

A BlueField composability fabric

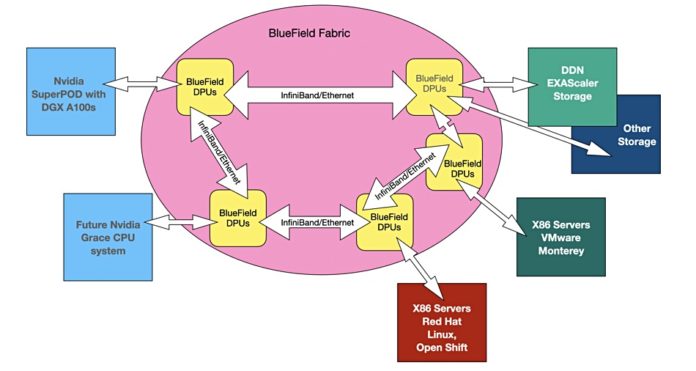

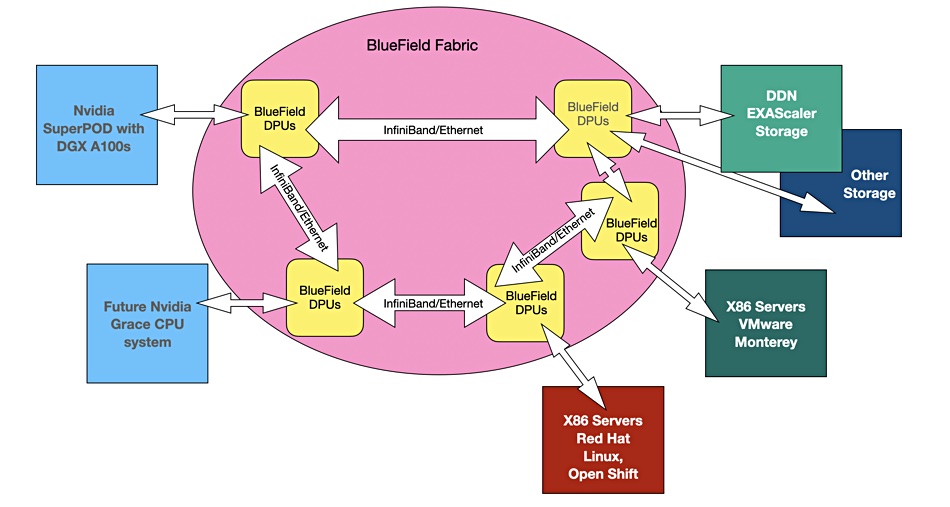

Blocks & Files envisages a BlueField-managed fabric interconnecting x86 Red Hat and VMware servers, Nvidia’s SuperPOD, other DGX GPU servers, the company’s impending Arm-powered Grace general purpose processing system, and storage – certainly from DDN and probably from NetApp and WekaIO.

Base Command will provision or compose SuperPOD workloads for tenants at, we envisage, A100 granularity, with EXAScaler capacity being provisioned for tenants as well. Red Hat and VMware will also provision/compose workloads for their x86 application servers.

We asked Nvidia’s Kevin Dierling, SVP for Networking, about Nvidia and composability. He said: “It’s certainly real.” His session at GTC’21 with Chris Wright “specifically talks about the composable data center.”

Blocks & Files sees three composable data centre technologies in development: Fungible’s DataCenter, Liqid’s CDI orchestration software, and Nvidia’s BlueField.

At this early stage of the composable data centre game, two suppliers, Fungible and Nvidia, base their offerings on proprietary hardware, while Liqid takes a software approach using the PCIe bus. Does composability require specialised hardware? That will become an important question.