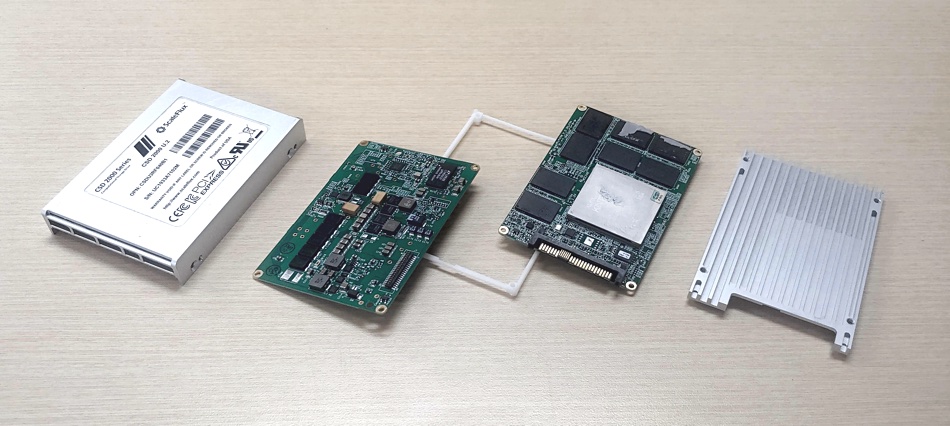

Computational storage – adding compute capability to a storage drive – is becoming a thing. NGD, Eideticon, ScaleFlux have added compute cards to SSDs to enable compute processes to run on stored data, without moving that data into the host server memory and using its CPU to process the data. Video transcoding is said to be a good use case for computational storage drives (CSDs).

But how does the CSD interact with a host server. Blocks & Files interviewed ScaleFlux’s Chief Scientist, Tong Zhang, to find out.

Blocks & Files: Let’s suppose there is a video transcoding or database record processing application. Normally a new video file is written to a storage device in which new records appear in the database. A server application is aware of this and starts up the processing of the new data in the server. When that processing is finished the transformed data is written back to storage. With computational storage the overall process is different. New data is written to storage. The server app now has to tell the drive processor to process the data. How does it do this? How does it tell the drive to process a piece of data?

Tong Zhang: Yes, in order to off-load certain computational tasks into computational storage drives, host applications must be able to adequately communicate with computational storage drives. This demands the standardised programming model and interface protocol, which are being actively developed by the industry (e.g., NVMe TP 4091, and SNIA Computational Storage working group).

Blocks & Files: The drive’s main activity is servicing drive IO, not processing data. How long does it take for the drive CPU to process the data when the drive is also servicing IO requests? Is that length of time predictable?

Tong Zhang: Computational storage drives internally dedicate a number of embedded CPUs (e.g., ARM cores) for serving drive IO, and dedicate a certain number of embedded CPUs and domain-specific hardware engines (e.g., compression, security, searching, AI/ML, multimedia) for serving computational tasks. The CSD controller should be designed to match the performance of the domain-specific hardware engines to the storage IO performance.

As with any other form of computation off-loading (e.g., GPU, TPU, FPGA), developers must accurately estimate the latency/throughput performance metrics when off-loading computational tasks into computational storage drives.

Blocks & Files: When the on-drive processing is complete how does the drive tell the server application that the data has been processed and is now ready for whatever happens next? What is the software framework that enables a host server application to interact with a computational storage device? Is it an open and standard framework?

Tong Zhang: Currently there is no open and standard framework, and the industry is very actively working on it (e.g., NVMe.org, and SNIA Computational Storage working group).

Blocks & Files: Let’s look at the time taken for the processing. Normally we would have this sequence: Server app gets new data written to storage. It decides to process the data. The data is read into memory. It is processed. The data is written back to storage. Let’s say this takes time T-1. With computational storage the sequence is different: Server app gets new data written to storage. It decides to process the data. It tells the drive to process the data. The drive processes the data. It tells the server app when the processing is complete. Let’s say this takes time T-2. Is T-2 greater or smaller than T-1? Is the relationship between T-2 and T-1 constant over time as storage drive IO rises and falls? If it varies then surely computational storage is not suited to critical processing tasks? Does processing data on a drive use less power than processing the same data in the server itself?

Tong Zhang: The relationship between T-1 and T-2 depends on the specific computational task and the available hardware resource at host and inside computational storage drives.

For example, if computational storage drives internally have a domain-specific hardware engine that can very effectively process the task (e.g., compression, security, searching, AI/ML, multimedia), then T2 can be (much) smaller than T-1. However, if computational storage drives have to solely rely on their internal ARM cores to process the task and meanwhile the host has enough idle CPU cycles, then T-2 can be greater than T-1.

Inside computational storage drives, IO and computation tasks are served by different hardware resources. Hence they do not directly interfere with each other. Regarding power consumption, computational storage drives in general consume less power. If current computational tasks can be well served by domain-specific hardware engines inside computational storage drives, of course we have shorter latency and meanwhile lower power consumption.

If current computational tasks are solely served by ARM cores inside computational storage drives, the power consumption can still be less because we largely reduce data-movement-induced power consumption and the low power nature of ARM cores.

Blocks & Files: I get it that 10 or 20 drives could overall process more data faster than having each of these drive’s data be processed by the server app and CPU – but how often is this parallel processing need going to happen?

Tong Zhang: Data-intensive applications (e.g., AI/ML, data analytics, data science, business intelligence) typically demand highly parallel processing over a huge amount of data, which naturally benefit from the parallel processing inside all the computational storage drives.

Comment

For widespread use, CSDs will require a standard way to communicate with a host server so that it can request them to do work and be informed when the work is finished. Dedicated processing hardware on the CSD, separate from the normal drive IO-handling HW, will be needed for this to ensure predictable time is taken for the processing.

Newer analytics-style workloads that require relatively low-level processing of a lot of stored data can benefit from parallel processing by CSDs instead of the host server CPUs doing the job. The development of standards by NVMe.org, and the SNIA’s Computational Storage working group will be the gateway through which CSD adoption has to pass for the technology to become mainstream.

We also think that CSDs will need a standard interface to talk to GPUs. No doubt the standards bodies are working on that too.