Interview: A set of containers that would need 20 servers to run in a VM-based environment would need fewer than four servers to run in a bare-metal Diamanti environment. Stats like this make Blocks & Files want to know how and why Diamanti’s bare-metal hyperconverged systems can run Kubernetes workloads faster than either single servers or HCI systems, so we asked.

Brian Waldon, Diamanti’s VP of product, provided the answers to our questions.

Blocks & Files: How do containerized workloads run more efficiently in a Diamanti system than in a commodity server+storage system?

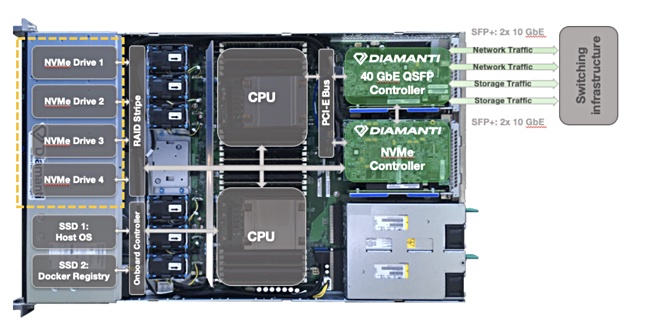

Brian Waldon: Diamanti’s Ultima Network and Storage offload cards and bare-metal hyperconverged platform are the greatest drivers of the increased workload efficiency that Diamanti provides.

Diamanti offloads network and storage traffic to dedicated embedded systems, freeing up the host compute resources to power workloads and improve performance. This minimizes iowait, giving customers a 10x to 30x performance improvement on I/O-intensive applications while getting consistent sub-100 microsecond latency and 1 million IOPS per node.

The use of offload cards in the data center is not entirely new – the AWS Nitro architecture has helped to deliver better consolidation for EC2 instances and produce more cost efficiency for AWS. However, by combining offload card technology with purpose-built Kubernetes CNI and CSI drivers and optimized bare-metal hardware (with NVMe and SR-IOV), Diamanti has an integrated software and hardware solution that removes extraneous translation layers that slow things down.

Blocks & Files: How much more efficient is a Diamanti cluster than a Dell EMC VxRail system on the one hand and a Nutanix AHV system on the other?

Brian Waldon: Generally, we are compared to virtualized infrastructure, and again here our offload technology provides far greater efficiencies. We have a holistic platform that is designed for containerized workloads. While we happen to use x86 servers behind the scenes, we have eliminated a lot of the layers between the x86 server and the actual workload, which is another key component of the efficiency of our platform.

Given that we have a hyperconverged platform, Diamanti can use the bare minimum amount of software to achieve the same goals as competitive solutions and really drive efficiency. Our platform can drive up to 95 per cent server utilisation; alternatively, virtualised infrastructure can use up to 30 per cent of server resources just to perform infrastructural functions such as storage and networking using expensive CPU resources.

One of our customers was running an Elasticsearch application on 16 standard x86 servers supporting 2.5 billion events per day. They accomplished the same on just 2 nodes [servers] of the Diamanti platform while also reducing index latency by 80 per cent.

Another customer saw similar improvements with their MongoDB application – reducing their hardware requirements from a 15-node cluster to just 3 nodes of Diamanti while still getting 3x greater IOPS.

Separately, with Redis Enterprise, Diamanti supported average throughput of more than 100,000 ops/sec at sub-millisecond latency with only four shards running on a three-node cluster with two serving nodes.

Blocks & Files: How does the Diamanti system compare to industry-standard servers plus a storage array with a CSI plugin?

Brian Waldon: The key differences are the innovations behind the storage offload cards and the full-stack approach from CSI down to the hardware.

Because Diamanti has a holistic hyperconverged system we can provide guaranteed Quality of Service (QoS), access and throughput, from the perspective of storage, and honour that from end to end. We have a built-in storage platform to drive local access to disk while still providing enterprise-grade storage features such as mirroring, snapshots, backup/restore, and asynchronous replication.

Diamanti also minimises staff set up and support time by providing a turnkey Kubernetes cluster with everything built-in. We give customers an appliance that automatically clusters itself and provides a Kubernetes interface.

Comment

Diamanti claims a 3-node cluster can achieve more than 2.4 million IOPS and have sub-100μs cross-cluster latency. Basically, it’s a more-bang-for-your-buck message. The company says it knocks performance spots off commodity servers running Linux, virtualised servers and hyperconverged systems running virtual machine hypervisors.

Its software uses up less of a server’s resources and its offload cards get data to and from containers faster. Result: you run more containers and run them faster.
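The consolidation claims quoted in the interview reduce to simple arithmetic. The following is a minimal Python sketch that turns the two customer examples (Elasticsearch, 16 servers down to 2; MongoDB, 15 nodes down to 3) into ratios. The function and variable names are ours, chosen for illustration, and the server counts are Diamanti's claims as quoted, not independently verified figures.

```python
def consolidation_ratio(servers_before: int, servers_after: int) -> float:
    """Return how many original servers each remaining server replaces."""
    if servers_before <= 0 or servers_after <= 0:
        raise ValueError("server counts must be positive")
    return servers_before / servers_after


# Server counts as quoted in the interview (vendor claims, not measurements).
CLAIMS = {
    "Elasticsearch": (16, 2),
    "MongoDB": (15, 3),
}


def summarize(claims: dict) -> dict:
    """Map each workload name to its consolidation ratio."""
    return {
        name: consolidation_ratio(before, after)
        for name, (before, after) in claims.items()
    }


if __name__ == "__main__":
    for name, ratio in summarize(CLAIMS).items():
        print(f"{name}: {ratio:.1f}x fewer servers")
```

On these numbers the Elasticsearch case works out to an 8x reduction and the MongoDB case to 5x. The headline container claim (20 servers down to fewer than four) implies a ratio of more than 5x by the same calculation.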

You can check out a Diamanti white paper about running containers on its bare metal to find out more. A second Diamanti white paper looks at Redis Enterprise testing.