Snowflake Computing has raised $450m in its second funding round of 2018 to grow its cloud data warehouse business. Total funding now stands at $923m.

I interviewed CEO Bob Muglia about the funding round for a news story in our sister title The Register, where you can read what he says Snowflake will do with all the money.

Here we dig a little deeper into why the VCs are so keen to stump up so much cash.

Snowflake is competing against two whales: AWS with Redshift and Microsoft Azure with SQL Data Warehouse. Both are cloud-generation data warehouses, not on-premises product pigs with cloud lipstick rubbed on their faces.

AWS and Azure have huge resources at their disposal. Snowflake has to match and beat their features, out-perform them with better code, and out-market and out-price them, all while selling cloud-style, with on-demand or subscription pricing rather than having customers buy perpetual licenses.

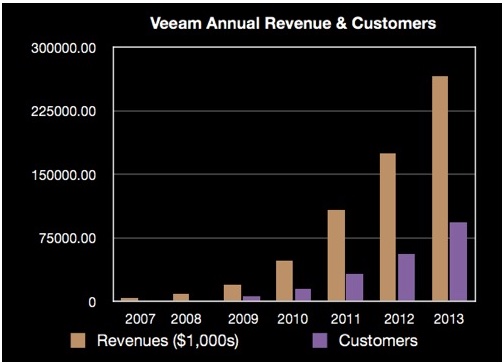

This does not look like a promising battleground for any startup but, so far, the VCs see Snowflake doing well; it tripled its customer count in 12 months to pass the 1,000 mark, for example. They now value the company at $3.9bn, but it needs to get bigger and scale further.

In other words, the company needs huge amounts of money and could well have a prodigious cash burn rate. That is sustainable as long as the business keeps growing fast enough for critical mass and break-even to be forecast, with, hopefully, a massive exit coming the VCs’ way.

Snowflake wants to recruit more and more customers, blizzards of them, to its multi-cloud data warehouse, ushering in a cloud data warehouse ice age with a Snowflake ice cap dominating the market and burying its competition.

And why are the VCs bankrolling such grand designs?

Blitzscaling

We think the answer could be blitzscaling. Jedidiah Yueh’s book on Silicon Valley startups, “Disrupt or Die”, looks at blitzscaling in its Execution chapter (page 187).

Yueh founded and ran Avamar, which EMC bought for $165m in 2006, and also founded database virtualizer Delphix, where he is executive chairman.

He cites Reid Hoffman, a Greylock partner and the chairman and founder of LinkedIn, who defines blitzscaling as “the science and art of rapidly building out a company to serve a large and usually global market, with the goal of becoming the first mover at scale.”

In a section called Pay to Grow (page 228), Yueh says a VC is driven by the prospect of a larger payoff when a funded company is acquired or goes public. So VCs have a force-feeding tendency: blitzfeeding.

Yueh suggests VC-funded blitzscaling can lead to undisciplined financial management and poor capital efficiency.

He gives an example of two exit scenarios for a startup that exits at $1bn. In scenario A the VC funds the company to the tune of $20m and gets a $200m payoff, 20 per cent of the $1bn. Not bad.

In scenario B the VC invests $100m to build the $1bn exit, “netting the VCs 51 per cent”, which works out at $510m. Yueh says VCs want scenario B. The more money they put in, the larger the percentage of the company they own and the larger their payoff.
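The arithmetic behind Yueh’s two scenarios can be sketched in a few lines of Python. This is a deliberately simplified, hypothetical model: it assumes the VC’s payoff is just its ownership stake multiplied by the $1bn exit value, and it ignores liquidation preferences, later dilution, fees and timing. The `Scenario` class and its methods are our own illustration, not anything taken from Yueh’s book.

```python
from dataclasses import dataclass

# Hypothetical model: payoff = ownership stake x exit value.
# Ignores liquidation preferences, dilution, fees and time value of money.
EXIT_VALUE_M = 1_000  # $1bn exit, expressed in millions of dollars


@dataclass
class Scenario:
    name: str
    invested_m: float  # VC money put in, in $m
    stake: float       # VC ownership fraction at exit (0.0 to 1.0)

    def payoff_m(self, exit_value_m: float = EXIT_VALUE_M) -> float:
        """VC proceeds in $m: ownership stake multiplied by exit value."""
        return self.stake * exit_value_m

    def multiple(self, exit_value_m: float = EXIT_VALUE_M) -> float:
        """Return on invested capital: payoff divided by money invested."""
        return self.payoff_m(exit_value_m) / self.invested_m


# The two scenarios as described: $20m for 20 per cent, $100m for 51 per cent.
scenarios = [Scenario("A", 20, 0.20), Scenario("B", 100, 0.51)]

if __name__ == "__main__":
    for s in scenarios:
        print(f"Scenario {s.name}: invest ${s.invested_m:.0f}m, "
              f"payoff ${s.payoff_m():.0f}m, multiple {s.multiple():.1f}x")
```

On these assumptions scenario A returns 10 times the money invested and scenario B only about 5.1 times, yet B’s absolute payoff of roughly $510m is more than twice A’s $200m: a lower multiple, but a far bigger cheque on a much larger sum put to work.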

He says: “The highest returns for VCs come from the early rounds (Series Seed, A and B) but the safest places to put large amounts to work are big, later rounds, especially when the VCs already have clear view on how well a company is performing.”

Which brings us back to Snowflake and its enormous 2018 funding rounds.