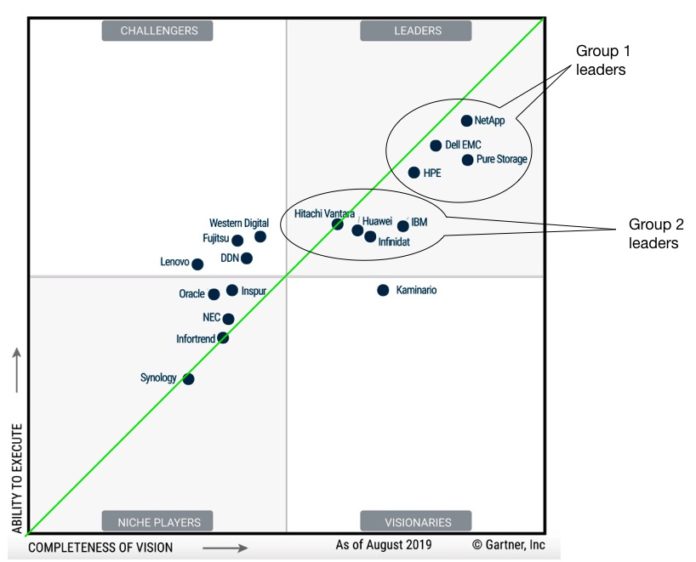

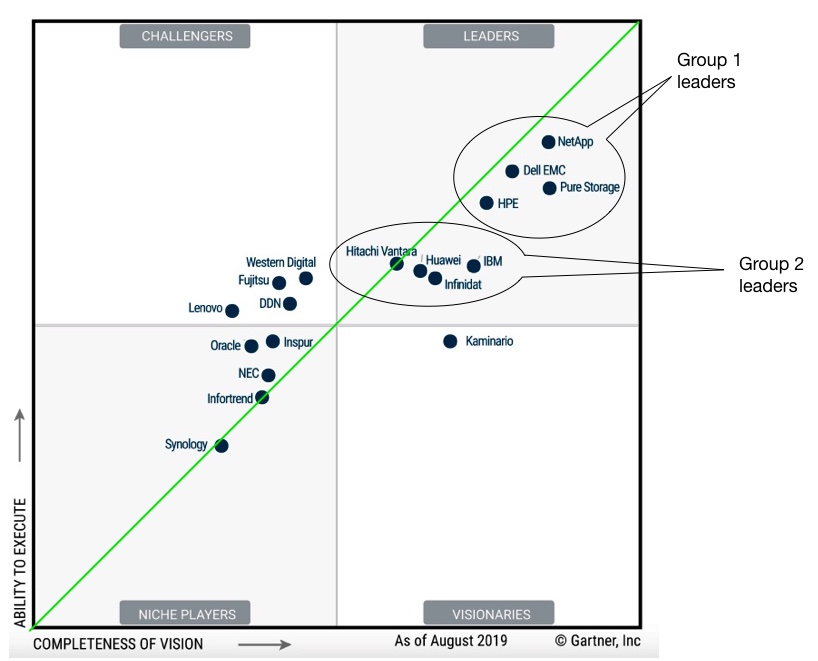

Western Digital is abandoning its storage systems business and is selling the IntelliFlash array unit to DDN. It has also put its ActiveScale archival storage array business up for sale.

This is an abrupt and unexpected about-turn for Western Digital, which acquired IntelliFlash when it bought Tegile in August 2017 for an undisclosed sum. As recently as July this year, WD extended IntelliFlash capabilities with entry-level NVMe models, a higher-capacity SAS array, live dataset migration and an S3 connector.

The sale to DDN suggests to Blocks & Files that the Tegile acquisition was a WD mistake. This excursion into enterprise storage arrays and archive systems reflects poorly on Western Digital's leadership.

The conclusion many will draw is that selling data centre storage systems into the highly competitive enterprise market was a step too far for WD. The company gets more than 80 per cent of its revenues from selling disk drives and SSDs to OEMs and consumers, where it faces limited competition.

A WD statement announced “Western Digital’s strategic intention to exit Storage Systems, which consists of the IntelliFlash and ActiveScale businesses. The company is exploring strategic options for ActiveScale. These actions will allow Western Digital to optimize its Data Center Systems portfolio around its core Storage Platforms business, which includes the OpenFlex platform and fabric-attached storage technologies.”

Mike Cordano, WD COO, said in a prepared statement: “Scaling and accelerating growth opportunities for IntelliFlash and ActiveScale will require additional management focus and investment to ensure long-term success.”

Alex Bouzari, CEO and co-founder of DDN, said in a canned quote: “We are delighted to add Western Digital’s high-performance enterprise hybrid, all flash and NVMe solutions to DDN’s… data management at scale product portfolio.”

IntelliFlash inside DDN

The joint DDN-WD announcement said IntelliFlash customers will benefit from DDN’s focus on storage and data management challenges, its deep expertise in service and support, and its rich, broad technology portfolio. DDN has a set of capabilities that WD lacks, as well as a willingness to invest.

IntelliFlash staff will join DDN, which now has more than 10,000 customers and 500 partners worldwide. WD and DDN will work to deliver a seamless transition for customers and partners, with ongoing product availability and support continuity. DDN is to invest in an accelerated roadmap for the IntelliFlash line.

The deal includes a mutual global sourcing agreement under which Western Digital will buy IntelliFlash products from DDN and become a preferred HDD and SSD supplier to DDN.

DDN bought the crashed Tintri business for $60m in September 2018 and Nexenta for an undisclosed sum in May this year. DDN now has three newly acquired product lines to integrate and position in its product range and marketing messages: Tintri, Nexenta and Tegile. This is already starting to happen, with Nexenta file capabilities added last month to Tintri systems.

Broadly speaking, Tintri and IntelliFlash compete. Like Tintri, IntelliFlash is an enterprise array. It has hybrid and all-flash models but lacks Tintri’s capabilities in virtualized server integration. This software capability could be grafted onto the IntelliFlash OS, and we might also expect Tintri and IntelliFlash to evolve towards a common hardware chassis.

The two lines should probably merge – unless DDN can convincingly differentiate them.

And let’s not forget ActiveScale

ActiveScale is an archival storage array, and WD got into the archive vault business when it bought HGST. As well as making disk drives, HGST sold the ActiveArchive archive system. The basis for this was HGST’s acquisition of Amplidata in 2015.

ActiveScale arrived in late 2016 and became the lead archival product. Now it is unwanted. It is not an acquisition target for DDN, which has its own WOS object storage line and Nexenta object storage software. DDN does not need a third object storage technology.

The DDN-WD transaction is expected to close later this year, subject to closing conditions. WD’s storage systems business exit is expected to generate an annual non-GAAP EPS benefit for WD of at least $0.20, starting in the fiscal 2020 third quarter ending April 3, 2020. WD will incur as-yet-unquantified restructuring and other charges.